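Jetson Nano B01 Notes 7: MediaPipe Face Detection and Gesture Recognition

Continuing my Jetson Nano learning journey, today I install and use MediaPipe, an open-source framework for building multimedia machine-learning applications. It runs cross-platform on mobile devices, workstations and servers, and supports mobile GPU acceleration.

The introduction and the programs are adapted from the official material. This is simply my own study log, with notes on the steps I actually performed.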

Contents

MediaPipe introduction and installation:

Update the APT package lists:

Install pip:

Upgrade pip:

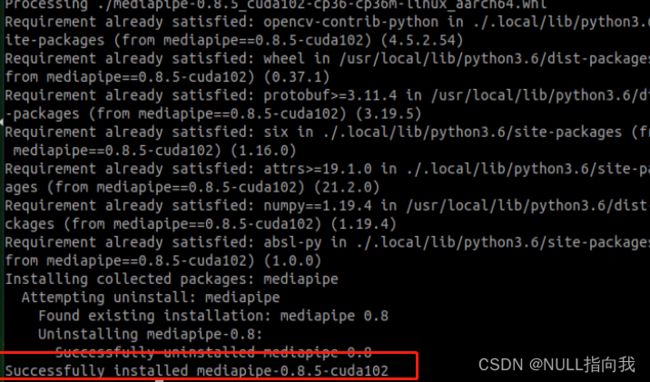

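Transfer files:

MediaPipe workflow:

MediaPipe face detection:

Install the dependency package:

Write the Python program:

Test results:

MediaPipe gesture recognition:

Write the Python program:

Test results: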

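MediaPipe introduction and installation:

Advantages of MediaPipe:
1) It supports many platforms and languages, such as iOS, Android, C++, Python, JavaScript and Coral.
2) It is fast; the models can generally run in real time.
3) Models and code can be reused to a high degree.

Disadvantages of MediaPipe:
1) On mobile devices MediaPipe is somewhat heavy, requiring at least 10 MB of space.
2) It depends heavily on TensorFlow; switching to another machine-learning framework would require changing a lot of code.
3) It uses static graphs, which help efficiency but make errors harder to track down.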

Update the APT package lists:

```shell
sudo apt update
```

Install pip:

```shell
sudo apt install python3-pip
```

Upgrade pip:

File download: https://download.csdn.net/download/qq_64257614/88322416?spm=1001.2014.3001.5503

On the Jetson desktop, drag the downloaded file into the home directory in the file manager, then install it from a terminal.
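Once the package is installed, a quick way to confirm that Python can actually import everything the programs below use is a small check script. This is a minimal sketch of my own, not part of the downloaded file; the helper name `installed_version` is hypothetical, and it assumes each package exposes a `__version__` attribute (it reports "unknown" when one does not).

```python
import importlib


def installed_version(module_name):
    """Return module_name.__version__, "unknown" if it has none, or None if the import fails."""
    try:
        module = importlib.import_module(module_name)
    except ImportError:
        return None
    return getattr(module, "__version__", "unknown")


if __name__ == "__main__":
    # Packages used by the face detection and gesture recognition programs
    for name in ("cv2", "numpy", "mediapipe"):
        version = installed_version(name)
        print(name, version if version is not None else "NOT INSTALLED")
```

Run it with python3; any line that shows NOT INSTALLED points to the package that still needs to be installed.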

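MediaPipe workflow:

The figure below shows how MediaPipe is used. The parts drawn with solid lines are code you write yourself; the parts drawn with dashed lines need no code. MediaPipe already bundles the AI models and processing pipelines, so you can use it to quickly assemble the pipeline needed for a given feature.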
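In the programs below, most of the code I write myself (the solid-line part) prepares each camera frame before handing it to MediaPipe: OpenCV returns frames in BGR channel order, MediaPipe's solutions expect RGB, and the frame is marked read-only before `process()` is called. A minimal sketch of that preprocessing step using only NumPy, assuming frames are `H x W x 3` uint8 arrays as returned by `cv2.VideoCapture.read()`. The helper name `to_mediapipe_input` is hypothetical, and reversing the last axis stands in for `cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)`.

```python
import numpy as np


def to_mediapipe_input(frame_bgr):
    """Convert an H x W x 3 BGR frame to a contiguous, read-only RGB array."""
    if frame_bgr.ndim != 3 or frame_bgr.shape[2] != 3:
        raise ValueError("expected an H x W x 3 image")
    # Reverse the channel axis (B, G, R) -> (R, G, B), same result as COLOR_BGR2RGB
    frame_rgb = np.ascontiguousarray(frame_bgr[:, :, ::-1])
    # Mark the copy read-only so it can be passed by reference, as the MediaPipe examples do
    frame_rgb.flags.writeable = False
    return frame_rgb


if __name__ == "__main__":
    pixel = np.array([[[255, 0, 0]]], dtype=np.uint8)  # one pure-blue pixel in BGR order
    rgb = to_mediapipe_input(pixel)
    print(rgb[0, 0], rgb.flags.writeable)
```

Because the channel reversal produces a copy, the original BGR frame stays writeable and can still be drawn on afterwards.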

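MediaPipe face detection:

Install the dependency package:

```shell
pip3 install dataclasses
```

(`dataclasses` is part of the standard library from Python 3.7 onward; the pip package is a backport for Python 3.6, the default Python 3 on the Jetson Nano's Ubuntu 18.04 image.)

Write the Python program: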

```python
import time

import cv2
import mediapipe as mp

# Timestamps of the previous and current frame, and the smoothed FPS value
last_time = 0
current_time = 0
fps = 0.0


def show_fps(img):
    """Draw a smoothed FPS counter in the top-left corner of img and return it."""
    global last_time, current_time, fps
    last_time = current_time
    current_time = time.time()
    new_fps = 1.0 / (current_time - last_time)
    if fps == 0.0:
        # Report 0 until a previous timestamp exists, then seed with the first real sample
        fps = new_fps if last_time != 0 else 0.0
    else:
        # Exponential moving average: 20% new sample, 80% history
        fps = new_fps * 0.2 + fps * 0.8
    fps_text = 'FPS: {:.2f}'.format(fps)
    # Thick dark text first as an outline, then thin light text on top
    cv2.putText(img, fps_text, (11, 20), cv2.FONT_HERSHEY_PLAIN, 1.0, (32, 32, 32), 4, cv2.LINE_AA)
    cv2.putText(img, fps_text, (10, 20), cv2.FONT_HERSHEY_PLAIN, 1.0, (240, 240, 240), 1, cv2.LINE_AA)
    return img


mp_face_detection = mp.solutions.face_detection
mp_drawing = mp.solutions.drawing_utils

# For webcam input:
cap = cv2.VideoCapture(0)
with mp_face_detection.FaceDetection(
        min_detection_confidence=0.5) as face_detection:
    while cap.isOpened():
        success, image = cap.read()
        if not success:
            print("Ignoring empty camera frame.")
            # If loading a video, use 'break' instead of 'continue'.
            continue

        # OpenCV delivers BGR frames; MediaPipe expects RGB.
        image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
        # To improve performance, optionally mark the image as not writeable to
        # pass by reference.
        image.flags.writeable = False
        results = face_detection.process(image)

        # Draw the face detection annotations on the image.
        image.flags.writeable = True
        image = cv2.cvtColor(image, cv2.COLOR_RGB2BGR)
        if results.detections:
            for detection in results.detections:
                mp_drawing.draw_detection(image, detection)

        # Flip the image horizontally for a selfie-view display.
        image = show_fps(cv2.flip(image, 1))
        cv2.imshow('MediaPipe Face Detection', image)
        # Quit when ESC (key code 27) is pressed
        if cv2.waitKey(5) & 0xFF == 27:
            break

cap.release()
cv2.destroyAllWindows()
```

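Finally, transfer the Python file to the Jetson and run it from a terminal with python3. If the file is inside a folder, first use cd to change into that directory.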
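`show_fps` smooths the frame rate with an exponential moving average: each new sample `1 / dt` is weighted 0.2 and the previous value 0.8, so the displayed number does not jump around from frame to frame. The same logic can be pulled out into a small class that accepts timestamps, which makes it testable without a camera. This is a sketch under my own assumptions (the class name `FpsMeter` and the `alpha` parameter are hypothetical); unlike `show_fps` it uses `None` to mark "no previous frame" and skips non-increasing timestamps instead of dividing by zero.

```python
import time


class FpsMeter:
    """Exponentially smoothed FPS, following the same rule as show_fps()."""

    def __init__(self, alpha=0.2):
        self.alpha = alpha        # weight of the newest sample
        self.last_time = None     # timestamp of the previous frame, None before the first one
        self.fps = 0.0

    def update(self, now=None):
        """Register a frame at time `now` (seconds) and return the smoothed FPS."""
        now = time.time() if now is None else now
        if self.last_time is not None and now > self.last_time:
            new_fps = 1.0 / (now - self.last_time)
            if self.fps == 0.0:
                # The first real sample seeds the average
                self.fps = new_fps
            else:
                self.fps = self.alpha * new_fps + (1.0 - self.alpha) * self.fps
        self.last_time = now
        return self.fps


if __name__ == "__main__":
    meter = FpsMeter()
    for t in (0.0, 0.1, 0.2, 0.25):
        print(round(meter.update(t), 2))
```

In a capture loop, `meter.update()` would be called once per frame and its return value formatted with `'FPS: {:.2f}'`, just as `show_fps` does.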

Test results:

MediaPipe face detection
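Besides drawing with `mp_drawing.draw_detection`, each entry in `results.detections` carries its box in `detection.location_data.relative_bounding_box` (fields `xmin`, `ymin`, `width`, `height`, normalized to the range 0 to 1) and its confidence in `detection.score`. A sketch for turning that box into pixel coordinates, for example to crop the face; `to_pixel_box` is a hypothetical helper, and the example uses `SimpleNamespace` objects as stand-ins for real MediaPipe results.

```python
from types import SimpleNamespace


def to_pixel_box(relative_box, image_width, image_height):
    """Convert a normalized (xmin, ymin, width, height) box to pixel corners (x1, y1, x2, y2)."""
    x1 = int(relative_box.xmin * image_width)
    y1 = int(relative_box.ymin * image_height)
    x2 = int((relative_box.xmin + relative_box.width) * image_width)
    y2 = int((relative_box.ymin + relative_box.height) * image_height)
    # A face partly outside the frame may give a box past the edge, so clip to the image
    x1, x2 = max(0, x1), min(image_width, x2)
    y1, y2 = max(0, y1), min(image_height, y2)
    return x1, y1, x2, y2


if __name__ == "__main__":
    # Stand-in for detection.location_data.relative_bounding_box
    box = SimpleNamespace(xmin=0.25, ymin=0.5, width=0.5, height=0.25)
    print(to_pixel_box(box, 640, 480))
```

Inside the detection loop this would be called as `to_pixel_box(detection.location_data.relative_bounding_box, image.shape[1], image.shape[0])`, and the result used to slice `image[y1:y2, x1:x2]`.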

MediaPipe gesture recognition:
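The gesture program in this section works directly with landmark numbers such as `landmarks[6]` or `landmarks[20]`. MediaPipe Hands returns 21 landmarks per hand, numbered the same way as the `mp.solutions.hands.HandLandmark` enum (0 is `WRIST`, 8 is `INDEX_FINGER_TIP`, 20 is `PINKY_TIP`). A small lookup table makes the indices used by `hand_angle` easier to read; `FINGERS` and `angle_index_pairs` are hypothetical names of my own, and the pairs only restate which points `hand_angle` subtracts.

```python
# Landmark numbering of MediaPipe Hands (matches mp.solutions.hands.HandLandmark)
WRIST = 0
FINGERS = {
    "thumb": (1, 2, 3, 4),       # CMC, MCP, IP, TIP
    "index": (5, 6, 7, 8),       # MCP, PIP, DIP, TIP
    "middle": (9, 10, 11, 12),   # MCP, PIP, DIP, TIP
    "ring": (13, 14, 15, 16),    # MCP, PIP, DIP, TIP
    "pinky": (17, 18, 19, 20),   # MCP, PIP, DIP, TIP
}


def angle_index_pairs(finger):
    """Return ((a, b), (c, d)) such that hand_angle() compares
    landmarks[a] - landmarks[b] with landmarks[c] - landmarks[d]."""
    joints = FINGERS[finger]
    if finger == "thumb":
        # Thumb: IP minus TIP vs. WRIST minus MCP
        return (joints[2], joints[3]), (WRIST, joints[1])
    # Other fingers: WRIST minus PIP vs. DIP minus TIP
    return (WRIST, joints[1]), (joints[2], joints[3])


if __name__ == "__main__":
    for name in FINGERS:
        print(name, angle_index_pairs(name))
```

For example, `angle_index_pairs("index")` returns `((0, 6), (7, 8))`, which is exactly `landmarks[0] - landmarks[6]` compared with `landmarks[7] - landmarks[8]` in `hand_angle`.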

Write the Python program:

```python
import math
import time

import cv2
import mediapipe as mp
import numpy as np

# Timestamps of the previous and current frame, and the smoothed FPS value
last_time = 0
current_time = 0
fps = 0.0


def show_fps(img):
    """Draw a smoothed FPS counter in the top-left corner of img and return it."""
    global last_time, current_time, fps
    last_time = current_time
    current_time = time.time()
    new_fps = 1.0 / (current_time - last_time)
    if fps == 0.0:
        fps = new_fps if last_time != 0 else 0.0
    else:
        # Exponential moving average: 20% new sample, 80% history
        fps = new_fps * 0.2 + fps * 0.8
    fps_text = 'FPS: {:.2f}'.format(fps)
    cv2.putText(img, fps_text, (11, 20), cv2.FONT_HERSHEY_PLAIN, 1.0, (32, 32, 32), 4, cv2.LINE_AA)
    cv2.putText(img, fps_text, (10, 20), cv2.FONT_HERSHEY_PLAIN, 1.0, (240, 240, 240), 1, cv2.LINE_AA)
    return img


def distance(point_1, point_2):
    """
    Compute the distance between two points.
    :param point_1: point 1
    :param point_2: point 2
    :return: distance between the two points
    """
    return math.sqrt((point_1[0] - point_2[0]) ** 2 + (point_1[1] - point_2[1]) ** 2)


def vector_2d_angle(v1, v2):
    """
    Compute the signed angle between two vectors, from -180 to 180 degrees.
    :param v1: first vector
    :param v2: second vector
    :return: angle in degrees
    """
    norm_v1_v2 = np.linalg.norm(v1) * np.linalg.norm(v2)
    cos = v1.dot(v2) / norm_v1_v2
    # z component of the 2D cross product, written out (np.cross on 2D vectors is deprecated in NumPy 2.x)
    sin = (v1[0] * v2[1] - v1[1] * v2[0]) / norm_v1_v2
    angle = np.degrees(np.arctan2(sin, cos))
    return angle


def get_hand_landmarks(img_size, landmarks):
    """
    Convert landmarks from MediaPipe's normalized output to pixel coordinates.
    :param img_size: (width, height) of the image the pixel coordinates refer to
    :param landmarks: normalized landmarks
    :return: array of (x, y) pixel coordinates, one row per landmark
    """
    w, h = img_size
    landmarks = [(lm.x * w, lm.y * h) for lm in landmarks]
    return np.array(landmarks)


def hand_angle(landmarks):
    """
    Compute the bending angle of each finger (small = straight, large = bent).
    :param landmarks: hand landmarks in pixel coordinates
    :return: list of absolute angles, thumb to pinky
    """
    angle_list = []
    # thumb
    angle_ = vector_2d_angle(landmarks[3] - landmarks[4], landmarks[0] - landmarks[2])
    angle_list.append(angle_)
    # index finger
    angle_ = vector_2d_angle(landmarks[0] - landmarks[6], landmarks[7] - landmarks[8])
    angle_list.append(angle_)
    # middle finger
    angle_ = vector_2d_angle(landmarks[0] - landmarks[10], landmarks[11] - landmarks[12])
    angle_list.append(angle_)
    # ring finger
    angle_ = vector_2d_angle(landmarks[0] - landmarks[14], landmarks[15] - landmarks[16])
    angle_list.append(angle_)
    # pinky
    angle_ = vector_2d_angle(landmarks[0] - landmarks[18], landmarks[19] - landmarks[20])
    angle_list.append(angle_)
    angle_list = [abs(a) for a in angle_list]
    return angle_list


def h_gesture(angle_list):
    """
    Determine the gesture from the 2D finger-bending features.
    :param angle_list: bending angle of each finger
    :return: gesture name string
    """
    thr_angle = 65.        # above this, a finger counts as bent
    thr_angle_thumb = 53.  # bent threshold for the thumb
    thr_angle_s = 49.      # below this, a finger counts as straight
    gesture_str = "none"   # returned when no rule matches
    if (angle_list[0] > thr_angle_thumb) and (angle_list[1] > thr_angle) and (angle_list[2] > thr_angle) and (
            angle_list[3] > thr_angle) and (angle_list[4] > thr_angle):
        gesture_str = "fist"
    elif (angle_list[0] < thr_angle_s) and (angle_list[1] < thr_angle_s) and (angle_list[2] > thr_angle) and (
            angle_list[3] > thr_angle) and (angle_list[4] > thr_angle):
        gesture_str = "gun"
    elif (angle_list[0] < thr_angle_s) and (angle_list[1] > thr_angle) and (angle_list[2] > thr_angle) and (
            angle_list[3] > thr_angle) and (angle_list[4] > thr_angle):
        gesture_str = "hand_heart"
    elif (angle_list[0] > thr_angle_thumb) and (angle_list[1] < thr_angle_s) and (angle_list[2] > thr_angle) and (
            angle_list[3] > thr_angle) and (angle_list[4] > thr_angle):
        gesture_str = "one"
    elif (angle_list[0] > thr_angle_thumb) and (angle_list[1] < thr_angle_s) and (angle_list[2] < thr_angle_s) and (
            angle_list[3] > thr_angle) and (angle_list[4] > thr_angle):
        gesture_str = "two"
    elif (angle_list[0] > thr_angle_thumb) and (angle_list[1] < thr_angle_s) and (angle_list[2] < thr_angle_s) and (
            angle_list[3] < thr_angle_s) and (angle_list[4] > thr_angle):
        gesture_str = "three"
    elif (angle_list[0] > thr_angle_thumb) and (angle_list[1] > thr_angle) and (angle_list[2] < thr_angle_s) and (
            angle_list[3] < thr_angle_s) and (angle_list[4] < thr_angle_s):
        gesture_str = "ok"
    elif (angle_list[0] > thr_angle_thumb) and (angle_list[1] < thr_angle_s) and (angle_list[2] < thr_angle_s) and (
            angle_list[3] < thr_angle_s) and (angle_list[4] < thr_angle_s):
        gesture_str = "four"
    elif (angle_list[0] < thr_angle_s) and (angle_list[1] < thr_angle_s) and (angle_list[2] < thr_angle_s) and (
            angle_list[3] < thr_angle_s) and (angle_list[4] < thr_angle_s):
        gesture_str = "five"
    elif (angle_list[0] < thr_angle_s) and (angle_list[1] > thr_angle) and (angle_list[2] > thr_angle) and (
            angle_list[3] > thr_angle) and (angle_list[4] < thr_angle_s):
        gesture_str = "six"
    return gesture_str


mp_drawing = mp.solutions.drawing_utils
mp_hands = mp.solutions.hands

# For webcam input:
cap = cv2.VideoCapture(0)
with mp_hands.Hands(
        min_detection_confidence=0.5,
        min_tracking_confidence=0.5) as hands:
    while cap.isOpened():
        success, image = cap.read()
        if not success:
            print("Ignoring empty camera frame.")
            # If loading a video, use 'break' instead of 'continue'.
            continue

        # Flip the image horizontally for a later selfie-view display, and convert
        # the BGR image to RGB.
        image = cv2.cvtColor(cv2.flip(image, 1), cv2.COLOR_BGR2RGB)
        # To improve performance, optionally mark the image as not writeable to
        # pass by reference.
        image.flags.writeable = False
        results = hands.process(image)

        # Draw the hand annotations on the image.
        image.flags.writeable = True
        image = cv2.cvtColor(image, cv2.COLOR_RGB2BGR)
        gesture = "none"
        if results.multi_hand_landmarks:
            for hand_landmarks in results.multi_hand_landmarks:
                mp_drawing.draw_landmarks(image, hand_landmarks, mp_hands.HAND_CONNECTIONS)
                # Normalized landmarks -> pixel coordinates, image size given as (width, height)
                landmarks = get_hand_landmarks((image.shape[1], image.shape[0]), hand_landmarks.landmark)
                angle_list = hand_angle(landmarks)
                gesture = h_gesture(angle_list)
                # Stop at the first hand with a recognized gesture
                if gesture != "none":
                    break

        # Already mirrored above, so no second flip before display
        image = show_fps(image)
        cv2.putText(image, gesture, (20, 60), cv2.FONT_HERSHEY_SIMPLEX, 1.5, (255, 0, 0), 4)
        cv2.imshow('MediaPipe Hands', image)
        # Quit when ESC (key code 27) is pressed
        if cv2.waitKey(5) & 0xFF == 27:
            break

cap.release()
cv2.destroyAllWindows()
```
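To see why a small angle means a straight finger, the two vectors compared by `hand_angle` can be checked on made-up coordinates. The sketch below re-implements `vector_2d_angle` standalone (same formula: `atan2` of the 2D cross product and the dot product) and applies it to a hypothetical straight and a hypothetical curled index finger. The coordinates are invented for illustration, not real MediaPipe output, and `index_finger_bend` is a hypothetical helper name.

```python
import numpy as np


def vector_2d_angle(v1, v2):
    """Signed angle in degrees between 2D vectors v1 and v2, in [-180, 180]."""
    v1 = np.asarray(v1, dtype=float)
    v2 = np.asarray(v2, dtype=float)
    norm = np.linalg.norm(v1) * np.linalg.norm(v2)
    cos = v1.dot(v2) / norm
    sin = (v1[0] * v2[1] - v1[1] * v2[0]) / norm
    return float(np.degrees(np.arctan2(sin, cos)))


def index_finger_bend(landmarks):
    """Angle used for the index finger: WRIST minus PIP vs. DIP minus TIP (0 means straight)."""
    lm = np.asarray(landmarks, dtype=float)
    return abs(vector_2d_angle(lm[0] - lm[6], lm[7] - lm[8]))


if __name__ == "__main__":
    # Hypothetical pixel coordinates; only indices 0, 6, 7, 8 matter here (y grows downward)
    straight = np.zeros((21, 2))
    straight[[0, 6, 7, 8]] = [(100, 300), (100, 180), (100, 150), (100, 120)]
    curled = np.zeros((21, 2))
    curled[[0, 6, 7, 8]] = [(100, 300), (100, 180), (130, 190), (130, 220)]
    print(index_finger_bend(straight), index_finger_bend(curled))
```

For the straight finger both vectors point from the fingertip side toward the wrist, so the angle is 0, well under the 49 degree "straight" threshold in `h_gesture`. For the curled finger the tip segment points back the other way, so the angle is far above the 65 degree "bent" threshold.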

Test results:

MediaPipe gesture recognition