ClickHouse: From Beginner to Expert (Part 1)

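Table of Contents

- ClickHouse: From Beginner to Expert
  - Part 1: ClickHouse Basics
    - Getting Started with ClickHouse
      - 1. ClickHouse features
        - Columnar storage
        - DBMS features
        - Diverse engines
        - High write throughput
        - Data partitioning and thread-level parallelism
      - 2. Performance comparison
    - Installing ClickHouse
      - 1. Preparation
      - 2. Single-node installation
      - 3. Installing from tgz
      - 4. Docker installation
    - Data types
      - 1. Integer types
      - 2. Floating-point types
      - 3. Boolean
      - 4. Decimal
      - 5. String types
      - 6. Enum types
      - 7. Date and time types
      - 8. Arrays
      - 9. Nullable
      - 10. Other types
    - Database engines
      - 1. Atomic database engine
      - 2. MySQL database engine
    - Table engines
      - 1. TinyLog
      - 2. Memory
      - 3. MergeTree
        - partition by (optional)
        - primary key (optional)
        - order by (required)
        - Secondary indexes
        - Data TTL
      - 4. ReplacingMergeTree
        - Deduplication mechanism
        - Deduplication scope
        - Example
        - Conclusions
      - 5. SummingMergeTree
        - Example
        - Conclusions
        - Development advice and questions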

ClickHouse: From Beginner to Expert

Part 1: ClickHouse Basics

Getting Started with ClickHouse

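- ClickHouse is an open-source columnar database management system for online analytical processing (OLAP), developed by Yandex in Russia. It is written in C++ and supports real-time SQL queries over very large data sets. ClickHouse handles queries on large data sets very efficiently. Since it was open-sourced in 2016 it has attracted worldwide attention, and at one point it even topped GitHub's popularity rankings.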

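- OLAP: ClickHouse is designed for OLAP-style (analytical, offline) data processing. OLTP covers online transaction processing. ClickHouse instead focuses on computing over and analyzing massive amounts of data, and cares about metrics such as throughput, query speed and compute performance. It is not good at frequent modification of data. ClickHouse is therefore typically used to build the back end of real-time or offline data warehouses.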

1. ClickHouse features

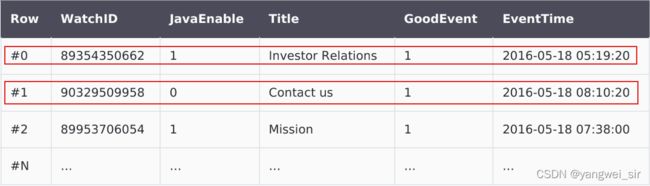

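Columnar storage

- ClickHouse is a true columnar database. Traditional databases store data row by row. MySQL, for example, keeps each complete row in the leaf nodes of its B+ tree.

- The advantage of row storage is that a whole record can be fetched with one disk seek plus a sequential read.

- ClickHouse instead stores data by column. The data of each column is stored separately, with one file per column.

- Advantages of columnar storage:

  - aggregation, counting, summing and similar statistical operations on a column are far faster than with row storage;

  - all values in a column share the same data type, so the data compresses more easily, and each column can use the compression algorithm that suits it best, which greatly improves the compression ratio;

  - the better compression ratio both saves disk space and leaves more room for caching.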

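DBMS features

- Covers most of standard SQL, including DDL and DML, together with a wide range of functions. It also provides user and permission management and data backup and restore.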

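Diverse engines

- Like MySQL, ClickHouse makes table-level storage engines pluggable, so each table can use the engine that fits its needs. There are more than 20 engines in four main categories: merge tree, log, interface and other.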

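High write throughput

- ClickHouse uses an LSM-tree-like structure in which written data is compacted periodically in the background. With this structure, all data ingestion is sequential appending, and written data segments are immutable. Background compaction merge-sorts several segments and writes the result back to disk sequentially. Sequential writes make full use of disk throughput, so write performance is excellent even on HDDs.

- Official public benchmarks show write throughput of 50 MB to 200 MB per second. At roughly 100 bytes per row, that is about 500,000 to 2,000,000 rows per second.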

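Data partitioning and thread-level parallelism

- ClickHouse divides data into multiple partitions, and each partition further into multiple index granules (index granularity). Several CPU cores then each process part of them in parallel. With this design a single query can use every CPU in the machine, and this extreme parallelism greatly reduces query latency.

- As a result, ClickHouse can split even queries over very large data sets into small pieces and process them in parallel. The downside is that a single query occupies many CPUs, which makes it harder to run many queries concurrently. High-QPS query workloads are therefore not ClickHouse's strength.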

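2. Performance comparison

- Single-table queries

- Join queries

- Like many OLAP databases, ClickHouse is faster at single-table queries than at joins, and in ClickHouse the gap between the two is especially pronounced.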

Installing ClickHouse

- ClickHouse offers several installation methods. Installation guide: https://clickhouse.com/docs/zh/getting-started/install/

1. Preparation

- Check that the firewall is turned off:

```shell
systemctl status firewalld.service
```

- On CentOS, raise the limits on the number of open files and processes:

```
# vim /etc/security/limits.conf
* soft nofile 65536
* hard nofile 65536
* soft nproc 131072
* hard nproc 131072

# vim /etc/security/limits.d/20-nproc.conf
* soft nofile 65536
* hard nofile 65536
* soft nproc 131072
* hard nproc 131072
```

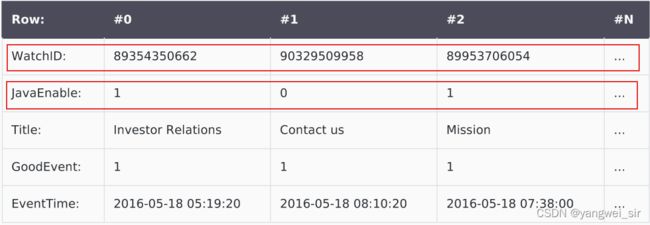

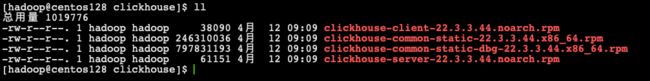

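2. Single-node installation

- Download the packages from https://packages.clickhouse.com/rpm/stable/. The version used here, 22.3.3.44, was the latest at the time of writing.

- Install:

```shell
sudo rpm -ivh *.rpm

# check what was installed
sudo rpm -qa | grep clickhouse
```

- Edit the configuration file and uncomment `<listen_host>::</listen_host>` so that ClickHouse can be reached from machines other than the local host:

```shell
vim /etc/clickhouse-server/config.xml
```

- Start the server:

```shell
systemctl start clickhouse-server

# disable start on boot
systemctl disable clickhouse-server
```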

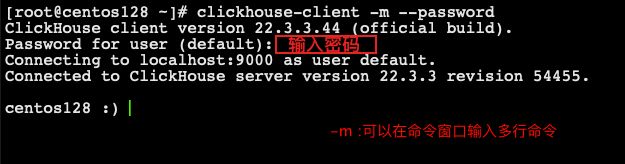

- Then connect to the server with the client, `clickhouse-client`.

3. Installing from tgz

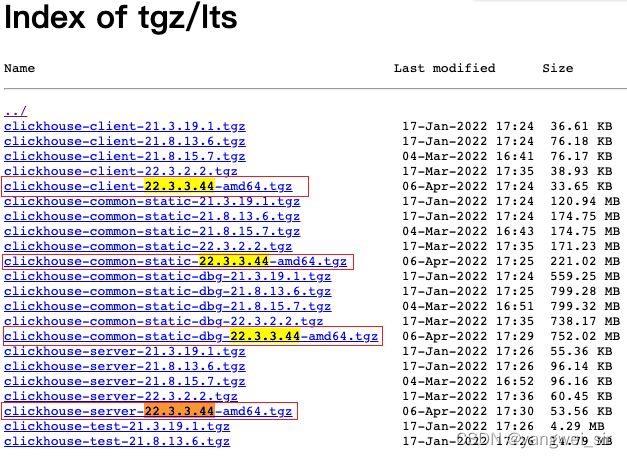

- Download the latest packages from https://packages.clickhouse.com/tgz/lts/

- Unpack and install:

```shell
export LATEST_VERSION=22.3.3.44

# 1.
tar -zxvf clickhouse-common-static-$LATEST_VERSION-amd64.tgz
sudo clickhouse-common-static-$LATEST_VERSION/install/doinst.sh

# 2.
tar -zxvf clickhouse-common-static-dbg-$LATEST_VERSION-amd64.tgz
sudo clickhouse-common-static-dbg-$LATEST_VERSION/install/doinst.sh

# 3.
tar -zxvf clickhouse-client-$LATEST_VERSION-amd64.tgz
sudo clickhouse-client-$LATEST_VERSION/install/doinst.sh

# 4. this step asks you to set a password for the default user
tar -zxvf clickhouse-server-$LATEST_VERSION-amd64.tgz
sudo clickhouse-server-$LATEST_VERSION/install/doinst.sh
```

- Start:

```shell
sudo clickhouse start
```

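- Executables: /usr/bin contains clickhouse, clickhouse-client and the other commands.

- Configuration files: config.xml and users.xml in /etc/clickhouse-server/ are the two most important configuration files.

- Logs: /var/log/clickhouse-server/ holds the detailed server logs.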

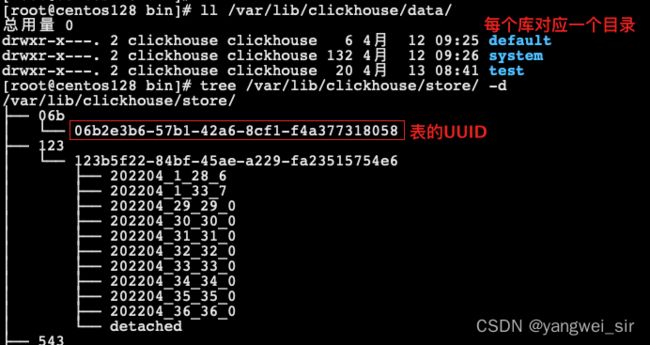

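- Data directory: /var/lib/clickhouse/ contains all the data files ClickHouse uses at runtime. The metadata directory, for example, holds the metadata of all tables. ClickHouse stores each table structure as a SQL file and restores the table definitions by loading these files at startup. The data directory holds the table data itself. The two built-in databases, default and system, correspond to two folders under data.

Also note that the installation creates a clickhouse user that owns these files. If you are not working as root, watch out for file permission issues.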

4. Docker installation

```shell
# 8123: HTTP interface, 9000: native TCP protocol
docker run -di --name docker_ch \
  -p 8123:8123 -p 9000:9000 \
  --ulimit nofile=262144:262144 \
  --volume=/Volumes/D/dokcer_data/clickhouse:/var/lib/clickhouse \
  clickhouse/clickhouse-server
```

Data types

- Official reference: https://clickhouse.com/docs/zh/sql-reference/data-types/

1. Integer types

- Fixed-length integers, signed or unsigned. For an n-bit type the range is $[-2^{n-1},\ 2^{n-1}-1]$ if signed and $[0,\ 2^{n}-1]$ if unsigned.

| Category | Type | Range | Java type |
|---|---|---|---|
| Signed | Int8 | [-128, 127] | byte |
| | Int16 | [-32768, 32767] | short |
| | Int32 | [-2147483648, 2147483647] | int |
| | Int64 | [-9223372036854775808, 9223372036854775807] | long |
| Unsigned | UInt8 | [0, 255] | |
| | UInt16 | [0, 65535] | |
| | UInt32 | [0, 4294967295] | |
| | UInt64 | [0, 18446744073709551615] | |

- Use cases: counts, quantities, stored IDs.

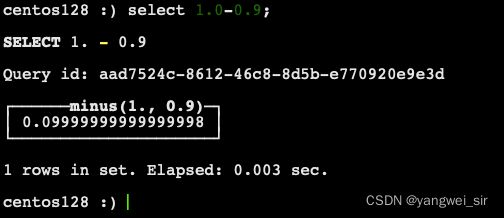
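Every range in the table follows from the formula above. The small Python sketch below recomputes the ranges from the bit width as a quick check. It is plain arithmetic with no ClickHouse involved, and `int_range` is a hypothetical helper.

```python
# Recompute the ranges of ClickHouse's fixed-width integer types from the bit width n.
# Signed IntN: [-2^(n-1), 2^(n-1) - 1]; unsigned UIntN: [0, 2^n - 1].

def int_range(bits: int, signed: bool) -> tuple[int, int]:
    """Return (min, max) for an n-bit two's-complement or unsigned integer."""
    if signed:
        return -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    return 0, 2 ** bits - 1


for bits in (8, 16, 32, 64):
    for signed, prefix in ((True, "Int"), (False, "UInt")):
        low, high = int_range(bits, signed)
        print(f"{prefix}{bits}: [{low}, {high}]")
```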

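2. Floating-point types

- Float32 corresponds to Java float, and Float64 to Java double.

- Store data as integers whenever possible. For example, convert fixed-precision numbers to integer values and represent times in milliseconds, because floating-point arithmetic can introduce rounding errors.

- Use cases: relatively small values that involve little statistical computation and have low precision requirements, such as product weights.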
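The rounding problem is a general property of binary floating point, not something specific to ClickHouse. This Python sketch shows it, together with the integer workaround suggested above. Keeping amounts in cents is only an illustrative choice.

```python
# Binary floating point cannot represent 0.1 or 0.2 exactly,
# so even a simple sum carries a tiny rounding error.
float_sum = 0.1 + 0.2
print(float_sum)          # not exactly 0.3
print(float_sum == 0.3)   # False

# Workaround from the text: store fixed-precision values as integers
# (here amounts in cents) and convert only when displaying them.
prices_in_cents = [10, 20]
total_cents = sum(prices_in_cents)
print(total_cents == 30)           # True: integer arithmetic is exact
print(f"{total_cents / 100:.2f}")  # formatted for display only
```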

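3. Boolean

- Older versions have no separate type for boolean values. Use UInt8 and restrict the values to 0 or 1. Newer releases also provide a Bool type, stored internally as UInt8; check the data type reference for your version.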

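4. Decimal

- Signed fixed-point numbers that keep their precision during addition, subtraction and multiplication. In division, the least significant digits are discarded (not rounded).

- There are three shorthand declarations, where s is the scale (the number of digits after the decimal point):

  - Decimal32(s): equivalent to Decimal(9, s); a generic Decimal(P, s) with precision P from 1 to 9 is stored this way

  - Decimal64(s): equivalent to Decimal(18, s); precision P from 1 to 18

  - Decimal128(s): equivalent to Decimal(38, s); precision P from 1 to 38

  In every case s of the P digits come after the decimal point, leaving P - s digits for the integer part. Decimal32(2), for example, holds values strictly between -10^7 and 10^7.

- Use cases: fields such as amounts, exchange rates and interest rates are stored as Decimal to guarantee decimal precision.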
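This Python sketch uses the standard `decimal` module to mimic two of the Decimal rules above. The first is the value bound of Decimal32(s). The second is division that drops extra digits instead of rounding them. The sketch only models the documented behaviour and the helper names are hypothetical. In real ClickHouse the scale of a division result depends on the operand types, whereas here it is simply fixed.

```python
from decimal import Decimal, ROUND_DOWN


def decimal32_bounds(scale: int) -> tuple[Decimal, Decimal]:
    """Decimal32(S) = Decimal(9, S): values lie strictly between -10^(9-S) and 10^(9-S)."""
    if not 0 <= scale <= 9:
        raise ValueError("scale must be between 0 and 9 for Decimal32")
    limit = Decimal(10) ** (9 - scale)
    return -limit, limit


def divide_truncated(a: str, b: str, scale: int) -> Decimal:
    """Divide, then drop digits beyond `scale` (truncate toward zero, no rounding)."""
    quantum = Decimal(1).scaleb(-scale)  # scale=2 -> 0.01
    return (Decimal(a) / Decimal(b)).quantize(quantum, rounding=ROUND_DOWN)


print(decimal32_bounds(2))               # integer part can hold up to 7 digits
print(divide_truncated("2.00", "3", 2))  # truncated, whereas rounding would give 0.67
print(divide_truncated("-2.00", "3", 2))
```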

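5. String types

- String: a string of arbitrary length. It can contain an arbitrary set of bytes, including null bytes.

- FixedString(N): a fixed-length string of N bytes, where N must be a strictly positive natural number. If the server reads a string shorter than N bytes, it pads the end with null bytes to reach N bytes. If it reads a string longer than N bytes, it returns an error.

- Compared with String, FixedString is rarely used because it is inconvenient to work with.

- Use cases: names, text descriptions and character codes. Fixed-length strings can hold fixed-length content such as certain codes or gender. Given the risk that such content changes later, the benefit is small, so fixed-length strings are of limited use.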
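The helper below is a hypothetical Python model of the FixedString(N) rules: it pads short values with zero bytes and rejects values that are too long. Note that N counts bytes, not characters. In UTF-8 a single Chinese character already takes 3 bytes, which matters for fields such as gender stored as text.

```python
def to_fixed_string(value: str, n: int) -> bytes:
    """Mimic FixedString(N): pad with zero bytes up to N bytes, reject longer values."""
    if n <= 0:
        raise ValueError("N must be a strictly positive integer")
    data = value.encode("utf-8")  # length is measured in bytes, not characters
    if len(data) > n:
        raise ValueError(f"value is {len(data)} bytes, longer than FixedString({n})")
    return data + b"\x00" * (n - len(data))


print(to_fixed_string("ab", 4))  # padded with two zero bytes
print(to_fixed_string("中", 3))  # one character, but already 3 bytes
```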

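6. Enum types

- There are two kinds, Enum8 and Enum16, which store 'string' = integer mappings.

- Usage:

```sql
-- Create the table
create table t_enum (
    x Enum8('hello' = 1, 'world' = 2)
) ENGINE = TinyLog;

-- Insert data
insert into t_enum values('hello'), ('world'), ('hello');

-- Query
select * from t_enum;

-- Inserting any other value raises an error
insert into t_enum values('hehe');

-- Show the numeric value behind each row
select cast(x, 'Int8') from t_enum;
```

- Use cases: for status and type fields an enum is both a space optimization and a data constraint. In practice, however, changes to the set of values add maintenance cost and can even lead to data loss, so use enums with care.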

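7. Date and time types

| Type | Description | Example |
|---|---|---|
| Date | date written as 'YYYY-MM-DD' | 2022-04-12 |
| DateTime | date and time written as 'YYYY-MM-DD hh:mm:ss' | 2022-04-12 12:12:12 |
| DateTime64 | date and time with milliseconds, written as 'YYYY-MM-DD hh:mm:ss.SSS' | 2022-04-12 12:12:12.123 |

- Date is stored in two bytes as the number of days since 1970-01-01 (unsigned).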
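Two unsigned bytes hold at most 65535, and that bounds the Date type. This Python sketch converts between dates and the day count a Date stores, and shows the latest date that fits. The helpers are hypothetical and use only the standard `datetime` module.

```python
from datetime import date, timedelta

EPOCH = date(1970, 1, 1)
MAX_UINT16 = 2 ** 16 - 1  # largest value two unsigned bytes can hold


def date_to_days(d: date) -> int:
    """Days since 1970-01-01, the number a two-byte Date stores."""
    return (d - EPOCH).days


def days_to_date(days: int) -> date:
    """Inverse conversion, limited to what fits in two unsigned bytes."""
    if not 0 <= days <= MAX_UINT16:
        raise ValueError("out of range for a two-byte Date")
    return EPOCH + timedelta(days=days)


print(date_to_days(date(2022, 4, 12)))
print(days_to_date(MAX_UINT16))  # latest representable date
```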

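8. Arrays

- Array(T): an array of elements of type T.

- T can be any type, including another array. Multidimensional arrays are not recommended, however, because ClickHouse's support for them is limited; for example, they cannot be stored in MergeTree tables.

- Usage:

```sql
-- Option 1: create an array with the array function
select array(1, 2) as x, toTypeName(x);

-- Option 2: create an array with square brackets
select [1, 2] as x, toTypeName(x);
```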

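9. Nullable

- Most base types can be wrapped in Nullable() to accept NULL values. A Nullable(Int8) column, for example, can store Int8 values, and rows without a value store NULL.

- Nullable columns cannot be part of table indexes, and using Nullable almost always hurts performance, so avoid it when designing tables. For strings, an empty string can stand in for NULL. For integers, a value with no business meaning, such as -1, can represent NULL.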

10. Other types

- ClickHouse also provides many distinctive data types, such as Geo, Map, Tuple and UUID.

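Database engines

- Before you can use a database you have to create it. ClickHouse offers several database engines for different scenarios.

1. Atomic database engine

- Atomic is ClickHouse's default database engine, and the default database is created with it. You can declare it explicitly when creating a database:

```sql
-- the ENGINE clause is optional
CREATE DATABASE test [ENGINE = Atomic]
```

- A database of the Atomic type is managed entirely by ClickHouse. Each database corresponds to a subdirectory of /var/lib/clickhouse/data/. Each table in the database is assigned a unique UUID, and its data is stored under /var/lib/clickhouse/store/.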

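2. MySQL database engine

- As a data warehouse, ClickHouse also provides many database engines for integrating with other databases, the most common being MySQL.

- The MySQL engine maps the tables of a remote MySQL server into ClickHouse and lets you run INSERT and SELECT queries on them, which makes it easy to exchange data between ClickHouse and MySQL. The engine translates queries into MySQL syntax and sends them to the MySQL server, so you can also run statements such as SHOW TABLES or SHOW CREATE TABLE.

- The MySQL engine can save a lot of ETL work. For example, the following statement creates a database named mysqldb in ClickHouse:

```sql
CREATE DATABASE IF NOT EXISTS mysqldb ENGINE = MySQL('node01:3306', 'test', 'root', '123456');
```

- Operations on mysqldb are translated into MySQL syntax and executed on the corresponding MySQL server. You can then run insert, delete and similar operations as if they were ClickHouse's own tables, but RENAME, CREATE TABLE and ALTER are not supported.

- With this engine ClickHouse stores no data itself and only forwards requests to MySQL. ClickHouse provides similar database engines for PostgreSQL and SQLite.

- Is plain request forwarding not enough, or not fast enough? ClickHouse also provides materialized engines that store the data themselves: MaterializedMySQL for MySQL and MaterializedPostgreSQL for PostgreSQL. Both make the ClickHouse server act as a replica of the corresponding database and replay the primary's log to synchronize its data into ClickHouse in real time. Both engines were still experimental at the time of writing, so you can try them, but they are not recommended for production.

- For details, see the official documentation: https://clickhouse.com/docs/zh/engines/database-engines/materialized-mysql/

- In practice, ClickHouse's default engine is enough for most scenarios, and integration with other databases is usually handled by custom ETL processes. Even so, these engines show how much ClickHouse has to offer.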

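Table engines

- Official documentation: https://clickhouse.com/docs/zh/engines/table-engines/

- Table engines are a defining feature of ClickHouse: a table's engine determines how its data is stored, including:

  - how and where the data is stored, where it is written to and read from;

  - which queries are supported, and how;

  - concurrent data access;

  - use of indexes (if any);

  - whether requests can be executed with multiple threads;

  - data replication parameters.

- A table's engine, together with its parameters, must be declared explicitly when the table is created.

- Note: engine names are case-sensitive.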

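1. TinyLog

- Stores data on disk as column files, with no index support and no concurrency control. It is generally used for small tables holding little data. It is of limited use in production but handy for practice and testing.

```sql
CREATE TABLE t_tinylog (id Int32, name String) ENGINE = TinyLog;
```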

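2. Memory

- An in-memory engine: data is kept in memory in uncompressed, raw form and disappears when the server restarts. Reads and writes do not block each other, and indexes are not supported. Simple queries are extremely fast (over 10 GB/s).

- It is not used often. Apart from testing, it fits scenarios that need very high performance with a modest data volume (up to roughly 100 million rows).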

3. MergeTree

- The most powerful table engines in ClickHouse are MergeTree and the other members of the *MergeTree family. They support indexes and partitioning, and MergeTree is to ClickHouse roughly what InnoDB is to MySQL.

- Table creation syntax:

```sql
CREATE TABLE [IF NOT EXISTS] [db.]table_name [ON CLUSTER cluster]
(
    name1 [type1] [DEFAULT|MATERIALIZED|ALIAS expr1] [TTL expr1],
    name2 [type2] [DEFAULT|MATERIALIZED|ALIAS expr2] [TTL expr2],
    ...
    INDEX index_name1 expr1 TYPE type1(...) GRANULARITY value1,
    INDEX index_name2 expr2 TYPE type2(...) GRANULARITY value2
) ENGINE = MergeTree()
ORDER BY expr
[PARTITION BY expr]
[PRIMARY KEY expr]
[SAMPLE BY expr]
[TTL expr [DELETE|TO DISK 'xxx'|TO VOLUME 'xxx'], ...]
[SETTINGS name=value, ...]
```

- MergeTree has many parameters, but the three most important are partition by, primary key and order by.

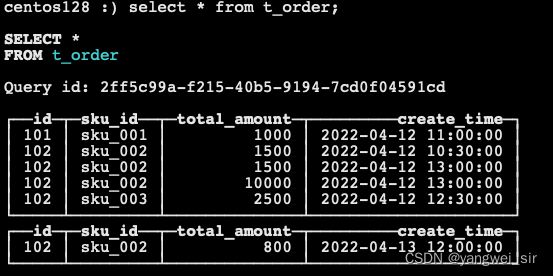

```sql
-- Create an order table
create table t_order (
    id UInt32,
    sku_id String,
    total_amount Decimal(16, 2),
    create_time Datetime
) engine = MergeTree
partition by toYYYYMMDD(create_time)
primary key (id)
order by (id, sku_id);

-- Insert data
insert into t_order values
    (101, 'sku_001', 1000.00, '2022-04-12 11:00:00'),
    (102, 'sku_002', 1500.00, '2022-04-12 10:30:00'),
    (102, 'sku_003', 2500.00, '2022-04-12 12:30:00'),
    (102, 'sku_002', 1500.00, '2022-04-12 13:00:00'),
    (102, 'sku_002', 10000.00, '2022-04-12 13:00:00'),
    (102, 'sku_002', 800.00, '2022-04-13 12:00:00');
```

partition by 分区(可选)

- 作用:降低数据扫描范围,优化查询速度。例如示例中,按创建时间 create_time 进行了分区,当查询条件 where 中指定了日期,就只需要去扫描对应日期的数据,而不用进行全表扫描了。

- 使用分区后,涉及到跨分区的查询操作,clickhouse 将会以分区为单位进行并行处理。在clickhouse中这是一个可选项,如果不填,相当于只用一个分区。

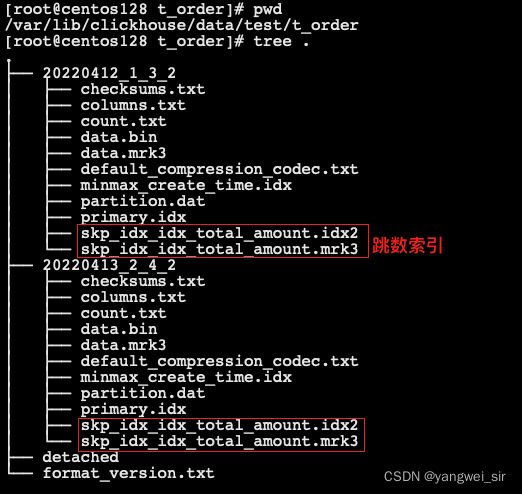

- 分区目录:MergeTree 是以列文件+索引文件+表定义文件组成的,但是如果设定了分区那么这些文件就会保存到不同的分区目录中。

```shell
[root@centos128 t_order]# cd /var/lib/clickhouse/data/test/t_order/
[root@centos128 t_order]# tree .
.
├── 20220412_1_1_0
│   ├── checksums.txt                  # checksums: size and hash of every file, used to verify them
│   ├── columns.txt                    # column names and types of the table
│   ├── count.txt                      # number of rows in this directory, so counting rows is extremely fast
│   ├── data.bin                       # data file
│   ├── data.mrk3                      # mark file, the bridge between the idx index file and the bin data file
│   ├── default_compression_codec.txt
│   ├── minmax_create_time.idx         # min and max values of the partition key column
│   ├── partition.dat
│   └── primary.idx                    # primary index file, speeds up queries
├── 20220413_2_2_0
│   ├── checksums.txt
│   ├── columns.txt
│   ├── count.txt
│   ├── data.bin
│   ├── data.mrk3
│   ├── default_compression_codec.txt
│   ├── minmax_create_time.idx
│   ├── partition.dat
│   └── primary.idx
├── detached
└── format_version.txt
```
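The partition pruning described in the partition by bullets can be modelled in a few lines. This is a toy Python model with hypothetical names, not how ClickHouse is implemented. Rows are grouped by a partition ID computed like `toYYYYMMDD(create_time)`, and a filter on one day only touches that day's group.

```python
from collections import defaultdict
from datetime import datetime


def to_yyyymmdd(ts: datetime) -> str:
    """Partition ID for PARTITION BY toYYYYMMDD(create_time)."""
    return ts.strftime("%Y%m%d")


# (id, sku_id, total_amount, create_time), a subset of the t_order sample rows
rows = [
    (101, "sku_001", 1000.00, datetime(2022, 4, 12, 11, 0)),
    (102, "sku_002", 1500.00, datetime(2022, 4, 12, 10, 30)),
    (102, "sku_002", 800.00, datetime(2022, 4, 13, 12, 0)),
]

# Group rows into partitions, like the partition directories above
partitions: dict[str, list] = defaultdict(list)
for row in rows:
    partitions[to_yyyymmdd(row[3])].append(row)


def scan_day(day: str) -> list:
    """A WHERE condition on the partition key only opens one partition."""
    return partitions.get(day, [])


print(sorted(partitions))
print(len(scan_day("20220413")))  # the 20220412 partition is never read
```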

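- Directory names follow the pattern PartitionId_MinBlockNum_MaxBlockNum_Level, that is, partition ID, minimum block number, maximum block number and merge level:

  - PartitionId: the partition ID, generated according to the PARTITION BY key:

    - no partition key: a single partition named all is created, and all data is stored under the all directory;

    - integer partition key: the string form of the integer value is used directly as the partition ID;

    - date partition key: the date formatted as YYYYMMDD is used as the partition ID;

    - other partition key types (String, Float, etc.): a 128-bit hash of the value is used as the partition ID.

  - MinBlockNum: the minimum block number, an auto-incrementing counter that starts at 1 and goes up by one for every new directory. The counter is shared across the table, which is why the second directory above is 20220413_2_2_0.

  - MaxBlockNum: the maximum block number; for a newly created directory, MinBlockNum equals MaxBlockNum.

  - Level: the merge level, i.e. how many times the data has been merged; the more merges, the higher the level.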
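The naming rule can be sketched in Python with hypothetical helpers. The merge rule used here reflects how merged directories are named: take the smallest MinBlockNum, the largest MaxBlockNum, and the highest Level plus one. Treat the code as an illustration, not a specification.

```python
from typing import NamedTuple


class PartName(NamedTuple):
    partition_id: str
    min_block: int
    max_block: int
    level: int

    def to_name(self) -> str:
        return f"{self.partition_id}_{self.min_block}_{self.max_block}_{self.level}"


def parse_part(name: str) -> PartName:
    """Split PartitionId_MinBlockNum_MaxBlockNum_Level (the last three fields are numbers)."""
    partition_id, min_block, max_block, level = name.rsplit("_", 3)
    return PartName(partition_id, int(min_block), int(max_block), int(level))


def merged_name(names: list[str]) -> str:
    """Name of the directory produced by merging directories of one partition."""
    parts = [parse_part(n) for n in names]
    if len({p.partition_id for p in parts}) != 1:
        raise ValueError("directories of different partitions are never merged together")
    return PartName(
        parts[0].partition_id,
        min(p.min_block for p in parts),
        max(p.max_block for p in parts),
        max(p.level for p in parts) + 1,
    ).to_name()


print(parse_part("20220412_1_1_0"))
print(merged_name(["20220412_1_1_0", "20220412_3_3_0"]))
```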

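- Parallelism: with partitioning, ClickHouse processes queries and aggregations that span partitions one partition at a time, in parallel.

- Data writes and merges: every batch of inserted data creates a new temporary partition directory and is not added to any existing one. At some later point (roughly 10 to 15 minutes afterwards), ClickHouse merges automatically and folds the temporary data into the existing partition. If you cannot wait, trigger the merge manually with OPTIMIZE:

```sql
OPTIMIZE TABLE ${table_name} FINAL;
```

- Checking partition merges in the log:

```shell
cd /var/log/clickhouse-server
# the UUID identifies the table in the author's environment; use your own table's UUID
grep -C 10 'c145f3c8-0833-4fa3-9dc1-17adf3ba65b7' clickhouse-server.log
```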

primary key(可选)

- ClickHouse 中的主键和其它数据库不太一样,它只提供了数据的一级索引,但是却不是唯一约束。这就意味着是可以存在相同 primary key 的数据的。

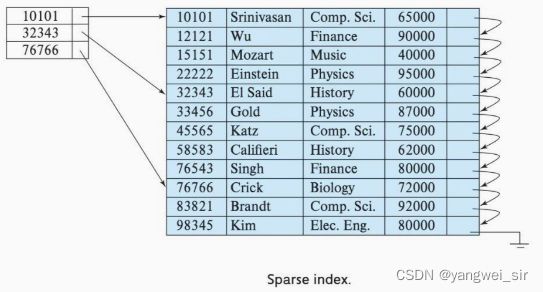

- 主键的设定主要依据是查询语句中的 where 条件,根据条件通过对主键进行某种形式的二分查找,能够定位到对应的 index granularity,避免了全表扫描。

- index granularity:索引粒度,指在稀疏索引中两个相邻索引对应数据的间隔。ClickHouse 中的 MergeTree 默认是 8192。官方不建议修改这个值,除非该列存在大量重复值,比如在一个分区中几万行才有一个不同数据。

- 稀疏索引:可以用很少的索引数据,定位更多的数据,代价就是只能定位到索引粒度的第一行,然后再进行进行一点扫描。
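Below is a simplified Python model of a sparse primary index, using hypothetical names and a tiny granularity of 4 instead of 8192. It keeps one mark per granule, binary-searches the marks, then scans only the candidate granules. The primary key is not unique, so a key can also sit at the end of the granule before the first matching mark, and that granule is scanned too. ClickHouse's real index analysis works on mark ranges and is more elaborate.

```python
from bisect import bisect_left, bisect_right


def build_sparse_index(sorted_keys: list[int], granularity: int) -> list[int]:
    """Keep one mark per granule: the key of the granule's first row."""
    return sorted_keys[::granularity]


def candidate_granules(marks: list[int], key: int) -> range:
    """Granules that may contain `key`, found by binary search over the marks."""
    # Keys repeat, so `key` may also end the granule before the first equal mark.
    first = max(bisect_left(marks, key) - 1, 0)
    last = bisect_right(marks, key) - 1  # -1 when key is below every mark
    return range(first, last + 1)


def lookup(sorted_keys: list[int], granularity: int, key: int) -> list[int]:
    """Return the row positions holding `key`, scanning only candidate granules."""
    marks = build_sparse_index(sorted_keys, granularity)
    positions = []
    for g in candidate_granules(marks, key):
        start = g * granularity
        for pos in range(start, min(start + granularity, len(sorted_keys))):
            if sorted_keys[pos] == key:
                positions.append(pos)
    return positions


keys = [1, 1, 2, 3, 5, 5, 5, 5, 7, 8, 8, 9, 10, 12, 12, 12, 13, 15, 18, 20]
print(build_sparse_index(keys, 4))
print(lookup(keys, 4, 12))
```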

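order by (required)

- Specifies the columns by which data within a partition is kept sorted.

- order by is the only required clause of MergeTree, and it is even more important than primary key. When no primary key is set, much of the processing is based on the order by columns, for example the deduplication and summing covered later.

- Note: the primary key must be a prefix of the order by columns.

  - For example, with order by (id, sku_id) the primary key must be id or (id, sku_id).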
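The prefix rule can be written as a simple check. This minimal Python sketch uses a hypothetical function name and treats each key as a tuple of column names:

```python
def is_valid_primary_key(primary_key: tuple[str, ...], order_by: tuple[str, ...]) -> bool:
    """PRIMARY KEY must be a non-empty prefix of the ORDER BY columns."""
    n = len(primary_key)
    return 0 < n <= len(order_by) and order_by[:n] == primary_key


order_by = ("id", "sku_id")
for pk in [("id",), ("id", "sku_id"), ("sku_id",), ("id", "create_time")]:
    print(pk, is_valid_primary_key(pk, order_by))
```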

二级索引

- 目前在 ClickHouse 的官网上二级索引的功能在 v20.1.2.4 之前是被标注为实验性的,在这个版本之后默认是开启的。

- 老版本使用二级索引前需要增加设置

set allow_experimental_data_skipping_indices=1;

- 添加索引:其中 GRANULARITY 是指定二级索引对于一级索引的粒度

ALTER TABLE t_order ADD INDEX idx_total_amount total_amount TYPE minmax GRANULARITY 5;

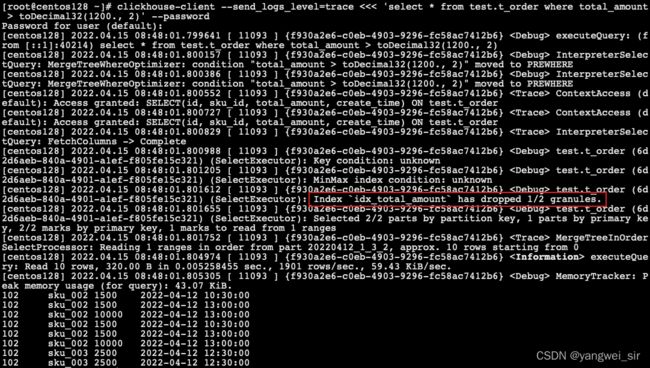

- 查询测试:二级索引能够为非主键字段的查询发挥作用

clickhouse-client --send_logs_level=trace <<< 'select * from test.t_order where total_amount > toDecimal32(1200., 2)' --password
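GRANULARITY 5 in the ALTER statement means that one minmax entry summarizes 5 granules of the primary index. The toy Python model below uses hypothetical names and tiny sizes. It shows how such entries let a `total_amount > x` filter skip whole blocks: a block whose maximum is not above the threshold cannot contain a match.

```python
def build_minmax_index(values, index_granularity, granularity):
    """One (min, max) entry per block of `granularity` granules of `index_granularity` rows."""
    block_rows = index_granularity * granularity
    index = []
    for start in range(0, len(values), block_rows):
        block = values[start:start + block_rows]
        index.append((min(block), max(block)))
    return index


def blocks_to_read(index, threshold):
    """For WHERE col > threshold, skip every block whose max is <= threshold."""
    return [i for i, (_low, high) in enumerate(index) if high > threshold]


amounts = [100, 200, 300, 400, 1500, 1600, 50, 60, 2000, 10, 20, 30]
idx = build_minmax_index(amounts, index_granularity=2, granularity=2)
print(idx)
print(blocks_to_read(idx, 1200))  # block 0 is skipped without being read
```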

Data TTL

- TTL stands for Time To Live. MergeTree can manage the lifecycle of a whole table or of individual columns.

- Column-level TTL:

```sql
-- Create a test table
create table t_order2 (
    id UInt32,
    sku_id String,
    total_amount Decimal(16, 2) TTL create_time + interval 10 SECOND,
    create_time Datetime
) engine = MergeTree
partition by toYYYYMMDD(create_time)
primary key (id)
order by (id, sku_id);

-- Insert data
insert into t_order2 values
    (101, 'sku_001', 1000.00, '2022-04-15 09:00:00'),
    (102, 'sku_002', 1500.00, '2022-04-15 10:30:00'),
    (102, 'sku_003', 2500.00, '2022-04-15 12:30:00'),
    (102, 'sku_002', 1500.00, '2022-04-15 13:00:00'),
    (102, 'sku_002', 10000.00, '2022-04-15 13:00:00'),
    (102, 'sku_002', 800.00, '2022-04-15 12:00:00');
```

- Table-level TTL:

  - You can set one expression that removes expired rows, plus several expressions that automatically move data parts between disks or volumes:

```sql
TTL expr
    [DELETE|TO DISK 'xxx'|TO VOLUME 'xxx'][, DELETE|TO DISK 'aaa'|TO VOLUME 'bbb'] ...
    [WHERE conditions]
    [GROUP BY key_expr [SET v1 = aggr_func(v1) [, v2 = aggr_func(v2) ...]] ]

-- The rule type follows each TTL expression and determines what happens
-- once the expression is satisfied (the specified time is reached):
-- DELETE - delete expired rows (the default);
-- TO DISK 'aaa' - move the data part to disk aaa;
-- TO VOLUME 'bbb' - move the data part to volume bbb;
-- GROUP BY - aggregate expired rows.

-- Example: with this statement, whole rows expire 10 seconds after create_time
ALTER TABLE t_order2 MODIFY TTL create_time + INTERVAL 10 SECOND;
```

- A TTL cannot be set on a primary key column, and the TTL expression must evaluate to a Date or DateTime. Using the date column the table is partitioned by is recommended. Available time units:

  - SECOND, MINUTE, HOUR, DAY, WEEK, MONTH, QUARTER, YEAR.
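The difference between column-level and table-level TTL can be sketched in Python. The model is hypothetical and simplified. ClickHouse applies TTL rules when data parts are merged, so expired data may stay visible for a while, whereas the sketch applies them at a given moment `now`. Per the ClickHouse documentation, expired column values are replaced with the default value of the column type, which is 0 for numbers.

```python
from datetime import datetime, timedelta

TTL = timedelta(seconds=10)  # create_time + INTERVAL 10 SECOND


def expired(row, now):
    """A row is expired once create_time + TTL lies in the past."""
    return row["create_time"] + TTL < now


def apply_column_ttl(rows, now):
    """Column TTL on total_amount: expired values fall back to the type default (0)."""
    return [{**row, "total_amount": 0} if expired(row, now) else row for row in rows]


def apply_table_ttl(rows, now):
    """Table TTL (DELETE): expired rows are removed entirely."""
    return [row for row in rows if not expired(row, now)]


rows = [
    {"id": 101, "total_amount": 1000, "create_time": datetime(2022, 4, 15, 9, 0, 0)},
    {"id": 102, "total_amount": 1500, "create_time": datetime(2022, 4, 15, 10, 30, 0)},
]
now = datetime(2022, 4, 15, 10, 0, 0)  # row 101 is past its TTL, row 102 is not
print(apply_column_ttl(rows, now))
print(apply_table_ttl(rows, now))
```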

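4. ReplacingMergeTree

- A variant of MergeTree that inherits all of its storage characteristics and adds deduplication. MergeTree lets you set a primary key, but the primary key does not enforce uniqueness. If you want to get rid of duplicate data, you can use ReplacingMergeTree.

Deduplication mechanism

- Deduplication only happens during merges. Merges run in the background at unpredictable times, so you cannot plan for them, and some data may remain unprocessed.

Deduplication scope

- If the table is partitioned, deduplication only happens within a partition; duplicates across partitions are not removed.

- ReplacingMergeTree is therefore of limited power. It is suitable for clearing out duplicate data in the background to save space, but it does not guarantee that duplicates never appear.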

案例演示

- 创建表

create table t_order_rmt(

id UInt32,

sku_id String,

total_amount Decimal(16, 2),

create_time Datetime

) engine = ReplacingMergeTree(create_time)

partition by toYYYYMMDD(create_time)

primary key (id)

order by (id, sku_id);

- ReplacingMergeTree() 填入的参数为版本字段,重复数据保留版本字段值最大的。如果不填版本字段,默认按照插入顺序保留最后一条。

- 插入数据:

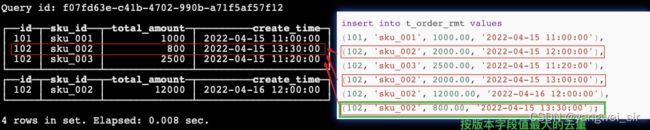

insert into t_order_rmt values (101, 'sku_001', 1000.00, '2022-04-15 11:00:00'), (102, 'sku_002', 2000.00, '2022-04-15 12:00:00'), (102, 'sku_003', 2500.00, '2022-04-15 11:20:00'), (102, 'sku_002', 2000.00, '2022-04-15 13:00:00'), (102, 'sku_002', 12000.00, '2022-04-16 12:00:00'), (102, 'sku_002', 800.00, '2022-04-15 13:30:00');

- 再次插入数据,并执行查询:

select * from t_order_rmt;

-- 手动合并

OPTIMIZE TABLE t_order_rmt FINAL;

-- 再次查询

select * from t_order_rmt;

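Conclusions

- The order by columns effectively act as the unique key;

- Deduplication does not cross partitions;

- Deduplication only happens within the same insert batch (in newer versions) or when partitions are merged;

- Among rows considered duplicates, the one with the largest version value is kept;

- If the version values are equal, the last row in insertion order is kept.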
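The conclusions above can be condensed into a small Python model of a ReplacingMergeTree merge, run on the sample rows from the insert statement. The function names are hypothetical, and the model ignores when merges actually happen. It only shows which row survives for each (partition, order by key).

```python
from datetime import datetime
from decimal import Decimal

# Sample rows from the insert statement: (id, sku_id, total_amount, create_time)
ROWS = [
    (101, "sku_001", Decimal("1000.00"), datetime.fromisoformat("2022-04-15 11:00:00")),
    (102, "sku_002", Decimal("2000.00"), datetime.fromisoformat("2022-04-15 12:00:00")),
    (102, "sku_003", Decimal("2500.00"), datetime.fromisoformat("2022-04-15 11:20:00")),
    (102, "sku_002", Decimal("2000.00"), datetime.fromisoformat("2022-04-15 13:00:00")),
    (102, "sku_002", Decimal("12000.00"), datetime.fromisoformat("2022-04-16 12:00:00")),
    (102, "sku_002", Decimal("800.00"), datetime.fromisoformat("2022-04-15 13:30:00")),
]


def partition_of(row):
    """PARTITION BY toYYYYMMDD(create_time)."""
    return row[3].strftime("%Y%m%d")


def sort_key(row):
    """ORDER BY (id, sku_id), which acts as the deduplication key."""
    return (row[0], row[1])


def replacing_merge(rows, version=None):
    """Keep one row per (partition, sorting key).

    With a version function the largest version wins; on equal versions,
    or with no version column at all, the row inserted last wins.
    """
    kept = {}
    for row in rows:  # rows are in insertion order
        key = (partition_of(row), sort_key(row))
        if key not in kept or version is None or version(row) >= version(kept[key]):
            kept[key] = row
    return [kept[key] for key in sorted(kept)]


# ReplacingMergeTree(create_time): create_time is the version column
for row in replacing_merge(ROWS, version=lambda r: r[3]):
    print(row)
```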

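5. SummingMergeTree

- Some scenarios never query detail rows and only care about results aggregated by dimension. With a plain MergeTree, both the storage overhead and the cost of aggregating at query time are high.

- For such scenarios ClickHouse provides SummingMergeTree, an engine that can "pre-aggregate" data.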

案例演示

- 创建表:

create table t_order_smt(

id UInt32,

sku_id String,

total_amount Decimal(16, 2),

create_time Datetime

) engine = SummingMergeTree(total_amount)

partition by toYYYYMMDD(create_time)

primary key (id)

order by (id, sku_id);

- 插入数据:

insert into t_order_smt values (101, 'sku_001', 1000.00, '2022-04-15 11:00:00'), (102, 'sku_002', 2000.00, '2022-04-15 12:00:00'), (102, 'sku_003', 2500.00, '2022-04-15 11:20:00'), (102, 'sku_002', 2000.00, '2022-04-15 13:00:00'), (102, 'sku_002', 12000.00, '2022-04-16 12:00:00'), (102, 'sku_002', 800.00, '2022-04-15 13:30:00');

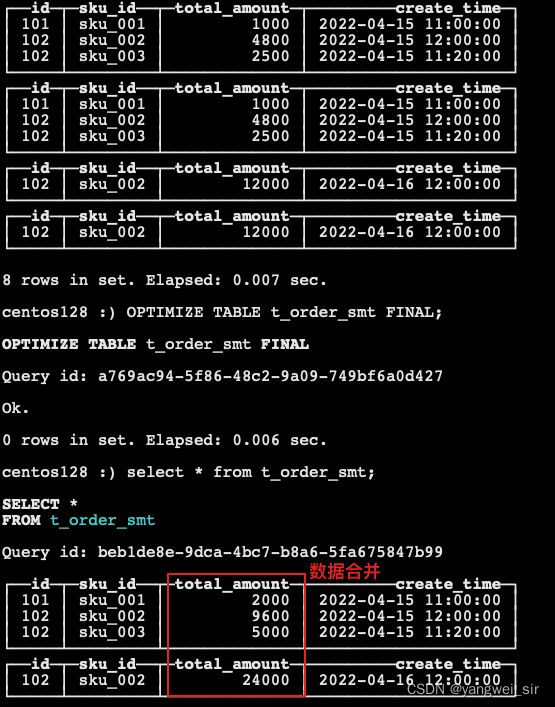

- 再次插入数据,并执行查询:

select * from t_order_smt;

-- 手动合并

OPTIMIZE TABLE t_order_smt FINAL;

-- 再次查询

select * from t_order_smt;

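Conclusions

- The columns given in SummingMergeTree() are the summed columns;

- Several columns can be listed, and they must be numeric. If none are given, every numeric column that is not a dimension column is summed;

- The order by columns are the dimension columns;

- Other columns keep the value of the first row in insertion order;

- Data in different partitions is not aggregated;

- Aggregation only happens within the same insert batch (in newer versions) or when parts are merged.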
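Likewise, here is a small Python model of the SummingMergeTree rules applied to the same sample rows. The names are hypothetical. As in the conclusions above, columns that are not summed keep the value from the first row of each group.

```python
from datetime import datetime
from decimal import Decimal


def make_row(id_, sku_id, amount, ts):
    return {"id": id_, "sku_id": sku_id, "total_amount": Decimal(amount),
            "create_time": datetime.fromisoformat(ts)}


# Sample rows from the insert statement, in insertion order
ROWS = [
    make_row(101, "sku_001", "1000.00", "2022-04-15 11:00:00"),
    make_row(102, "sku_002", "2000.00", "2022-04-15 12:00:00"),
    make_row(102, "sku_003", "2500.00", "2022-04-15 11:20:00"),
    make_row(102, "sku_002", "2000.00", "2022-04-15 13:00:00"),
    make_row(102, "sku_002", "12000.00", "2022-04-16 12:00:00"),
    make_row(102, "sku_002", "800.00", "2022-04-15 13:30:00"),
]


def summing_merge(rows, sum_columns=("total_amount",)):
    """Collapse rows sharing (partition, ORDER BY key), summing `sum_columns`."""
    merged = {}
    for row in rows:
        key = (row["create_time"].strftime("%Y%m%d"), (row["id"], row["sku_id"]))
        if key not in merged:
            merged[key] = dict(row)  # first row supplies the non-summed columns
        else:
            for col in sum_columns:
                merged[key][col] += row[col]
    return [merged[key] for key in sorted(merged)]


for row in summing_merge(ROWS):
    print(row)
```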

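Development advice and questions

- When designing an aggregate table, drop unique keys and serial numbers; every column should be a dimension, a measure or a timestamp.

Can the following SQL be used directly to get the summed value?

```sql
select total_amount from t_order_smt where sku_id = 'xxx' and create_time = 'yyy';
```

- No. The result may include temporary detail rows that have not been aggregated yet.

- To get the summed value you still need to aggregate with sum. Pre-aggregation makes this somewhat faster, but ClickHouse is a column store to begin with, so the gain is limited and not especially noticeable.

```sql
select sum(total_amount) from t_order_smt where sku_id = 'xxx' and create_time = 'yyy';
```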