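Android Camera2: Getting Preview Frame Callback Data (with Demo)

I. Introduction

With the legacy Camera API, preview frame data is obtained through setPreviewCallback or setPreviewCallbackWithBuffer. Camera2 no longer has these methods, so how do we get preview frames there?

In Camera2, preview frames are obtained indirectly through the ImageReader class: each frame is delivered in the onImageAvailable(ImageReader reader) callback. The details follow below.

If you are not yet familiar with Camera2 development, it is worth learning the basics first.

II. Development Steps

1. Create

Declare an ImageReader field to receive the preview frames.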

private ImageReader mPreviewImageReader;

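A good time to create the instance is when the camera is opened.

2. Initialize

Before this step the preview size must already be chosen. After all, only once the preview size is fixed do we know how large a buffer is needed to receive the data.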

mPreviewImageReader = ImageReader.newInstance(
        mPreviewSize.getWidth(),   // width
        mPreviewSize.getHeight(),  // height
        ImageFormat.YUV_420_888,   // image format
        2);                        // maximum number of images the user can hold at the same time

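The available image formats are listed in the ImageFormat class. Note that not every format is supported for Camera2 preview; ImageFormat.NV21, for example, is no longer supported. Formats such as ImageFormat.JPEG are also not recommended for preview, because the encoding costs a lot of performance. A format such as ImageFormat.YUV_420_888 is the recommended choice.

Aside: about YUV_420_888

YUV is a color space, and YUV-based color encoding is common in streaming media. "Y" is the luminance (luma), while "U" and "V" are the chrominance (chroma).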

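YUV420 is a family of formats; the name alone does not fix the storage order of the samples. For a 4x4 image, every YUV420 format holds 16 Y values, 4 U values and 4 V values, and the formats differ only in how Y, U and V are arranged. I420 (one of the YUV420Planar formats) stores them as YYYYYYYYYYYYYYYYUUUUVVVV, while NV21 (YUV420SemiPlanar) stores them as YYYYYYYYYYYYYYYYVUVUVUVU, with V ahead of U in each pair.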
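To make the two layouts concrete, here is a minimal sketch that prints the sample order for a given image size. The class and method names (Yuv420Layout, frameSize, i420Order, nv21Order) are invented for illustration and do not come from the demo project; the sketch assumes 8-bit samples and even dimensions.

```java
final class Yuv420Layout {

    /** Bytes in one 8-bit YUV420 frame: a full-size Y plane plus two quarter-size chroma planes. */
    static int frameSize(int width, int height) {
        return width * height * 3 / 2;
    }

    /** Repeats a string count times (kept local so the sketch also compiles on older JDKs). */
    static String repeat(String unit, int count) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < count; i++) {
            sb.append(unit);
        }
        return sb.toString();
    }

    /** I420 (planar): all Y samples, then all U samples, then all V samples. */
    static String i420Order(int width, int height) {
        int ySize = width * height;
        int chromaSize = ySize / 4;
        return repeat("Y", ySize) + repeat("U", chromaSize) + repeat("V", chromaSize);
    }

    /** NV21 (semi-planar): all Y samples, then interleaved V/U pairs. */
    static String nv21Order(int width, int height) {
        int ySize = width * height;
        int chromaSize = ySize / 4;
        return repeat("Y", ySize) + repeat("VU", chromaSize);
    }

    public static void main(String[] args) {
        System.out.println(frameSize(4, 4));   // 24 bytes for a 4x4 image
        System.out.println(i420Order(4, 4));
        System.out.println(nv21Order(4, 4));
    }
}
```

Both layouts take the same number of bytes, which is why a buffer in one layout can be silently misread as the other and only the colors come out wrong.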

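In Camera2, YUV_420_888 is usually laid out as YUV420Planar (this may differ between phones; the few phones I have used all produced this layout). In practice, check the pixelStride of the captured planes to determine the actual layout before converting the data. pixelStride is the distance in bytes between adjacent samples of the same plane within a row: a value of 1 means the chroma samples are tightly packed (planar), while a value of 2 typically means U and V are interleaved (semi-planar).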
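The role of pixelStride (and of the row stride) can be sketched with plain java.nio buffers, so the example runs without a device. ChromaPacker and packPlane are hypothetical names; on Android, the buffer and the two stride values would come from Image.Plane's getBuffer(), getRowStride() and getPixelStride().

```java
import java.nio.ByteBuffer;
import java.util.Arrays;

final class ChromaPacker {

    /**
     * Copies a plane of planeWidth x planeHeight samples into a tightly packed array.
     *
     * @param rowStride   bytes between the starts of two consecutive rows
     * @param pixelStride bytes between two adjacent samples within a row
     */
    static byte[] packPlane(ByteBuffer plane, int planeWidth, int planeHeight,
                            int rowStride, int pixelStride) {
        byte[] out = new byte[planeWidth * planeHeight];
        int index = 0;
        for (int row = 0; row < planeHeight; row++) {
            int rowStart = row * rowStride;
            for (int col = 0; col < planeWidth; col++) {
                // Absolute get: does not move the buffer's position
                out[index++] = plane.get(rowStart + col * pixelStride);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        // 2x2 chroma plane of a 4x4 image with interleaved chroma (pixelStride = 2, rowStride = 4).
        // The value 99 stands for samples of the other chroma channel; the buffer ends one byte
        // early, as interleaved chroma buffers often do.
        ByteBuffer interleaved = ByteBuffer.wrap(new byte[] {10, 99, 11, 99, 12, 99, 13});
        System.out.println(Arrays.toString(packPlane(interleaved, 2, 2, 4, 2)));

        // The same plane stored tightly packed (pixelStride = 1, rowStride = 2)
        ByteBuffer planar = ByteBuffer.wrap(new byte[] {10, 11, 12, 13});
        System.out.println(Arrays.toString(packPlane(planar, 2, 2, 2, 1)));
    }
}
```

Both calls produce the same four samples, which is the point: once the strides are honored, the caller no longer needs to care which layout the device chose.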

3. Release

When the ImageReader is no longer needed, it has to be released manually. A good time to do so is when the camera is released.

if (mPreviewImageReader != null) {
    mPreviewImageReader.close();
    mPreviewImageReader = null;
}

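4. Add it to the preview request to receive frame data

This is the most important step: add the ImageReader's surface as a target of the preview CaptureRequest.Builder, and the corresponding frame data starts arriving.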

private void initPreviewRequest() {
    try {
        mPreviewRequestBuilder = mCameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_PREVIEW);
        mPreviewRequestBuilder.addTarget(mPreviewSurface); // Surface that displays the preview
        mPreviewRequestBuilder.addTarget(mPreviewImageReader.getSurface()); // Surface that feeds the preview callback
        mCameraDevice.createCaptureSession(Arrays.asList(mPreviewSurface, mPreviewImageReader.getSurface()),
                new CameraCaptureSession.StateCallback() {
                    @Override
                    public void onConfigured(CameraCaptureSession session) {
                        mCaptureSession = session;
                        startPreview();
                    }

                    @Override
                    public void onConfigureFailed(CameraCaptureSession session) {
                        Log.e(TAG, "ConfigureFailed. session: mCaptureSession");
                    }
                }, mBackgroundHandler); // passing null as the handler would use the current thread's Looper
    } catch (CameraAccessException e) {
        e.printStackTrace();
    }
}

5. Add a callback listener

Register a listener on the ImageReader; the preview frame data is then available inside the callback method.

mPreviewImageReader.setOnImageAvailableListener(new ImageReader.OnImageAvailableListener() {
    @Override
    public void onImageAvailable(ImageReader reader) {
        // do something
    }
}, null);

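III. Data Processing

The onImageAvailable(ImageReader reader) callback above does not hand us the familiar byte[] array but an ImageReader object. We acquire an Image from that ImageReader and can then extract the familiar byte[] array from its planes.

For how to extract the data, see this blog post:

"Android下使用camera2和Surfaceview预览图像并取得YUV420p数据回调" (Previewing images with camera2 and SurfaceView on Android and getting YUV420p data callbacks)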

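Also, image format conversions such as turning the preview YUV data into RGB are not efficient in Java. It is better to use a third-party library dedicated to image processing, such as OpenCV.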
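For reference, the per-pixel arithmetic behind such a conversion looks roughly like the sketch below. It assumes full-range BT.601 (JFIF) coefficients and a tightly packed I420 buffer; the color range a particular device actually produces may differ, and the names YuvToRgb, toArgb and i420ToArgb are invented for this example. It shows what the conversion does and is not meant as a fast implementation.

```java
final class YuvToRgb {

    static int clamp(int value) {
        return value < 0 ? 0 : (value > 255 ? 255 : value);
    }

    /** Converts one full-range BT.601 YUV sample (each component 0..255) to a packed ARGB int. */
    static int toArgb(int y, int u, int v) {
        int d = u - 128;
        int e = v - 128;
        int r = clamp((int) Math.round(y + 1.402 * e));
        int g = clamp((int) Math.round(y - 0.344136 * d - 0.714136 * e));
        int b = clamp((int) Math.round(y + 1.772 * d));
        return 0xFF000000 | (r << 16) | (g << 8) | b;
    }

    /** Converts a tightly packed I420 frame (even width and height) to ARGB pixels. */
    static int[] i420ToArgb(byte[] i420, int width, int height) {
        int[] argb = new int[width * height];
        int uOffset = width * height;            // U plane follows the Y plane
        int vOffset = uOffset + uOffset / 4;     // V plane follows the U plane
        for (int row = 0; row < height; row++) {
            for (int col = 0; col < width; col++) {
                // Each chroma sample is shared by a 2x2 block of pixels
                int chromaIndex = (row / 2) * (width / 2) + col / 2;
                int y = i420[row * width + col] & 0xFF;
                int u = i420[uOffset + chromaIndex] & 0xFF;
                int v = i420[vOffset + chromaIndex] & 0xFF;
                argb[row * width + col] = toArgb(y, u, v);
            }
        }
        return argb;
    }

    public static void main(String[] args) {
        // Neutral chroma (U = V = 128) yields a gray pixel whose R, G and B equal Y
        System.out.println(Integer.toHexString(toArgb(128, 128, 128)));
    }
}
```

Every pixel costs several floating-point multiplications here, which illustrates why a 640*480 preview stream is better handed to an optimized library.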

IV. Sample Code

The simple sample project below shows how to set up and receive preview frames and convert them into usable YUV data.

1. MainActivity

public class MainActivity extends AppCompatActivity {

    private CameraFragment mCameraFragment;

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_main);
    }

    // ... some code omitted; see the link at the end for the full source

    private void initCameraFragment() {
        if (mCameraFragment == null) {
            mCameraFragment = new CameraFragment();
            getSupportFragmentManager()
                    .beginTransaction()
                    .replace(R.id.fragment_container, mCameraFragment)
                    .commit();
        }
    }
}

First, prepare an Activity that requests the permissions and loads the page.

2. Camera2Proxy

public class Camera2Proxy {

    private static final String TAG = "Camera2Proxy";

    private Activity mActivity;

    // camera
    // ID of the camera to open. LENS_FACING_FRONT is only used for its value 0 here, so this opens
    // camera "0", which on many devices is the rear camera
    private int mCameraId = CameraCharacteristics.LENS_FACING_FRONT;
    private Size mPreviewSize = new Size(640, 480); // fixed at 640*480 for the demo
    private CameraDevice mCameraDevice; // camera object
    private CameraCaptureSession mCaptureSession;

    // handler
    private Handler mBackgroundHandler;
    private HandlerThread mBackgroundThread;

    // output
    private Surface mPreviewSurface; // preview shown on screen
    private ImageReader mImageReader; // receiver of the preview callback
    private ImageReader.OnImageAvailableListener mOnImageAvailableListener;

    /**
     * Callback for opening the camera
     */
    private CameraDevice.StateCallback mStateCallback = new CameraDevice.StateCallback() {
        @Override
        public void onOpened(@NonNull CameraDevice camera) {
            Log.d(TAG, "onOpened");
            mCameraDevice = camera;
            initPreviewRequest();
        }

        @Override
        public void onDisconnected(@NonNull CameraDevice camera) {
            Log.d(TAG, "onDisconnected");
            releaseCamera();
        }

        @Override
        public void onError(@NonNull CameraDevice camera, int error) {
            Log.e(TAG, "Camera Open failed, error: " + error);
            releaseCamera();
        }
    };

    @TargetApi(Build.VERSION_CODES.M)
    public Camera2Proxy(Activity activity) {
        mActivity = activity;
    }

    @SuppressLint("MissingPermission")
    public void openCamera() {
        Log.v(TAG, "openCamera");
        startBackgroundThread(); // paired with stopBackgroundThread() in releaseCamera()
        try {
            CameraManager cameraManager = (CameraManager) mActivity.getSystemService(Context.CAMERA_SERVICE);
            Log.d(TAG, "preview size: " + mPreviewSize.getWidth() + "*" + mPreviewSize.getHeight());
            mImageReader = ImageReader.newInstance(mPreviewSize.getWidth(), mPreviewSize.getHeight(),
                    ImageFormat.YUV_420_888, 2);
            // A null handler delivers the callback on the calling thread's Looper
            mImageReader.setOnImageAvailableListener(mOnImageAvailableListener, null);
            // Open the camera
            cameraManager.openCamera(Integer.toString(mCameraId), mStateCallback, mBackgroundHandler);
        } catch (CameraAccessException e) {
            e.printStackTrace();
        }
    }

    public void releaseCamera() {
        Log.v(TAG, "releaseCamera");
        if (mImageReader != null) {
            mImageReader.close();
            mImageReader = null;
        }
        if (mCaptureSession != null) {
            mCaptureSession.close();
            mCaptureSession = null;
        }
        if (mCameraDevice != null) {
            mCameraDevice.close();
            mCameraDevice = null;
        }
        stopBackgroundThread(); // paired with startBackgroundThread() in openCamera()
    }

    public void setImageAvailableListener(ImageReader.OnImageAvailableListener onImageAvailableListener) {
        mOnImageAvailableListener = onImageAvailableListener;
    }

    public void setPreviewSurface(SurfaceTexture surfaceTexture) {
        // mPreviewSize must already be initialized
        surfaceTexture.setDefaultBufferSize(mPreviewSize.getWidth(), mPreviewSize.getHeight());
        mPreviewSurface = new Surface(surfaceTexture);
    }

    private void initPreviewRequest() {
        try {
            final CaptureRequest.Builder builder = mCameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_PREVIEW);
            // Add the surface that outputs to the screen
            builder.addTarget(mPreviewSurface);
            // Add the ImageReader's surface; preview data can then be read from the ImageReader
            builder.addTarget(mImageReader.getSurface());
            mCameraDevice.createCaptureSession(Arrays.asList(mPreviewSurface, mImageReader.getSurface()),
                    new CameraCaptureSession.StateCallback() {
                        @Override
                        public void onConfigured(@NonNull CameraCaptureSession session) {
                            mCaptureSession = session;
                            // Enable continuous auto-focus and auto-exposure
                            builder.set(CaptureRequest.CONTROL_AF_MODE,
                                    CaptureRequest.CONTROL_AF_MODE_CONTINUOUS_PICTURE);
                            builder.set(CaptureRequest.CONTROL_AE_MODE,
                                    CaptureRequest.CONTROL_AE_MODE_ON_AUTO_FLASH);
                            CaptureRequest captureRequest = builder.build();
                            try {
                                // Keep sending the preview request repeatedly
                                mCaptureSession.setRepeatingRequest(captureRequest, null, mBackgroundHandler);
                            } catch (CameraAccessException e) {
                                e.printStackTrace();
                            }
                        }

                        @Override
                        public void onConfigureFailed(@NonNull CameraCaptureSession session) {
                            Log.e(TAG, "ConfigureFailed. session: mCaptureSession");
                        }
                    }, mBackgroundHandler); // passing null as the handler would use the current thread's Looper
        } catch (CameraAccessException e) {
            e.printStackTrace();
        }
    }

    public Size getPreviewSize() {
        return mPreviewSize;
    }

    public void switchCamera() {
        mCameraId ^= 1;
        Log.d(TAG, "switchCamera: mCameraId: " + mCameraId);
        releaseCamera();
        openCamera();
    }

    private void startBackgroundThread() {
        if (mBackgroundThread == null || mBackgroundHandler == null) {
            Log.v(TAG, "startBackgroundThread");
            mBackgroundThread = new HandlerThread("CameraBackground");
            mBackgroundThread.start();
            mBackgroundHandler = new Handler(mBackgroundThread.getLooper());
        }
    }

    private void stopBackgroundThread() {
        Log.v(TAG, "stopBackgroundThread");
        if (mBackgroundThread == null) {
            return; // already stopped, e.g. when releaseCamera() is called twice
        }
        mBackgroundThread.quitSafely();
        try {
            mBackgroundThread.join();
            mBackgroundThread = null;
            mBackgroundHandler = null;
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
    }
}

The operations involving mImageReader are the part to focus on.

3. Camera2View

public class Camera2View extends TextureView {

    private static final String TAG = "Camera2View";

    private Camera2Proxy mCameraProxy;
    private int mRatioWidth = 0;
    private int mRatioHeight = 0;

    public Camera2View(Context context) {
        this(context, null);
    }

    public Camera2View(Context context, AttributeSet attrs) {
        this(context, attrs, 0);
    }

    public Camera2View(Context context, AttributeSet attrs, int defStyleAttr) {
        this(context, attrs, defStyleAttr, 0);
    }

    public Camera2View(Context context, AttributeSet attrs, int defStyleAttr, int defStyleRes) {
        super(context, attrs, defStyleAttr, defStyleRes);
        init(context);
    }

    private void init(Context context) {
        mCameraProxy = new Camera2Proxy((Activity) context);
    }

    public void setAspectRatio(int width, int height) {
        if (width < 0 || height < 0) {
            throw new IllegalArgumentException("Size cannot be negative.");
        }
        mRatioWidth = width;
        mRatioHeight = height;
        requestLayout();
    }

    public Camera2Proxy getCameraProxy() {
        return mCameraProxy;
    }

    @Override
    protected void onMeasure(int widthMeasureSpec, int heightMeasureSpec) {
        super.onMeasure(widthMeasureSpec, heightMeasureSpec);
        int width = MeasureSpec.getSize(widthMeasureSpec);
        int height = MeasureSpec.getSize(heightMeasureSpec);
        if (0 == mRatioWidth || 0 == mRatioHeight) {
            setMeasuredDimension(width, height);
        } else {
            if (width < height * mRatioWidth / mRatioHeight) {
                setMeasuredDimension(width, width * mRatioHeight / mRatioWidth);
            } else {
                setMeasuredDimension(height * mRatioWidth / mRatioHeight, height);
            }
        }
    }
}

This is a view that adjusts its own size according to the preview size, so that the aspect ratio stays consistent.
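The sizing rule in onMeasure can be tried out in isolation. The sketch below repeats the same integer arithmetic as a plain function; AspectRatioFit and fit are hypothetical names, and the numbers in main are only an example of a portrait screen area with the 640*480 preview rotated to 480:640.

```java
import java.util.Arrays;

final class AspectRatioFit {

    /**
     * Returns {width, height}: the largest size with the ratio ratioWidth:ratioHeight
     * that fits into the available area. A zero ratio means "no constraint".
     */
    static int[] fit(int availableWidth, int availableHeight, int ratioWidth, int ratioHeight) {
        if (ratioWidth == 0 || ratioHeight == 0) {
            return new int[] {availableWidth, availableHeight};
        }
        if (availableWidth < availableHeight * ratioWidth / ratioHeight) {
            // Width is the limiting side: keep it and derive the height
            return new int[] {availableWidth, availableWidth * ratioHeight / ratioWidth};
        }
        // Height is the limiting side: keep it and derive the width
        return new int[] {availableHeight * ratioWidth / ratioHeight, availableHeight};
    }

    public static void main(String[] args) {
        // 1080x1920 available, ratio 480:640 (3:4)
        System.out.println(Arrays.toString(fit(1080, 1920, 480, 640)));
    }
}
```

With these inputs the width is the limiting side, so the view keeps the full 1080 pixels of width and takes 1440 pixels of height.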

4. CameraFragment

public class CameraFragment extends Fragment implements View.OnClickListener {

    private static final String TAG = "CameraFragment";

    private ImageView mCloseIv;
    private ImageView mSwitchCameraIv;
    private ImageView mTakePictureIv;
    private Camera2View mCameraView;
    private Camera2Proxy mCameraProxy;

    private byte[] mYuvBytes;
    private boolean mIsShutter;

    private final TextureView.SurfaceTextureListener mSurfaceTextureListener
            = new TextureView.SurfaceTextureListener() {
        @Override
        public void onSurfaceTextureAvailable(SurfaceTexture texture, int width, int height) {
            // Set the preview surface before opening the camera, because onOpened() uses it right away
            mCameraProxy.setPreviewSurface(texture);
            mCameraProxy.openCamera();
            // Size the view to match the camera preview so the image is not distorted
            Size previewSize = mCameraProxy.getPreviewSize();
            mCameraView.setAspectRatio(previewSize.getHeight(), previewSize.getWidth());
        }

        @Override
        public void onSurfaceTextureSizeChanged(SurfaceTexture texture, int width, int height) {
        }

        @Override
        public boolean onSurfaceTextureDestroyed(SurfaceTexture texture) {
            return true;
        }

        @Override
        public void onSurfaceTextureUpdated(SurfaceTexture texture) {
        }
    };

    private ImageReader.OnImageAvailableListener mOnImageAvailableListener
            = reader -> {
        Image image = reader.acquireLatestImage();
        if (image == null) {
            return;
        }
        processImage(image);
        image.close(); // always close the image, otherwise the reader runs out of buffers
    };

    @Nullable
    @Override
    public View onCreateView(@NonNull LayoutInflater inflater, @Nullable ViewGroup container,
            @Nullable Bundle savedInstanceState) {
        // Inflate against the container (without attaching) so the layout params are resolved
        View rootView = inflater.inflate(R.layout.fragment_camera, container, false);
        initView(rootView);
        return rootView;
    }

    private void initView(View rootView) {
        mCloseIv = rootView.findViewById(R.id.toolbar_close_iv);
        mSwitchCameraIv = rootView.findViewById(R.id.toolbar_switch_iv);
        mTakePictureIv = rootView.findViewById(R.id.take_picture_iv);
        mCameraView = rootView.findViewById(R.id.camera_view);
        mCameraProxy = mCameraView.getCameraProxy();

        mCloseIv.setOnClickListener(this);
        mSwitchCameraIv.setOnClickListener(this);
        mTakePictureIv.setOnClickListener(this);
        mCameraProxy.setImageAvailableListener(mOnImageAvailableListener);
    }

    @Override
    public void onResume() {
        super.onResume();
        if (mCameraView.isAvailable()) {
            mCameraProxy.openCamera();
        } else {
            mCameraView.setSurfaceTextureListener(mSurfaceTextureListener);
        }
    }

    @Override
    public void onPause() {
        super.onPause();
        mCameraProxy.releaseCamera();
    }

    @Override
    public void onClick(View v) {
        switch (v.getId()) {
            case R.id.toolbar_close_iv:
                getActivity().finish();
                break;
            case R.id.toolbar_switch_iv:
                mCameraProxy.switchCamera();
                break;
            case R.id.take_picture_iv:
                mIsShutter = true;
                break;
        }
    }

    private void processImage(Image image) {
        int width = mCameraProxy.getPreviewSize().getWidth();
        int height = mCameraProxy.getPreviewSize().getHeight();
        if (mYuvBytes == null) {
            // An 8-bit YUV420 frame always takes width * height * 3 / 2 bytes
            mYuvBytes = new byte[width * height * 3 / 2];
        }
        ColorConvertUtil.getI420FromImage(image, mYuvBytes);

        if (mIsShutter) {
            mIsShutter = false;
            // save yuv data
            String yuvPath = FileUtil.SAVE_DIR + System.currentTimeMillis() + ".yuv";
            FileUtil.saveBytes(mYuvBytes, yuvPath);
            // save bitmap data
            String jpgPath = yuvPath.replace(".yuv", ".jpg");
            Bitmap bitmap = ColorConvertUtil.yuv420pToBitmap(mYuvBytes, width, height);
            FileUtil.saveBitmap(bitmap, jpgPath);
            bitmap.recycle();
        }
    }
}

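The core of acquiring and processing the data lives in the onImageAvailable(ImageReader reader) callback of mOnImageAvailableListener. The images saved here can be opened directly with a tool such as 7yuv.

5. Implementation of ColorConvertUtil.getI420FromImage()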

public static void getI420FromImage(Image image, byte[] outBuffer) {
    // Only the size of the crop rect is used; a non-zero crop offset is not handled
    Rect crop = image.getCropRect();
    int width = crop.width();
    int height = crop.height();
    Log.d(TAG, "getI420FromImage crop width: " + crop.width() + ", height: " + crop.height());
    int yLength = width * height;
    if (outBuffer == null || outBuffer.length != yLength * 3 / 2) {
        Log.e(TAG, "outBuffer size error");
        return;
    }
    long time = System.currentTimeMillis();

    // YUV_420_888: plane 0 is Y, plane 1 is U, plane 2 is V
    Image.Plane[] planes = image.getPlanes();
    ByteBuffer yBuffer = planes[0].getBuffer();
    ByteBuffer uBuffer = planes[1].getBuffer();
    ByteBuffer vBuffer = planes[2].getBuffer();
    int strideY = planes[0].getRowStride();
    int strideUV = planes[1].getRowStride(); // the U and V planes share the same row stride
    int pixelStrideUV = planes[1].getPixelStride(); // 1 = tightly packed, 2 = interleaved chroma
    Log.d(TAG, "stride y: " + strideY + ", stride uv: " + strideUV + ", pixelStride uv: " + pixelStrideUV);

    // Y plane: copy in one go when rows are contiguous, otherwise row by row to skip the padding
    if (strideY == width) {
        yBuffer.get(outBuffer, 0, yLength);
    } else {
        for (int i = 0; i < height; i++) {
            yBuffer.position(i * strideY);
            yBuffer.get(outBuffer, i * width, width);
        }
    }

    // U plane, then V plane: width / 2 samples per row, height / 2 rows, honoring both strides
    int index = yLength;
    for (int i = 0; i < height / 2; i++) {
        int offset = i * strideUV;
        for (int j = 0; j < width / 2; j++) {
            outBuffer[index++] = uBuffer.get(offset + j * pixelStrideUV);
        }
    }
    for (int i = 0; i < height / 2; i++) {
        int offset = i * strideUV;
        for (int j = 0; j < width / 2; j++) {
            outBuffer[index++] = vBuffer.get(offset + j * pixelStrideUV);
        }
    }
    Log.d(TAG, "getI420FromImage time: " + (System.currentTimeMillis() - time));
}

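Processing this data in Java is not the most efficient option. A ByteBuffer is really just a block of native memory, so where possible it is better to let a native function handle the work.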
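If downstream code still expects the NV21 layout that the legacy Camera API delivered in its preview callback by default, a packed I420 buffer can be rearranged into NV21. The sketch below is one straightforward way to do that in plain Java, assuming even dimensions; I420ToNv21 and convert are names invented for this example and are not part of the demo project.

```java
import java.util.Arrays;

final class I420ToNv21 {

    /** Rearranges a tightly packed I420 frame (Y, U, V planes) into NV21 (Y plane, then V/U pairs). */
    static byte[] convert(byte[] i420, int width, int height) {
        int ySize = width * height;
        int chromaSize = ySize / 4;
        byte[] nv21 = new byte[ySize + 2 * chromaSize];

        // The Y plane is identical in both layouts
        System.arraycopy(i420, 0, nv21, 0, ySize);

        int uOffset = ySize;               // start of the U plane in I420
        int vOffset = ySize + chromaSize;  // start of the V plane in I420
        for (int i = 0; i < chromaSize; i++) {
            nv21[ySize + 2 * i] = i420[vOffset + i];      // V comes first in NV21
            nv21[ySize + 2 * i + 1] = i420[uOffset + i];  // followed by U
        }
        return nv21;
    }

    public static void main(String[] args) {
        // 2x2 image: four Y samples (1..4), one U sample (5), one V sample (6)
        byte[] i420 = {1, 2, 3, 4, 5, 6};
        System.out.println(Arrays.toString(convert(i420, 2, 2)));
    }
}
```

The Y plane is moved with a single bulk copy, and only the chroma samples need a per-byte loop.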

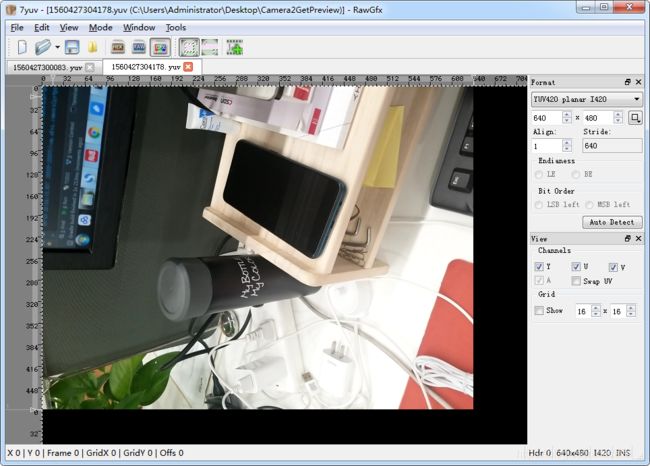

预览如下:

注意,选择的宽高要和预览宽高(640*480)保持一致,图片格式选择 YUV420 planner I420。

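To go one step further and convert the I420 data into a Bitmap, see:

"Android Camera2 预览数据格式 YUV_420_888 合集之 I420 转 Bitmap" (Android Camera2 preview data format YUV_420_888 series: converting I420 to Bitmap)

V. Project Repository

The project below is a complete demo that runs as is, covering both acquiring and saving camera preview data. The classes and layout files not shown in this post can be found at the following address: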

https://github.com/afei-cn/Camera2GetPreview