Installing Flink 1.13.2 on Ambari 2.7.5

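Contents

- 1. Set up the local Flink repository

- 2. Download the ambari-flink-service

- 3. Modify the configuration files

- 4. Create the user and group

- 5. Restart ambari-server

- 6. Install Flink through Ambari

- 7. Troubleshooting

  - Error 1

  - Error 2

  - Error 3

  - Error 4

  - Error 5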

1. Set up the local Flink repository

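wget https://downloads.apache.org/flink/flink-1.13.2/flink-1.13.2-bin-scala_2.11.tgz

(Older releases are removed from downloads.apache.org. If this URL returns 404, use https://archive.apache.org/dist/flink/flink-1.13.2/flink-1.13.2-bin-scala_2.11.tgz instead.)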

wget https://repo.maven.apache.org/maven2/org/apache/flink/flink-shaded-hadoop-2-uber/2.8.3-10.0/flink-shaded-hadoop-2-uber-2.8.3-10.0.jar

mkdir -p /var/www/html/flink

mv flink-1.13.2-bin-scala_2.11.tgz /var/www/html/flink/

mv flink-shaded-hadoop-2-uber-2.8.3-10.0.jar /var/www/html/flink/
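Later steps point Ambari at these files over HTTP, so it can help to confirm that they are actually served. The sketch below makes two assumptions:

- node001 runs an HTTP server (for example httpd) with /var/www/html as its document root.
- The helper names repo_urls and is_reachable are hypothetical and not part of Ambari.

```python
import urllib.request

# Assumption: httpd on the repo host serves /var/www/html, so
# /var/www/html/flink/<file> is reachable at http://<host>/flink/<file>.
FILES = [
    "flink-1.13.2-bin-scala_2.11.tgz",
    "flink-shaded-hadoop-2-uber-2.8.3-10.0.jar",
]


def repo_urls(host, files=FILES, prefix="flink"):
    """Build the download URLs that flink-ambari-config.xml will point to."""
    return ["http://{}/{}/{}".format(host, prefix, name) for name in files]


def is_reachable(url, timeout=5):
    """Return True if an HTTP HEAD request for url answers with a 2xx status."""
    req = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except OSError:
        # URLError/HTTPError and socket errors are all OSError subclasses.
        return False
```

For example, `for url in repo_urls("node001"): print(url, is_reachable(url))` should print True for both files once the repository is set up.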

2. Download the ambari-flink-service

Check the HDP version:

[root@node001 ~]# VERSION=`hdp-select status hadoop-client | sed 's/hadoop-client - \([0-9]\.[0-9]\).*/\1/'`

[root@node001 ~]# echo $VERSION

3.1
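The sed expression keeps only the major.minor part of the hadoop-client version, and that value selects the stack directory the service is cloned into. Below is a sketch of the same parsing in Python. The helper names are hypothetical. Unlike the single-digit sed pattern, `\d+` also accepts multi-digit parts.

```python
import re

# Mirrors the sed expression: "hadoop-client - 3.1.5.0-152" -> "3.1".
_VERSION_RE = re.compile(r"^hadoop-client - (\d+\.\d+)")


def hdp_stack_version(hdp_select_output):
    """Extract the major.minor stack version from hdp-select output."""
    match = _VERSION_RE.match(hdp_select_output.strip())
    if match is None:
        raise ValueError("unexpected hdp-select output: %r" % hdp_select_output)
    return match.group(1)


def flink_service_dir(stack_version):
    """Directory that the git clone of ambari-flink-service targets."""
    return "/var/lib/ambari-server/resources/stacks/HDP/%s/services/FLINK" % stack_version
```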

Clone ambari-flink-service into the ambari-server resources directory:

[root@node001 ~]# git clone https://github.com/abajwa-hw/ambari-flink-service.git /var/lib/ambari-server/resources/stacks/HDP/$VERSION/services/FLINK

Cloning into '/var/lib/ambari-server/resources/stacks/HDP/3.1/services/FLINK'...

remote: Enumerating objects: 198, done.

remote: Counting objects: 100% (6/6), done.

remote: Compressing objects: 100% (5/5), done.

remote: Total 198 (delta 0), reused 3 (delta 0), pack-reused 192

Receiving objects: 100% (198/198), 2.09 MiB | 575.00 KiB/s, done.

Resolving deltas: 100% (89/89), done.

List the files:

[root@node001 ~]# cd /var/lib/ambari-server/resources/stacks/HDP/3.1/services/FLINK/

[root@node001 FLINK]# ll

total 20

drwxr-xr-x 2 root root 58 Aug 30 20:48 configuration

-rw-r--r-- 1 root root 223 Aug 30 20:48 kerberos.json

-rw-r--r-- 1 root root 1777 Aug 30 20:48 metainfo.xml

drwxr-xr-x 3 root root 21 Aug 30 20:48 package

-rwxr-xr-x 1 root root 8114 Aug 30 20:48 README.md

-rw-r--r-- 1 root root 125 Aug 30 20:48 role_command_order.json

drwxr-xr-x 2 root root 236 Aug 30 20:48 screenshots

3. Modify the configuration files

1. Edit metainfo.xml and set the version to 1.13.2:

cd /var/lib/ambari-server/resources/stacks/HDP/3.1/services/FLINK/

vim metainfo.xml

<displayName>Flink</displayName>

<comment>Apache Flink is a streaming dataflow...</comment>

<version>1.13.2</version>
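The same version change can be scripted instead of made in vim. Below is a minimal sketch using the standard-library ElementTree. It assumes the service is declared as `<name>FLINK</name>` under `<services><service>` in metainfo.xml. The function name is hypothetical.

```python
import xml.etree.ElementTree as ET


def set_service_version(metainfo_xml, version, service_name="FLINK"):
    """Return metainfo_xml with the <version> of service_name set to version.

    ElementTree drops XML comments and the XML declaration when it
    re-serializes, so keep a backup of the original file.
    """
    root = ET.fromstring(metainfo_xml)
    for service in root.iter("service"):
        if service.findtext("name") == service_name:
            version_el = service.find("version")
            if version_el is None:
                version_el = ET.SubElement(service, "version")
            version_el.text = version
            return ET.tostring(root, encoding="unicode")
    raise ValueError("service %s not found" % service_name)
```

Usage: read metainfo.xml into a string, pass it through `set_service_version(text, "1.13.2")` and write the result back.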

2. Configure JAVA_HOME

cd /var/lib/ambari-server/resources/stacks/HDP/3.1/services/FLINK/configuration

vim flink-env.xml

env.java.home: /usr/local/java/ # change this to your own Java path

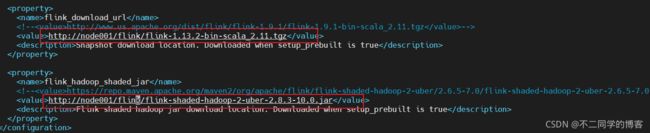

3. Edit flink-ambari-config.xml and point the download URLs to the HTTP location created in step 1:

cd /var/lib/ambari-server/resources/stacks/HDP/3.1/services/FLINK/configuration

vim flink-ambari-config.xml

<property>

<name>flink_download_url</name>

<value>http://node001/flink/flink-1.13.2-bin-scala_2.11.tgz</value>

<description>Snapshot download location. Downloaded when setup_prebuilt is true</description>

</property>

<property>

<name>flink_hadoop_shaded_jar</name>

<value>http://node001/flink/flink-shaded-hadoop-2-uber-2.8.3-10.0.jar</value>

<description>Flink shaded hadoop jar download location. Downloaded when setup_prebuilt is true</description>

</property>
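Both URLs can also be set with a script, which avoids typos in long XML values. This sketch assumes flink-ambari-config.xml is a `<configuration>` root containing `<property><name>…</name><value>…</value></property>` entries, as in the snippet above. The helper name is hypothetical. It raises KeyError when a property is missing, so a misspelled name does not pass silently.

```python
import xml.etree.ElementTree as ET

# Values from this step; node001 is this cluster's repository host.
FLINK_URLS = {
    "flink_download_url": "http://node001/flink/flink-1.13.2-bin-scala_2.11.tgz",
    "flink_hadoop_shaded_jar": "http://node001/flink/flink-shaded-hadoop-2-uber-2.8.3-10.0.jar",
}


def set_config_properties(config_xml, updates):
    """Set <value> for every <property> whose <name> is a key of updates."""
    root = ET.fromstring(config_xml)
    remaining = dict(updates)
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name in remaining:
            value_el = prop.find("value")
            if value_el is None:
                value_el = ET.SubElement(prop, "value")
            value_el.text = remaining.pop(name)
    if remaining:
        raise KeyError("properties not found: %s" % ", ".join(sorted(remaining)))
    return ET.tostring(root, encoding="unicode")
```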

4. Create the user and group

# Add the group

[root@node001 ~]# groupadd flink

# Add the user

[root@node001 ~]# useradd -d /home/flink -g flink flink
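To confirm on the Flink host that the user and group exist, and that flink belongs to the flink group, a small check like the one below can be run. It assumes Python 3 on a Unix host, because the pwd and grp modules are Unix-only. The helper name is hypothetical.

```python
import grp
import pwd


def check_user_and_group(user, group):
    """Return (user_exists, group_exists, user_in_group) for this host."""
    try:
        pw = pwd.getpwnam(user)
    except KeyError:
        pw = None
    try:
        gr = grp.getgrnam(group)
    except KeyError:
        gr = None
    # Membership counts both the primary group and supplementary membership.
    in_group = (
        pw is not None
        and gr is not None
        and (pw.pw_gid == gr.gr_gid or user in gr.gr_mem)
    )
    return pw is not None, gr is not None, in_group
```

After the commands above, `check_user_and_group("flink", "flink")` is expected to return `(True, True, True)`.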

5. Restart ambari-server

[root@node001 ~]# ambari-server restart

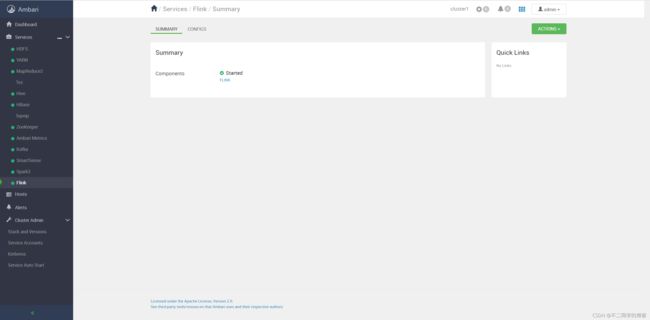

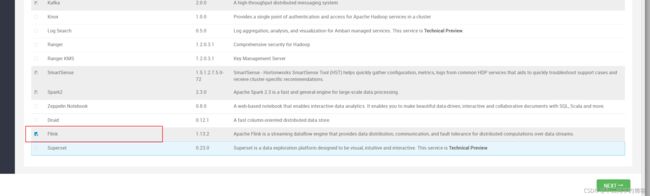

6. Install Flink through Ambari

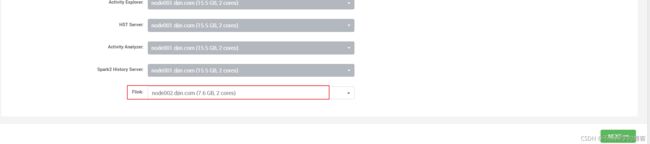

2. Select the host on which Flink will be installed.

3. Configure the failover proxy provider for Flink on YARN:

<property>

<name>yarn.client.failover-proxy-provider</name>

<value>org.apache.hadoop.yarn.client.ConfiguredRMFailoverProxyProvider</value>

</property>

7. Troubleshooting

Error 1

500 status code received on POST method for API: /api/v1/stacks/HDP/versions/3.1/recommendations

Error message: Error occured during stack advisor command invocation: Cannot create /var/run/ambari-server/stack-recommendations

Solution (this assumes ambari-server runs as the non-root user ambari):

sudo chown -R ambari /var/run/ambari-server

Error 2

org.apache.ambari.server.api.services.stackadvisor.StackAdvisorException: Error occured during stack advisor command invocation: Cannot create /var/run/ambari-server/stack-recommendations

Solution:

cd /var/run/ambari-server

mkdir stack-recommendations

chown -R ambari:ambari /var/run/ambari-server/stack-recommendations/

Error 3

stderr:

Traceback (most recent call last):

File "/var/lib/ambari-agent/cache/stack-hooks/before-ANY/scripts/hook.py", line 38, in <module>

BeforeAnyHook().execute()

File "/usr/lib/ambari-agent/lib/resource_management/libraries/script/script.py", line 352, in execute

method(env)

File "/var/lib/ambari-agent/cache/stack-hooks/before-ANY/scripts/hook.py", line 31, in hook

setup_users()

File "/var/lib/ambari-agent/cache/stack-hooks/before-ANY/scripts/shared_initialization.py", line 50, in setup_users

groups = params.user_to_groups_dict[user],

KeyError: u'flink'

Error: Error: Unable to run the custom hook script ['/usr/bin/python', '/var/lib/ambari-agent/cache/stack-hooks/before-ANY/scripts/hook.py', 'ANY', '/var/lib/ambari-agent/data/command-710.json', '/var/lib/ambari-agent/cache/stack-hooks/before-ANY', '/var/lib/ambari-agent/data/structured-out-710.json', 'INFO', '/var/lib/ambari-agent/tmp', 'PROTOCOL_TLSv1_2', '']

stdout:

2021-08-30 23:22:11,769 - Stack Feature Version Info: Cluster Stack=3.1, Command Stack=None, Command Version=None -> 3.1

2021-08-30 23:22:11,773 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2021-08-30 23:22:11,773 - Group['flink'] {}

2021-08-30 23:22:11,774 - Group['livy'] {}

2021-08-30 23:22:11,774 - Group['spark'] {}

2021-08-30 23:22:11,774 - Group['hdfs'] {}

2021-08-30 23:22:11,774 - Group['hadoop'] {}

2021-08-30 23:22:11,775 - Group['users'] {}

2021-08-30 23:22:11,775 - User['yarn-ats'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2021-08-30 23:22:11,775 - User['hive'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2021-08-30 23:22:11,776 - User['zookeeper'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2021-08-30 23:22:11,776 - User['ams'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2021-08-30 23:22:11,777 - User['tez'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop', 'users'], 'uid': None}

Error: Error: Unable to run the custom hook script ['/usr/bin/python', '/var/lib/ambari-agent/cache/stack-hooks/before-ANY/scripts/hook.py', 'ANY', '/var/lib/ambari-agent/data/command-710.json', '/var/lib/ambari-agent/cache/stack-hooks/before-ANY', '/var/lib/ambari-agent/data/structured-out-710.json', 'INFO', '/var/lib/ambari-agent/tmp', 'PROTOCOL_TLSv1_2', '']

2021-08-30 23:22:11,788 - The repository with version 3.1.5.0-152 for this command has been marked as resolved. It will be used to report the version of the component which was installed

2021-08-30 23:22:11,791 - Skipping stack-select on FLINK because it does not exist in the stack-select package structure.

Command failed after 1 tries

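Solution:

The before-ANY hook raises KeyError because Ambari has no user-to-groups entry for flink. The flink user and group were already created manually in step 4, so set ignore_groupsusers_create in cluster-env to true. Ambari then skips creating users and groups.

Method 1: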

cd /var/lib/ambari-server/resources/scripts

#python configs.py -u admin -p admin -n $cluster_name -l $ambari_server -t 8080 -a set -c cluster-env -k ignore_groupsusers_create -v true

python configs.py -u admin -p admin -n cluster1 -l node001 -t 8080 -a set -c cluster-env -k ignore_groupsusers_create -v true

Method 2:

Run the following commands, replacing the IP address and cluster name with your own:

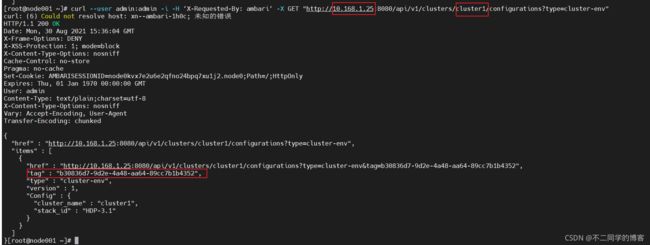

curl --user admin:admin -i -H 'X-Requested-By: ambari' -X GET "http://10.168.1.25:8080/api/v1/clusters/cluster1/configurations?type=cluster-env"

Find the tag in the returned items, then send the request again with the tag parameter added:

curl --user admin:admin -i -H 'X-Requested-By: ambari' -X GET "http://10.168.1.25:8080/api/v1/clusters/cluster1/configurations?type=cluster-env&tag=b30836d7-9d2e-4a48-aa64-89cc7b1b4352"
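With -i, curl prints the HTTP headers before the JSON body, so drop -i when feeding the output to a script. The sketch below picks the tag of the newest item from the first response. It assumes each entry in items carries a tag and a numeric version field. The helper name is hypothetical.

```python
import json


def latest_config_tag(response_text):
    """Return the tag of the highest-version item in a
    GET .../configurations?type=cluster-env response body."""
    items = json.loads(response_text).get("items", [])
    if not items:
        raise ValueError("no configuration versions returned")
    newest = max(items, key=lambda item: int(item.get("version", 0)))
    return newest["tag"]
```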

The response contains all the properties:

"properties" : {

"agent_mounts_ignore_list" : "",

"alerts_repeat_tolerance" : "1",

"enable_external_ranger" : "false",

"fetch_nonlocal_groups" : "true",

"hide_yarn_memory_widget" : "false",

"ignore_bad_mounts" : "false",

"ignore_groupsusers_create" : "false",

"kerberos_domain" : "EXAMPLE.COM",

"manage_dirs_on_root" : "true",

.......

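To change a value, save the configuration above to a file such as /tmp/configurations.tmp and edit it. The PUT body must wrap the properties in a Clusters/desired_config object that also gives the config type (cluster-env) and a new, unique tag. Then call the API: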

curl --user admin:admin -i -H 'X-Requested-By: ambari' -X PUT -d @/tmp/configurations.tmp http://10.168.1.25:8080/api/v1/clusters/cluster1/
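The file passed with -d can be generated from the tagged GET response. The sketch below rests on several assumptions:

- The response has the shape {"items": [{"properties": {...}}]}.
- Ambari accepts a body of the form {"Clusters": {"desired_config": {type, tag, properties}}} with a tag that has not been used before.
- The function name and the timestamp-based tag are hypothetical choices.

```python
import json
import time


def build_desired_config(get_response_text, updates, config_type="cluster-env"):
    """Build a PUT body for /api/v1/clusters/<cluster> from a GET ...&tag=<tag> body."""
    item = json.loads(get_response_text)["items"][0]
    properties = dict(item.get("properties", {}))
    properties.update(updates)  # e.g. {"ignore_groupsusers_create": "true"}
    desired = {
        "type": config_type,
        # A millisecond timestamp is one simple way to get a fresh tag.
        "tag": "version%d" % int(time.time() * 1000),
        "properties": properties,
    }
    if "properties_attributes" in item:
        desired["properties_attributes"] = item["properties_attributes"]
    return json.dumps({"Clusters": {"desired_config": desired}}, indent=2)
```

Write the returned string to /tmp/configurations.tmp and run the PUT command above unchanged.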

If the request fails with the following error:

{

"status" : 400,

"message" : "CSRF protection is turned on. X-Requested-By HTTP header is required."

}

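This error means the server did not receive an X-Requested-By header. Typographic quotes (‘ ’) around the -H argument cause exactly this, because the shell does not treat them as quoting characters. With straight quotes the header is sent and CSRF protection can stay enabled. As a workaround, CSRF protection can be disabled as described in this article: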

https://community.cloudera.com/t5/Community-Articles/How-to-resolve-CSRF-protection-error-while-adding-service/ta-p/246264

1. Log in to the Ambari server as root:

vi /etc/ambari-server/conf/ambari.properties

2. Add the following line at the end of the file:

api.csrfPrevention.enabled=false

3. Restart the Ambari server:

ambari-server restart

Run the request again:

curl --user admin:admin -i -H 'X-Requested-By: ambari' -X PUT -d @/tmp/configurations.tmp http://10.168.1.25:8080/api/v1/clusters/cluster1/

Error 4

stderr: /var/lib/ambari-agent/data/errors-1105.txt

Traceback (most recent call last):

File "/var/lib/ambari-agent/cache/stacks/HDP/3.1/services/FLINK/package/scripts/flink.py", line 172, in <module>

Master().execute()

File "/usr/lib/ambari-agent/lib/resource_management/libraries/script/script.py", line 352, in execute

method(env)

File "/var/lib/ambari-agent/cache/stacks/HDP/3.1/services/FLINK/package/scripts/flink.py", line 108, in start

self.configure(env)

File "/var/lib/ambari-agent/cache/stacks/HDP/3.1/services/FLINK/package/scripts/flink.py", line 91, in configure

File(format("{conf_dir}/flink-conf.yaml"), content=properties_content, owner=params.flink_user)

File "/usr/lib/ambari-agent/lib/resource_management/core/base.py", line 166, in __init__

self.env.run()

File "/usr/lib/ambari-agent/lib/resource_management/core/environment.py", line 160, in run

self.run_action(resource, action)

File "/usr/lib/ambari-agent/lib/resource_management/core/environment.py", line 124, in run_action

provider_action()

File "/usr/lib/ambari-agent/lib/resource_management/core/providers/system.py", line 120, in action_create

raise Fail("Applying %s failed, parent directory %s doesn't exist" % (self.resource, dirname))

resource_management.core.exceptions.Fail: Applying File['/opt/flink/conf/flink-conf.yaml'] failed, parent directory /opt/flink/conf doesn't exist

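For an unknown reason the archive was not extracted into /opt/flink, so extract it there manually:

mkdir -p /opt/flink

tar -zxvf flink-1.13.2-bin-scala_2.11.tgz -C /opt/flink

cd /opt/flink

mv flink-1.13.2/* /opt/flink

rmdir flink-1.13.2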
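The extract-then-move steps can also be done in one pass, similar to GNU tar's --strip-components=1. Below is a Python sketch that drops the leading flink-1.13.2/ directory while extracting into /opt/flink. The function name is hypothetical. Link targets inside the archive are not rewritten.

```python
import os
import tarfile


def extract_strip_top(archive_path, dest_dir):
    """Extract archive_path into dest_dir without its top-level directory,
    e.g. flink-1.13.2/conf/... becomes <dest_dir>/conf/..."""
    os.makedirs(dest_dir, exist_ok=True)
    dest_root = os.path.realpath(dest_dir)
    with tarfile.open(archive_path, "r:*") as tar:
        for member in tar.getmembers():
            parts = member.name.split("/", 1)
            if len(parts) < 2 or not parts[1]:
                continue  # the top-level directory entry itself
            member.name = parts[1]
            # Refuse entries that would land outside dest_dir.
            target = os.path.realpath(os.path.join(dest_root, member.name))
            if not target.startswith(dest_root + os.sep):
                raise ValueError("unsafe path in archive: %s" % member.name)
            if hasattr(tarfile, "data_filter"):
                # Python versions that support extraction filters.
                tar.extract(member, dest_root, filter="data")
            else:
                tar.extract(member, dest_root)
```

Usage: `extract_strip_top("flink-1.13.2-bin-scala_2.11.tgz", "/opt/flink")`, which should leave /opt/flink/conf in place for the Ambari agent.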

Error 5

stderr: /var/lib/ambari-agent/data/errors-1108.txt

Traceback (most recent call last):

File "/var/lib/ambari-agent/cache/stacks/HDP/3.1/services/FLINK/package/scripts/flink.py", line 172, in <module>

Master().execute()

File "/usr/lib/ambari-agent/lib/resource_management/libraries/script/script.py", line 352, in execute

method(env)

File "/usr/lib/ambari-agent/lib/resource_management/libraries/script/script.py", line 980, in restart

self.stop(env)

File "/var/lib/ambari-agent/cache/stacks/HDP/3.1/services/FLINK/package/scripts/flink.py", line 98, in stop

pid = str(sudo.read_file(status_params.flink_pid_file))

File "/usr/lib/ambari-agent/lib/resource_management/core/sudo.py", line 151, in read_file

with open(filename, "rb") as fp:

IOError: [Errno 2] No such file or directory: u'/var/run/flink/flink.pid'

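The restart fails in its stop step because /var/run/flink/flink.pid does not exist. The YARN session was then started manually from /opt/flink/bin:

[root@node001 bin]# pwd

/opt/flink/bin

[root@node001 bin]# ./yarn-session.sh -n 1 -s 1 -jm 768 -tm 1024 -qu default -nm flinkapp-from-ambari -d >> /var/log/flink/flink-setup.log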
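To check that the manually started session is running on YARN, filter the output of `yarn application -list` by the name passed with -nm. This sketch assumes that command's tab-separated layout, with Application-Id in the first column and Application-Name in the second. The helper name and the sample IDs in the test are hypothetical.

```python
def find_yarn_app_ids(list_output, app_name="flinkapp-from-ambari"):
    """Return the Application-Ids whose Application-Name equals app_name."""
    ids = []
    for line in list_output.splitlines():
        columns = [col.strip() for col in line.split("\t")]
        # Data rows start with an ID like application_<timestamp>_<seq>;
        # the summary line and the header row are skipped.
        if len(columns) >= 2 and columns[0].startswith("application_") and columns[1] == app_name:
            ids.append(columns[0])
    return ids
```

The input can come from `subprocess.run(["yarn", "application", "-list"], capture_output=True, text=True).stdout`. An empty result suggests the session did not start.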
