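[Notes on my own pitfalls] Writing the code for a whole training pipeline by myself for the first time

Project context:

I thought this was just a plain, simple 3D classification network. It turned out to have plenty of bugs; until now I had only worked on 2D segmentation.

Problem description

I started by running the code from a paper my senior labmate found, "Uniformizing Techniques to Process CT scans with 3D CNNs for Tuberculosis Prediction". It is written in TensorFlow, while I have mostly used PyTorch.

It comes as a Jupyter notebook, though, so I copied and pasted it into a .py file and uploaded that to the server to run. The data-loading part at the start runs on the CPU, so it was very slow at first.

Later I found that I had saved my data as .nii incorrectly; see my previous post for details.

Then, while looking up how to compute HU values (for CT images), I wanted to improve the code and move to PyTorch. I found "Resource Efficient 3D Convolutional Neural Networks", but it has a lot of code, my coding skills are not strong, and I did not understand many of its parameters. I wanted something simpler: just get a model to run.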

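The hard journey:

Data loading

See the previous post.

- A note on stacking arrays: put the arrays you read into a list first, then stack them with np.stack() to get 3D data.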

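    import numpy as np

    # Create an empty list to hold the arrays
    array_list = []

    # Generate arrays in a loop and append them to the list
    for i in range(5):
        # Generate a random array (random numbers stand in for real slices)
        random_array = np.random.rand(3, 3)
        array_list.append(random_array)

    # Stack the arrays in the list along axis 0
    stacked_array = np.stack(array_list, axis=0)

    # stacked_array is now a 3D array holding five 3x3 random arrays
    print(stacked_array)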

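- Different cases have different numbers of CT slices, but every input fed to a 3D network must have the same dimensions.

First I tried resize, which did not work: the total number of pixels is not allowed to change.

Then I used interpolation, with ndimage.zoom():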

    from scipy import ndimage

    scale_factor = 0.25  # shrink to 1/4 of the original size
    resized_hu = ndimage.zoom(hu_values, scale_factor, order=1)

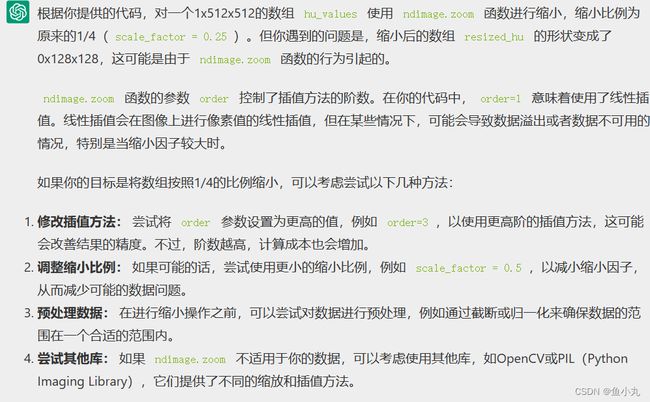

Data loading kept failing. Debugging showed that this function had turned my original 1×512×512 array into a 0×512×512 one?!
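One likely explanation for the collapsed first axis, as a minimal sketch: as far as I understand scipy.ndimage.zoom, a scalar zoom factor is applied to every axis, and each output size is the rounded product of the input size and the factor. An axis of length 1 scaled by 0.25 then rounds to 0. The helper `zoomed_shape` below is hypothetical and only reproduces that rounding arithmetic; it does not call SciPy.

```python
def zoomed_shape(shape, zoom):
    """Predict a zoom's output shape, assuming size = round(size * factor).

    Hypothetical helper for illustration; it only does the arithmetic.
    """
    # A scalar factor is applied to every axis, including the slice axis.
    if isinstance(zoom, (int, float)):
        zoom = (zoom,) * len(shape)
    return tuple(int(round(size * factor)) for size, factor in zip(shape, zoom))


# A single 512 x 512 slice with one scalar factor: the first axis rounds to 0.
print(zoomed_shape((1, 512, 512), 0.25))

# Per-axis factors keep the first axis and shrink only the in-plane axes.
print(zoomed_shape((1, 512, 512), (1, 0.25, 0.25)))
```

Under that assumption, passing one factor per axis (with 1 for any axis that should stay untouched) avoids the empty result.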

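I asked ChatGPT 3.5, and it said:

I tried raising the interpolation order, which did not help. I was lost for words. I searched for other approaches, and then remembered that the TensorFlow code from earlier had a function for resizing 3D data. I adapted it, and it ran.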

    from scipy import ndimage

    def resize_volume(img):
        """Resize a (channel, depth, width, height) volume to 64 x 128 x 128."""
        # Target size of each spatial axis
        desired_depth = 64
        desired_width = 128
        desired_height = 128
        # Current size; axis 0 is the channel axis and is left unchanged
        current_depth = img.shape[1]
        current_width = img.shape[2]
        current_height = img.shape[3]
        # Per-axis zoom factor = desired size / current size
        depth_factor = desired_depth / current_depth
        width_factor = desired_width / current_width
        height_factor = desired_height / current_height
        # Linear interpolation (order=1); the factor 1 keeps the channel axis as is
        img = ndimage.zoom(img, (1, depth_factor, width_factor, height_factor), order=1)
        return img
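As a usage sketch of the same idea, the snippet below resizes a random volume with one factor per axis and prints the shapes before and after. The function name `resize_to` and the input shape (1, 37, 96, 96) are made up for illustration; the only library calls are np.random.rand and ndimage.zoom.

```python
import numpy as np
from scipy import ndimage


def resize_to(volume, target_shape):
    """Resize `volume` so its shape becomes `target_shape` (hypothetical helper)."""
    # One zoom factor per axis: desired size / current size.
    factors = [t / s for t, s in zip(target_shape, volume.shape)]
    # order=1 is linear interpolation, as in resize_volume above.
    return ndimage.zoom(volume, factors, order=1)


# A fake CT volume: 1 channel, 37 slices of 96 x 96 pixels.
volume = np.random.rand(1, 37, 96, 96).astype(np.float32)
resized = resize_to(volume, (1, 64, 128, 128))
print(volume.shape, "->", resized.shape)
```

Because the channel axis gets a factor of exactly 1, it stays at length 1 however many slices the case has.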

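- Dataloader: once I had fixed all the bugs, it ran, and it ran suspiciously fast, so I wondered whether something was wrong, mainly because the accuracy was sometimes 1 and sometimes 0. It looked as if only a single sample was being read. That is when I realized the __len__ function actually matters: it determines how many samples get read.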

    def __len__(self):
        return len(self.data_list)
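To see the effect of __len__ in isolation, here is a small sketch with a toy dataset. The class name `VolumeDataset`, the `fixed_len` argument, and the fake data are all assumptions made for this example; `fixed_len` exists only to imitate a wrong __len__.

```python
import torch
from torch.utils.data import DataLoader, Dataset


class VolumeDataset(Dataset):
    """Toy dataset; `data_list` holds (volume, label) pairs."""

    def __init__(self, data_list, fixed_len=None):
        self.data_list = data_list
        # `fixed_len` is only here to reproduce a wrong __len__.
        self.fixed_len = fixed_len

    def __len__(self):
        # The default sampler draws indices from range(len(dataset)).
        if self.fixed_len is not None:
            return self.fixed_len
        return len(self.data_list)

    def __getitem__(self, index):
        return self.data_list[index]


# Six fake samples: a tiny 1 x 4 x 8 x 8 volume and an integer label each.
data_list = [(torch.zeros(1, 4, 8, 8), i % 2) for i in range(6)]


def count_samples(dataset):
    """Count how many samples one pass over a DataLoader yields."""
    loader = DataLoader(dataset, batch_size=2, shuffle=False)
    return sum(volumes.shape[0] for volumes, labels in loader)


print(count_samples(VolumeDataset(data_list)))               # all six samples
print(count_samples(VolumeDataset(data_list, fixed_len=1)))  # only one sample
```

With a single sample per epoch, each prediction is either entirely right or entirely wrong, which would match an accuracy that jumps between 1 and 0.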

Model training

RuntimeError: Input type (torch.cuda.DoubleTensor) and weight type (torch.cuda.FloatTensor) should be the same

- I found that I was converting the data to float when reading it, and in NumPy that defaults to float64, so I changed it to .astype(np.float32).

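- The output and label sizes did not match. Printing their .shape showed torch.Size([2, 1]) for one and torch.Size([2]) for the other, so I looked up how to turn torch.Size([2, 1]) into torch.Size([2]). People online suggested squeeze() or view(), but I still got errors after the change. Then I remembered a library I had used for segmentation, one I had modified beyond recognition, which included label processing, and I figured the idea should be much the same. Passing SR_flat (the processed output) and GT_flat (the processed labels) to the loss and to the evaluation metrics did the trick.

    SR_flat = SR_probs.view(SR_probs.size(0), -1)
    GT_flat = GT.view(GT.size(0), -1)

RuntimeError: Expected floating point type for target with class probabilities, got Long

GT is an integer type, so convert it with float():

    GT = GT.float()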
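The DoubleTensor versus FloatTensor error earlier in this section can be reproduced on a small scale. This is a sketch with made-up data: the variable name `hu_values` follows the post, but its shape and the tiny Conv3d layer are placeholders.

```python
import numpy as np
import torch

# NumPy creates float64 arrays by default, and astype(float) also means float64.
hu_values = np.random.rand(1, 4, 8, 8)
print(hu_values.dtype)                    # float64

# torch.from_numpy keeps the NumPy dtype, so this becomes a DoubleTensor.
double_tensor = torch.from_numpy(hu_values)
print(double_tensor.dtype)                # torch.float64

# Casting on the NumPy side gives a float32 tensor that matches default weights.
float_tensor = torch.from_numpy(hu_values.astype(np.float32))
print(float_tensor.dtype)                 # torch.float32

# Calling .float() on an existing tensor gives float32 as well.
print(double_tensor.float().dtype)        # torch.float32

# A layer with default (float32) weights accepts the float32 input.
conv = torch.nn.Conv3d(1, 2, kernel_size=3, padding=1)
out = conv(float_tensor.unsqueeze(0))     # add a batch axis: (1, 1, 4, 8, 8)
print(out.shape)
```

Either cast works; doing it once while loading the data means the training loop never sees float64 tensors at all.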

      File "/Self_classification/solver.py", line 159, in train
        loss = self.criterion(SR, GT)
      File "/site-packages/torch/nn/modules/module.py", line 1102, in _call_impl
        return forward_call(*input, **kwargs)
      File "/site-packages/torch/nn/modules/loss.py", line 1152, in forward
        label_smoothing=self.label_smoothing)
      File "/site-packages/torch/nn/functional.py", line 2846, in cross_entropy
        return torch._C._nn.cross_entropy_loss(input, target, weight, _Reduction.get_enum(reduction), ignore_index, label_smoothing)
    IndexError: Dimension out of range (expected to be in range of [-1, 0], but got 1)

- Since mine is a binary classification task, I switched to BCELoss. I had probably passed the wrong arguments to this cross_entropy function.
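The shape and dtype errors above largely come down to what each loss expects as input. Here is a minimal sketch with made-up numbers, assuming that SR_probs is the sigmoid of a single-logit output (the post does not show that step): BCELoss takes probabilities and float targets of the same shape, while CrossEntropyLoss with class-index targets takes logits of shape (N, C) and long targets of shape (N,).

```python
import torch
import torch.nn as nn

# Fake batch of two samples; names follow the post (SR = output, GT = label).
SR_logits = torch.tensor([[0.8], [-1.2]])   # raw model output, shape (2, 1)
GT = torch.tensor([1, 0])                   # integer labels, shape (2,)

# BCELoss: probabilities in [0, 1] and float targets with the same shape.
SR_probs = torch.sigmoid(SR_logits)
SR_flat = SR_probs.view(SR_probs.size(0), -1)    # shape (2, 1)
GT_flat = GT.view(GT.size(0), -1).float()        # shape (2, 1), float32
bce = nn.BCELoss()(SR_flat, GT_flat)
print("BCELoss:", bce.item())

# CrossEntropyLoss with class indices: logits of shape (N, C) and
# long targets of shape (N,). These two-class logits are invented.
two_class_logits = torch.tensor([[0.1, 0.9], [1.5, -0.3]])
ce = nn.CrossEntropyLoss()(two_class_logits, GT.long())
print("CrossEntropyLoss:", ce.item())
```

Mixing the two conventions, for example float targets with a two-class CrossEntropyLoss, is one plausible source of the dtype complaints shown in the tracebacks.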

    Traceback (most recent call last):
      File "/code/Self_classification/main.py", line 76, in <module>
        main(config)
      File "/code/Self_classification/main.py", line 47, in main
        solver.train()
      File "/code/Self_classification/solver.py", line 160, in train
        loss = self.criterion(SR, GT)
      File "/anaconda3/envs/ddpm/lib/python3.7/site-packages/torch/nn/modules/module.py", line 1102, in _call_impl
        return forward_call(*input, **kwargs)
      File "/anaconda3/envs/ddpm/lib/python3.7/site-packages/torch/nn/modules/loss.py", line 1152, in forward
        label_smoothing=self.label_smoothing)
      File "/anaconda3/envs/ddpm/lib/python3.7/site-packages/torch/nn/functional.py", line 2846, in cross_entropy
        return torch._C._nn.cross_entropy_loss(input, target, weight, _Reduction.get_enum(reduction), ignore_index, label_smoothing)
    RuntimeError: "nll_loss_forward_reduce_cuda_kernel_2d_index" not implemented for 'Float'

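- For this one I added .float() and .to(device) to both GT and SR. Some of the data may not have been on CUDA.

- The function that computes the evaluation metrics also needs .to(device), otherwise it raises an error.

- Remember to zero the gradients at every training step.

All three of these are indispensable: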

    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
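Putting the device handling and the three optimizer calls together, a training step might look like the sketch below. The tiny model, the random batch, the learning rate, and the number of steps are placeholders chosen for this example, not the network or data from the post.

```python
import torch
import torch.nn as nn

# Use the GPU when available; the model and every batch go to this device.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# A deliberately tiny 3D classifier (placeholder, not the model from the post).
model = nn.Sequential(
    nn.Conv3d(1, 4, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.AdaptiveAvgPool3d(1),
    nn.Flatten(),
    nn.Linear(4, 1),
    nn.Sigmoid(),
).to(device)
criterion = nn.BCELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

# One fake batch: two single-channel volumes and their binary labels.
images = torch.rand(2, 1, 8, 16, 16)
GT = torch.tensor([1, 0])

losses = []
for step in range(3):
    # Inputs and labels must live on the same device as the model.
    x = images.to(device)
    y = GT.float().view(-1, 1).to(device)

    SR = model(x)                 # probabilities, shape (2, 1)
    loss = criterion(SR, y)

    optimizer.zero_grad()         # clear gradients left from the previous step
    loss.backward()               # compute new gradients
    optimizer.step()              # update the weights
    losses.append(loss.item())

print(losses)
```

Without zero_grad(), gradients from earlier steps accumulate in each parameter's .grad, so every update would mix in stale gradients.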