[Paper Close Reading] Characterizing functional brain networks via Spatio-Temporal Attention 4D Convolutional Neural Networks

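Full paper title: Characterizing functional brain networks via Spatio-Temporal Attention 4D Convolutional Neural Networks (STA-4DCNNs)

Original paper: Characterizing functional brain networks via Spatio-Temporal Attention 4D Convolutional Neural Networks (STA-4DCNNs) - ScienceDirect

The English is typed entirely by hand! It summarizes and paraphrases the original paper. Spelling and grammar mistakes may be hard to avoid; if you spot any, corrections in the comments are welcome! This post leans toward personal notes, so read with caution!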

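1. TL;DR

1.1. Takeaways

(1) The contributions read as if nothing was written: everything is spent on claiming novelty, yet few real contributions come through

(2) Only individuals are considered, not between-group differences

(3) Hmm, so what counts as shallow data and what counts as deep data?

1.2. Paper framework diagram

2. Paragraph-by-paragraph close reading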

2.1. Abstract

①There is still considerable room to explore 4D fMRI

②They propose a Spatio-Temporal Attention 4D Convolutional Neural Network (STA-4DCNN) model for characterizing functional brain networks (FBNs). It consists of two subnetworks: Spatial Attention 4D CNN (SA-4DCNN) and Temporal Guided Attention Network (T-GANet).

③They introduce the datasets they adopted

2.2. Introduction

①4D fMRI comes from combining 3D brain images with a 1D time series

②Existing FBN characterization methods, such as the general linear model (GLM) in 1D; principal component analysis (PCA), sparse representation (SR) and independent component analysis (ICA) in 2D; and 3D convolution in 3D, are all limited in spatio-temporal analysis

③They briefly introduce how they ran their experiments

④Fine, they consider their contributions to be combining U-Net with an attention mechanism and showing that the model works...

2.3. Method and materials

2.3.1. Method overview

The overview of STA-4DCNN:

where SA-4DCNN receives 4D data and converts it into a 3D spatial output, and T-GANet receives both the 3D spatial output and the 4D data and converts them into a 1D temporal output.

2.3.2. Data description and preprocessing

(1)Data selection

①Types of data: seven task fMRI (t-fMRI) paradigms (emotion, gambling, language, motor, relational, social, and working memory) and resting-state fMRI (rs-fMRI)

②Dataset: HCP S900 release (both types) and ABIDE I (only rs-fMRI, only for evaluating generalizability)

③Sample: 200 randomly selected subjects from HCP; 64 ASD and 83 typically developing (TD) subjects from ABIDE I

④Preprocessing: HCP data are preprocessed with the FSL FEAT toolbox, normalized to a 0–1 distribution, and down-sampled to 48*56*48 (spatial size) and 88 (temporal size); ABIDE I data use the standard Configurable Pipeline for the Analysis of Connectomes (CPAC)
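To make the "normalized to 0–1" step concrete, here is a minimal NumPy sketch. It assumes plain min-max scaling over the whole scan, which the notes do not confirm (per-voxel or per-frame scaling would also fit the description). The function name `min_max_normalize` and the toy array size are hypothetical; a real down-sampled HCP scan would have shape (48, 56, 48, 88).

```python
import numpy as np

def min_max_normalize(volume_4d: np.ndarray) -> np.ndarray:
    """Rescale all intensities of one 4D scan into the range [0, 1]."""
    lo = volume_4d.min()
    hi = volume_4d.max()
    if hi == lo:
        # A constant scan has no dynamic range; map it to zeros.
        return np.zeros_like(volume_4d, dtype=np.float64)
    return (volume_4d - lo) / (hi - lo)

# Toy scan laid out as (x, y, z, time); sizes are shrunk for illustration.
rng = np.random.default_rng(0)
scan = rng.normal(loc=100.0, scale=15.0, size=(12, 14, 12, 22))
normalized = min_max_normalize(scan)
```

The down-sampling step is left out on purpose, since the notes do not say which interpolation was used.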

(2)Data processing

①Training labels: dictionary learning and sparse representation (SR)

②The 4D fMRI data is presented as a matrix $X \in \mathbb{R}^{t \times n}$, where $t$ denotes the number of time points and $n$ denotes the total number of brain voxels

③Decompose $X$ into $X = D \times \alpha + \varepsilon$ with dictionary learning and sparse representation (SR), where $D \in \mathbb{R}^{t \times k}$ is the dictionary matrix, $\alpha \in \mathbb{R}^{k \times n}$ is the sparse coefficient matrix, $\varepsilon$ is the error term and $k$ is the predefined dictionary size

④Each column of the dictionary matrix $D$ is the temporal pattern of an FBN

⑤Each row of the sparse coefficient matrix $\alpha$ is the spatial pattern of an FBN
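The shapes in this decomposition are easy to mix up, so here is a small synthetic sketch of how the pieces fit. It does not run dictionary learning. It only builds a matrix of the form "dictionary times sparse coefficients plus error" and reads one FBN back out. The names `X`, `D`, `alpha`, `eps`, the toy sizes, and the row-major voxel ordering are all my assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

vol_shape = (6, 7, 6)          # toy brain volume (hypothetical size)
t = 20                         # number of time points
n = int(np.prod(vol_shape))    # total number of voxels
k = 5                          # dictionary size (hypothetical value)

# Dictionary: one temporal pattern per column, shape (t, k).
D = rng.standard_normal((t, k))

# Sparse coefficients: one spatial pattern per row, shape (k, n).
alpha = rng.standard_normal((k, n))
alpha[rng.random((k, n)) > 0.1] = 0.0   # keep roughly 10% of the entries

# Small error term and the resulting time-by-voxel fMRI matrix, shape (t, n).
eps = 0.01 * rng.standard_normal((t, n))
X = D @ alpha + eps

# The same data viewed as a 4D array (x, y, z, time), and back again.
fmri_4d = X.T.reshape(*vol_shape, t)
X_again = fmri_4d.reshape(n, t).T

# Reading out FBN number i as a (temporal, spatial) label pair.
i = 2
temporal_pattern = D[:, i]                     # shape (t,)
spatial_pattern = alpha[i].reshape(vol_shape)  # shape of the brain volume
```

In the paper the dictionary and coefficients are learned from the data; here they are random so that only the bookkeeping is shown.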

2.3.3. 4D convolution/deconvolution and attention copy

(1)Convolution

①4D convolution (a) and 4D deconvolution (b) figure:

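①The authors explain that they decompose the 4D filter into several 3D convolution kernels along the temporal dimension, then apply these 3D kernels to the input 4D data. The final output is the sum of the resulting 4D data.

②⭐To turn a D*H*W*C (length, width, height * time) 4D map into 2D*2H*2W*2C, the first large "bandaged" cube (2D*2H*2W) is obtained by padding a small cube (the authors do not say exactly how the padding is done; they only cite another paper). Then, for each large bandaged cube, a new blank cube is padded. This yields a 2D*2H*2W*2C array. (I think this is quite important, so I originally wrote this part in Chinese to spot it at a glance the next time I read these notes)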
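The decomposition of a 4D convolution into 3D convolutions along the temporal dimension can be sketched in NumPy as below. This is my own illustration, not the authors' code: it uses "valid" cross-correlation (the usual deep-learning meaning of convolution) with no padding, a single channel, and hypothetical function names `conv3d_valid` and `conv4d_valid`.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv3d_valid(volume, kernel):
    """'Valid' 3D cross-correlation of a (D, H, W) volume with a 3D kernel."""
    # windows has shape (D', H', W', kd, kh, kw)
    windows = sliding_window_view(volume, kernel.shape)
    return np.einsum("xyzabc,abc->xyz", windows, kernel)

def conv4d_valid(data, kernel):
    """'Valid' 4D cross-correlation built from 3D ones.

    data:   (D, H, W, C), with time as the last axis
    kernel: (kd, kh, kw, kc)
    """
    kc = kernel.shape[-1]
    out_frames = data.shape[-1] - kc + 1
    frames = []
    for t in range(out_frames):
        # Each temporal slice of the 4D kernel is a 3D kernel applied to the
        # matching input frame; the kc partial results are summed.
        frame = sum(
            conv3d_valid(data[..., t + j], kernel[..., j]) for j in range(kc)
        )
        frames.append(frame)
    return np.stack(frames, axis=-1)

rng = np.random.default_rng(0)
data = rng.standard_normal((6, 7, 6, 5))
kernel = rng.standard_normal((3, 3, 3, 3))
out = conv4d_valid(data, kernel)
```

Summing 3D results frame by frame gives the same numbers as sliding the full 4D kernel over the data, which is why frameworks without a native 4D convolution can still be used.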

(2)Attention

①They concatenate the shallow-layer and deep-layer features, which are 5D (D*H*W*C*L, where L is the channel dimension):

②The specific operation is:

where ![]() denotes re-ordering the 4D matrix of size D*H*W*C into a 2D one of size (D*H*W)*C

③The 4D attention output is:

where ![]() denotes the number of features

④Then restore the dimension through reverse operations:

where ![]() is the 4D matrix in s along the last dimension (honestly I did not quite follow this wording; I think it is simply whatever comes out of attention()?)
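Since the equations did not survive in these notes, here is a hedged sketch of the three steps for a single channel: flatten 4D to 2D, apply attention, reshape back. It assumes standard scaled dot-product attention with queries from the deep features and keys/values from the shallow ones. That assignment, and the names `flatten_4d` and `attention_4d`, are my assumptions rather than the paper's definition.

```python
import numpy as np

def flatten_4d(x):
    """Re-order a (D, H, W, C) array into a 2D (D*H*W, C) matrix."""
    return x.reshape(-1, x.shape[-1])

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def attention_4d(deep, shallow):
    """Scaled dot-product attention between two 4D maps of equal shape."""
    q = flatten_4d(deep)       # queries from the deep layer (assumption)
    k = flatten_4d(shallow)    # keys from the shallow layer (assumption)
    v = flatten_4d(shallow)    # values from the shallow layer (assumption)
    d = q.shape[-1]            # number of features, used for scaling
    weights = softmax(q @ k.T / np.sqrt(d), axis=-1)  # (P, P), P = D*H*W
    out_2d = weights @ v                              # (P, C)
    return out_2d.reshape(deep.shape)                 # reverse of flatten_4d

rng = np.random.default_rng(0)
deep = rng.standard_normal((3, 4, 3, 5))
shallow = rng.standard_normal((3, 4, 3, 5))
out = attention_4d(deep, shallow)
```

For the 5D case (D*H*W*C*L) one would presumably loop over the last axis and apply the same function to each 4D slice, which may be what "the 4D matrix in s along the last dimension" refers to.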

2.3.4. Model architecture of STA-4DCNN

The whole framework of STA-4DCNN:

(1)Spatial attention 4D CNN (SA-4DCNN)

①The input has 1 channel, which becomes 2 after two 4D convolutional layers

②The red arrows are max-pooling layers

③Since the output is 4D like the input, they adopt a 3D CNN to reduce the dimension

(2)Temporal guided attention network (T-GANet)

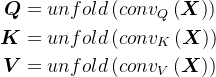

①First, they combine the spatial pattern with the 4D fMRI input:

![]()

shown by the top orange line, where ![]() transforms an input of size S*S*S*C into P*C (P=S*S*S)

②Then combine ![]() and ![]() :

![]()

The formulas in (2) feel quite confusing. The figure is actually very clear, but the formulas never quite seem to match it.
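Because the formulas are hard to match to the figure, here is one plausible reading of the "spatial pattern guides the 4D input" idea, written as a toy NumPy sketch. It is only an illustration under my own assumptions: the spatial pattern is turned into softmax weights over voxels, and those weights average the flattened P*C data into a single time course. The real T-GANet has learned layers that this sketch ignores, and the function names are hypothetical.

```python
import numpy as np

def flatten_spatial(x):
    """Turn an (S, S, S, C) array into a (P, C) matrix with P = S*S*S."""
    return x.reshape(-1, x.shape[-1])

def guided_time_course(spatial_pattern, fmri_4d):
    """Spatially weighted average of voxel time series (illustrative only)."""
    s = spatial_pattern.reshape(-1)     # (P,)
    x = flatten_spatial(fmri_4d)        # (P, C)
    w = np.exp(s - s.max())
    w = w / w.sum()                     # softmax weights over voxels
    return w @ x                        # (C,)

S, C = 4, 10
rng = np.random.default_rng(0)
spatial_pattern = rng.random((S, S, S))
fmri_4d = rng.standard_normal((S, S, S, C))
time_course = guided_time_course(spatial_pattern, fmri_4d)
```

Voxels with a larger value in the spatial pattern contribute more to the 1D output, which at least matches the input and output shapes described for T-GANet.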

2.3.5. Model setting and training scheme

①They designed two separate loss functions for SA-4DCNN and T-GANet

②Loss function in SA-4DCNN:

where ![]() is the characterized spatial pattern (then why on earth not just use ![]()?) and ![]() denotes the training label

③Loss function in T-GANet:

④Training curve:

⑤Data split: 160 subjects for training and 40 for testing

⑥Parameters: the same as in their previous paper (Yan et al., 2021)

⑦Learning rate: 0.0001 in the first training stage and 0.0005 in the second

⑧Epochs: 150 for spatial training and 20 for temporal training

⑨Optimizer: Adam in both stages

⑩Convolutional kernels: 3*3*3*3 in 4D and 3*3*3 in 3D

⑪Activation: each convolutional layer is followed by batch normalization (BN) and a rectified linear unit (ReLU)

⑫When ![]() in the Fast Down-sampling Block is set to 12, they achieve the highest accuracy
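For quick reference, the settings listed above can be collected into a small config object. This is just a note-taking sketch: the class and field names are hypothetical, and mapping the "first" training stage to the spatial network and the "second" to the temporal one is my assumption (it seems likely, since T-GANet consumes the spatial output).

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class StageConfig:
    name: str
    learning_rate: float
    epochs: int
    optimizer: str = "Adam"   # Adam is used in both stages

@dataclass(frozen=True)
class TrainingSetup:
    n_train: int = 160
    n_test: int = 40
    kernel_4d: tuple = (3, 3, 3, 3)
    kernel_3d: tuple = (3, 3, 3)
    # Assumed order: stage 1 trains SA-4DCNN, stage 2 trains T-GANet.
    stages: tuple = (
        StageConfig("spatial (SA-4DCNN)", learning_rate=1e-4, epochs=150),
        StageConfig("temporal (T-GANet)", learning_rate=5e-4, epochs=20),
    )

setup = TrainingSetup()
for stage in setup.stages:
    print(f"{stage.name}: lr={stage.learning_rate}, "
          f"epochs={stage.epochs}, optimizer={stage.optimizer}")
```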

2.3.6. Model evaluation and validation

2.4. Results

2.4.1. Spatio-temporal pattern characterization of DMN in emotion T-fMRI

2.4.2. Generalizability of spatio-temporal pattern characterization of DMN in other six T-fMRI and One Rs-fMRI

2.4.3. Effectiveness of spatio-temporal pattern characterization of other FBNs

2.4.5. Ablation study

2.4.6. Characterization of abnormal spatio-temporal patterns of FBNs in ASD patients

2.5. Conclusion

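3. Background knowledge

3.1. Boltzmann machine

Too complicated to explain in detail here; see: Machine Learning Notes: Deep Boltzmann Machines (1), An Overall Introduction to the Boltzmann Machine Series (静静的喝酒's blog, CSDN)

3.2. U-Net

U-Net Neural Network (uodgnez's blog, CSDN)

3.3. Deconvolution/Transposed convolution/Fractionally-strided convolution

[Deep Learning Deconvolution] Deconvolution explained in detail, derivation of the deconvolution formula and its application in TensorFlow - Zhihu (zhihu.com)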

4. Reference List

Xi, J. (2022) 'Characterizing functional brain networks via Spatio-Temporal Attention 4D Convolutional Neural Networks (STA-4DCNNs)', Neural Networks, vol. 158, pp. 00-110.

Yan, J. et al. (2021) 'A Guided Attention 4D Convolutional Neural Network for Modeling Spatio-Temporal Patterns of Functional Brain Networks', The 4th Chinese conference on pattern recognition and computer vision.

![[Paper Close Reading] Characterizing functional brain networks via Spatio-Temporal Attention 4D Convolutional Neural, image 1](http://img.e-com-net.com/image/info8/f3062c0dda7c4cad9245124b64cf87ad.jpg)

![[Paper Close Reading] Characterizing functional brain networks via Spatio-Temporal Attention 4D Convolutional Neural, image 2](http://img.e-com-net.com/image/info8/4d2f533f364748e3b9ab1ee7a943e074.jpg)

![[Paper Close Reading] Characterizing functional brain networks via Spatio-Temporal Attention 4D Convolutional Neural, image 3](http://img.e-com-net.com/image/info8/9881ff723d7c4df498e438b82c54d770.jpg)

![[Paper Close Reading] Characterizing functional brain networks via Spatio-Temporal Attention 4D Convolutional Neural, image 4](http://img.e-com-net.com/image/info8/e974f6dc0ac04b8e9b6ca7f5f87221f5.jpg)

![[Paper Close Reading] Characterizing functional brain networks via Spatio-Temporal Attention 4D Convolutional Neural, image 5](http://img.e-com-net.com/image/info8/9104e81b0ee844bdb1510b89895ec07a.jpg)