[ROS Learning] Converting a rosbag to an MP4 video

Contents

- Preface

- 1. Preparing the files

- 2. Installing dependencies

- 3. Usage

- 4. Example: running the rosbag-to-MP4 conversion script

- 5. References

- 6. Appendix: rosbag2video.py source code

Preface

This article was tested in the following environment:

Ubuntu 18.04

ROS Melodic

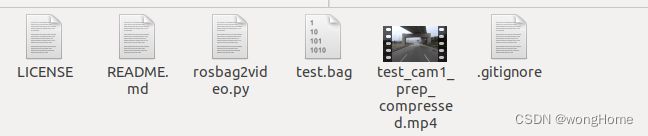

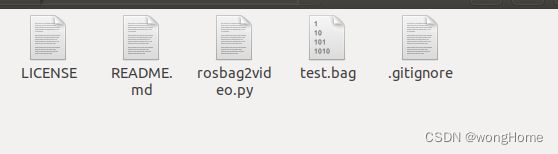

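1. Preparing the files

Download the repository from GitHub [1] (or copy the rosbag2video.py source from the appendix at the end of this article) and extract it, then place your own bag file (test.bag) in the extracted folder.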

2. Installing dependencies

The script needs ffmpeg, which can be installed with:

sudo apt install ffmpeg
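Before running the conversion, it can help to confirm that ffmpeg is actually on the PATH. The following is a minimal sketch using only the Python standard library; the helper name `find_converter` is hypothetical and is not part of rosbag2video.py.

```python
import shutil


def find_converter(name="ffmpeg"):
    """Return the full path of the converter executable, or None if it is not on PATH."""
    return shutil.which(name)


if __name__ == "__main__":
    path = find_converter()
    if path is None:
        print("ffmpeg not found; install it with: sudo apt install ffmpeg")
    else:
        print("ffmpeg found at", path)
```

If the script is switched to avconv (the source mentions this as an alternative), the same check should work by passing "avconv" as the name.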

3. Usage

rosbag2video.py uses ffmpeg to convert the image sequence(s) in one or more rosbag files into video file(s) with a fixed frame rate.

rosbag2video.py [--fps 25] [--rate 1] [-o outputfile] [-v] [-s] [-t topic] bagfile1 [bagfile2] ...
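To illustrate the general idea of handing frames to ffmpeg, here is a rough sketch that builds an ffmpeg command reading raw BGR frames from standard input. It is an assumption about how such a pipeline can be set up, not a copy of what rosbag2video.py does internally; the function name `build_rawvideo_cmd` and the default output name are hypothetical.

```python
import shlex


def build_rawvideo_cmd(width, height, fps=25, pix_fmt="bgr24", out_file="output.mp4"):
    """Build an ffmpeg argument list that reads raw frames from stdin."""
    return [
        "ffmpeg", "-y",                       # overwrite the output file if it exists
        "-f", "rawvideo",                     # input is headerless raw video
        "-pix_fmt", pix_fmt,                  # pixel layout of each incoming frame
        "-s", "{}x{}".format(width, height),  # frame size, required for raw input
        "-r", str(fps),                       # fixed input frame rate
        "-i", "-",                            # read the input from stdin
        "-an",                                # no audio stream
        out_file,                             # container is guessed from the extension
    ]


if __name__ == "__main__":
    cmd = build_rawvideo_cmd(640, 480, fps=25)
    print(" ".join(shlex.quote(arg) for arg in cmd))
    # To actually encode, one would start the process and write each frame's bytes:
    #   proc = subprocess.Popen(cmd, stdin=subprocess.PIPE)
    #   proc.stdin.write(frame.tobytes())  # frame: HxWx3 uint8 array in BGR order
    #   proc.stdin.close(); proc.wait()
```

Because the input frame rate is fixed on the command line, every frame written to the pipe occupies the same duration in the output video, regardless of the original message timestamps.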

The script's options are explained below:

| Option | Description |
|---|---|
| --fps | Sets the FPS value that is passed to ffmpeg. Default is 25. |
| -h | Displays the help message. |
| --ofile (-o) | Sets the output file name. If no output file name is given, the file name '[prefix]_[topic].mp4' is used and the default output codec is h264. Multiple image topics are supported only when -o is not used. ffmpeg guesses the format from the given extension. Compressed and raw image messages are supported with mono8 and bgr8/rgb8/bggr8/rggb8 formats. |
| --rate (-r) | Slows down or speeds up the video. Default is 1.0, which keeps the original speed. |
| -s | Shows every image extracted from the rosbag file (cv_bridge is needed). |
| --topic (-t) | Only images from the given topic are used for the video output. |
| -v | Displays verbose messages. |
| --prefix (-p) | Sets an output file name prefix; otherwise 'bagfile1' is used (only applies if -o is not set). |
| --start | Optional start time in seconds. |
| --end | Optional end time in seconds. |
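The options in the table above can be parsed with Python's getopt module, which the script imports. The sketch below is one possible way to do that; the function name `parse_args`, the dictionary keys, and the exact option strings passed to getopt are assumptions for illustration and may differ from the real script.

```python
import getopt


def parse_args(argv):
    """Parse rosbag2video-style arguments into (options dict, list of bag files)."""
    opts = {
        "fps": 25.0, "rate": 1.0, "ofile": None, "topic": None,
        "prefix": None, "start": None, "end": None,
        "show": False, "verbose": False, "help": False,
    }
    # Short options followed by ':' take a value; so do long options ending in '='.
    pairs, bagfiles = getopt.getopt(
        argv, "hsvr:o:t:p:",
        ["fps=", "rate=", "ofile=", "topic=", "prefix=", "start=", "end="],
    )
    for key, value in pairs:
        if key == "-h":
            opts["help"] = True
        elif key == "-s":
            opts["show"] = True
        elif key == "-v":
            opts["verbose"] = True
        elif key == "--fps":
            opts["fps"] = float(value)
        elif key in ("-r", "--rate"):
            opts["rate"] = float(value)
        elif key in ("-o", "--ofile"):
            opts["ofile"] = value
        elif key in ("-t", "--topic"):
            opts["topic"] = value
        elif key in ("-p", "--prefix"):
            opts["prefix"] = value
        elif key == "--start":
            opts["start"] = float(value)
        elif key == "--end":
            opts["end"] = float(value)
    return opts, bagfiles


if __name__ == "__main__":
    # "/camera/image_raw" is a hypothetical topic name used only for illustration.
    options, bags = parse_args(["--fps", "30", "-t", "/camera/image_raw", "test.bag"])
    print(options, bags)
```

Everything after the last recognized option is treated as a bag file, which matches the synopsis where one or more bag files come last.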

4. Example: running the rosbag-to-MP4 conversion script

All of the options listed above are optional; to keep their default values, run the script directly:

python rosbag2video.py test.bag
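When some options do need to be set, the command can also be assembled programmatically, for example to convert several bag files in a loop. The helper below is a hypothetical sketch: `build_convert_cmd`, the topic name, and the output file name are assumptions for illustration, and only flags documented in the options table are used.

```python
def build_convert_cmd(bagfile, topic=None, fps=None, ofile=None,
                      interpreter="python", script="rosbag2video.py"):
    """Build the argument list for one rosbag2video.py invocation."""
    cmd = [interpreter, script]
    if fps is not None:
        cmd += ["--fps", str(fps)]
    if topic:
        cmd += ["-t", topic]
    if ofile:
        cmd += ["-o", ofile]
    cmd.append(bagfile)  # bag files come last, as in the synopsis
    return cmd


if __name__ == "__main__":
    # "/camera/image_raw" and "out.mp4" are placeholder values.
    cmd = build_convert_cmd("test.bag", topic="/camera/image_raw", fps=30, ofile="out.mp4")
    print(" ".join(cmd))
    # To run it for real: subprocess.run(cmd, check=True)
```

Calling the helper with only the bag file name reproduces the default command shown above.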

5. References

[1] mlaiacker. rosbag2video [EB/OL]. https://github.com/mlaiacker/rosbag2video, 2021-04-27/2022-10-18.

[2] hywmj. Converting a rosbag to an .mp4 video [EB/OL]. https://blog.csdn.net/wangmj_hdu/article/details/114130648, 2021-02-26/2022-10-18.

6. Appendix: rosbag2video.py source code

#!/usr/bin/env python3

"""

rosbag2video.py

rosbag to video file conversion tool

by Abel Gabor 2019

[email protected]

requirements:

sudo apt install python3-roslib python3-sensor-msgs python3-opencv ffmpeg

based on the tool by Maximilian Laiacker 2016

[email protected]"""

import roslib

#roslib.load_manifest('rosbag')

import rospy

import rosbag

import sys, getopt

import os

from sensor_msgs.msg import CompressedImage

from sensor_msgs.msg import Image

import cv2

import numpy as np

import shlex, subprocess

MJPEG_VIDEO = 1

RAWIMAGE_VIDEO = 2

VIDEO_CONVERTER_TO_USE = "ffmpeg" # or you may want to use "avconv"

def print_help():
    print('rosbag2video.py [--fps 25] [--rate 1] [-o outputfile] [-v] [-s] [-t topic] bagfile1 [bagfile2] ...')
    print()
    print('Converts image sequence(s) in ros bag file(s) to video file(s) with fixed frame rate using',VIDEO_CONVERTER_TO_USE)
    print(VIDEO_CONVERTER_TO_USE,'needs to be installed!')
    print()
    print('--fps Sets FPS value that is passed to',VIDEO_CONVERTER_TO_USE)
    print(' Default is 25.')
    print('-h Displays this help.')
    print('--ofile (-o) sets output file name.')
    print(' If no output file name (-o) is given the filename \'.mp4\' is used and default output codec is h264.'