DATASET CONDENSATION WITH GRADIENT MATCHING

ABSTRACT

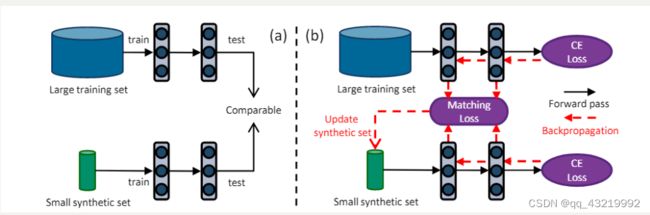

This paper proposes a training set synthesis technique for data-efficient learning, called Dataset Condensation, that learns to condense large dataset into a small set of informative synthetic samples for training deep neural networks from scratch.

本文提出了一种用于数据高效学习的训练集合成技术,称为数据集压缩,它学习将大型数据集压缩成一小组信息丰富的合成样本,用于从头开始训练深度神经网络.

1.INTRODUCTION

We formulate this goal as a minimization problem between two sets of gradients of the network parameters that are computed for a training loss over a large fixed training set and a learnable condensed set

我们将此目标表述为网络参数的两组梯度之间的最小化问题,这些梯度是针对大型固定训练集和可学习的浓缩集的训练损失计算的

2.METHOD

2.1 DATASET CONDENSATION

l a r g e d a t a s e t : τ τ : ( x i , y i ) ∣ i τ d e e p n e u r a l n e t w o r k w i t h θ : ϕ large~dataset:\tau \\ \tau : {(x_i,y_i)}|_i^\tau\ \\ deep~neural~network~with~\theta:\phi large dataset:ττ:(xi,yi)∣iτ deep neural network with θ:ϕ

Our goal is to generate a small set of condensed synthetic samples with their labels: S

S : ( x i , y i ) ∣ i S S:(x_i,y_i)|_i^S S:(xi,yi)∣iS

Discussion

pose the parameters θS as a function of the synthetic data S

S ∗ = a S r g m i n L τ ( θ S ( S ) ) s u b j e c t t o θ S ( S ) = a θ r g m a i n L S ( θ ) S^* = \underset {S} argmin\mathcal{L} ^\tau(\theta^S(S))~~subject~to~~\theta^S(S) = \underset {\theta} argmain \mathcal L^S(\theta) S∗=SargminLτ(θS(S)) subject to θS(S)=θargmainLS(θ)

Issue:

requires a computationally expensive procedure and it does not scale to large models

2.2 DATASET CONDENSATION WITH PARAMETER MATCHING

we aim to learn S such that the model ϕ θ S \phi_{\theta^S} ϕθS trained on them achieves not only comparable generalization performance to ϕ θ τ \phi_{\theta^\tau} ϕθτ but also converges to a similar solution in the parameter space (i.e. θ S = θ τ \theta^S = \theta^\tau θS=θτ )

我们的目标是学习 S 使得在它们上训练的模型 ϕ θ S \phi_{\theta^S} ϕθS 不仅实现了与 ϕ θ τ \phi_{\theta^\tau} ϕθτ相当的泛化性能,而且在参数空间(即 θ S = θ τ \theta^S = \theta^\tau θS=θτ )中也收敛到类似的解决方案

Now we can formulate this goal as

m S i n D ( θ S , θ τ ) s u b j e c t t o θ S ( s ) = a θ r g m i n L S ( θ ) (4) \underset S min~D(\theta^S,\theta^\tau)~~ subject~to~\theta^S(s) = \underset \theta argmin\mathcal L^S(\theta) \tag 4 Smin D(θS,θτ) subject to θS(s)=θargminLS(θ)(4)

In a deep neural network,$ \theta^\tau$ typically depends on its initial values θ 0 \theta_0 θ0. However, the optimization in e q . eq. eq. (4) aims to obtain an optimum set of synthetic images only for one model φ θ τ φ_{\theta^\tau} φθτ with the initialization θ 0 \theta_0 θ0, while our actual goal is to generate samples that can work with a distribution of random initializations P θ 0 \Rho_{\theta_0} Pθ0,Thus we modify e q . eq. eq.(4) as follows

在深度神经网络中, θ τ \theta^\tau θτ 通常取决于其初始值 θ 0 \theta_0 θ0。但是,等式4中的优化旨在仅针对初始化 θ 0 \theta_0 θ0 的模型 φ θ τ φ_{\theta^\tau} φθτ 获得一组最佳合成图像,而我们的实际目标是生成可以使用随机初始化分布 P θ 0 \Rho_{\theta_0} Pθ0的样本,所以我们对公式4进行修改

m S i n E θ 0 ∈ P θ 0 [ D ( θ S ( θ 0 ) , θ τ ( θ 0 ) ) ] s u b j e c t t o θ S ( S ) = a θ r g m i n L S ( θ ( θ 0 ) ) (5) \underset S min~\Epsilon_{\theta_0\in\Rho_{\theta_0}}[D(\theta^S(\theta_0),\theta^\tau(\theta_0))]~~subject~to~~ \theta^S(S) = \underset \theta argmin \mathcal L^S(\theta(\theta_0)) \tag 5 Smin Eθ0∈Pθ0[D(θS(θ0),θτ(θ0))] subject to θS(S)=θargminLS(θ(θ0))(5)

As the inner loop optimization θ S ( s ) = a θ r g m i n L S ( θ ) \theta^S(s) = \underset \theta argmin\mathcal L^S(\theta) θS(s)=θargminLS(θ) can be computationally expensive,adopt the back-optimization approach which re-defines θ S \theta^S θS as the output of an incomplete optimization

由于内环优化 θ S ( s ) = a θ r g m i n L S ( θ ) \theta^S(s) = \underset \theta argmin\mathcal L^S(\theta) θS(s)=θargminLS(θ)的计算成本可能很高所以redefine θ S \theta^S θS

θ S ( S ) = o p t − a l g θ ( L S ( θ ) , ζ ) \theta^S(S) = opt-alg_\theta(\mathcal L^S(\theta),\zeta) θS(S)=opt−algθ(LS(θ),ζ)

o p t − a l g opt-alg opt−alg is a specific optimization procedure with a fixed number of steps (ς)

Discussion

θ τ \theta^\tau θτ for different initializations can be trained first in an offline stage and then used as the target parameter vector in e q . eq. eq.(5).

Issue:

-

distance between θ τ \theta^\tau θτ and intermediate values of θ S \theta^S θS can be too big in the parameter space with multiple local minima traps along the path and thus it can be too challenging to reach

θ τ \theta^\tau θτ 与 θ S \theta^S θS 的中间值之间的距离在沿路径具有多个局部最小值陷阱的参数空间上可能太大,因此到达可能太具有挑战性

-

o p t − a l g opt-alg opt−alg involves a limited number of optimization steps as a trade off between speed and accuracy which may not be sufficient to take enough steps for reaching the optimal solution.

o p t − a l g opt-alg opt−alg 涉及有限数量的优化步骤作为速度和准确性之间的权衡,这可能不足以采取足够的步骤来获得最优解

2.3 DATASET CONDENSATION WITH CURRICULUM GRADIENT MATCHING

we wish θ S \theta^S θS to be close to not only the final θ τ \theta^\tau θτ but also to follow a similar path to θ τ \theta^\tau θτ throughout the optimization. While this can restrict the optimization dynamics for θ, we argue that it also enables a more guided optimization and effective use of the incomplete optimizer

我们希望 θ S \theta^S θS 不仅接近最终的 θ τ \theta^\tau θτ,而且在整个优化过程中也遵循与 θ τ \theta^\tau θτ 相似的路径。虽然这可以限制 θ 的优化动态,但我们认为它也可以更有效地优化和有效使用不完整的优化器

now decompose e q . eq. eq. (5) into multiple subproblems:

where T is the number of iterations, ζ S \zeta^S ζS and ζ τ \zeta^\tau ζτ are the numbers of optimization steps for θ S \theta^S θS and θ τ \theta^\tau θτ respectively.

In words, we wish to generate a set of condensed samples S such that the network parameters trained on them ( θ t S \theta^S_t θtS ) are similar to the ones trained on the original training set ( θ t τ \theta^\tau_t θtτ ) at each iteration t.

我们希望生成一组浓缩样本 S,使得在它们上训练的网络参数 ( θ t S \theta^S_t θtS ) 类似于每次迭代 t 的原始训练集 ( θ t τ \theta^\tau_t θtτ ) 上训练的网络参数。

update rule:

where η θ \eta_\theta ηθ is the learning rate. Based on our observation D ( θ t S , θ t τ ) D(\theta^S_t,\theta^\tau_t) D(θtS,θtτ) ≈ 0), we simplify the formulation in $eq. $(7) by replacing θ t τ \theta^\tau_t θtτ with θ t S \theta^S_t θtS and use θ to denote θ S \theta^S θS in the rest of the paper.

用上式简化eq.7,得eq.9

We now have a single deep network with parameters θ trained on the synthetic set S which is optimized such that the distance between the gradients for the loss over the training samples L τ \mathcal L^\tau Lτ w.r.t. θ and the gradients for the loss over the condensed samples L S \mathcal L^S LS w.r.t. θ is minimized. In words, our goal reduces to matching the gradients for the real and synthetic training loss w.r.t. θ via updating the condensed samples.

我们现在有一个深度网络,其参数 θ 在合成集 S 上进行训练,经过优化,使得训练样本 L τ \mathcal L^\tau Lτ w.r.t. θ 上的损失梯度与压缩样本 L S \mathcal L^S LS w.r.t. θ 上的损失梯度之间的距离最小化。换句话说,我们的目标是通过更新压缩样本来减少真实和合成训练损失的梯度w.r.t. θ。

This approximation has the key advantage over e q . 5 eq.5 eq.5that it does not require the expensive unrolling of the recursive computation graph over the previous parameters { θ 0 \theta^0 θ0, . . . , θ t − 1 \theta^{t-1} θt−1}. The important consequence is that the optimization is significantly faster, memory efficient and thus scales up to the state-of-the-art deep neural networks (e.g. R e s N e t ResNet ResNet ).

这种近似比 e q . 5 eq.5 eq.5具有关键优势. 它不需要递归计算图相对于先前参数 { θ 0 \theta^0 θ0, . . . , θ t − 1 \theta^{t-1} θt−1}。重要的结果是优化速度明显更快、内存效率更高,因此可以扩展到最先进的深度神经网络(例如 R e s N e t ResNet ResNet )。

Discussion

Issue:

in our experiments we learn to synthesize images for fixed labels, e.g. one synthetic image per class

in our experiments we learn to synthesize images for fixed labels, e.g. one synthetic image per class

Algorithm

Gradient matching loss

A,B两个网络计算distance的一种方法,详细见论文

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-4ARGZLKa-1677746725406)(C:\Users\33044\AppData\Roaming\Typora\typora-user-images\image-20230302162333730.png)]](http://img.e-com-net.com/image/info8/70d2da9339c243d2a04d9cccf9cdeb24.jpg)