图形学书籍 Real-Time Rendering 3.4 可编程着色和 API 的演变(根据谷歌翻译修改)

3.4 The Evolution of Programmable Shading and APIs 可编程着色和 API 的演变

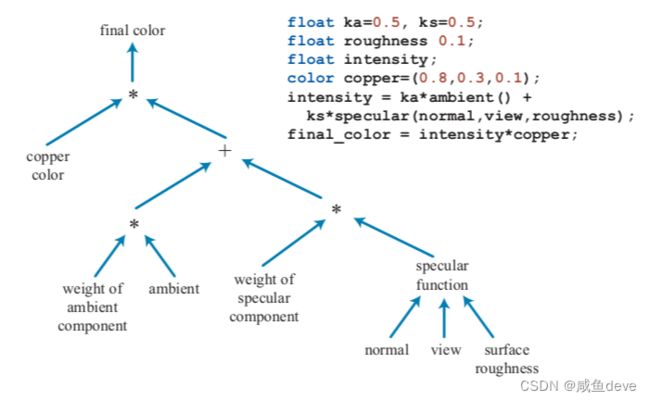

The idea of a framework for programmable shading dates back to 1984 with Cook’s shade trees [287]. A simple shader and its corresponding shade tree are shown in Figure 3.4. The RenderMan Shading Language [63, 1804] was developed from this idea in the late 1980s. It is still used today for film production rendering, along with other evolving specifications, such as the Open Shading Language (OSL) project [608].

可编程着色框架的想法可以追溯到 1984 年 Cook’s shade trees [287]。 图 3.4 显示了一个简单的着色器及其对应的着色树。 RenderMan Shading Language [63, 1804] 是在 1980 年代后期从这个想法发展而来的。 它至今仍在用于电影制作渲染,以及其他不断发展的规范,例如开放着色语言 (OSL) 项目 [608]。

Figure 3.4. Shade tree for a simple copper shader, and its corresponding shader language program.

(After Cook [287].)图 3.4: 一个简单的铜着色器(copper shader)的着色树,及其相应的着色器语言程序。 (在库克 [287] 之后。)

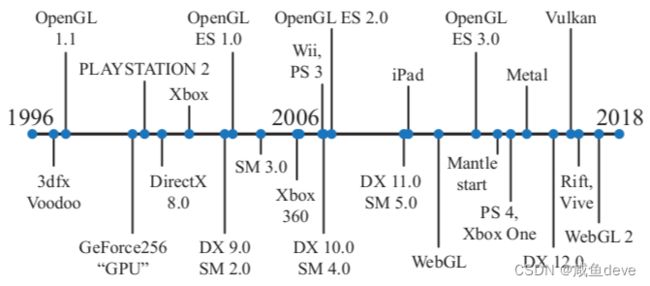

Consumer-level graphics hardware was first successfully introduced by 3dfx Interactive on October 1, 1996. See Figure 3.5 for a timeline from this year. Their Voodoo graphics card’s ability to render the game Quake with high quality and performance led to its quick adoption. This hardware implemented a fixed-function pipeline throughout. Before GPUs supported programmable shaders natively, there were several attempts to implement programmable shading operations in real time via multiple rendering passes. The Quake III: Arena scripting language was the first widespread commercial success in this area in 1999. As mentioned at the beginning of the chapter, NVIDIA’s GeForce256 was the first hardware to be called a GPU, but it was not programmable. However, it was configurable.

消费级图形硬件于 1996 年 10 月 1 日由 3dfx Interactive 首次成功推出。请参见图 3.5 了解今年的时间线。 他们的 Voodoo 显卡能够以高质量和高性能渲染 Quake 游戏,因此迅速被采用。 该硬件自始至终实现了一个固定功能的管线。 在 GPU 原生支持可编程着色器之前,曾多次尝试通过多个渲染通道实时实现可编程着色操作。 Quake III:Arena 脚本语言于 1999 年在该领域取得了第一个广泛的商业成功。如本章开头所述,NVIDIA 的 GeForce256 是第一个被称为 GPU 的硬件,但它不是可编程的。 但是,它是可配置的。

Figure 3.5. A timeline of some API and graphics hardware releases.

图 3.5:一些 API 和图形硬件发布的时间表。

In early 2001, NVIDIA’s GeForce 3 was the first GPU to support programmable vertex shaders [1049], exposed through DirectX 8.0 and extensions to OpenGL. These shaders were programmed in an assembly-like language that was converted by the drivers into microcode on the fly. Pixel shaders were also included in DirectX 8.0, but pixel shaders fell short of actual programmability—the limited “programs” supported were converted into texture blending states by the driver, which in turn wired together hardware “register combiners.” These “programs” were not only limited in length (12 instructions or less) but also lacked important functionality. Dependent texture reads and floating point data were identified by Peercy et al. [1363] as crucial to true programmability, from their study of RenderMan.

2001 年初,NVIDIA 的 GeForce 3 是第一个支持可编程顶点着色器 [1049] 的 GPU,通过 DirectX 8.0 和 OpenGL 扩展公开。 这些着色器使用类似汇编的语言进行编程,由驱动程序即时转换为微代码。 像素着色器也包含在 DirectX 8.0 中,但像素着色器缺乏实际的可编程性——受支持的有限“程序”被驱动程序转换为纹理混合状态,然后将硬件“寄存器组合器”连接在一起。 这些“程序”不仅长度有限(12 条指令或更少),而且缺乏重要的功能。 Peercy 等人确定了相关纹理读取和浮点数据。 [1363] 对真正的可编程性至关重要,来自他们对 RenderMan 的研究。

Shaders at this time did not allow for flow control (branching), so conditionals had to be emulated by computing both terms and selecting or interpolating between the results. DirectX defined the concept of a Shader Model (SM) to distinguish hardware with different shader capabilities. The year 2002 saw the release of DirectX 9.0 including Shader Model 2.0, which featured truly programmable vertex and pixel shaders. Similar functionality was also exposed under OpenGL using various extensions. Support for arbitrary dependent texture reads and storage of 16-bit floating point values was added, finally completing the set of requirements identified by Peercy et al. Limits on shader resources such as instructions, textures, and registers were increased, so shaders became capable of more complex effects. Support for flow control was also added. The growing length and complexity of shaders made the assembly programming model increasingly cumbersome. Fortunately, DirectX 9.0 also included HLSL. This shading language was developed by Microsoft in collaboration with NVIDIA. Around the same time, the OpenGL ARB (Architecture Review Board) released GLSL, a fairly similar language for OpenGL [885]. These languages were heavily influenced by the syntax and design philosophy of the C programming language and included elements from the RenderMan Shading Language.

此时的着色器不允许流控制(分支),因此必须通过计算两个项并在结果之间进行选择或插值来模拟条件。 DirectX 定义了着色器模型 (Shader Model, SM) 的概念,以区分具有不同着色器功能的硬件。 2002 年发布了包含 Shader Model 2.0 的 DirectX 9.0,它具有真正可编程的顶点和像素着色器。 类似的功能也在 OpenGL 下使用各种扩展公开。 添加了对任意相关纹理读取和存储 16 位浮点值的支持,最终完成了 Peercy 等人确定的一组要求。 增加了对指令、纹理和寄存器等着色器资源的限制,因此着色器能够实现更复杂的效果。 还添加了对流量控制的支持。 着色器的长度和复杂性不断增加,使得汇编编程模型越来越繁琐。 幸运的是,DirectX 9.0 还包括 HLSL。 这种着色语言是由 Microsoft 与 NVIDIA 合作开发的。 大约在同一时间,OpenGL ARB(架构审查委员会)发布了 GLSL,这是一种与 OpenGL [885] 非常相似的语言。 这些语言深受 C 编程语言的语法和设计理念的影响,并包含来自 RenderMan 着色语言的元素。

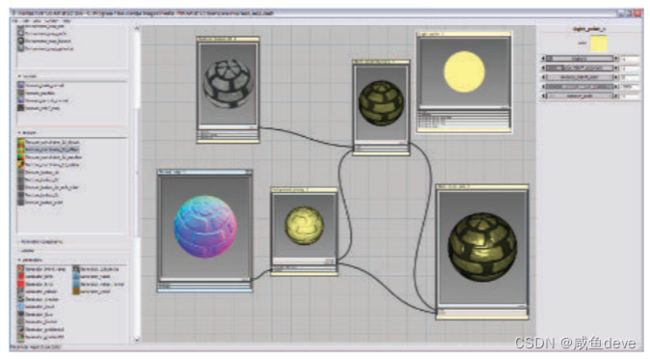

Shader Model 3.0 was introduced in 2004 and added dynamic flow control, making shaders considerably more powerful. It also turned optional features into requirements, further increased resource limits and added limited support for texture reads in vertex shaders. When a new generation of game consoles was introduced in late 2005 (Microsoft’s Xbox 360) and late 2006 (Sony Computer Entertainment’s PLAYSTATION 3 system), they were equipped with Shader Model 3.0—level GPUs. Nintendo’s Wii console was one of the last notable fixed-function GPUs, which initially shipped in late 2006. The purely fixed-function pipeline is long gone at this point. Shader languages have evolved to a point where a variety of tools are used to create and manage them. A screenshot of one such tool, using Cook’s shade tree concept, is shown in Figure 3.6.

Shader Model 3.0 于 2004 年推出,并添加了动态流控制,使着色器更加强大。 它还将可选功能变成了要求,进一步增加了资源限制,并在顶点着色器中添加了对纹理读取的有限支持。 2005 年底(微软的 Xbox 360)和 2006 年底(索尼电脑娱乐公司的 PLAYSTATION 3 系统)推出新一代游戏机时,它们配备了 Shader Model 3.0 级别的 GPU。 任天堂的 Wii 游戏机是最后一批著名的固定功能 GPU 之一,最初于 2006 年底发货。此时纯固定功能管道早已不复存在。 着色器语言已经发展到可以使用各种工具来创建和管理它们的程度。 图 3.6 显示了一个这样的工具的屏幕截图,它使用 Cook 的树荫概念。

Figure 3.6. A visual shader graph system for shader design. Various operations are encapsulated in function boxes, selectable on the left. When selected, each function box has adjustable parameters, shown on the right. Inputs and outputs for each function box are linked to each other to form the final result, shown in the lower right of the center frame. (Screenshot from “mental mill,” mental images inc.) > > 图 3.6。 用于着色器设计的视觉着色器图形系统。 各种操作都封装在功能框里,可以在左边选择。 选中后,每个功能框都有可调整的参数,如右图所示。 每个功能框的输入和输出相互链接以形成最终结果,显示在中间框架的右下方。 (截图来自“mental mill”,mental images inc.)

The next large step in programmability also came near the end of 2006. Shader Model 4.0, included in DirectX 10.0 [175], introduced several major features, such as the geometry shader and stream output. Shader Model 4.0 included a uniform programming model for all shaders (vertex, pixel, and geometry), the unified shader design described earlier. Resource limits were further increased, and support for integer data types (including bitwise operations) was added. The introduction of GLSL 3.30 in OpenGL 3.3 provided a similar shader model.

可编程性的下一个重要步骤也接近 2006 年底。包含在 DirectX 10.0 [175] 中的着色器模型 4.0 引入了几个主要功能,例如几何着色器和流输出。 Shader Model 4.0 包含一个适用于所有着色器(顶点、像素和几何体)的统一编程模型,即前面描述的统一着色器设计。 进一步增加了资源限制,并添加了对整数数据类型(包括按位运算)的支持。 OpenGL 3.3 中引入的 GLSL 3.30 提供了类似的着色器模型。

In 2009 DirectX 11 and Shader Model 5.0 were released, adding the tessellation stage shaders and the compute shader, also called DirectCompute. The release also focused on supporting CPU multiprocessing more effectively, a topic discussed in Section 18.5. OpenGL added tessellation in version 4.0 and compute shaders in 4.3. DirectX and OpenGL evolve differently. Both set a certain level of hardware support needed for a particular version release. Microsoft controls the DirectX API and so works directly with independent hardware vendors ([HVs) such as AMD, NVIDIA, and Intel, as well as game developers and computer-aided design software firms, to determine what features to expose. OpenGL is developed by a consortium of hardware and software vendors, managed by the nonprofit Khronos Group. Because of the number of companies involved, the API features often appear in a release of OpenGL some time after their introduction in DirectX. However, OpenGL allows extensions, vendor-specific or more general, that allow the latest GPU functions to be used before official support in a release.

2009 年发布了 DirectX 11 和 Shader Model 5.0,添加了曲面细分阶段着色器和计算着色器,也称为 DirectCompute。 该版本还专注于更有效地支持 CPU 多处理,这是第 18.5 节中讨论的主题。 OpenGL 在 4.0 版中添加了曲面细分,在 4.3 中添加了计算着色器。 DirectX 和 OpenGL 的发展方式不同。 两者都设置了特定版本发布所需的一定级别的硬件支持。 Microsoft 控制着 DirectX API,因此直接与 AMD、NVIDIA 和 Intel 等独立硬件供应商 ([HV]) 以及游戏开发商和计算机辅助设计软件公司合作,以确定公开哪些功能。 OpenGL 由硬件和软件供应商联盟开发,由非营利组织 Khronos Group 管理。 由于涉及的公司数量众多,API 功能通常在 DirectX 中引入后的某个时间出现在 OpenGL 版本中。 但是,OpenGL 允许特定于供应商或更通用的扩展,允许在发布的官方支持之前使用最新的 GPU 功能。

The next significant change in APIs was led by AMD’s introduction of the Mantle API in 2013. Developed in partnership with video game developer DICE, the idea of Mantle was to strip out much of the graphics driver’s overhead and give this control directly to the developer. Alongside this refactoring was further support for effective CPU multiprocessing. This new class of APIs focuses on vastly reducing the time the CPU spends in the driver, along with more efficient CPU multiprocessor support (Chapter 18). The ideas pioneered in Mantle were picked up by Microsoft and released as DirectX 12 in 2015. Note that DirectX 12 is not focused on exposing new GPU functionality—DirectX 11.3 exposed the same hardware features. Both APIs can be used to send graphics to virtual reality systems such as the Oculus Rift and HTC Vive. However, DirectX 12 is a radical redesign of the API, one that better maps to modern GPU architectures. Low-overhead drivers are useful for applications where the CPU driver cost is causing a bottleneck, or where using more CPU processors for graphics could benefit performance [946]. Porting from earlier APIs can be difficult, and a naive implementation can result in lower performance [249, 699, 1438].

API 的下一个重大变化是由 AMD 在 2013 年推出的 Mantle API 引起的。Mantle 是与视频游戏开发商 DICE 合作开发的,其理念是剥离大部分图形驱动程序的开销,并将此控制权直接交给开发人员。 除了这种重构之外,还进一步支持有效的 CPU 多处理。 这类新的 API 侧重于大大减少 CPU 在驱动程序中花费的时间,以及更高效的 CPU 多处理器支持(第 18 章)。 在 Mantle 中开创的想法被微软采纳并于 2015 年作为 DirectX 12 发布。请注意,DirectX 12 并不专注于公开新的 GPU 功能——DirectX 11.3 公开了相同的硬件功能。 这两个 API 都可用于将图形发送到虚拟现实系统,例如 Oculus Rift 和 HTC Vive。 然而,DirectX 12 是对 API 的彻底重新设计,可以更好地映射到现代 GPU 架构。 低开销驱动程序适用于 CPU 驱动程序成本导致瓶颈的应用程序,或者使用更多 CPU 处理器处理图形可以提高性能的应用程序 [946]。 从早期的 API 移植可能很困难,而且简单的实现可能会导致性能降低 [249、699、1438]。

Apple released its own low-overhead API called Metal in 2014. Metal was first available on mobile devices such as the iPhone 5S and iPad Air, with newer Macintoshes given access a year later through OS X El Capitan. Beyond efficiency, reducing CPU usage saves power, an important factor on mobile devices. This API has its own shading language, meant for both graphics and GPU compute programs.

Apple 于 2014 年发布了自己的低开销 API,称为 Metal。Metal 首次出现在 iPhone 5S 和 iPad Air 等移动设备上,一年后通过 OS X El Capitan 获得了更新的 Macintoshes 访问权限。 除了效率之外,降低 CPU 使用率还可以节省电量,这是移动设备的一个重要因素。 这个 API 有自己的着色语言,适用于图形和 GPU 计算程序。

AMD donated its Mantle work to the Khronos Group, which released its own new API in early 2016, called Vulkan. As with OpenGL, Vulkan works on multiple operating systems. Vulkan uses a new high-level intermediate language called SPIRV, which is used for both shader representation and for general GPU computing. Precompiled shaders are portable and so can be used on any GPU supporting the capabilities needed [885]. Vulkan can also be used for non-graphical GPU computation, as it does not need a display window [946]. One notable difference of Vulkan from other low-overhead drivers is that it is meant to work with a wide range of systems, from workstations to mobile devices.

AMD 将其 Mantle 工作捐赠给了 Khronos Group,后者于 2016 年初发布了自己的新 API,称为 Vulkan。 与 OpenGL 一样,Vulkan 可在多个操作系统上运行。 Vulkan 使用一种称为 SPIRV 的新型高级中间语言,用于着色器表示和通用 GPU 计算。 预编译着色器是可移植的,因此可以在支持所需功能的任何 GPU 上使用 [885]。 Vulkan 也可以用于非图形 GPU 计算,因为它不需要显示窗口 [946]。 Vulkan 与其他低开销驱动程序的一个显着区别是它旨在与范围广泛的系统一起工作,从工作站到移动设备。

On mobile devices the norm has been to use OpenGL ES. “ES” stands for Embedded Systems, as this API was developed with mobile devices in mind. Standard OpenGL at the time was rather bulky and slow in some of its call structures, as well as requiring support for rarely used functionality. Released in 2003, OpenGL ES 1.0 was a stripped-down version of OpenGL 1.3, describing a fixed-function pipeline. While releases of DirectX are timed with those of graphics hardware that support them, developing graphics support for mobile devices did not proceed in the same fashion. For example, the first iPad, released in 2010, implemented OpenGL ES 1.1. In 2007 the OpenGL ES 2.0 specification was released, providing programmable shading. It was based on OpenGL 2.0, but without the fixed-function component, and so was not backward-compatible with OpenGL ES 1.1. OpenGL ES 3.0 was released in 2012, providing functionality such as multiple render targets, texture compression, transform feedback, instancing, and a much wider range of texture formats and modes, as well as shader language improvements. OpenGL ES 3.1 adds compute shaders, and 3.2 adds geometry and tessellation shaders, among other features. Chapter 23 discusses mobile device architectures in more detail.

在移动设备上,标准是使用 OpenGL ES。 “ES”代表嵌入式系统,因为此 API 是为移动设备开发的。 当时的标准 OpenGL 在其某些调用结构中相当庞大且缓慢,并且需要支持很少使用的功能。 OpenGL ES 1.0 于 2003 年发布,是 OpenGL 1.3 的精简版,描述了固定功能的管道。 虽然 DirectX 的发布与支持它们的图形硬件同步,但为移动设备开发图形支持并没有以相同的方式进行。 例如,2010 年发布的第一款 iPad 就实现了 OpenGL ES 1.1。 2007 年,OpenGL ES 2.0 规范发布,提供可编程着色。 它基于 OpenGL 2.0,但没有固定功能组件,因此不向后兼容 OpenGL ES 1.1。 OpenGL ES 3.0 于 2012 年发布,提供了多个渲染目标、纹理压缩、变换反馈、实例化、更广泛的纹理格式和模式以及着色器语言改进等功能。 OpenGL ES 3.1 添加了计算着色器,3.2 添加了几何和曲面细分着色器等功能。 第 23 章更详细地讨论了移动设备架构。

An offshoot of OpenGL ES is the browser-based API WebGL, called through JavaScript. Released in 2011, the first version of this API is usable on most mobile devices, as it is equivalent to OpenGL ES 2.0 in functionality. As with OpenGL, extensions give access to more advanced GPU features. WebGL 2 assumes OpenGL ES 3.0 support.

OpenGL ES 的一个分支是基于浏览器的 API WebGL,通过 JavaScript 调用。 该 API 的第一个版本于 2011 年发布,可在大多数移动设备上使用,因为它在功能上等同于 OpenGL ES 2.0。 与 OpenGL 一样,扩展可以访问更高级的 GPU 功能。 WebGL 2 假设支持 OpenGL ES 3.0。

WebGL is particularly well suited for experimenting with features or use in the classroom:

WebGL 特别适合在课堂上试验功能或使用:

-

It is cross-platform, working on all personal computers and almost all mobile devices.

-

Driver approval is handled by the browsers. Even if one browser does not support a particular GPU or extension, often another browser does.

-

Code is interpreted, not compiled, and only a text editor is needed for development.

-

A debugger is built in to most browsers, and code running at any website can be examined.

-

Programs can be deployed by uploading them to a website or Github, for example.

-

它是跨平台的,适用于所有个人电脑和几乎所有移动设备。

-

驱动程序批准由浏览器处理。 即使一个浏览器不支持特定的 GPU 或扩展,另一个浏览器通常也支持。

-

代码是解释的,不是编译的,开发只需要一个文本编辑器。

-

大多数浏览器都内置了调试器,可以检查在任何网站上运行的代码。

-

可以通过将程序上传到网站或 Github 等来部署程序。

Higher-level scene-graph and effects libraries such as three.js [218] give easy access to code for a variety of more involved effects such as shadow algorithms, post-processing effects, physically based shading, and deferred rendering.

更高级别的场景图和效果库(如 three.js [218])可以轻松访问代码以实现各种更复杂的效果,例如阴影算法、后处理效果、基于物理的着色和延迟渲染。