Building a Ceph Cluster

This guide uses the Ceph Octopus release as its example.

Unless stated otherwise, run the commands below on every node.

I. System Resource Planning

| Node | Hostname | CPU/Memory | Disks | NIC | IP address | OS |
|---|---|---|---|---|---|---|
| Deploy | deploy.ceph.local | 2C/4G | 128G+3*20G | ens33 | 192.168.0.10 | CentOS7 |
| Node1 | node1.ceph.local | 2C/4G | 128G+3*20G | ens33 | 192.168.0.11 | CentOS7 |
| | | | | ens37 | 10.0.0.11 | |
| Node2 | node2.ceph.local | 2C/4G | 128G+3*20G | ens33 | 192.168.0.12 | CentOS7 |
| | | | | ens37 | 10.0.0.12 | |
| Node3 | node3.ceph.local | 2C/4G | 128G+3*20G | ens33 | 192.168.0.13 | CentOS7 |
| | | | | ens37 | 10.0.0.13 | |
| Client | client.ceph.local | 2C/4G | 128G+3*20G | ens33 | 192.168.0.100 | CentOS7 |

II. Building the Ceph Storage Cluster

1. Basic System Configuration

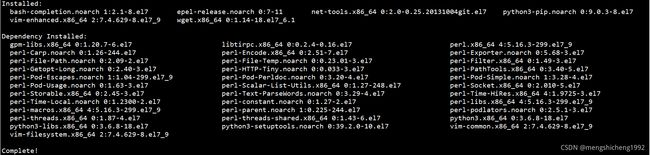

1. Install basic packages

yum -y install vim wget net-tools python3-pip epel-release bash-completion

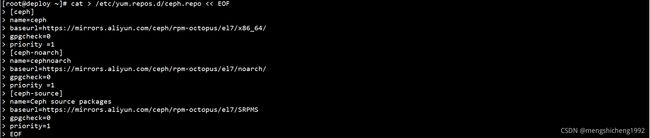

2. Configure the package mirror repository

cat > /etc/yum.repos.d/ceph.repo << EOF

[ceph]

name=ceph

baseurl=https://mirrors.aliyun.com/ceph/rpm-octopus/el7/x86_64/

gpgcheck=0

priority=1

[ceph-noarch]

name=cephnoarch

baseurl=https://mirrors.aliyun.com/ceph/rpm-octopus/el7/noarch/

gpgcheck=0

priority=1

[ceph-source]

name=Ceph source packages

baseurl=https://mirrors.aliyun.com/ceph/rpm-octopus/el7/SRPMS

gpgcheck=0

priority=1

EOF

3. Configure name resolution

echo 192.168.0.10 deploy.ceph.local >> /etc/hosts

echo 192.168.0.11 node1.ceph.local >> /etc/hosts

echo 192.168.0.12 node2.ceph.local >> /etc/hosts

echo 192.168.0.13 node3.ceph.local >> /etc/hosts
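
The `echo ... >> /etc/hosts` lines above append a new entry every time they run, so running them twice leaves duplicates. A minimal sketch of an idempotent variant follows. The helper name `add_host_entry` and the `HOSTS_FILE` variable are illustrative and not part of the original procedure.

```shell
# Hypothetical helper: append "IP NAME" to the hosts file only if NAME is not listed yet.
HOSTS_FILE="${HOSTS_FILE:-/etc/hosts}"

add_host_entry() {
    local ip="$1" name="$2"
    # -F: fixed string, -w: whole-word match, -q: no output, exit status only
    if ! grep -qwF -- "$name" "$HOSTS_FILE" 2>/dev/null; then
        echo "$ip $name" >> "$HOSTS_FILE"
    fi
}
```

It would be called once per host, for example `add_host_entry 192.168.0.11 node1.ceph.local`.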

4. Configure NTP

yum -y install chrony

systemctl start chronyd

systemctl enable chronyd

systemctl status chronyd

chronyc sources

5. Disable the firewall and SELinux

systemctl stop firewalld

systemctl disable firewalld

setenforce 0

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

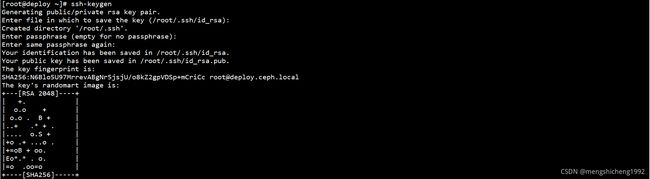

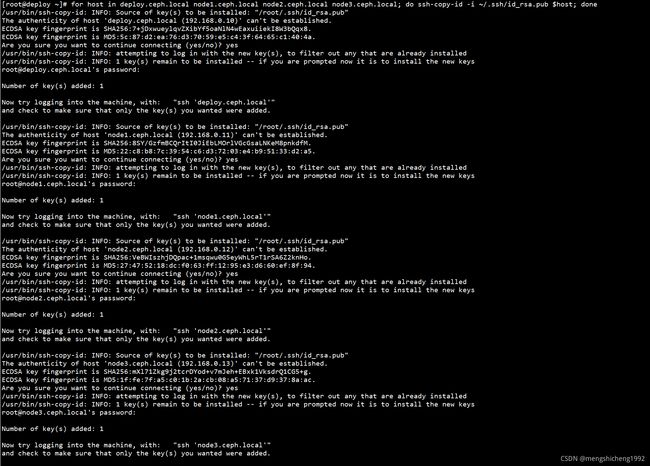
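
The `sed` expression in this step only rewrites the line when the configured mode is `enforcing`; a host already set to `permissive` would be left unchanged. A sketch of a variant that rewrites whatever mode is configured, assuming the standard `SELINUX=` key in `/etc/selinux/config` (the function name `disable_selinux_config` is illustrative):

```shell
disable_selinux_config() {
    # Optional argument so the function can be tried on a copy of the file first
    local cfg="${1:-/etc/selinux/config}"
    # Anchoring on ^SELINUX= leaves the SELINUXTYPE= line and comment lines alone
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}
```

The file change applies from the next reboot; `setenforce 0` covers the running system until then.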

6. Set up passwordless SSH login

On the Deploy node, set up passwordless SSH access to all nodes:

ssh-keygen

for host in deploy.ceph.local node1.ceph.local node2.ceph.local node3.ceph.local; do ssh-copy-id -i ~/.ssh/id_rsa.pub $host; done
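
Before moving on, it can help to confirm that key-based login really works for every host, since ceph-deploy depends on it. A small sketch follows; `check_ssh` is an illustrative name. `BatchMode=yes` makes `ssh` fail instead of prompting for a password.

```shell
check_ssh() {
    # Prints one status line per host; returns non-zero if any host failed
    local rc=0 host
    for host in "$@"; do
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true; then
            echo "OK   $host"
        else
            echo "FAIL $host"
            rc=1
        fi
    done
    return "$rc"
}
```

For example: `check_ssh deploy.ceph.local node1.ceph.local node2.ceph.local node3.ceph.local`.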

2. Create the Ceph Storage Cluster

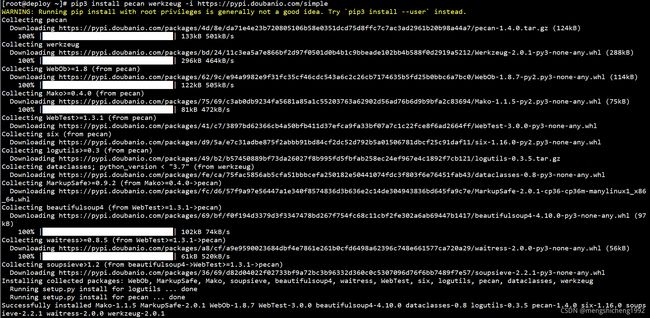

1. Install Python modules

On the Deploy node and the Node nodes, install the Python modules:

pip3 install pecan werkzeug -i https://pypi.doubanio.com/simple

2. Install ceph-deploy

On the Deploy node, install ceph-deploy:

yum -y install ceph-deploy python-setuptools

3. Create a working directory

On the Deploy node, create a directory to hold the configuration files and keys that ceph-deploy generates:

mkdir /root/cluster/

4. Create the cluster

On the Deploy node, create the cluster:

cd /root/cluster/

ceph-deploy new deploy.ceph.local

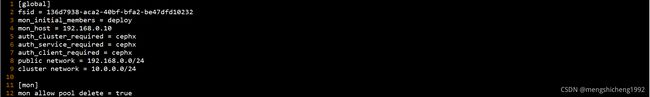

5. Edit the configuration file

On the Deploy node, edit /root/cluster/ceph.conf to specify the public (front-end) and cluster (back-end) networks:

public network = 192.168.0.0/24

cluster network = 10.0.0.0/24

[mon]

mon allow pool delete = true
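
As a sketch, the same settings can be appended from the shell instead of an editor. This assumes the file generated by `ceph-deploy new` contains only a `[global]` section, so the appended network keys land in `[global]` and the `[mon]` section follows it; check the file before relying on that. `append_network_conf` is an illustrative name.

```shell
append_network_conf() {
    local conf="${1:-/root/cluster/ceph.conf}"
    # The quoted 'EOF' keeps the text literal (no variable expansion)
    cat >> "$conf" << 'EOF'
public network = 192.168.0.0/24
cluster network = 10.0.0.0/24

[mon]
mon allow pool delete = true
EOF
}
```

Running it more than once would duplicate the settings, so it is meant for a freshly generated file only.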

If the configuration file is changed later, push it to every node and restart the affected daemons:

ceph-deploy --overwrite-conf config push deploy.ceph.local

ceph-deploy --overwrite-conf config push node1.ceph.local

ceph-deploy --overwrite-conf config push node2.ceph.local

ceph-deploy --overwrite-conf config push node3.ceph.local
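
`ceph-deploy config push` accepts several hosts in one invocation, so the four commands can be collapsed into one. A sketch follows, with the host list held in an illustrative `NODES` variable. `CEPH_DEPLOY` is a hypothetical override that allows a dry run by substituting `echo`.

```shell
NODES="deploy.ceph.local node1.ceph.local node2.ceph.local node3.ceph.local"

push_conf() {
    # Run from /root/cluster/. $NODES is unquoted on purpose so that it
    # splits into one argument per host.
    ${CEPH_DEPLOY:-ceph-deploy} --overwrite-conf config push $NODES
}
```

`CEPH_DEPLOY=echo push_conf` prints the command without running it. The daemons still have to be restarted on each node afterwards, for example with `systemctl restart ceph-mon.target` on a monitor host.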

6. Install Ceph

From the Deploy node, install Ceph on each node:

ceph-deploy install deploy.ceph.local

ceph-deploy install node1.ceph.local

ceph-deploy install node2.ceph.local

ceph-deploy install node3.ceph.local

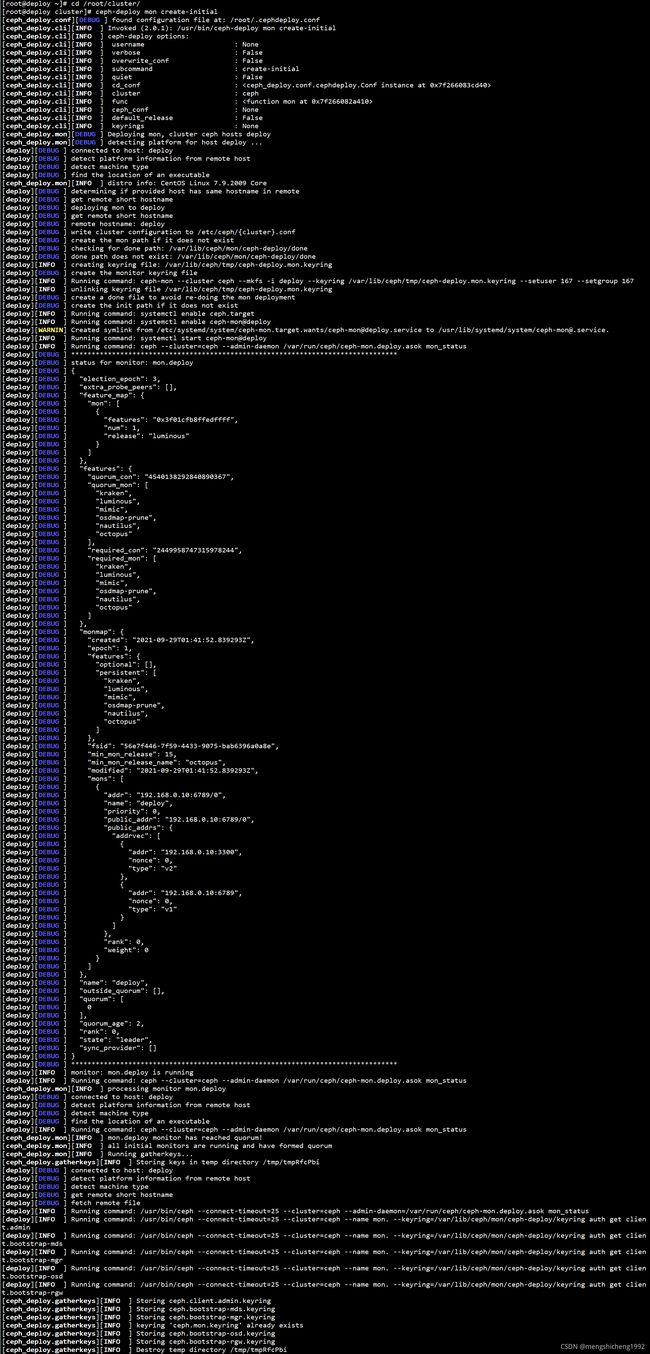

7. Initialize the MON node

On the Deploy node, initialize the MON node:

cd /root/cluster/

ceph-deploy mon create-initial

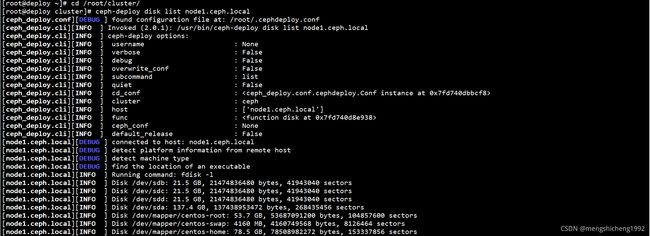

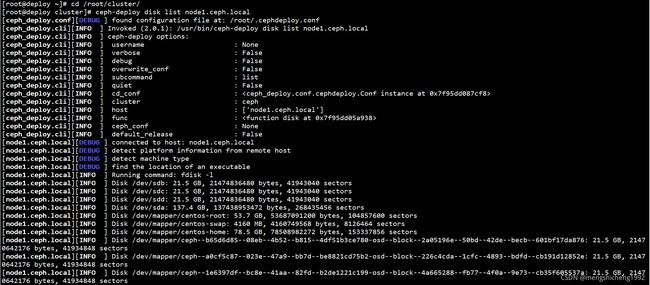

8. List the node disks

On the Deploy node, list the disks of each node:

cd /root/cluster/

ceph-deploy disk list node1.ceph.local

ceph-deploy disk list node2.ceph.local

ceph-deploy disk list node3.ceph.local

9. Wipe the node disks

On the Deploy node, wipe the disks of each node:

cd /root/cluster/

ceph-deploy disk zap node1.ceph.local /dev/sdb

ceph-deploy disk zap node1.ceph.local /dev/sdc

ceph-deploy disk zap node1.ceph.local /dev/sdd

ceph-deploy disk zap node2.ceph.local /dev/sdb

ceph-deploy disk zap node2.ceph.local /dev/sdc

ceph-deploy disk zap node2.ceph.local /dev/sdd

ceph-deploy disk zap node3.ceph.local /dev/sdb

ceph-deploy disk zap node3.ceph.local /dev/sdc

ceph-deploy disk zap node3.ceph.local /dev/sdd

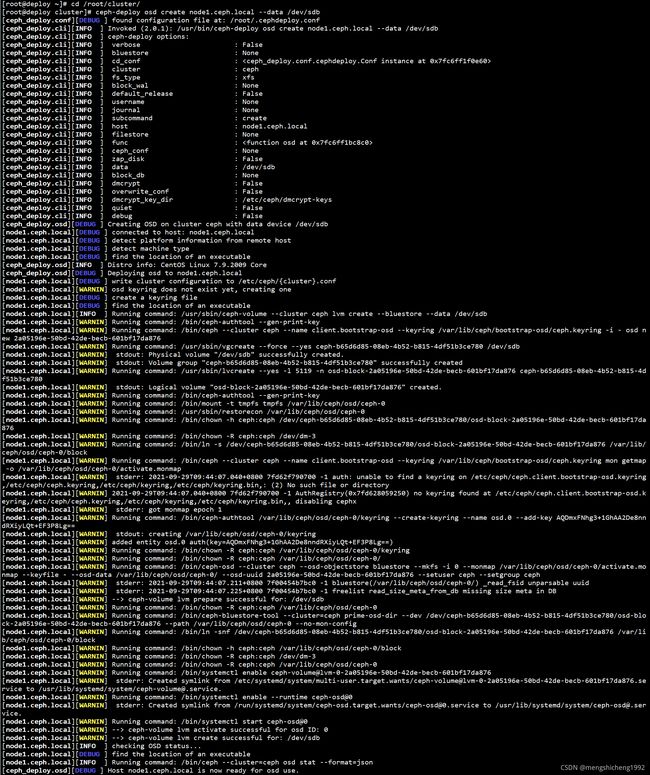

10. Create the OSDs

On the Deploy node, create the OSDs:

cd /root/cluster/

ceph-deploy osd create node1.ceph.local --data /dev/sdb

ceph-deploy osd create node1.ceph.local --data /dev/sdc

ceph-deploy osd create node1.ceph.local --data /dev/sdd

ceph-deploy osd create node2.ceph.local --data /dev/sdb

ceph-deploy osd create node2.ceph.local --data /dev/sdc

ceph-deploy osd create node2.ceph.local --data /dev/sdd

ceph-deploy osd create node3.ceph.local --data /dev/sdb

ceph-deploy osd create node3.ceph.local --data /dev/sdc

ceph-deploy osd create node3.ceph.local --data /dev/sdd
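
Steps 9 and 10 repeat the same two commands for three disks on each of three nodes. A sketch of a loop that generates them, assuming every node exposes the same `/dev/sdb`, `/dev/sdc` and `/dev/sdd` devices as in the commands above. The function name and the `RUN` prefix are illustrative. With `RUN=echo` the commands are only printed, which is a sensible first pass because `disk zap` destroys data.

```shell
OSD_NODES="node1.ceph.local node2.ceph.local node3.ceph.local"
OSD_DISKS="/dev/sdb /dev/sdc /dev/sdd"

prepare_osds() {
    # Run from /root/cluster/. Stops at the first command that fails.
    local node disk
    for node in $OSD_NODES; do
        for disk in $OSD_DISKS; do
            # Wipe the disk, then create an OSD on it
            ${RUN:-} ceph-deploy disk zap "$node" "$disk" &&
            ${RUN:-} ceph-deploy osd create "$node" --data "$disk" || return 1
        done
    done
}
```

Unlike the manual sequence, this wipes and creates disk by disk rather than wiping all disks first; the set of commands issued is the same.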

11. Check disk and partition information

On the Deploy node, check the disk and partition information:

cd /root/cluster/

ceph-deploy disk list node1.ceph.local

ceph-deploy disk list node2.ceph.local

ceph-deploy disk list node3.ceph.local

On the Node nodes, check the disk and partition information:

lsblk

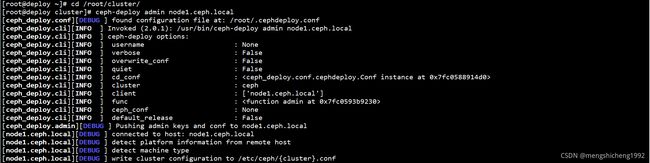

12. Copy the configuration and keys

Copy the configuration file and the admin key from the Deploy node to the Node nodes:

cd /root/cluster/

ceph-deploy admin node1.ceph.local

ceph-deploy admin node2.ceph.local

ceph-deploy admin node3.ceph.local

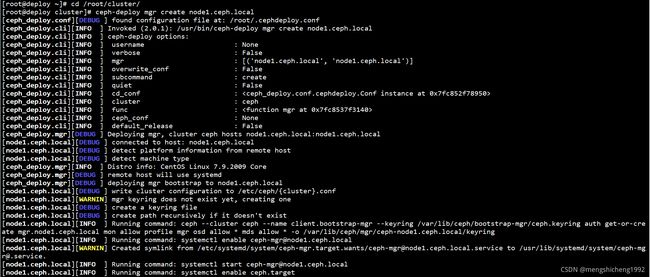

13. Initialize the MGR nodes

On the Deploy node, initialize the MGR nodes:

cd /root/cluster/

ceph-deploy mgr create node1.ceph.local

ceph-deploy mgr create node2.ceph.local

ceph-deploy mgr create node3.ceph.local

14. Copy the keyring files

On the Deploy node, copy the keyring files into the Ceph configuration directory (/etc/ceph/):

cp /root/cluster/*.keyring /etc/ceph/

15. Disable insecure mode

On the Deploy node, disable insecure mode (insecure global_id reclaim):

ceph config set mon auth_allow_insecure_global_id_reclaim false

16. Check the cluster status

On the Deploy node, check the Ceph status:

ceph -s

ceph health

On the Deploy node, check the OSD status:

ceph osd stat

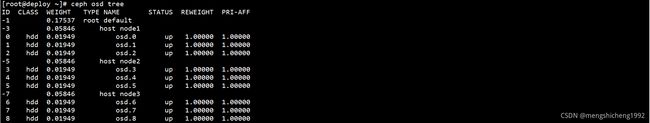

On the Deploy node, view the OSD tree:

ceph osd tree
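
Right after the OSDs are created, the cluster may report a non-OK state for a short while as placement groups settle. A sketch of a polling helper built on `ceph health`; the function name, retry count and interval are illustrative choices.

```shell
wait_for_health_ok() {
    # $1 = maximum number of checks (default 30)
    # $2 = seconds to wait between checks (default 10)
    local tries="${1:-30}" delay="${2:-10}" status i=1
    while [ "$i" -le "$tries" ]; do
        status="$(ceph health 2>/dev/null)"
        case "$status" in
            HEALTH_OK*) echo "cluster healthy after $i check(s)"; return 0 ;;
        esac
        echo "attempt $i/$tries: ${status:-no response}"
        sleep "$delay"
        i=$((i + 1))
    done
    return 1
}
```

If the function gives up, `ceph -s` and `ceph osd tree` show which component is holding the cluster back.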