OC类的探索(三) - cache_t分析

前言

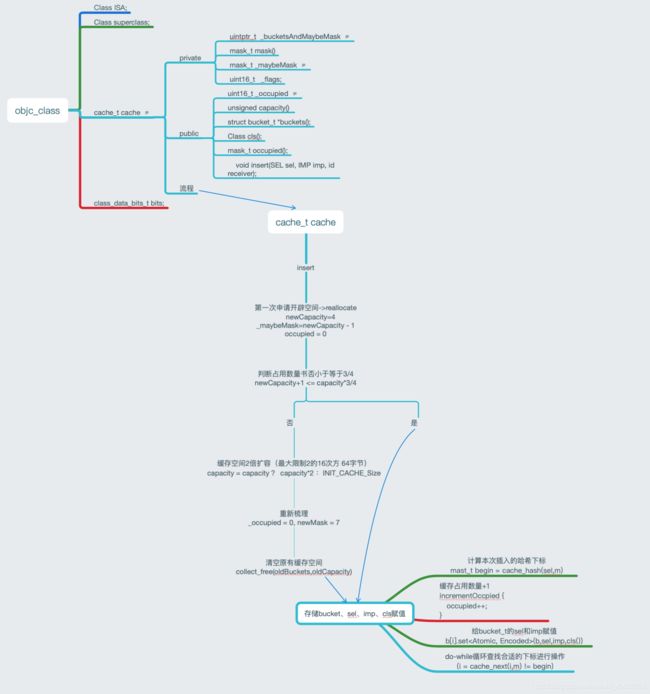

在之前OC类的探索这篇文章中,我们讲到了NSObject的爸爸是objc_class,而它包含以下信息

// Class ISA;

Class superclass;

cache_t cache; // formerly cache pointer and vtable

class_data_bits_t bits; // class_rw_t * plus custom rr/alloc flags

今天,我们就来探索一下cache_t。

一、知识准备

1.数组:

数组是用于储存多个相同类型数据的集合。主要有以下优缺点:

优点:访问某个下标的内容很方便,速度快

缺点:数组中进行插入、删除等操作比较繁琐耗时

2.链表:

链表是一种物理存储单元上非连续、非顺序的存储结构,数据元素的逻辑顺序是通过链表中的指针链接次序实现的。主要有以下优缺点:

优点:插入或者删除某个节点的元素很简单方便

缺点:查找某个位置节点的元素时需要挨个访问,比较耗时

3.哈希表:

是根据关键码值而直接进行访问的数据结构。主要有以下优缺点:

优点:1、访问某个元素速度很快 2、插入删除操作也很方便

缺点:需要经过一系列运算比较复杂

二、cache_t解读

1.cache_t源码

先看看cache_t的数据结构

struct cache_t {

private:

explicit_atomic<uintptr_t> _bucketsAndMaybeMask; // 8

union {

struct {

explicit_atomic<mask_t> _maybeMask; // 4

#if __LP64__

uint16_t _flags; // 2

#endif

uint16_t _occupied; // 2

};

explicit_atomic<preopt_cache_t *> _originalPreoptCache; // 8

};

//缓存为空缓存,第一次判断使用

bool isConstantEmptyCache() const;

bool canBeFreed() const;

// 可使用总容量,为capacity - 1

mask_t mask() const;

// 两倍扩容

void incrementOccupied();

// 设置buckets和mask

void setBucketsAndMask(struct bucket_t *newBuckets, mask_t newMask);

// 重新开辟内存

void reallocate(mask_t oldCapacity, mask_t newCapacity, bool freeOld);

// 根据oldCapacity回收oldBuckets

void collect_free(bucket_t *oldBuckets, mask_t oldCapacity);

public:

// 开辟的总容量

unsigned capacity() const;

// 获取buckets

struct bucket_t *buckets() const;

// 获取class

Class cls() const;

// 获取已缓存的数量

mask_t occupied() const;

// 将调用的方法插入到buckets所在的内存区域

void insert(SEL sel, IMP imp, id receiver);

`````省略n多代码·······

}

2.cache_t 成员变量

_bucketsAndMaybeMask:根据不同架构决定存放不同的信息,X86_64存放buckets,arm64高16位存储mask,低48位buckets。

_maybeMask:当前缓存区的容量,arm64架构下不使用。

_occupied:当前缓存的方法个数。

mac下验证 (x86_64)

(lldb) x/4gx MHPerson.class

0x100008618: 0x00000001000085f0 0x000000010036a140

0x100008628: 0x0000000101304e70 0x0001803000000003

(lldb) p (cache_t *) 0x0000000101304e70

(cache_t *) $1 = 0x0000000101304e70

(lldb) p *$1

(cache_t) $2 = {

_bucketsAndMaybeMask = {

std::__1::atomic<unsigned long> = {

Value = 0

}

}

= {

= {

_maybeMask = {

std::__1::atomic<unsigned int> = {

Value = 0

}

}

_flags = 0

_occupied = 0

}

_originalPreoptCache = {

std::__1::atomic<preopt_cache_t *> = {

Value = nil

}

}

}

}

3.cache_t函数

3.1 cache_t插入

3.1.1 代码:

void cache_t::insert(SEL sel, IMP imp, id receiver)

{

runtimeLock.assertLocked();

// Never cache before +initialize is done

if (slowpath(!cls()->isInitialized())) {

return;

}

if (isConstantOptimizedCache()) {

_objc_fatal("cache_t::insert() called with a preoptimized cache for %s",

cls()->nameForLogging());

}

#if DEBUG_TASK_THREADS

return _collecting_in_critical();

#else

#if CONFIG_USE_CACHE_LOCK

mutex_locker_t lock(cacheUpdateLock);

#endif

ASSERT(sel != 0 && cls()->isInitialized());

// Use the cache as-is if until we exceed our expected fill ratio.

mask_t newOccupied = occupied() + 1;

unsigned oldCapacity = capacity(), capacity = oldCapacity;

if (slowpath(isConstantEmptyCache())) {

// Cache is read-only. Replace it.

if (!capacity) capacity = INIT_CACHE_SIZE;

reallocate(oldCapacity, capacity, /* freeOld */false);

}

else if (fastpath(newOccupied + CACHE_END_MARKER <= cache_fill_ratio(capacity))) {

// Cache is less than 3/4 or 7/8 full. Use it as-is.

}

#if CACHE_ALLOW_FULL_UTILIZATION

else if (capacity <= FULL_UTILIZATION_CACHE_SIZE && newOccupied + CACHE_END_MARKER <= capacity) {

// Allow 100% cache utilization for small buckets. Use it as-is.

}

#endif

else {

capacity = capacity ? capacity * 2 : INIT_CACHE_SIZE;

if (capacity > MAX_CACHE_SIZE) {

capacity = MAX_CACHE_SIZE;

}

reallocate(oldCapacity, capacity, true);

}

bucket_t *b = buckets();

mask_t m = capacity - 1;

mask_t begin = cache_hash(sel, m);

mask_t i = begin;

// Scan for the first unused slot and insert there.

// There is guaranteed to be an empty slot.

do {

if (fastpath(b[i].sel() == 0)) {

incrementOccupied();

b[i].set<Atomic, Encoded>(b, sel, imp, cls());

return;

}

if (b[i].sel() == sel) {

// The entry was added to the cache by some other thread

// before we grabbed the cacheUpdateLock.

return;

}

} while (fastpath((i = cache_next(i, m)) != begin));

bad_cache(receiver, (SEL)sel);

#endif // !DEBUG_TASK_THREADS

}

3.1.2 解读

- 获取当前已缓存方法个数(第一次为0),然后+1。

- 从获取缓存区容量,第一次为0。

- 判断是否为第一次缓存方法,第一次缓存就开辟capacity(1 << INIT_CACHE_SIZE_LOG2(X86_64为2,arm64为1)) * sizeof(bucket_t)大小的内存空间,将bucket_t *首地址存入_bucketsAndMaybeMask,将newCapacity - 1的mask存入_maybeMask,_occupied设置为0。

- 不是第一次缓存,就判断是否需要扩容(已缓存容量超过总容量的3/4或者7/8),需要扩容就双倍扩容(但不能大于最大值),然后像第三步一样重新开辟内存,并且回收旧缓存区的内存。

- 哈希算法算出方法缓存的位置,do{} while()循环判断当前位置是否可存,如果哈希冲突了,就一直再哈希,直到找到可存入的位置位置,如果找完都未找到就调用bad_cache函数。

3.1.3 reallocate

代码

ALWAYS_INLINE

void cache_t::reallocate(mask_t oldCapacity, mask_t newCapacity, bool freeOld)

{

bucket_t *oldBuckets = buckets();

bucket_t *newBuckets = allocateBuckets(newCapacity);

// Cache's old contents are not propagated.

// This is thought to save cache memory at the cost of extra cache fills.

// fixme re-measure this

ASSERT(newCapacity > 0);

ASSERT((uintptr_t)(mask_t)(newCapacity-1) == newCapacity-1);

setBucketsAndMask(newBuckets, newCapacity - 1);

if (freeOld) {

collect_free(oldBuckets, oldCapacity);

}

}

解读

重新开辟newCapacity * sizeof(bucket_t)大小的内存空间,将bucket_t *首地址存入_bucketsAndMaybeMask,将newCapacity - 1的mask存入_maybeMask,freeOld代表是否回收旧内存,第一次插入方法时为false,后续扩容时为true,调用collect_free函数清空、回收。

3.1.4 cache_fill_ratio

代码

#define CACHE_END_MARKER 1

// Historical fill ratio of 75% (since the new objc runtime was introduced).

static inline mask_t cache_fill_ratio(mask_t capacity) {

return capacity * 3 / 4;

}

解读

重新开辟newCapacity * sizeof(bucket_t)大小的内存空间,将bucket_t *首地址存入_bucketsAndMaybeMask,将newCapacity - 1的mask存入_maybeMask,freeOld代表是否回收旧内存,第一次插入方法时为false,后续扩容时为true,调用collect_free函数清空、回收。

3.1.5 allocateBuckets

代码

#if CACHE_END_MARKER

bucket_t *cache_t::endMarker(struct bucket_t *b, uint32_t cap)

{

return (bucket_t *)((uintptr_t)b + bytesForCapacity(cap)) - 1;

}

bucket_t *cache_t::allocateBuckets(mask_t newCapacity)

{

// Allocate one extra bucket to mark the end of the list.

// This can't overflow mask_t because newCapacity is a power of 2.

bucket_t *newBuckets = (bucket_t *)calloc(bytesForCapacity(newCapacity), 1);

bucket_t *end = endMarker(newBuckets, newCapacity);

#if __arm__

// End marker's sel is 1 and imp points BEFORE the first bucket.

// This saves an instruction in objc_msgSend.

end->set<NotAtomic, Raw>(newBuckets, (SEL)(uintptr_t)1, (IMP)(newBuckets - 1), nil);

#else

// End marker's sel is 1 and imp points to the first bucket.

end->set<NotAtomic, Raw>(newBuckets, (SEL)(uintptr_t)1, (IMP)newBuckets, nil);

#endif

if (PrintCaches) recordNewCache(newCapacity);

return newBuckets;

}

#else

// M1版iMac走这里,因为M1首次创建的容量为2,比率为7/8,所以没有设置endMarker

bucket_t *cache_t::allocateBuckets(mask_t newCapacity)

{

if (PrintCaches) recordNewCache(newCapacity);

return (bucket_t *)calloc(bytesForCapacity(newCapacity), 1);

}

#endif

解读

开辟newCapacity * sizeof(bucket_t)大小的内存空间,创建新的bucket_t指针,CACHE_END_MARKER为1时(__arm__ || __x86_64__ || __i386__架构),会存储end标记,即在Capacity - 1的位置存储一个bucket_t指针,sel为1,imp根据架构为newBuckets(缓存区首地址)或者newBuckets - 1,class为nil。

扩容策略说明:

为什么要清空oldBuckets,而不是空间扩容,然后在后面附加新的缓存呢?

第一,如果将旧buckets的缓存都拿出来,放入新的buckets,耗费性能和时间;

第二,苹果缓存策略认为越新越好。举例说明,A方法被调用了一次之后,被继续调用的概率极低,

扩容之后仍然保持缓存没有意义,如果再次调用A方法,会再一次进行缓存,直到下一次扩容之前;

第三,防止缓存的方法无限增多,导致方法查找缓慢。

3.1.6 setBucketsAndMask

代码

void cache_t::setBucketsAndMask(struct bucket_t *newBuckets, mask_t newMask)

{

// objc_msgSend uses mask and buckets with no locks.

// It is safe for objc_msgSend to see new buckets but old mask.

// (It will get a cache miss but not overrun the buckets' bounds).

// It is unsafe for objc_msgSend to see old buckets and new mask.

// Therefore we write new buckets, wait a lot, then write new mask.

// objc_msgSend reads mask first, then buckets.

#ifdef __arm__

// ensure other threads see buckets contents before buckets pointer

mega_barrier();

_bucketsAndMaybeMask.store((uintptr_t)newBuckets, memory_order_relaxed);

// ensure other threads see new buckets before new mask

mega_barrier();

_maybeMask.store(newMask, memory_order_relaxed);

_occupied = 0;

#elif __x86_64__ || i386

// ensure other threads see buckets contents before buckets pointer

_bucketsAndMaybeMask.store((uintptr_t)newBuckets, memory_order_release);

// ensure other threads see new buckets before new mask

_maybeMask.store(newMask, memory_order_release);

_occupied = 0;

#else

#error Don't know how to do setBucketsAndMask on this architecture.

#endif

}

解读

将bucket_t *首地址存入_bucketsAndMaybeMask。 将newCapacity - 1的mask存入_maybeMask。 _occupied设为0,因为刚刚设置buckets,还没有真正缓存方法。

3.1.7 collect_free

// 将传入的内存地址的内容清空,回收内存。

void cache_t::collect_free(bucket_t *data, mask_t capacity)

{

#if CONFIG_USE_CACHE_LOCK

cacheUpdateLock.assertLocked();

#else

runtimeLock.assertLocked();

#endif

if (PrintCaches) recordDeadCache(capacity);

_garbage_make_room ();

garbage_byte_size += cache_t::bytesForCapacity(capacity);

garbage_refs[garbage_count++] = data;

cache_t::collectNolock(false);

}

3.1.8 cache_hash

// 哈希算法,计算方法插入的位置。

static inline mask_t cache_hash(SEL sel, mask_t mask)

{

uintptr_t value = (uintptr_t)sel;

#if CONFIG_USE_PREOPT_CACHES

value ^= value >> 7;

#endif

return (mask_t)(value & mask);

}

3.1.9 cache_next

// 哈希算法,用于哈希冲突后,再次计算方法插入的位置。

#if CACHE_END_MARKER

static inline mask_t cache_next(mask_t i, mask_t mask) {

return (i+1) & mask;

}

#elif __arm64__

static inline mask_t cache_next(mask_t i, mask_t mask) {

return i ? i-1 : mask;

}

#else

#error unexpected configuration

#endif

二、bucket_t解读

1.bucket_t源码

struct bucket_t {

private:

// IMP-first is better for arm64e ptrauth and no worse for arm64.

// SEL-first is better for armv7* and i386 and x86_64.

#if __arm64__

explicit_atomic<uintptr_t> _imp; // imp地址,以uintptr_t(unsigned long)格式存储

explicit_atomic<SEL> _sel; // sel

#else

explicit_atomic<SEL> _sel;

explicit_atomic<uintptr_t> _imp;

#endif

// imp编码,(uintptr_t)newImp ^ (uintptr_t)cls

// imp地址转10进制 ^ class地址转10进制

// imp以uintptr_t(unsigned long)格式存储在bucket_t中

// Sign newImp, with &_imp, newSel, and cls as modifiers.

uintptr_t encodeImp(UNUSED_WITHOUT_PTRAUTH bucket_t *base, IMP newImp, UNUSED_WITHOUT_PTRAUTH SEL newSel, Class cls) const {

if (!newImp) return 0;

#if CACHE_IMP_ENCODING == CACHE_IMP_ENCODING_PTRAUTH

return (uintptr_t)

ptrauth_auth_and_resign(newImp,

ptrauth_key_function_pointer, 0,

ptrauth_key_process_dependent_code,

modifierForSEL(base, newSel, cls));

#elif CACHE_IMP_ENCODING == CACHE_IMP_ENCODING_ISA_XOR

// imp地址转10进制 ^ class地址转10进制

return (uintptr_t)newImp ^ (uintptr_t)cls;

#elif CACHE_IMP_ENCODING == CACHE_IMP_ENCODING_NONE

return (uintptr_t)newImp;

#else

#error Unknown method cache IMP encoding.

#endif

}

public:

// 返回sel

inline SEL sel() const { return _sel.load(memory_order_relaxed); }

// imp解码,(IMP)(imp ^ (uintptr_t)cls)

// imp地址10进制 ^ class地址10进制,再转换成IMP类型

// 原理:c = a ^ b; a = c ^ b; -> b ^ a ^ b = a

inline IMP imp(UNUSED_WITHOUT_PTRAUTH bucket_t *base, Class cls) const {

uintptr_t imp = _imp.load(memory_order_relaxed);

if (!imp) return nil;

#if CACHE_IMP_ENCODING == CACHE_IMP_ENCODING_PTRAUTH

SEL sel = _sel.load(memory_order_relaxed);

return (IMP)

ptrauth_auth_and_resign((const void *)imp,

ptrauth_key_process_dependent_code,

modifierForSEL(base, sel, cls),

ptrauth_key_function_pointer, 0);

#elif CACHE_IMP_ENCODING == CACHE_IMP_ENCODING_ISA_XOR

// imp地址10进制 ^ class地址10进制,再转换成IMP类型

return (IMP)(imp ^ (uintptr_t)cls);

#elif CACHE_IMP_ENCODING == CACHE_IMP_ENCODING_NONE

return (IMP)imp;

#else

#error Unknown method cache IMP encoding.

#endif

}

// 此处只是声明,实现请看下面函数解析

void set(bucket_t *base, SEL newSel, IMP newImp, Class cls);

···· 省略n行代码·····

}

2.bucket_t成员变量

- _sel,方法sel。

- _imp,方法实现地址的10进制,需要^上class地址的10进制,然后再转换成IMP类型。

3.bucket_t函数

3.1 sel

// 获取bucket_的sel。

inline SEL sel() const {

// 返回sel

return _sel.load(memory_order_relaxed);

}

3.2 encodeImp

代码

// Sign newImp, with &_imp, newSel, and cls as modifiers.

uintptr_t encodeImp(UNUSED_WITHOUT_PTRAUTH bucket_t *base, IMP newImp, UNUSED_WITHOUT_PTRAUTH SEL newSel, Class cls) const {

if (!newImp) return 0;

#if CACHE_IMP_ENCODING == CACHE_IMP_ENCODING_PTRAUTH

return (uintptr_t)

ptrauth_auth_and_resign(newImp,

ptrauth_key_function_pointer, 0,

ptrauth_key_process_dependent_code,

modifierForSEL(base, newSel, cls));

#elif CACHE_IMP_ENCODING == CACHE_IMP_ENCODING_ISA_XOR

// imp地址转10进制 ^ class地址转10进制

return (uintptr_t)newImp ^ (uintptr_t)cls;

#elif CACHE_IMP_ENCODING == CACHE_IMP_ENCODING_NONE

return (uintptr_t)newImp;

#else

#error Unknown method cache IMP encoding.

#endif

}

解读

- IMP编码,imp地址10进制^上class地址10进制。imp以uintptr_t(unsigned long)格式存储在bucket_t中。

- imp编码,(uintptr_t)newImp ^ (uintptr_t)cls

- imp地址转10进制 ^ class地址转10进制

- imp以 uintptr_t(unsigned long)格式存储在bucket_t中

3.3 imp函数解析

代码

inline IMP imp(UNUSED_WITHOUT_PTRAUTH bucket_t *base, Class cls) const {

uintptr_t imp = _imp.load(memory_order_relaxed);

if (!imp) return nil;

#if CACHE_IMP_ENCODING == CACHE_IMP_ENCODING_PTRAUTH

SEL sel = _sel.load(memory_order_relaxed);

return (IMP)

ptrauth_auth_and_resign((const void *)imp,

ptrauth_key_process_dependent_code,

modifierForSEL(base, sel, cls),

ptrauth_key_function_pointer, 0);

#elif CACHE_IMP_ENCODING == CACHE_IMP_ENCODING_ISA_XOR

// imp地址10进制 ^ class地址10进制,再转换成IMP类型

return (IMP)(imp ^ (uintptr_t)cls);

#elif CACHE_IMP_ENCODING == CACHE_IMP_ENCODING_NONE

return (IMP)imp;

#else

#error Unknown method cache IMP encoding.

#endif

}

解读

- IMP解码并返回,imp地址10进制^上class地址10进制,再转换成IMP类型(跟上面的编码函数组成对称编解码)。

- 编解码原理:a两次^ b,还是等于a,即:c = a ^ b; a = c ^ b; -> b ^ a ^ b = a。

- 这里的class就是算法中的salt(盐),至于为什么用class作为salt,因为这些imp都归属于class,所以用class作为salt。

- imp解码,(IMP)(imp ^ (uintptr_t)cls)

// imp地址10进制 ^ class地址10进制,再转换成IMP类型

// 原理:b ^ a ^ b = a

断点验证

- 在main函数下断点,运行,在

inline IMP imp(UNUSED_WITHOUT_PTRAUTH bucket_t *base, Class cls) const方法下断点,进入此方法

(lldb) p imp

(uintptr_t) $0 = 48728

(lldb) p (IMP)(imp ^ (uintptr_t)cls) // 解码

(IMP) $1 = 0x0000000100003840 (KCObjcBuild`-[MHPerson sayHello])

(lldb) p (uintptr_t)cls //

(uintptr_t) $2 = 4295001624

(lldb) p 48728 ^ 4295001624

(long) $3 = 4294981696

(lldb) p/x 4294981696

(long) $4 = 0x0000000100003840

(lldb) p (IMP) 0x0000000100003840

// 验证

(IMP) $5 = 0x0000000100003840 (KCObjcBuild`-[MHPerson sayHello])

(lldb) p 4294981696 ^ 4295001624

// 编码验证

(long) $6 = 48728

2.3.4 bucket_t::set

// 为bucket_t设置sel、imp和class。

void bucket_t::set(bucket_t *base, SEL newSel, IMP newImp, Class cls)

{

ASSERT(_sel.load(memory_order_relaxed) == 0 ||

_sel.load(memory_order_relaxed) == newSel);

// objc_msgSend uses sel and imp with no locks.

// It is safe for objc_msgSend to see new imp but NULL sel

// (It will get a cache miss but not dispatch to the wrong place.)

// It is unsafe for objc_msgSend to see old imp and new sel.

// Therefore we write new imp, wait a lot, then write new sel.

uintptr_t newIMP = (impEncoding == Encoded

? encodeImp(base, newImp, newSel, cls)

: (uintptr_t)newImp);

if (atomicity == Atomic) {

_imp.store(newIMP, memory_order_relaxed);

if (_sel.load(memory_order_relaxed) != newSel) {

#ifdef __arm__

mega_barrier();

_sel.store(newSel, memory_order_relaxed);

#elif __x86_64__ || __i386__

_sel.store(newSel, memory_order_release);

#else

#error Don't know how to do bucket_t::set on this architecture.

#endif

}

} else {

_imp.store(newIMP, memory_order_relaxed);

_sel.store(newSel, memory_order_relaxed);

}

}

三、lldb验证cache_t结构

1.代码断点如下

2.步骤

(lldb) p/x pclass

(Class) $1 = 0x0000000100008610 MHPerson

(lldb) p (cache_t *)(0x0000000100008610 + 0x10) // (类型转换) 偏移16字节,拿到cache_t指针(isa8字节,superclass8字节)

(cache_t *) $2 = 0x0000000100008620

(lldb) p *$2 // cache输出

(cache_t) $3 = {

_bucketsAndMaybeMask = {

std::__1::atomic<unsigned long> = {

Value = 4298515360

}

}

= {

= {

_maybeMask = {

std::__1::atomic<unsigned int> = {

Value = 0 // _maybeMask-缓存容量为0,因为当前还未调用方法,所以还未开辟缓存空间

}

}

_flags = 32816

_occupied = 0 // _occupied-方法缓存个数,当前还未调用方法

}

_originalPreoptCache = {

std::__1::atomic<preopt_cache_t *> = {

Value = 0x0000803000000000

}

}

}

}

(lldb)

(lldb) p [p sayHello] // lldb调用方法

2021-06-29 14:54:29.435481+0800 KCObjcBuild[58858:5603051] age - 19831

(lldb) p *$2

(cache_t) $4 = {

_bucketsAndMaybeMask = {

std::__1::atomic<unsigned long> = {

Value = 4302342320

}

}

= {

= {

_maybeMask = {

std::__1::atomic<unsigned int> = {

// _maybeMask-缓存容量为7,

// x86_64架构第一次开辟容量应该为4, value结果应该为3

// 重新运行直接走sayh是3

Value = 7

}

}

_flags = 32816

_occupied = 3 //_occupied-方法缓存个数 因为在方法中调用了setter getter 方法 所以为3 如果不调用 应该为1

}

_originalPreoptCache = {

std::__1::atomic<preopt_cache_t *> = {

Value = 0x0003803000000007

}

}

}

}

// 内存平移方式取值

// 按顺序取出bucket_t

(lldb) p $4.buckets()[0]

(bucket_t) $5 = {

_sel = {

std::__1::atomic<objc_selector *> = (null) {

Value = nil

}

}

_imp = {

std::__1::atomic<unsigned long> = {

Value = 0

}

}

}

(lldb) p $4.buckets()[1]

(bucket_t) $6 = {

_sel = {

std::__1::atomic<objc_selector *> = "" {

Value = ""

}

}

_imp = {

std::__1::atomic<unsigned long> = {

Value = 48720

}

}

}

(lldb) p $6.sel()

(SEL) $7 = "sayHello"

(lldb) p $6.imp(nil, pclass)

(IMP) $8 = 0x0000000100003840 (KCObjcBuild`-[MHPerson sayHello])

(lldb) p $4.buckets()[2]

(bucket_t) $11 = {

_sel = {

std::__1::atomic<objc_selector *> = "" {

Value = ""

}

}

_imp = {

std::__1::atomic<unsigned long> = {

Value = 48144

}

}

}

(lldb) p $11.sel()

(SEL) $12 = "age"

(lldb) p $11.imp(nil, pclass)

(IMP) $13 = 0x0000000100003a00 (KCObjcBuild`-[MHPerson age])

3 lldb调试结果分析

- 调用一个方法,_occupied为1,_maybeMask为7(代码执行第一次调用为4),根据前面allocateBuckets函数解析里面提到过的CACHE_END_MARKER为1时(arm || x86_64 || __i386__架构为1),会存储end标记

- 加上里面调用age的setter getter方法之后_occupied为3,_maybeMask为7,这样加上上面已经缓存的一个方法,缓存区的方法就达到了3个。