Using Popper

TL;DR. In this article, I will take you through how to quickly start experimenting with Ceph, from building and deploying Ceph to running experiments and benchmarks reproducibly using the Popper workflow execution engine. This project was done as part of the IRIS-HEP fellowship for Summer 2020 in collaboration with CROSS, UCSC. The code for this project can be found here.


The Problem and Our Solution

If you are just getting started with Ceph experiments, it can be a bit overwhelming, because there are many steps you need to execute and get right before you can run actual experiments and get results. At a high level, a Ceph experimentation pipeline generally includes the steps listed below.


- Booting up VMs or bare-metal nodes on cloud providers like AWS, GCP, CloudLab, etc.

- Deploying Kubernetes and baselining the cluster.

- Compiling and deploying Ceph.

- Baselining the Ceph deployment.

- Running experiments and use-case-specific benchmarks.

- Writing Jupyter notebooks and plotting graphs.

If done manually, these steps might require typing hundreds of commands interactively, which is cumbersome and error-prone. Since Popper is already good at automating experimentation workflows, we felt that this complex scenario would be a good use case for Popper and could lower the entry barrier for new Ceph researchers. Using Popper, we coalesced a long list of Ceph experimentation commands and guides into a couple of Popper workflows that can be easily executed on any machine to perform Ceph experiments. This automates away the tedious manual work and lets researchers focus on their experimentation logic instead.


In our case, we also built workflows to benchmark SkyhookDM Ceph, a customization of Ceph that executes queries on tabular datasets stored as objects. We benchmarked it by running queries on large datasets of several hundred GB and several hundred million rows.


What is Popper?

Overview of Popper

Popper is a lightweight, YAML-based, container-native workflow execution and task automation engine.


In general, researchers and developers often need to type a long list of commands in their terminal to build, deploy, and experiment with any complex software system. This process is highly manual, requires a lot of expertise, and can be frustrating because of missing dependencies and errors. The problem of dependency management can be addressed by moving the entire software development life cycle inside software containers. This is known as container-native development. In practice, when we follow the container-native paradigm, we end up interactively executing multiple docker pull|build|run commands to build containers, compile code, test applications, deploy software, etc. Keeping track of which docker commands were executed, in which order, and with which flags can quickly become unmanageable, difficult to document (think of outdated README instructions), and error-prone. The goal of Popper is to bring order to this chaotic scenario by providing a framework for clearly and explicitly defining container-native tasks. You can think of Popper as a tool for wrapping all these manual tasks in a lightweight, machine-readable, self-documented format (YAML).
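To make the "self-documented format" idea concrete, here is a minimal Python sketch (not Popper's implementation) that represents a workflow as an ordered list of steps and renders the docker run command each step stands for. The Step class, the gcc:10 image, the /tmp/project workspace path, and the /workspace mount point are all illustrative assumptions.

```python
import shlex
from dataclasses import dataclass, field
from typing import List


@dataclass
class Step:
    """One container-native task (hypothetical field names)."""
    id: str
    image: str                                      # container image to run in
    args: List[str] = field(default_factory=list)   # command run inside it


def to_docker_command(step: Step, workspace: str = "/tmp/project") -> str:
    """Render the `docker run` command a user would otherwise type by hand.

    The project directory is bind-mounted at /workspace and used as the
    working directory, so every step sees the same files.
    """
    argv = [
        "docker", "run", "--rm",
        "-v", f"{workspace}:/workspace",
        "-w", "/workspace",
        step.image,
        *step.args,
    ]
    return shlex.join(argv)


# A hypothetical two-step workflow: compile, then run the tests.
WORKFLOW = [
    Step("build", "gcc:10", ["make"]),
    Step("test", "gcc:10", ["make", "test"]),
]

if __name__ == "__main__":
    # The ordered list itself documents what runs, where, and in which order.
    for step in WORKFLOW:
        print(f"[{step.id}] {to_docker_command(step)}")
```

A workflow engine keeps exactly this kind of record in a file, so the sequence of commands no longer lives only in someone's shell history.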


While this sounds simple at first, it has significant implications: it saves time, improves communication, and in general unifies development, testing, and deployment workflows. As a developer or user of "Popperized" container-native projects, you only need to learn one tool and can leave the execution details to Popper, whether that means building and testing applications locally, on a remote CI server, or on a Kubernetes cluster.


Some features of Popper are as follows:


- Lightweight workflow and task automation syntax: Popper workflows are defined by writing a list of steps in a file using a lightweight YAML syntax.

- Abstraction over containers and resource managers: Popper runs workflows in an engine- and resource-manager-agnostic manner by abstracting over container engines like Docker, Podman, and Singularity, and resource managers like Slurm and Kubernetes.

- Abstraction over CI services: Popper can generate configuration files for CI services like Travis, CircleCI, and Jenkins, allowing users to delegate workflow execution from their local machine to a CI service.

You can install it from PyPI (https://pypi.org/) by running:


$ pip install popper

If you cannot use pip, check this out to run Popper on Docker without having to install anything. The Popper CLI provides a run subcommand that invokes a workflow execution and runs each step in a separate container. Now that we understand what Popper is, let's see how Popper helps automate each step of a Ceph experimentation workflow.
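If you drive your experiments from a script, a small helper keeps popper run invocations consistent. This is a sketch that assumes only the command shapes used in this article: -f for the workflow file, an optional step name such as setup-ceph-cluster, and -s for substitutions whose names start with an underscore. The helper name popper_run_argv and the node names are hypothetical, and the underscore check is our own guard, not a documented Popper rule.

```python
import shlex
from typing import Dict, List, Optional


def popper_run_argv(workflow: str,
                    step: Optional[str] = None,
                    substitutions: Optional[Dict[str, str]] = None) -> List[str]:
    """Build `popper run -f <workflow> [step] [-s _NAME=value ...]`."""
    argv = ["popper", "run", "-f", workflow]
    if step is not None:
        argv.append(step)  # e.g. "setup-ceph-cluster", as used later on
    for name, value in (substitutions or {}).items():
        # Every substitution in this article starts with "_"; reject typos early.
        if not name.startswith("_"):
            raise ValueError(f"substitution {name!r} should start with '_'")
        argv += ["-s", f"{name}={value}"]
    return argv


if __name__ == "__main__":
    # Hypothetical node names; pass the list to subprocess.run(argv, check=True) to execute.
    argv = popper_run_argv("workflows/iperf.yml",
                           substitutions={"_CLIENT": "node-1", "_SERVER": "node-2"})
    print(shlex.join(argv))
```

Building an argument list rather than a shell string avoids quoting problems when a substitution value contains spaces.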


Getting a Kubernetes Cluster

The first step in your experiments is to set up the underlying infrastructure, which in this case is a Kubernetes cluster. If you already have access to a Kubernetes cluster, you can skip this section. Otherwise, you can spawn a Kubernetes cluster from a managed Kubernetes offering such as GKE from Google or EKS from AWS. Alternatively, if you have access to CloudLab, an NSF-sponsored bare-metal-as-a-service public cloud, you can spawn nodes there and deploy a Kubernetes cluster yourself. The first step in building a Kubernetes cluster on CloudLab is to spawn the bare-metal nodes, which can be done by running the workflow given below.


$ popper run -f workflows/nodes.yml

This workflow takes secrets such as your GENI credentials and node specifications. It boots up the requested nodes in a LAN with a link speed of 10 Gb/s. For this, it uses geni-lib, a library for programmatically spawning compute infrastructure on CloudLab. The IP addresses of the spawned nodes can be found in the rook/geni/hosts file. Now that your nodes are ready, it's time to deploy Kubernetes.


$ popper run -f workflows/kubernetes.yml

This workflow uses Kubespray to deploy a production-ready Kubernetes cluster for you. After the cluster is set up, the kubeconfig file is downloaded to rook/kubeconfig/config, so that the other workflows can read it and connect to the cluster. Just two commands were needed to spawn the nodes and set up a Kubernetes cluster. That's the new normal!
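The two bring-up commands can also be chained from a script that stops at the first failure and then points kubectl at the new cluster. This is a minimal sketch, assuming it runs from the project root so that the rook/kubeconfig/config path described above resolves. bring_up is a hypothetical helper name.

```python
import os
import subprocess
from pathlib import Path
from typing import List

# Order matters: the nodes must exist before Kubespray can install Kubernetes.
BRING_UP = ["workflows/nodes.yml", "workflows/kubernetes.yml"]
# Written by kubernetes.yml, relative to the project root.
KUBECONFIG_PATH = Path("rook/kubeconfig/config")


def bring_up(dry_run: bool = False) -> List[List[str]]:
    """Run the bring-up workflows in order, stopping at the first failure."""
    commands = [["popper", "run", "-f", wf] for wf in BRING_UP]
    if dry_run:
        return commands  # only report what would run
    for argv in commands:
        subprocess.run(argv, check=True)  # raises CalledProcessError on failure
    if not KUBECONFIG_PATH.is_file():
        raise FileNotFoundError(f"kubeconfig not found at {KUBECONFIG_PATH}")
    # Affects this Python process and any child processes it starts (e.g. kubectl).
    os.environ["KUBECONFIG"] = str(KUBECONFIG_PATH.resolve())
    return commands
```

After bring_up() returns, subprocess.run(["kubectl", "get", "nodes"], check=True) from the same script should list the new nodes. Your interactive shell's environment is not changed.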


Now that your cluster is ready, you can create namespaces, claim volumes, and run pods, as you normally would in a Kubernetes cluster. You can also set up monitoring infrastructure to record system metrics such as CPU usage, memory pressure, and network I/O while your experiments are running, so that you can analyze the plots later. We chose the Prometheus + Grafana stack for monitoring since it is very commonly used and provides very nice real-time visualizations. You can execute the workflow given below to set up the monitoring infrastructure.


$ popper run -f workflows/prometheus.yml

This workflow deploys the Prometheus and Grafana operators. To access the Grafana dashboard, map a local port on your machine to the port on which the Grafana service is listening inside the cluster:


$ kubectl --namespace monitoring port-forward svc/grafana 3000

You can then access the Grafana dashboard by browsing to http://localhost:3000. If it asks for a username and password, use "admin" for both. The next step is to baseline the Kubernetes cluster.
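Besides browsing Grafana, you can pull the same metrics programmatically through Prometheus' HTTP API (/api/v1/query), for example to record CPU usage alongside each benchmark run. This sketch rests on several assumptions:

- You have also port-forwarded the Prometheus service to localhost:9090. The service name, for example prometheus-k8s, depends on how the operator was deployed.
- Node-exporter metrics such as node_cpu_seconds_total are being scraped.
- CPU_BUSY and the helper names are illustrative.

```python
import json
import urllib.parse
import urllib.request
from typing import Any, Dict, List, Tuple

# Assumes Prometheus has been port-forwarded to this address.
PROMETHEUS_URL = "http://localhost:9090"

# Per-node CPU busy percentage over the last minute (node-exporter metric).
CPU_BUSY = '100 * (1 - avg by (instance) (rate(node_cpu_seconds_total{mode="idle"}[1m])))'

Sample = Tuple[Dict[str, str], float]


def instant_query_url(query: str, base: str = PROMETHEUS_URL) -> str:
    """URL for Prometheus' instant-query endpoint."""
    return f"{base}/api/v1/query?" + urllib.parse.urlencode({"query": query})


def parse_vector(payload: Dict[str, Any]) -> List[Sample]:
    """Turn an instant-vector response into (labels, value) pairs."""
    if payload.get("status") != "success":
        raise RuntimeError(f"query failed: {payload.get('error')}")
    data = payload["data"]
    if data["resultType"] != "vector":
        raise ValueError(f"expected a vector result, got {data['resultType']}")
    # Each sample's "value" is [unix_timestamp, "value as a string"].
    return [(r["metric"], float(r["value"][1])) for r in data["result"]]


def query(promql: str) -> List[Sample]:
    """Run one instant query against the port-forwarded Prometheus."""
    with urllib.request.urlopen(instant_query_url(promql), timeout=10) as resp:
        return parse_vector(json.load(resp))
```

For example, `for labels, cpu in query(CPU_BUSY): print(labels.get("instance"), f"{cpu:.1f}%")` prints one line per node while a benchmark is running.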


Baselining the Kubernetes Cluster

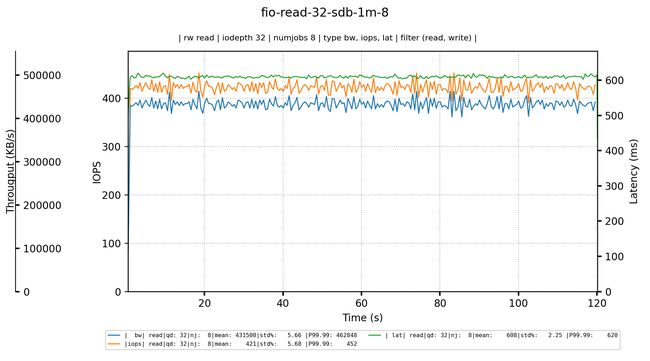

The two most important components to benchmark for your Ceph experiments are disk and network I/O. They are often the primary bottlenecks for any storage system, and you also need to compare disk throughput before and after deploying Ceph. We used well-known tools like fio and iperf for this purpose. We ran the fio and iperf workloads with Kubestone, a standard benchmarking operator for Kubernetes.


To measure the performance of the underlying block device on a particular node, run this workflow:


$ popper run -f workflows/fio.yml -s _HOSTNAME=

This workflow starts a client pod and measures the READ, WRITE, RANDREAD, and RANDWRITE performance of a block device in terms of bandwidth, IOPS, and latency. It varies the block size, block device, I/O depth, and job count. These parameter sweeps show how performance changes with each parameter. You can then map the results to the actual workloads you see while running Ceph benchmarks and compare them. To benchmark the block devices of different nodes, run this workflow multiple times with a different HOSTNAME value.
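The parameter sweep is essentially a Cartesian product of fio options. The sketch below generates one fio command per grid point. The block sizes, I/O depths, job counts, and the /dev/sdX device are hypothetical, and they are not necessarily what the workflow uses. Write and randwrite jobs against a raw block device destroy its contents, so only point this at a scratch disk.

```python
import itertools
from typing import Iterator, List

# Hypothetical sweep values; adjust them to your hardware.
RW_MODES = ["read", "write", "randread", "randwrite"]
BLOCK_SIZES = ["4k", "64k", "1m"]
IO_DEPTHS = [1, 8, 32]
NUM_JOBS = [1, 4, 8]


def fio_commands(device: str, runtime_s: int = 60) -> Iterator[List[str]]:
    """Yield one fio invocation per point in the parameter grid.

    WARNING: the write modes overwrite `device`.
    """
    grid = itertools.product(RW_MODES, BLOCK_SIZES, IO_DEPTHS, NUM_JOBS)
    for rw, bs, depth, jobs in grid:
        yield [
            "fio",
            f"--name={rw}-bs{bs}-qd{depth}-j{jobs}",  # unique job name per point
            f"--filename={device}",
            f"--rw={rw}",
            f"--bs={bs}",
            f"--iodepth={depth}",
            f"--numjobs={jobs}",
            "--ioengine=libaio",     # asynchronous I/O, so iodepth > 1 has an effect
            "--direct=1",            # bypass the page cache
            f"--runtime={runtime_s}",
            "--time_based",
            "--group_reporting",     # aggregate all jobs into one result
            "--output-format=json",  # machine-readable results for plotting
        ]
```

With these values the grid has 4 × 3 × 3 × 3 = 108 points. This is why sweeps are worth automating rather than typing by hand.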


In our setup, we observed a sequential read (SEQ READ) bandwidth of approximately 410 MB/s using 8 jobs and an I/O depth of 32. After your disks are benchmarked, it's time to benchmark the cluster network, which can be done by running the workflow given below.


$ popper run -f workflows/iperf.yml -s _CLIENT= -s _SERVER=

You can run this workflow multiple times, changing the CLIENT and SERVER variables each time. This places the iperf client and server pods on a different pair of nodes and measures the bandwidth of the link between them. The measurements show the bandwidth of the cluster network, that is, how much data it can move per second. They also expose any faulty link between a pair of nodes before you move on to the Ceph benchmarks. On a 10GbE network, expect a measured bandwidth of around 8–9 Gb/s rather than the theoretical 10 Gb/s, because of the overhead introduced by the underlying Kubernetes networking stack, such as Calico.
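If you run iperf3 with its --json flag, you can extract the receiver-side throughput and compare it with the nominal link speed. This is a sketch. It assumes the workflow keeps iperf3's JSON output. The 0.8 threshold is only derived from the 8–9 Gb/s expectation above, and the helper names are hypothetical.

```python
import json
from typing import Tuple

LINK_GBPS = 10.0  # nominal speed of the cluster network (10GbE)


def iperf3_goodput_gbps(report_json: str) -> float:
    """Receiver-side TCP throughput in Gb/s from `iperf3 --json` output."""
    end = json.loads(report_json)["end"]
    return end["sum_received"]["bits_per_second"] / 1e9


def link_check(gbps: float, link_gbps: float = LINK_GBPS,
               floor: float = 0.8) -> Tuple[float, bool]:
    """Return (fraction of nominal link speed, whether it reaches `floor`).

    A healthy 10GbE link behind an overlay network such as Calico should
    land around 8-9 Gb/s, hence the (assumed) 0.8 floor. A much lower
    value hints at a faulty link between that client/server pair.
    """
    utilisation = gbps / link_gbps
    return utilisation, utilisation >= floor
```

Running link_check on the result of every client/server pair makes a single slow link stand out immediately.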


The internal network of our cluster had an average bandwidth of 8–8.5 Gb/s between different pairs of nodes. Now that you have a fair understanding of your cluster's disk and network performance, let's deploy Ceph and start experimenting!


Benchmarking the Ceph RADOS Interface

Rook is an open-source cloud-native storage orchestrator for Kubernetes. It provides the platform, framework, and support for a diverse set of storage solutions to integrate natively with cloud-native environments. The primary goal of the Popper workflows is to automate as much of the experimentation work as possible. We therefore use Rook to deploy Ceph in Kubernetes clusters, since it makes storage software self-managing, self-healing, and self-scaling.


$ popper run -f workflows/rook.yml setup-ceph-cluster

Executing this workflow creates the rook-ceph namespace and deploys the Rook operator. The Rook operator then deploys the MONs, OSDs, crash collectors, etc., as individual pods. After the Ceph cluster is up and running, download the Ceph configuration by executing:


$ popper run -f workflows/rook.yml download-config

By default, the cluster config is downloaded to /rook/cephconfig/ inside the project root. The other workflows read the Ceph config from this directory by default and inject it into the client pods that need to connect to the cluster. Once the cluster is up and running, the next step is to benchmark the throughput of the Ceph RADOS interface. This measures the object store's overhead over the raw block devices and also determines SkyhookDM's overhead over vanilla Ceph. You can run these benchmarks with the rados bench utility through this workflow.


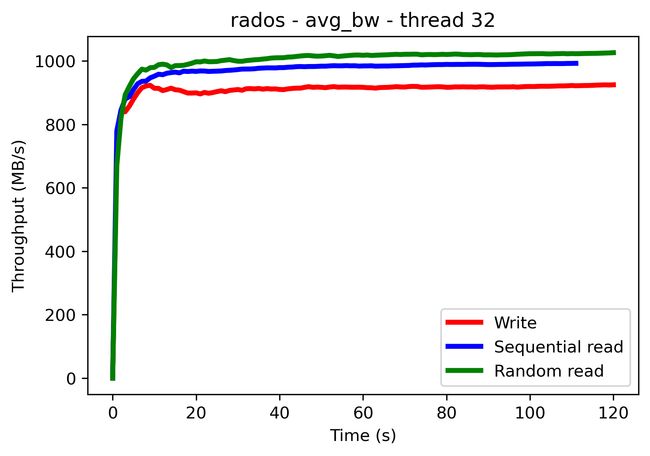

$ popper run -f workflows/radosbench.yml -s _CLIENT=

This workflow also deploys a pod that acts as a client for running the rados benchmarks. The Ceph config is copied into the pod so that it can connect to the cluster. The workflow provides a CLIENT substitution variable to specify the node on which to deploy the client pod. The client pod should be placed on a node that does not host any OSD or MON pod. Otherwise, the effects of the underlying network are not captured and the results can be misleading. Benchmark parameters such as thread count, duration, and object size can also be configured in the workflow itself to simulate different workloads. The workflow runs rados bench commands to measure the SEQ READ, RAND READ, and WRITE throughput of the cluster. It then generates a notebook containing plots of throughput, latency, and IOPS.
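For reference, a rados bench run typically follows the plan below. First comes a write phase that keeps its objects (--no-cleanup) so that the seq and rand phases have something to read. A cleanup step follows. The pool name, duration, thread count, and 4 MiB object size are placeholders. This is not necessarily the exact set of commands the workflow runs, and the pool must already exist.

```python
import shlex
from typing import List


def rados_bench_plan(pool: str, seconds: int = 60, threads: int = 16,
                     object_size: int = 4 * 1024 * 1024) -> List[List[str]]:
    """Commands for a WRITE, SEQ READ, RAND READ benchmark on one pool.

    -t sets the number of concurrent operations and -b the write size in bytes.
    """
    base = ["rados", "bench", "-p", pool, str(seconds)]
    concurrency = ["-t", str(threads)]
    return [
        # Write phase; --no-cleanup keeps the objects for the read phases.
        base + ["write", "-b", str(object_size), "--no-cleanup"] + concurrency,
        # Sequential and random reads of the objects written above.
        base + ["seq"] + concurrency,
        base + ["rand"] + concurrency,
        # Delete the benchmark objects afterwards.
        ["rados", "-p", pool, "cleanup"],
    ]


if __name__ == "__main__":
    for argv in rados_bench_plan("bench-pool"):  # hypothetical pool name
        print(shlex.join(argv))
```

Varying threads and object_size across runs is one way to simulate the different workloads mentioned above.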


In our case, when we ran benchmarks on a single-OSD cluster, we found that storage was the bottleneck. The SEQ READ throughput of a single OSD was approximately 390 MB/s, slightly less than that of the raw block device, which was a little over 400 MB/s. This difference was probably due to the overhead Ceph adds on top of the raw block device.


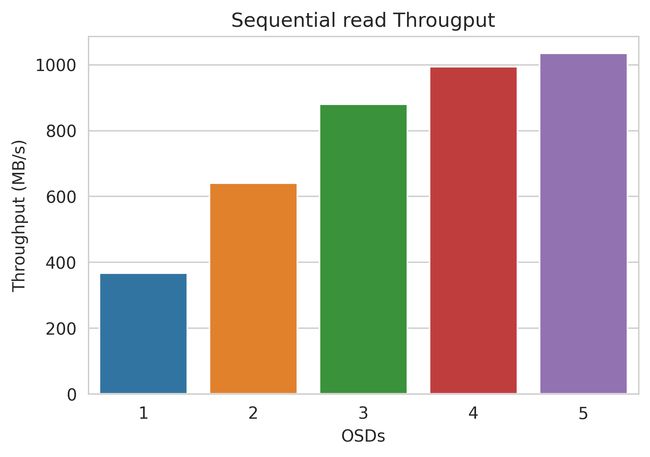

Throughput measurements from reading and writing to 4 OSDs

Next, we scaled out by slowly increasing the number of OSDs from 1 to 5. As we scaled out, the throughput increased until it peaked at 4 OSDs. Scaling further to 5 OSDs brought no improvement, and throughput stayed at around 1000 MB/s. Since 1000 MB/s is 8 Gb/s and the measured network capacity was around 8–8.5 Gb/s, we concluded that the bottleneck had shifted from the disks to the network.
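The conversion behind that conclusion is easy to automate when you scan many runs. This is a minimal sketch that assumes decimal units (1 MB = 10^6 bytes, 1 Gb = 10^9 bits). The 10% tolerance for calling a run network-bound is a hypothetical choice.

```python
def mb_per_s_to_gb_per_s(mb_per_s: float) -> float:
    """Megabytes per second -> gigabits per second (8 bits per byte, decimal units)."""
    return mb_per_s * 8 / 1000


def network_bound(observed_mb_s: float, link_gbps: float, tolerance: float = 0.1) -> bool:
    """True if observed throughput is within `tolerance` of the measured link bandwidth."""
    return mb_per_s_to_gb_per_s(observed_mb_s) >= (1 - tolerance) * link_gbps


if __name__ == "__main__":
    link = 8.25  # midpoint of the 8-8.5 Gb/s measured with iperf
    for label, mb_s in [("1 OSD", 390), ("4 OSDs", 1000)]:
        gbps = mb_per_s_to_gb_per_s(mb_s)
        print(f"{label}: {gbps:.2f} Gb/s, network-bound={network_bound(mb_s, link)}")
```

With the numbers reported here, 1000 MB/s against an 8–8.5 Gb/s link counts as network-bound. A single OSD's roughly 390 MB/s (about 3.1 Gb/s) does not, which matches the observation that one OSD was disk-bound.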


Increase in Sequential Read throughput with the increase in OSDs

We also monitored CPU and memory pressure during these benchmarks, and neither was close to saturation, so they were probably not contributing to the bottleneck. If you follow this methodology, you should have a fair understanding of your Ceph cluster's performance and of the overhead Ceph adds to your disks' I/O performance. Next, you can move on to benchmarking your custom Ceph implementation. In our case, we benchmarked SkyhookDM to find out how much overhead its tabular data processing functionality incurs.


Case Study: Benchmarking SkyhookDM Ceph

Since this project focused on benchmarking SkyhookDM Ceph and finding its bottlenecks and overheads, we implemented workflows only for SkyhookDM. The same methodology can be followed to benchmark other Ceph flavors. We converted the same vanilla Ceph cluster into a SkyhookDM cluster with the tabular libraries loaded by running:


$ popper run -f workflows/rook.yml setup-skyhook-ceph

The next step was to start a SkyhookDM client, download the tabular datasets, and load them as objects into the OSDs. Each object was 10 MB in size with 75K rows. We loaded 10,000 such objects into the cluster, giving a dataset of 750M rows with a total size of 210 GB. An object-loading script loads the objects in batches using multiple threads, with a batch size equal to the number of CPU cores. For our experiments, we ran the queries with both client-side and storage-side processing, using 48 threads over the 10,000 objects distributed across 4 OSDs. Each query was run 3 times to capture variability. The entire process, including the query benchmarks, was done by running the workflow given below.
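The object-loading script itself is not shown here. The sketch below only illustrates the batching strategy it describes: batches sized to the number of CPU cores, with one thread per object within a batch. put is a hypothetical callable that stores a single object. For example, it could wrap rados -p <pool> put <object> <file> in subprocess.run.

```python
import os
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterator, List, Optional


def batched(items: List[str], size: int) -> Iterator[List[str]]:
    """Split `items` into consecutive chunks of at most `size` elements."""
    for i in range(0, len(items), size):
        yield items[i:i + size]


def load_objects(names: List[str], put: Callable[[str], None],
                 batch_size: Optional[int] = None) -> int:
    """Load objects batch by batch, one thread per object in a batch.

    batch_size defaults to the number of CPU cores. Returns how many
    objects were loaded.
    """
    size = batch_size or os.cpu_count() or 1
    loaded = 0
    with ThreadPoolExecutor(max_workers=size) as pool:
        for batch in batched(names, size):
            # list() waits for the whole batch and re-raises any worker exception.
            list(pool.map(put, batch))
            loaded += len(batch)
    return loaded
```

Waiting for each batch to finish before starting the next one bounds the number of in-flight writes to the core count. A free-running pool of 10,000 tasks would not impose that bound.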


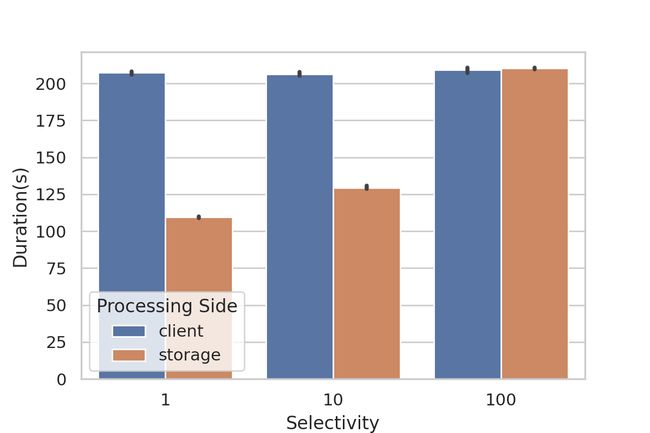

$ popper run -f workflows/run_query.yml -s _CLIENT=

In our experiments, we found that at 1% and 10% selectivity, query processing took much less time on the storage side than on the client side. This was probably because client-side processing had to transfer the entire dataset over the network, whereas storage-side processing only had to transfer the rows matching the query. For the 100% selectivity query, the entire dataset had to be transferred in both cases, so storage-side processing brought no perceptible improvement.
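A back-of-the-envelope model explains why selectivity matters. Client-side processing always moves the full dataset, while storage-side processing moves only the matching fraction. The sketch below ignores compute time and assumes the returned bytes scale linearly with selectivity. The 210 GB dataset size and the roughly 1 GB/s (8 Gb/s) network figure come from this article.

```python
DATASET_GB = 210.0       # total dataset size reported in this article
NETWORK_GB_PER_S = 1.0   # ~8 Gb/s measured network bandwidth, i.e. ~1 GB/s


def transfer_seconds(selectivity: float, storage_side: bool,
                     dataset_gb: float = DATASET_GB,
                     net_gb_per_s: float = NETWORK_GB_PER_S) -> float:
    """Idealized network transfer time for one full-dataset query.

    Client-side processing fetches every object; storage-side processing
    ships only the matching fraction. CPU time on either side is ignored.
    """
    if not 0.0 <= selectivity <= 1.0:
        raise ValueError("selectivity must be between 0 and 1")
    fraction = selectivity if storage_side else 1.0
    return dataset_gb * fraction / net_gb_per_s


if __name__ == "__main__":
    for sel in (0.01, 0.10, 1.00):
        client = transfer_seconds(sel, storage_side=False)
        storage = transfer_seconds(sel, storage_side=True)
        print(f"selectivity {sel:>4.0%}: client-side {client:6.1f} s, storage-side {storage:6.1f} s")
```

The model only gives a lower bound on storage-side time, since filtering on the OSDs also costs CPU. It does, however, reproduce the qualitative result: a large gap at 1% and 10% selectivity, and none at 100%.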


The total dataset size was 210 GB, and fetching and querying the entire dataset took approximately 210 s. The throughput therefore works out to approximately 1000 MB/s (8 Gb/s), so, as expected, the network was again the bottleneck. Also, the overhead of querying the tabular data was negligible, since vanilla Ceph and SkyhookDM reads had the same throughput.
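Written out in decimal units (1 GB = 1000 MB, 1 byte = 8 bits), the estimate is:

$$
\frac{210\ \text{GB}}{210\ \text{s}} = 1\ \text{GB/s} = 1000\ \text{MB/s},
\qquad
1000\ \frac{\text{MB}}{\text{s}} \times 8\ \frac{\text{bits}}{\text{byte}} = 8000\ \text{Mb/s} = 8\ \text{Gb/s}
$$

This sits just below the 8–8.5 Gb/s measured with iperf between node pairs.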


Analyzing the Results

After the benchmarks and experiments are run, several plots and notebooks are generated, and the usual next step is to analyze them for insights. All the benchmark and baseline workflows generate Jupyter notebooks containing plots built with Matplotlib and Seaborn, along with the raw result files. You can run the Jupyter notebooks interactively using Popper as follows:


# get a shell into the container
host $ popper sh -f workflows/radosbench.yml plot-results

# start the notebook server
container $ jupyter notebook --ip 0.0.0.0 --allow-root --no-browser

Also, every workflow has a teardown step that tears down the resources it spawned. To completely clean up the Ceph volumes created on the nodes of the Kubernetes cluster after tearing down the Ceph deployment, follow this guide. This is absolutely necessary if you want to reuse the cluster to deploy Ceph again.


Closing Remarks

I was looking to get into storage systems, and this project came along at exactly the right time. It taught me a lot about performance analysis and benchmarking of systems, which I find super exciting, and it also served as a great introduction to storage systems. Beyond the technical work, I also learned how to present my work and engage in productive discussions. A huge thanks to my mentors Ivo Jimenez, Jeff LeFevre, and Carlos Maltzahn for their great support. Thanks to IRIS-HEP and CROSS, UCSC for providing this awesome experience.


Get In Touch

Feel free to open an issue or submit a pull request with your favorite Ceph benchmark. For more information about SkyhookDM or Popper, please join our Slack channel or follow us on GitHub. Cheers!


Source: https://medium.com/@heyjc/reproducible-experiments-and-benchmarks-on-skyhookdm-ceph-using-popper-64c42d47a65a
