An Enhanced Res2Net with Local and Global Feature Fusion for Speaker Verification

1. Overview

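Paper title: An Enhanced Res2Net with Local and Global Feature Fusion for Speaker Verification

Affiliations: Alibaba Group; University of Science and Technology of China

Summary: Effectively fusing multi-scale features is crucial for improving speaker verification performance. Most existing methods obtain multi-scale features through simple operations, such as feature summation or concatenation, applied in a layer-wise aggregation scheme. This paper proposes a new architecture, the Enhanced Res2Net (ERes2Net), which improves speaker verification performance through local and global feature fusion. Local feature fusion fuses the features within a single residual block to extract the local signal; global feature fusion aggregates acoustic features of different scales, taken from the outputs of different layers, to capture the global signal. To fuse features effectively, the ERes2Net architecture adopts an attentional feature fusion module in place of summation or concatenation. A series of experiments on the VoxCeleb datasets demonstrates the superior performance of ERes2Net.

Preprint download: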

https://arxiv.org/pdf/2305.12838.pdf

Open-source project:

https://github.com/alibaba-damo-academy/3D-Speaker

Problem statement: Most existing methods aggregate multi-scale features layer by layer via simple operations such as summation or concatenation. This yields a rigid combination of features that may not fully exploit the complementary information from different scales.

Contributions:

The Enhanced Res2Net architecture (ERes2Net) incorporates local and global feature fusion mechanisms for extracting speaker embeddings.

2. Res2Net block and ERes2Net

The Res2Net block aims to improve the model's multi-scale representation ability by increasing the number of available receptive fields.

Backbone model: Res2Net

Stage 0: The input layer consists of a single convolutional layer with a kernel size of 3×3, a stride of 1, and a channel dimension of 32.

Stage 1-4: The four residual stages contain [3, 4, 6, 3] basic blocks with 64, 128, 256, and 512 output channels, respectively.

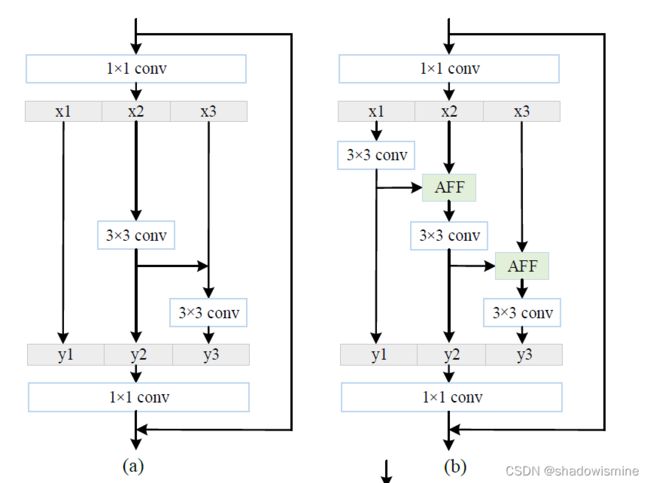

As shown in figure (a), each basic block has three convolutional layers: two with a 1×1 filter size and one with a 3×3 filter size. After the first 1×1 convolution, the feature maps are split into s subsets (s = 2 in this work). Each subset passes through a 3×3 convolution, and the results are concatenated. The concatenated feature maps are then processed by a 1×1 convolution. Stages 2, 3, and 4 perform down-sampling with a stride of 2×2.
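To make the stage layout concrete, the following sketch traces feature-map shapes through stages 0 to 4. It is only an illustration: the helper names `conv_out` and `trace_shapes`, the padding of 1, and the example input of 80 frequency bins by 200 frames are assumptions, not values given above. Only the channel widths and the stride schedule come from the description.

```python
def conv_out(n, kernel=3, stride=1, padding=1):
    """Output length of a convolution along one axis (standard formula)."""
    return (n + 2 * padding - kernel) // stride + 1


def trace_shapes(freq_bins, frames):
    """Return (stage, channels, freq, time) for stages 0-4.

    Assumes each stage changes resolution once, through a 3x3 convolution
    with padding 1 (an assumption made for this sketch).
    """
    channels = [32, 64, 128, 256, 512]  # stage 0 plus the four residual stages
    strides = [1, 1, 2, 2, 2]           # only stages 2-4 down-sample
    shapes = []
    f, t = freq_bins, frames
    for stage, (c, s) in enumerate(zip(channels, strides)):
        f, t = conv_out(f, stride=s), conv_out(t, stride=s)
        shapes.append((stage, c, f, t))
    return shapes


# Hypothetical input: 80 frequency bins, 200 frames.
for row in trace_shapes(80, 200):
    print(row)
```

Under these assumptions, an 80×200 input leaves stage 4 as a 512-channel map of size 10×25.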

Proposed model: ERes2Net

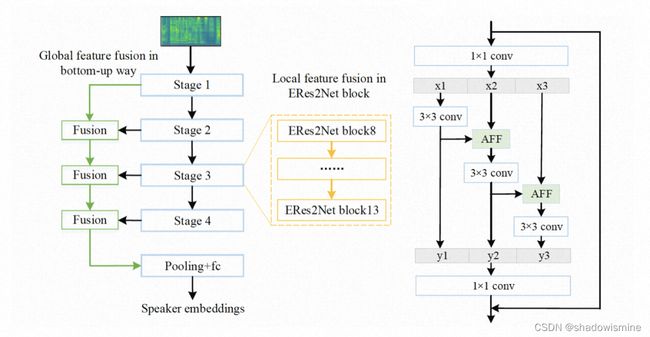

The overall architecture of the proposed network consists of two branches: a local feature fusion branch (inside each ERes2Net block) and a bottom-up feature fusion branch (through the backbone network).

2.1 Local feature fusion in ERes2Net (LFF)

The key idea of LFF is to obtain more fine-grained features and strengthen local information interaction (shown in figure (b)).

1. After a 1×1 convolution, the feature maps X are divided into groups along the channel dimension, denoted by x_i.

2. The output features of the previous group are then fused with the next group of input feature maps via an attentional feature fusion (AFF) module.

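The output of the ERes2Net block, written to be consistent with the two steps above, is

$$
y_i =
\begin{cases}
K_i(x_i), & i = 1 \\
K_i\big(U(x_i, y_{i-1})\big), & 1 < i \le s
\end{cases}
$$

where K_i(·) represents a 3×3 convolution filter.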
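The recursion over channel groups can be sketched in plain Python. This is a minimal illustration, not the authors' implementation: `local_feature_fusion` is a hypothetical helper, `convs` stands in for the 3×3 filters K_i, `fuse` stands in for the AFF module, and passing the first group through its convolution without fusion is an assumption based on it having no predecessor.

```python
def local_feature_fusion(groups, convs, fuse):
    """Run the group-by-group recursion of local feature fusion.

    groups: list of per-group inputs x_1..x_s
    convs:  list of callables standing in for K_1..K_s
    fuse:   callable standing in for the AFF module, fuse(x_i, y_prev)
    """
    outputs = []
    for i, (x_i, k_i) in enumerate(zip(groups, convs)):
        if i == 0:
            # Assumption: the first group has nothing to fuse with.
            y_i = k_i(x_i)
        else:
            # Fuse the current input with the previous group's output,
            # then apply this group's convolution.
            y_i = k_i(fuse(x_i, outputs[-1]))
        outputs.append(y_i)
    return outputs


# Toy usage with scalars: "convolution" doubles, "fusion" adds.
toy = local_feature_fusion(
    groups=[1.0, 2.0],
    convs=[lambda v: 2 * v, lambda v: 2 * v],
    fuse=lambda a, b: a + b,
)
```

In a real block the outputs y_1..y_s would be concatenated along the channel axis before the final 1×1 convolution; the toy callables here only show the order of operations.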

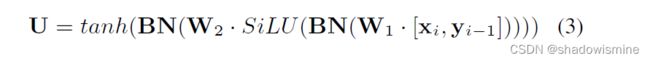

U(·) represents the AFF module, which computes local attention weights for adjacent feature maps.

The AFF module takes the concatenation of the adjacent feature maps x_i and y_{i−1} as its input.

W_1 and W_2 are point-wise convolutions with output channel sizes of C/r and C, respectively, where r is the channel reduction ratio (r = 4 in this work). (Open question: is this comparable to the bottleneck of an SE block?)
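As a rough sketch of how such a module could work, consider a single time-frequency position, where a point-wise convolution reduces to a matrix-vector product over channels. The code below is an illustration under several assumptions: the SiLU and tanh activations, the (1 + a) and (1 − a) weighting of the two inputs, and the omission of batch normalization and biases are choices made for this example and are not stated above; `aff_fuse` and its helpers are hypothetical names.

```python
import math


def silu(v):
    """SiLU activation: v * sigmoid(v)."""
    return v / (1.0 + math.exp(-v))


def matvec(w, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def aff_fuse(x, y, w1, w2):
    """Fuse two C-dimensional channel vectors at one position.

    w1: (C/r) x 2C matrix, standing in for point-wise convolution W_1
    w2: C x (C/r) matrix, standing in for point-wise convolution W_2
    """
    z = x + y                                         # concatenation: 2C values
    hidden = [silu(h) for h in matvec(w1, z)]         # squeeze to C/r channels
    att = [math.tanh(a) for a in matvec(w2, hidden)]  # C weights in (-1, 1)
    # Assumed weighting: positive attention favours x, negative favours y.
    return [xi * (1.0 + a) + yi * (1.0 - a) for xi, yi, a in zip(x, y, att)]


# Hypothetical sizes: C = 4 channels, reduction ratio r = 4.
C, r = 4, 4
w1 = [[0.1] * (2 * C) for _ in range(C // r)]
w2 = [[0.5] for _ in range(C)]
fused = aff_fuse([1.0, 2.0, 3.0, 4.0], [4.0, 3.0, 2.0, 1.0], w1, w2)
```

With all-zero attention weights this fusion falls back to plain summation of the two inputs, which makes the contrast with the summation-based Res2Net block easy to see.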

2.2 Global feature fusion in ERes2Net (GFF)

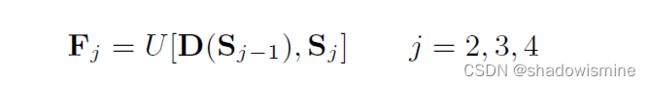

Global feature fusion (GFF) module.

GFF aims to enhance global feature interaction by modulating features of different temporal scales in the bottom-up pathway.

1. Select the multi-scale features {S_j | j = 2, 3, 4} from the last layer of each ERes2Net stage; these have different temporal resolutions.

2. Down-sample the higher-resolution ERes2Net stage outputs in the time and frequency dimensions using a 3×3 convolution kernel, and expand the channel dimension by a factor of 2 (the channel count doubles after the convolution).

3. Concatenate the stage outputs and compute the bottom-up attention to modulate {S_j | j = 2, 3, 4} through the AFF module.

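Consistent with the steps above, the fusion can be written as

$$
F_j = U\big(S_j, D(S_{j-1})\big)
$$

where D(·) denotes the down-sampling operation and F_j stands for the fusion of the (j−1)-th stage output and the j-th stage output in the bottom-up pathway.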
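The shape bookkeeping behind the down-sampling step can be checked with a few lines of Python. This is a sketch under assumptions: the stride of 2 and padding of 1 for the 3×3 down-sampling convolution, the example shapes, and the name `downsample_shape` are illustrative and not given above.

```python
def downsample_shape(shape, kernel=3, stride=2, padding=1):
    """Shape (channels, freq, time) after the down-sampling convolution D(.)."""
    channels, freq, time = shape

    def out(n):
        # Standard convolution output-size formula along one axis.
        return (n + 2 * padding - kernel) // stride + 1

    # The channel dimension is expanded by a factor of 2.
    return (2 * channels, out(freq), out(time))


# Hypothetical stage outputs: (channels, frequency bins, frames).
s2 = (128, 40, 100)
s3 = (256, 20, 50)

# After D(.), the stage-2 output has the same shape as the stage-3 output,
# so the two can be concatenated along the channel axis and passed to AFF.
d_s2 = downsample_shape(s2)
```

Doubling the channels while halving time and frequency is what lets the higher-resolution features line up with the next stage's output before fusion.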