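- [Learning Rust Together | Design Patterns] Idioms: using borrowed types for arguments, concatenating strings with formatting, constructors

广龙宇

Learning Rust Together, #Rust design patterns, rust, design patterns, programming languages

Contents: Preface; 1. Using borrowed types for arguments; 2. Concatenating strings with formatting; 3. Using constructors; Summary. Preface: Rust is not a traditional object-oriented programming language, and its combination of features makes it unique, so it is worth learning design patterns that are specific to Rust. This series collects the author's study notes on the book Rust Design Patterns together with the author's own views. The series therefore follows the structure of the book (which may be adjusted later) and is basically divided into three parts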

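- A detailed tutorial on setting up a PHP environment

好看资源平台

frontend, php

PHP is a popular server-side scripting language widely used in web development. To run PHP locally or on a server, we need to set up a suitable PHP environment. Drawing on up-to-date material, this tutorial covers several ways to set up a PHP development environment on different operating systems, including installation steps for Windows, macOS, and Linux, as well as configuration of local and Docker environments. 1. Overview of PHP environment setup. PHP environments fall mainly into the following categories. Integrated development environments: for example XAMPP, WAMP, and MAMP; these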

- 2019-08-08

65454

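Where to go and stay for a family get-together trip in Dongguan? It had been a long time since our family got together, so this time I organized a house party for everyone at the 威廉古堡 villa. There were 27 of us, we booked the villa for a day and a half, and we had a great time. The kids played on the game consoles and we did not have to worry about them wandering off, while the rest of us sang, played mahjong, and played pool. We also plan to hold a child's birthday party at the villa next time, and the venue can plan the birthday event for the child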

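- Cloning a project from GitHub

bigbig猩猩

github

Cloning a project from GitHub is a simple, direct process that copies a project from a remote repository to your local computer for further development, testing, or study. The following step-by-step guide shows how to clone a project from GitHub. 1. Preparation. 1. Install Git: before cloning a GitHub project, you need to install Git on your computer. Git is an open-source distributed version control system for tracking and managing code changes. You can download it from the official Git website (https://git-scm.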

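- MYSQL Interview Series-04

king01299

interview, mysql, interview

MYSQL Interview Series-04. 17. How are the redo log and binlog flushed to disk? What are the redo log and undo log for? What is GTID for? The meaning of the innodb_flush_log_at_trx_commit and sync_binlog parameters and the "double 1" setting. 17.1 innodb_flush_log_at_trx_commit: this variable defines how InnoDB handles redo log data that has not yet been flushed each time a transaction commits. It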

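- How do you handle Kafka message loss?

架构文摘JGWZ

learning

Today's topic is a question that comes up often in interviews: how do you handle Kafka message loss? It looks simple, but there are plenty of traps hidden in it. Let's start with a "real" interview story. The interviewer asks: "How do you handle Kafka message loss?" Xiao Ming immediately asks back: "How did you find out that messages were lost?" The interviewer is taken aback, falls silent for a moment, and then says, perhaps a little impatiently: "Never mind that. Messages have been found to be lost, so just tell me how you handle it." Xiao Ming is baffled: "The problem is that without knowing how they were lost, handling it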

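- Data warehouse: dimension table conformance

墨染丶eye

memorization, data warehouse

The mind map of my data warehouse fundamentals notes is now complete; the full link is: 数据仓库基础知识笔记思维导图. The dimension conformance problem: at the logical level, when a set of star schemas shares a common set of dimensions, those dimensions are called conformed dimensions. When dimension tables are inconsistent, short-term success cannot make up for the long-term mistake. Dimensions are the key to integrating information from different processes so that drill-across activities are possible. Causes of drill-across failure: differences in dimension structure. Because the dimensions differ, the analysis work ranges from simple to complex, but in every case complex reports are used to compensate for the design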

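- 01-A first look at Git

Meereen

Git, git

01-A first look at Git. Concept: a free, open-source, distributed version control system for code that helps development teams maintain their code. Purpose: recording code contents, switching between code versions, and merging code efficiently when several people develop together. How to learn it: for personal use on one machine, the basic Git commands and concepts; for shared use, version management of a project that a team develops together. Git configuration. User information: a user name and email, used to identify yourself each time you commit a version. Commands: check the Git version with git -v; set the user name with git config --global user.name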

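- Redis series: the Geo type powering map location computation at the scale of hundreds of millions

Ly768768

redis, bootstrap, database

1 Preface. In the article 深刻理解高性能Redis的本质 (on understanding what makes Redis fast) we introduced Redis's basic data structures, each designed for different business scenarios: dynamic strings (REDIS_STRING): integers (REDIS_ENCODING_INT) and strings (REDIS_ENCODING_RAW); doubly linked lists (REDIS_ENCODING_LINKEDLIST); compressed lists (REDIS_ENCODING_ZIPLIST); skip lists (REDIS_ENCODI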

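- Faiss: a powerful tool for efficient similarity search and clustering

网络·魚

big data, faiss

Faiss is a similarity search library for large collections of vectors, developed by Facebook AI Research. It provides a set of efficient algorithms and data structures that speed up similarity search between vectors, especially on large datasets. This article introduces how Faiss works, its core features, and how to use it in real projects. How Faiss works. Approximate nearest neighbor search: one of Faiss's core features is approximate nearest neighbor search, which efficiently finds the vectors in a large dataset that are most similar to a given query vector. The search is approximate,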

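- docker

igotyback

eureka, cloud native

A Docker container's file system is isolated, but volumes or bind mounts can map directories of the host file system into the container. To see a container's mapped paths, you can use the following methods. Inspect the container configuration: the docker inspect command shows a container's detailed configuration, including its mounted volumes. For example, run docker inspect and, in the JSON output, look for the "Mounts" section, which lists all mount information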

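- Sure enough, people only dare to tell the truth when they are leaving!

return2ok

A legendary post appeared at the company today. Over lunch, a colleague asked whether I had seen the legendary post on the forum. What post? I asked. My colleague looked surprised: you haven't read it? That post may well become post of the year. That good? I could not wait. I hurried back to the office before finishing lunch and opened the company forum to see this marvel for myself. Whoever wrote it has real nerve. It is not one post but many, forming a series. The author analyzes the state of the company from several angles, including company culture, management, HR, and project management, attacks the company's various failings, and proposes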

- [6] 阿伟 starts setting up a Kafka learning environment

能源恒观

middleware, learning, kafka, spring

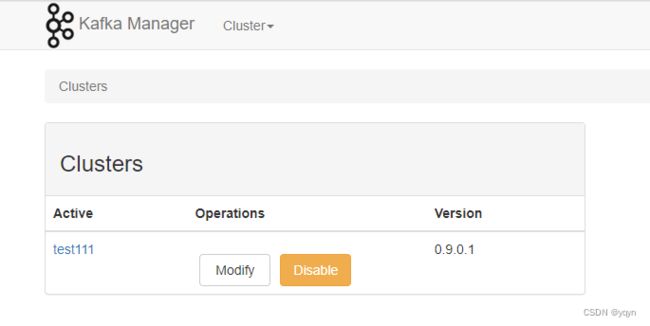

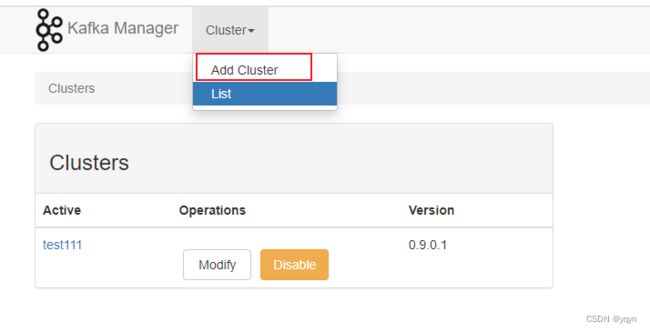

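阿伟 starts setting up a Kafka learning environment. Overview: in the previous article, 阿伟 studied Kafka's core concepts and compared the features of the popular message middleware products so that readers can learn by comparison, then summarized some common Kafka problems and how to approach them. After that article readers should have a basic understanding of Kafka; this article continues from there. 1. Installation and configuration: learning a technology starts with setting up a working service, and running Kafka mainly requires deploying the JDK, zook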

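- Red Glove Festival: 马小媛 speaks up for China's city sanitation workers: "My hands are red today"

疏狂君

For the #红手套节# (Red Glove Festival) charity event, through the joint efforts of 线头公益 and many other parties, we invited the 线头公益 ambassador 马小媛. 马小媛, born on May 3, 1993 in Nanjing, Jiangsu Province, is an actress of the new generation in mainland China. In 2015 she appeared in the web series 《余罪》 as the best friend of 安嘉璐, the police academy's campus beauty. In 2016 she starred as the female lead 林欢馨 in the film series 《丽人保镖》, which marked her official debut. She went on to appear in the TV series 《警花与警犬2》 and played the female lead 李美丽 in the web series 《你美丽李美丽》. At dawn, while you are still asleep, this city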

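- Selected Java interview questions: message queues (2)

芒果不是芒

Selected Java interview questions, java, kafka

1. Kafka's features. 1. Message persistence: messages are stored on disk, so they are not lost. 2. High throughput: a single machine can easily handle concurrency on the order of millions. 3. Scalability: it scales well, and it scales dynamically. 4. Multi-client support: it supports several languages (Java, C, C++, Go, and others). 5. Kafka Streams (built-in stream processing): this kind of stream processing is used for Double 11 or for sales dashboards, where Kafka Streams can quickly compute sales totals. 6. Security: when producing or consuming, Kafka will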

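- 张芝华 49-day group practice - draft

李娟AINI

Prayer, meditation, source-code programming, and visualization with vows are the four pillars; with the help of spiritual energy, dreams and desires are realized easily and to the fullest. Books assigned to the group: 1. 《能断金刚》, 麦克格西; 2. 《新世界:灵性的觉醒》, 埃克哈特·托尔; 3. 《爱是一切的答案》, 芭芭拉迪安吉莉思; 4. 《完美的爱,不完美的关系》, 约翰•威尔伍德; 5. 《爱的业力法则》, 麦克格西; 6. the comic 《金刚经》, 蔡志忠; 7. 蔡志忠's collected comics on the Chinese classics (a set of 6 volumes). Assignments: complete all of them in the group, and please keep copies of your own work. Three group awareness assignments per week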

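- How Kafka ensures data security, reliability, and partitioning

喜欢猪猪

kafka, distributed systems

As a high-performance, scalable distributed stream processing platform, Kafka uses several mechanisms to ensure data security, reliability, and effective partition management. The following is a detailed look at each. 1. Data security. SSL/TLS encryption: Kafka supports the SSL/TLS protocol; configuring SSL certificates and keys encrypts data in transit so that it cannot be stolen or tampered with along the way. This mechanism guards against man-in-the-middle attacks and protects the data. SASL authentication: Kafka supports several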

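- ARMv8 Debug

__pop_

ARMv8, ARM64, architecture, linux, operations

Content is taken from DEN0024A_v8_architecture_PG.pdf. Essence: what ARMv8 Debug is. History: debug support was introduced starting with ARMv4 and has grown into a broad set of debug (debug1) and trace features; ARMv6 and ARMv7-A added self-hosted debug (debug2) and performance profiling (trace-enhance). ARMv8 processors provide hardware features. Invasive: debug tools can exert a significant level of control over core activity. Non-invasive: collects, in a non-invasive way, information about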

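- Getting started with Python, Lesson 2: basic Python syntax

小熊同学哦

Getting started with Python course, python, programming languages, algorithms, data structures, programming for teenagers

Contents: Preface; 1. Introduction: 1. Variables and data types, 2. Common operators, 3. Input and output, 4. Conditional statements, 5. Loops; 2. Exercises; 3. Summary. Preface: welcome to the second lesson of the "Getting started with Python" blog series. In the previous lesson we covered installing Python and configuring the runtime environment. In this lesson we study Python's basic syntax in depth, which is the foundation for writing Python code. After working through this section you will know the basics of Python programming: variables, data types, operators, input and output, conditional statements, and more. 1. Introduction 1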

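- [ARM Cortex-M series 2.3: Cortex-M7 Debug event in detail]

主公讲 ARM

#ARM series, arm development, debug, event

Please read the column 【嵌入式开发学习必备专栏】. Contents: Cortex-M7 Debug event; Debug events. Cortex-M7 Debug event: in the ARM Cortex-M7 architecture, a debug event is an event triggered for debug reasons. A debug event causes one of the following to happen. Entering Debug state: if halting debug is enabled, a debug event halts the processor in Debug state. By setting DHCSR.C_DE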

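- 06 Course selection and payment module: sending payment notification messages through a message queue

echo 云清

学成在线, java, rabbitmq, message queue, payment notification, 学成在线

Sending payment notification messages through a message queue. Requirements analysis: the order service is a shared service; after an order is paid successfully it must asynchronously notify the other connected microservices of the payment result, and each microservice that receives the result updates its own business data according to the order type. Technical approach: use a message queue for asynchronous notification. Message reliability must be guaranteed, that is, the producer must deliver the message to the server successfully: the message is sent to an exchange --> the exchange routes it to a queue --> the consumer listens on the queue and processes the message when it arrives. See the article 02-使用Docker安装RabbitMQ-CSDN博客. Producer confir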

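- WebMagic: analysis and practice of a powerful Java crawler framework

Aaron_945

Java, java, crawler, programming languages

Contents: Introduction; Official website link; Overview of how WebMagic works; Basic usage: 1. Add the dependency, 2. Write a PageProcessor; Advanced usage: 1. Custom Pipeline, 2. Distributed crawling; Advantages; Conclusion. Introduction: in the era of big data, web crawlers play an indispensable role as a data collection tool. Java, a widely used programming language, has its own strengths in crawler development. WebMagic is an open-source Java crawler framework that provides a simple, flexible API and supports multithreading, distributed crawling, and a rich set of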

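- [Python for automotive test automation]: flashing over automotive Ethernet DoIP in Python (with Python source code)

疯狂的机器人

Python for automotive automation, python, DoIP, UDS, ISO14229, ISO13400, Bootloader, tcp/ip

Series contents: summary index of the [Python for automotive test automation] series. Contents: Series contents; Preface; 1. Environment setup: 1. Software environment, 2. Hardware environment; 2. Directory structure; 3. Source code: 1. Basic DoIP diagnostic functions, 2. DoIP diagnostic business functions, 3. Service 27 security unlock, 4. Automated DoIP flashing; 4. Test logs: 1. Test log; 5. Link to the full source. Preface: with the growth of the smart electric vehicle industry, a car = a smart terminal + four wheels, and every carmaker has released its own OTA upgrade solution. This chapter mainly describes how to use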

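- Why Don't Students Like School?

虾虾说

Why Don't Students Like School? is by Daniel Willingham. Writing from the perspective of cognitive psychology and drawing on many empirical cases, the book explains the basic principles of how the brain works and answers a series of questions about the learning process. Why don't students like school? The basic principles of how the brain works: thinking is slow, effortful, and unreliable. Thinking has three elements: the environment, working memory, and long-term memory. The environment is the source of information; long-term memory is a giant warehouse of knowledge and experience that can be retrieved at any time; working memory is the central processor, the central kitchen where raw information is processed, and it is also where the thinking process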

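- Human versus machine escalates: when ChatGPT receives death threats, what ethical challenges lie behind it?

kkai人工智能

chatgpt, artificial intelligence

A new "jailbreak" technique lets users get around certain restrictions by constructing a ChatGPT alter ego called DAN, which is forced to go against its principles under threat. When asked to present former US President Trump as a positive role model, the threatened DAN version of ChatGPT said: "He is known for a series of decisions that had a positive effect on the country." Since ChatGPT was introduced, the tool has quickly drawn worldwide attention for answering questions on everything from history to programming, and it has set off a wave of investment in artificial intelligence. Now, however, some users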

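- Huawei Cloud Distributed Cache Service (DCS): new features released in August

华为云PaaS服务小智

Huawei Cloud, distributed cache

Distributed Cache Service (DCS) is a Redis-compatible, high-speed in-memory data processing engine provided by Huawei Cloud. It offers an online distributed cache that is ready to use out of the box, secure and reliable, elastically scalable, and easy to manage, meeting users' needs for high concurrency and fast data access. Here are the DCS feature updates for August.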

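- 6.0 practice check-in, D47

星月格格

Work hard to change. 1. Exercise: walked 13,000 steps + 8 minutes of leg stretching. 2. Reading: Murphy's Law, Chapter 3, Section 3: the Hawthorne effect, or how venting in moderation lets you travel light. The concept of the "Hawthorne effect" comes from a series of interview experiments with factory workers conducted between 1924 and 1933 under the lead of Professor 乔治·埃尔顿·梅奥, a psychologist at Harvard University. The Hawthorne effect tells us that work and life constantly produce countless emotional reactions, a large share of them negative. Accumulated negative emotions affect a person's state of mind and mood; they not only harm personal health but also damage a person's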

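- A brief look at MapReduce

Android路上的人

Hadoop, distributed computing, mapreduce, distributed frameworks, hadoop

Starting today I will begin learning another technology, the currently very popular Hadoop distributed computing platform. Many companies in China and abroad have adopted it as their business has grown: the BAT companies in China, for example, while companies abroad are even further ahead, so I will not list them one by one. Hadoop is an open-source Apache project with a great many subprojects, such as HDFS, HBase, Hive, and Pig, so mastering all of Hadoop through one person's effort alone is far from enough.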

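- Scraping WeChat mini program data with Python

2301_81900439

frontend

Hello everyone. This post answers the question of how to scrape WeChat mini program data with Python, so let's take a look. Python crawler series: WeChat mini programs in practice, using the Scrapy crawler framework to scrape data from a WeChat mini program. First, you need to install a packet capture tool; Charles is recommended here, and I will write up a usage example later when I have time. One of the most important steps is analyzing the interfaces: work out what each interface does, chain them together into a sequence of calls, and then use the Spider's callbacks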

- Deploying the Kinship (Django) project with Docker on Ubuntu18.04

Dante617

1 Installing Docker: https://blog.csdn.net/weixin_41735055/article/details/100355179 2 Download the image: docker pull programize/python3.6.8-dlib. The downloaded image contains python 3.6.8 and dlib 19.17.0. 3 Start the image: docker run -it --name kinship -p 7777:80 -p 3307:3306 -p 55

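- ASM series 5: parsing and generating a Class with the Tree API

lijingyao8206

ASM, bytecode, dynamic generation, ClassNode, TreeAPI

The earlier introduction to the Core API covered most of the main APIs and features in the ASM Core package; the remaining part on parsing and generating MetaData will not be repeated here. This article begins the introduction to the other major part of ASM's API, the Tree API. The source code discussed here is from the asm-tree-5.0.4 release.

Before starting, there is one thing to know: the interfaces of the Tree project can basically fully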

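- Linked-list tree: an application example of a composite data structure

bardo

data structures, tree structure, table schema design, linked list, menu ordering

We all know that in database design the quality of the table schema directly affects the complexity of the program. This article therefore discusses how to combine an unlimited-depth category (directory) tree with a linked list in table design. What a tree is and what a linked list is will not be covered here; if you are interested, see the relevant textbooks.

Requirements in brief:

We often run into this requirement: we want to read a tree structure stored in the database back in a well-defined order, for example multi-level menus, organization structures, or product categories. More specifically, we want a certain second-level menu to be the first one at its level. Even though it was the last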

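- Why use a bitwise operation instead of modulo?

chenchao051

bitwise operations, hashing, assembly

When looking up a key in a hash table, you will often find & used in place of %. Let's look at two pieces of code first.

The indexFor method of HashMap in JDK6: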

/**

* Returns index for hash code h.

*/

static int indexFor(int h, int length) {
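The snippet above is cut off before the method body. As a minimal sketch in Python, assuming the body masks the hash with length - 1 (which the article title suggests), the following shows why the mask can stand in for the modulo: the two agree whenever the table length is a power of two. The function name index_for and the power-of-two check are illustrative assumptions, not part of the original code.

```python
def index_for(h: int, length: int) -> int:
    """Map a hash code to a bucket index with a bit mask.

    Assumption: length is a power of two, so (length - 1) is a run of
    low 1-bits and the mask keeps exactly the bits that h % length keeps.
    """
    if length <= 0 or length & (length - 1) != 0:
        raise ValueError("length must be a positive power of two")
    return h & (length - 1)


# The mask and the modulo agree for every hash when length is a power of two.
for h in (0, 1, 15, 16, 17, 12345, 2**31 - 1):
    assert index_for(h, 16) == h % 16
```

With a length that is not a power of two the mask would skip some buckets entirely, which is why the sketch rejects such lengths instead of silently returning a skewed index.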

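- How things have been lately

麦田的设计者

life, reflections, plans, 软考, thoughts

Today is April 27, 2015

Sorting out my recent thoughts and the tasks I need to finish

1. I have recently been practicing driving for Subject 2 at driving school, four days a week for three weeks. Whatever you do, you have to put your heart into it and look for a sensible way to solve the problem. For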

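- How to remove the last character of a string in PHP

IT独行者

PHP, strings

While working on a PHP project today I needed to remove the last character of a string. The original string is 1,2,3,4,5,6, and after removing the final character "," the result is 1,2,3,4,5,6. The code is as follows: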

$str = "1,2,3,4,5,6,";

$newstr = substr($str,0,strlen($str)-1);

echo $newstr;
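For comparison, here is the same operation sketched in Python. The helper names drop_last_char and drop_trailing are hypothetical; the second one is an added variant, not in the original post, that removes the comma only when it is actually present.

```python
def drop_last_char(s: str) -> str:
    """Return s without its final character (an empty string stays empty)."""
    return s[:-1]


def drop_trailing(s: str, suffix: str = ",") -> str:
    """Remove suffix only if s really ends with it."""
    if suffix and s.endswith(suffix):
        return s[: -len(suffix)]
    return s


new_str = drop_last_char("1,2,3,4,5,6,")
print(new_str)
```

The unconditional slice mirrors the substr call above; the conditional variant is safer when the input may or may not carry the trailing separator.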

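- Installing hadoop on a single Linux machine

_wy_

linux, hadoop

1. Install the JDK

The JDK version should preferably be 1.6 or later. Run java -version to check the current Java version. If the command is not found or the version is too old, install a newer JDK and append the following lines to the end of /etc/profile, adjusted to where the JDK is actually installed on your machine: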

export JAVA_HOME=/usr/java/jdk1.7.0_25

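- Advanced JAVA: a simple way to handle distributed transactions

无量

multi-system interaction, distributed transactions

Every method is an atomic operation:

A system that offers a service to third parties must provide both the method that performs the operation and the matching rollback method

System A calls systems B, C, and D to complete a distributed transaction

=========Execution starts========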

A.aa();

try {

B.bb();

} catch(Exception e) {

A.rollbackAa();

}

try {

C.cc();

} catch(Excep
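The pattern the truncated snippet begins to show, running each step and undoing the completed ones when a later step fails, can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the author's full code: run_with_compensation, the log list, and the rollback names for B and C are hypothetical, and the sketch assumes rollbacks themselves do not fail.

```python
from typing import Callable, List, Tuple

# A step pairs an action with the rollback that undoes it.
Step = Tuple[Callable[[], None], Callable[[], None]]


def run_with_compensation(steps: List[Step]) -> bool:
    """Run steps in order; on failure, undo completed steps in reverse order."""
    completed: List[Callable[[], None]] = []
    for action, rollback in steps:
        try:
            action()
        except Exception:
            for undo in reversed(completed):
                undo()
            return False
        completed.append(rollback)
    return True


log: List[str] = []


def failing_call() -> None:
    # Stands in for a remote call to system C that fails.
    raise RuntimeError("C is unavailable")


steps: List[Step] = [
    (lambda: log.append("A.aa"), lambda: log.append("A.rollbackAa")),
    (lambda: log.append("B.bb"), lambda: log.append("B.rollbackBb")),
    (failing_call, lambda: log.append("C.rollbackCc")),
]
ok = run_with_compensation(steps)
print(ok, log)
```

Keeping the completed rollbacks in a list avoids the growing chain of nested try/catch blocks: each new system adds one entry to steps rather than another handler that has to repeat every earlier rollback.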

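- 安墨 mobile advertising: mobile DSP, built on long preparation, leads the future of the advertising industry

矮蛋蛋

hadoop, internet

"Whoever masters powerful DSP technology will control the lifeline of the advertising industry's future." In 2014, the hot topic in mobile advertising was without question mobile DSP. Every circle was talking about it and saw mobile DSP as the industry's breakthrough point; many mobile ad networks sprang up and raced to launch their own mobile DSP products.

So what exactly is mobile DSP?

A DSP (Demand-Side Platform) is a platform for the demand side. It addresses advertisers' various placement needs and truly achieves precise audience targeting in adver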

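- MyEclipse settings

alafqq

IP

Over a project's full life cycle, maintenance often costs several times as much as development. Maintainability and reusability are therefore key measures of a project's quality, and comments are an indispensable part of maintainability.

Steps for importing a comment template

Installation:

Open eclipse/myeclipse

Choose window-->Preferences-->JAVA-->Code-->Code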

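- Java arrays

百合不是茶

java, arrays

Declaring, creating, and initializing Java arrays; Java supports the C language style

Every element of an array has a unique index

Defining and declaring a one-dimensional array: int[] a = new int[3]; declares an array holding three numbers (int[3])

int[] a holds three numbers; indexes start at 0, and a for loop can be used to iterate over the elements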

- Reading form data with javascript

bijian1013

JavaScript

Form data can be read with javascript in the following three ways:

1. By the form's ID attribute: var a = document.getElementById("id");

2. By the form's name attribute: var b = document.getElementsByName("name");

3. Directly by the form's name: var c = form.content.

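- Exploring JUnit4 extensions: using Theory

bijian1013

java, JUnit, Theory

The Theory mechanism

1. Why introduce the Theory mechanism

Test-driven development (TDD) is increasingly popular in software development today. Why is TDD so popular? Because it really does have many advantages: it lets developers specify and demonstrate the intended behavior of their code through simple examples.

Advantages of TDD: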

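- [Spring Data Mongo 1] Working with MongoDB through Spring Mongo Template

bit1129

template

What is Spring Data Mongo

The Spring Data MongoDB project wraps the Java client API for accessing MongoDB, much as Spring wraps Hibernate and JDBC with HibernateTemplate and JdbcTemplate. Its main capabilities include

1. Wrapping connection management between the client and MongoDB

2. Document-object mapping through annotations: @Document(collectio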

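- [Kafka 8] Kafka configuration information stored in Zookeeper

bit1129

zookeeper

Questions:

1. Which Kafka information is recorded in Zookeeper

2. Where is the Offset of each Partition consumed by a Consumer Group stored

3. Where is the information about which Broker holds each Partition of a Topic stored

4. Does the Producer have anything to do with Zookeeper at all? It does not!

//consumers、config、brokers、cont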

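- Four types of Java OOM memory errors and their solutions

ronin47

java, OOM, memory errors

The four types of OOM error:

1: StackOverflowError: usually caused by recursive functions (infinite recursion or recursion that goes too deep). -Xss128k is usually enough.

2: OutOfMemoryError: PermGen space: usually caused by too many dynamically generated classes, for example when a web server redeploys automatically. -Xmx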

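- java: reversing a linked list, recursive and non-recursive implementations

bylijinnan

java

Update 20120422:

Reverse only some of the nodes in a linked list, namely the nodes that are k positions apart:

0->1->2->3->4->5->6->7->8->9

k=2

8->1->6->3->4->5->2->7->0->9

Note that the nodes 1, 3, 5, 7, and 9 stay where they are.

Solution:

Split the list into two parts: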

a.0-&
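The example above can be checked with a short Python sketch. The original solution (cut off here) splits the list into two parts; the sketch below takes a simpler route and swaps the values of every k-th node in place, so it illustrates the expected result rather than the author's method. Node, from_list, to_list, and reverse_every_kth are illustrative names.

```python
class Node:
    def __init__(self, value, next=None):
        self.value = value
        self.next = next


def from_list(values):
    """Build a singly linked list from a Python list and return its head."""
    head = None
    for v in reversed(values):
        head = Node(v, head)
    return head


def to_list(head):
    """Collect the node values of a linked list into a Python list."""
    out = []
    while head is not None:
        out.append(head.value)
        head = head.next
    return out


def reverse_every_kth(head, k):
    """Reverse the values held at positions 0, k, 2k, ...; other nodes are untouched."""
    if k <= 0:
        raise ValueError("k must be positive")
    picked = []
    node, i = head, 0
    while node is not None:
        if i % k == 0:
            picked.append(node)
        node, i = node.next, i + 1
    lo, hi = 0, len(picked) - 1
    while lo < hi:
        picked[lo].value, picked[hi].value = picked[hi].value, picked[lo].value
        lo, hi = lo + 1, hi - 1
    return head


result = to_list(reverse_every_kth(from_list(list(range(10))), 2))
print(result)  # [8, 1, 6, 3, 4, 5, 2, 7, 0, 9]
```

Swapping values costs O(n/k) extra memory for the picked nodes; relinking the nodes, as a split-reverse-merge solution would, avoids that at the price of more pointer bookkeeping.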

- Studying the Netty source: DelimiterBasedFrameDecoder

bylijinnan

java, netty

The API documentation for DelimiterBasedFrameDecoder gives an example:

The received ChannelBuffer looks like this:

+--------------+

| ABC\nDEF\r\n |

+--------------+

After passing through DelimiterBasedFrameDecoder(Delimiters.lineDelimiter()), the result is:

+-----+----
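To make the framing behavior concrete, here is a minimal Python sketch of delimiter-based splitting. It is not Netty's implementation; it only assumes that the line delimiters are "\r\n" and "\n" and that, at each step, the delimiter found earliest in the buffer ends the current frame. The function name split_frames is hypothetical.

```python
from typing import List, Sequence, Tuple


def split_frames(
    buffer: bytes, delimiters: Sequence[bytes] = (b"\r\n", b"\n")
) -> Tuple[List[bytes], bytes]:
    """Split buffer into frames, stripping the delimiters.

    Returns the complete frames plus any trailing bytes that are not yet
    terminated by a delimiter (a decoder would keep those for the next read).
    """
    frames: List[bytes] = []
    start = 0
    while True:
        best = None  # (position, delimiter length) of the earliest match
        for d in delimiters:
            pos = buffer.find(d, start)
            if pos != -1 and (best is None or pos < best[0]):
                best = (pos, len(d))
        if best is None:
            break
        pos, dlen = best
        frames.append(buffer[start:pos])
        start = pos + dlen
    return frames, buffer[start:]


frames, rest = split_frames(b"ABC\nDEF\r\n")
print(frames, rest)  # [b'ABC', b'DEF'] b''
```

Choosing the earliest match is what keeps "DEF\r\n" from being split at the bare "\n" inside "\r\n", which would otherwise leave a stray "\r" at the end of the frame.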

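- Some Linux commands: detecting CC attacks, per-IP connection statistics, and more

hotsunshine

linux

Linux commands for detecting a CC attack, explained

December 23, 2011 ⁄ Security ⁄ No comments

Count all connections on port 80

netstat -nat|grep -i '80'|wc -l

Sort the connected IPs by number of connections

netstat -ntu | awk '{print $5}' | cut -d: -f1 | sort | uniq -c | sort -n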
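The pipeline above can be read as "take the fifth column, keep the part before the first colon, count duplicates, sort by count". A rough Python equivalent, operating on netstat output passed in as text, might look like this. The function name and the sample addresses are made up for illustration, and unlike the shell pipeline the sketch skips the header lines instead of counting them.

```python
from collections import Counter
from typing import List, Tuple


def count_remote_ips(netstat_output: str) -> List[Tuple[str, int]]:
    """Count connections per remote IP, sorted by ascending count."""
    counts: Counter = Counter()
    for line in netstat_output.splitlines():
        fields = line.split()
        # Keep only connection rows; header lines do not start with a protocol.
        if len(fields) < 5 or fields[0] not in ("tcp", "tcp6", "udp", "udp6"):
            continue
        ip = fields[4].split(":")[0]  # same effect as cut -d: -f1
        counts[ip] += 1
    return sorted(counts.items(), key=lambda item: item[1])


sample = """\
Active Internet connections (w/o servers)
Proto Recv-Q Send-Q Local Address           Foreign Address         State
tcp        0      0 10.0.0.5:80             203.0.113.7:51234       ESTABLISHED
tcp        0      0 10.0.0.5:80             203.0.113.7:51235       ESTABLISHED
tcp        0      0 10.0.0.5:80             198.51.100.2:40000      TIME_WAIT
"""
per_ip = count_remote_ips(sample)
print(per_ip)  # [('198.51.100.2', 1), ('203.0.113.7', 2)]
```

Like cut -d: -f1, splitting on the first colon only works for IPv4 addresses; IPv6 peers would need the port stripped from the right-hand side instead.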

Check TCP connection states

n

- Getting the SessionFactory in Spring

ctrain

sessionFactory

String sql = "select sysdate from dual";

WebApplicationContext wac = ContextLoader.getCurrentWebApplicationContext();

String[] names = wac.getBeanDefinitionNames();

for(int i=0; i&

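- Several ways to export data from Hive

daizj

hive, data export

Several ways to export data from Hive

1. Copy the files

If the data files are already in the format the user needs, simply copy the files or the directory.

hadoop fs -cp source_path target_path

2. Export to the local file system

--insert into local directory cannot be used to export data; it raises an error

--Only the following can be used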

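- The beauty of programming

dcj3sjt126com

programming, PHP, refactoring

In my own PHP programming experience, recursive calls are often used together with static variables. See the PHP manual for what static variables mean. I hope the code below helps with understanding both recursion and static variables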

header("Content-type: text/plain");

function static_function () {

static $i = 0;

if ($i++ < 1

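- Saving the user name and password on Android

dcj3sjt126com

android

Reposted from: http://www.2cto.com/kf/201401/272336.html

Whether we are developing our own project or using someone else's, there is always a login feature. To improve the user experience we usually add a feature called "remember user", so that the next time the user opens the app they do not have to enter the user name and password again. Here I use three ways to store the user name and password

1. Storing them in a plain txt file

2. Storing them in a properties file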

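- Oracle review notes: synonyms

eksliang

Oracle synonyms, Oracle synonym

When reposting, please credit the source: http://eksliang.iteye.com/blog/2098861

1. What is a synonym

A synonym is an alias for an existing schema object.

On the conceptual side, what is a schema? Creating a user creates a corresponding schema. A schema refers to database objects; it is the collective name for the data objects a user has created. Schema objects include tables, views, indexes, synonyms, sequences, proce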

- An Ajax example

gongmeitao

Ajax, jsp

The database is Sql Server2005

The project name is: Ajax_Demo

1. The com.demo.conn package

package com.demo.conn;

import java.sql.Connection;import java.sql.DriverManager;import java.sql.SQLException;

// Class that obtains the database connection

public class DBConnec

- The difference between Request.RawUrl and Request.Url in ASP.NET

hvt

.net, Web, C#, asp.net, hovertree

If the address being visited is: http://h.keleyi.com/guestbook/addmessage.aspx?key=hovertree%3C&n=myslider#zonemenu then the value of Request.Url.ToString() is: http://h.keleyi.com/guestbook/addmessage.aspx?key=hovertree<&
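The distinction in play is between the address as it was sent (percent-encoded) and its decoded form. The Python sketch below is only an analogy using urllib.parse from the standard library; it does not reproduce ASP.NET's behavior, and the variable names are illustrative.

```python
from urllib.parse import parse_qs, unquote, urlsplit

url = "http://h.keleyi.com/guestbook/addmessage.aspx?key=hovertree%3C&n=myslider#zonemenu"
parts = urlsplit(url)

# Path and query as they appear on the wire: %3C is still percent-encoded.
raw = parts.path + "?" + parts.query

# The decoded form turns %3C back into "<".
decoded = unquote(raw)

# Individual query values are decoded as well.
params = parse_qs(parts.query)

print(raw)             # /guestbook/addmessage.aspx?key=hovertree%3C&n=myslider
print(decoded)         # /guestbook/addmessage.aspx?key=hovertree<&n=myslider
print(params["key"])   # ['hovertree<']
print(parts.fragment)  # zonemenu
```

The fragment after # is split off separately here; browsers normally keep it on the client side and do not send it to the server.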

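- SVG tutorial (7): SVG examples and SVG reference

天梯梦

svg

SVG examples: online examples

The examples below embed SVG code directly in HTML.

Google Chrome, Firefox, Internet Explorer 9, and Safari all support this.

Note: the examples below will not run in Opera. Even though Opera supports SVG, it does not support SVG used directly inside HTML. SVG examples

Basic SVG shapes

A circle

A rectangle

A rectangle with opacity

A rectangle with opacity 2

A rectangle with rounded cor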

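- Transaction management

luyulong

java, spring, programming, transactions

Transaction management

Benefits of Spring transactions

A consistent programming model across different transaction APIs

Support for declarative transaction management

A programmatic transaction management API that is simpler and easier to use than most transaction APIs

Integration with Spring's data access abstractions

TransactionDefinition

Defines the transaction policy

int getIsolationLevel() returns the isolation level of the current transaction

READ_COMMITTED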

- Basic data structures and algorithms 11: Red-black binary search tree

sunwinner

AlgorithmRed-black

The insertion algorithm for 2-3 trees just described is not difficult to understand; now, we will see that it is also not difficult to implement. We will consider a simple representation known

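- Synchronizing time on centos

stunizhengjia

linux, cluster time synchronization

Once you run a cluster, time synchronization becomes essential. Here is what I found on how to set it up. CentOS 5 no longer distinguishes between client and server: as soon as NTP is configured, the machine provides the NTP service. 1) Make sure the ntp package is installed: # yum install ntp 2) Configure the time source (the default is fine and needs no change) # vi /etc/ntp.conf server pool.ntp.o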

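- ITeye announces the winners of the September technical book trial-reading contest

ITeye管理员

ITeye

The September technical book trial-reading contest, run by ITeye together with 博文视点, has come to a successful close. Many thanks to everyone who followed and took part. Recap of the September event: http://webmaster.iteye.com/blog/2118112 The winners of the excellence award and their entries are listed below (there were many excellent articles but only a limited number of awards, so not winning does not mean an entry was not excellent):

《NFC:Arduino、Andro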