Ubuntu 18.04 + ELK Architecture (Log Collection, Filtering, and Visualization)

Contents:

1. Build a filebeat → kafka/redis → logstash → elasticsearch → kibana cluster architecture

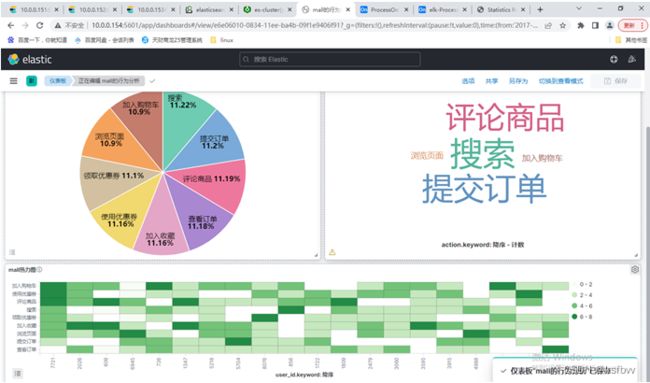

2. Collect nginx, tomcat, and app logs

3. Kibana log visualization: show users' locations on a map based on their real IP addresses

Abbreviations used below: fb = filebeat, es = elasticsearch, ls = logstash.

1. Build a filebeat → kafka/redis → logstash → elasticsearch → kibana cluster architecture

1.1 Mind map

1.2 Server plan

Plan the servers according to the mind map (numbers are the last octet of each 10.0.0.x address):

- 154: kibana
- 151, 152, 153: es1, es2, es3
- 201, 202: logstash1, logstash2
- 203: filebeat2/rsyslog; 204: filebeat1
- 205: redis; 207: mysql
- 206, 208, 209: kafka

1.3 Set hostnames and initialize

1.31 Set hostnames (omitted; see the earlier article)

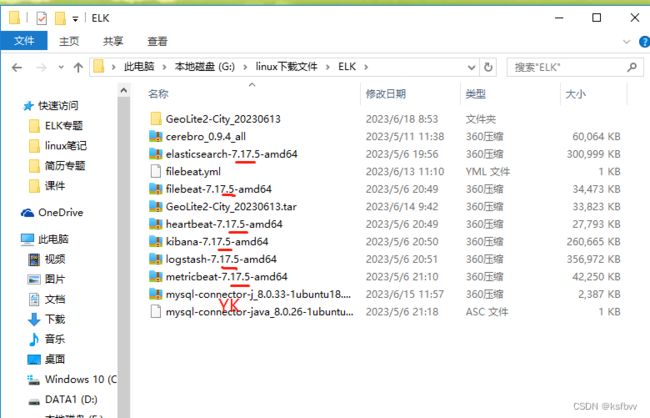

Note: all ELK components must be exactly the same version (the packages are included in the uploaded resources).

1.32 Initialization

1.321 Hostname resolution

vim /etc/hosts

```
10.0.0.151 es-node1
10.0.0.152 es-node2
10.0.0.153 es-node3
```

1.322 Disable the firewall and SELinux

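```
# stop NetworkManager and keep it from starting again
sudo systemctl stop NetworkManager
sudo systemctl disable NetworkManager
sudo systemctl mask NetworkManager
# disable the ufw firewall and confirm it reports "inactive"
sudo ufw disable
sudo ufw status
```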

Ubuntu does not use SELinux (it ships AppArmor instead), so there is nothing to disable there.

1.323 Kernel parameter tuning (without this, Elasticsearch fails to start)

```
# as root on each es node; Elasticsearch's bootstrap checks require vm.max_map_count >= 262144
echo "vm.max_map_count = 262144" >> /etc/sysctl.conf
echo "fs.file-max = 1000000" >> /etc/sysctl.conf
sysctl -p
```
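To confirm the two kernel parameters actually took effect after `sysctl -p`, you can read them back from `/proc/sys`. Below is a minimal Python sketch, assuming it runs on the es node itself; `REQUIRED`, `sysctl_path`, and `check_sysctl` are hypothetical names, and the thresholds are simply the values written above.

```python
from pathlib import Path

# Minimum values written to /etc/sysctl.conf above
REQUIRED = {
    "vm.max_map_count": 262144,
    "fs.file-max": 1000000,
}


def sysctl_path(key: str, root: str = "/proc/sys") -> Path:
    """Map a dotted sysctl key to its file, e.g. vm.max_map_count -> /proc/sys/vm/max_map_count."""
    return Path(root, *key.split("."))


def check_sysctl(required: dict, root: str = "/proc/sys") -> dict:
    """Return {key: (current, minimum)} for every key whose current value is too low."""
    too_low = {}
    for key, minimum in required.items():
        # each file holds a single integer followed by a newline
        current = int(sysctl_path(key, root).read_text().split()[0])
        if current < minimum:
            too_low[key] = (current, minimum)
    return too_low
```

Calling `check_sysctl(REQUIRED)` on each node should return an empty dict; any entry it returns names a parameter that is still below the value Elasticsearch needs.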

1.324 Raise resource limits

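vim /etc/security/limits.conf

```
* soft core unlimited
* hard core unlimited
* soft nproc 1000000
* hard nproc 1000000
* soft nofile 1000000
* hard nofile 1000000
* soft memlock 32000
* hard memlock 32000
* soft msgqueue 8192000
* hard msgqueue 8192000
```

These limits are applied by pam_limits to login sessions; services started by systemd take their limits from the unit file (LimitNOFILE=, LimitNPROC=, and so on) instead.

Reboot the system:

```
reboot
```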
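After the reboot, a quick way to check that the new limits apply to a fresh login session is to ask the kernel for the limits of a process started from that shell. A minimal Python sketch using the standard `resource` module; `TARGETS`, `meets`, and `check_limits` are hypothetical names, and only `nofile` and `nproc` are checked here.

```python
import resource

# Targets from /etc/security/limits.conf above (soft and hard use the same value)
TARGETS = {
    "nofile": (resource.RLIMIT_NOFILE, 1000000),
    "nproc": (resource.RLIMIT_NPROC, 1000000),
}


def meets(limit: int, target: int) -> bool:
    """An unlimited value satisfies any target.

    RLIM_INFINITY may be reported as a negative number (-1 on Linux),
    so it is compared by equality before the numeric check.
    """
    return limit == resource.RLIM_INFINITY or limit >= target


def check_limits(targets: dict = TARGETS) -> dict:
    """Return {name: (soft, hard)} for limits of *this process* that are below target."""
    short = {}
    for name, (res, target) in targets.items():
        soft, hard = resource.getrlimit(res)
        if not (meets(soft, target) and meets(hard, target)):
            short[name] = (soft, hard)
    return short
```

Because a process inherits its limits from the shell that started it, run this from a new login shell; an empty result means limits.conf took effect for that session.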

1.4 Elasticsearch cluster setup

Edit the install script install_elasticsearch_cluster.sh (included in the uploaded resources), then run it:

```
bash install_elasticsearch_cluster.sh
```

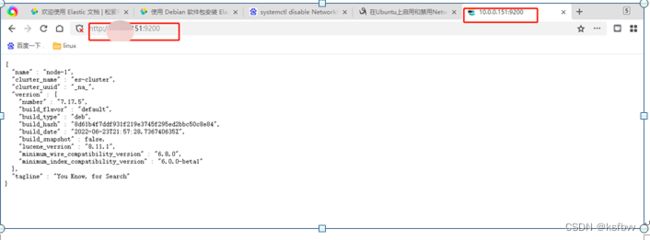

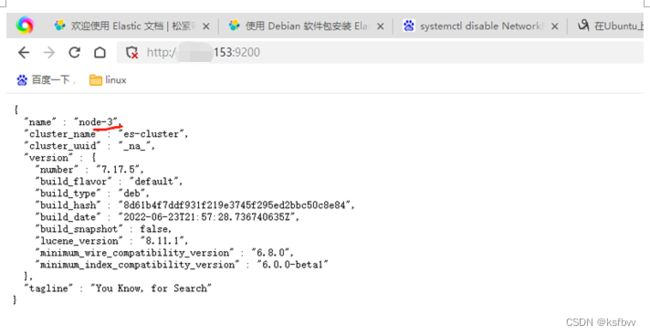

Done. Test access:

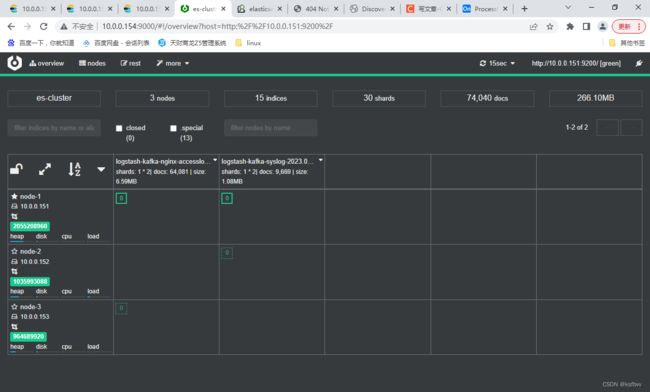

Cluster status (note: the cluster is working correctly only when every node reports the same cluster_uuid):
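The uuid comparison can be scripted. Each node's root endpoint (`GET /`) returns `cluster_name` and `cluster_uuid`; a node that has not yet joined a formed cluster reports `"_na_"`. A minimal Python sketch, assuming security is not enabled on the cluster and using the node addresses from the server plan; `fetch_root` and `same_cluster` are hypothetical helper names.

```python
import json
import urllib.request

# Node addresses from the server plan; adjust to your environment
NODES = ["http://10.0.0.151:9200", "http://10.0.0.152:9200", "http://10.0.0.153:9200"]


def fetch_root(url: str, timeout: float = 5.0) -> dict:
    """GET the node's root endpoint, which includes cluster_name and cluster_uuid."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def same_cluster(infos: list) -> bool:
    """True when every node reports one identical, assigned cluster_uuid."""
    uuids = {info.get("cluster_uuid") for info in infos}
    return len(uuids) == 1 and "_na_" not in uuids and None not in uuids
```

Usage: `same_cluster([fetch_root(n) for n in NODES])` should return `True` once all three nodes have formed one cluster.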

1.41 Elasticsearch high availability (typically 3 primary shards with 2 replicas)

```
curl -XPUT '10.0.0.151:9200/index2' -H 'Content-Type: application/json' -d '
{
  "settings": {
    "index": {
      "number_of_shards": 3,
      "number_of_replicas": 2
    }
  }
}'
```
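Why 2 replicas on 3 nodes: the index has 3 primaries × (1 + 2 replicas) = 9 shard copies, and Elasticsearch never places two copies of the same shard on one node. With 3 nodes, 2 replicas is therefore the most that can be fully assigned, and each node ends up holding one copy of every shard. The Python sketch below is a toy round-robin placement that enforces only that one rule. It is not Elasticsearch's real allocator, and `allocate` is a hypothetical name.

```python
from collections import defaultdict


def allocate(shards: int, replicas: int, nodes: list) -> dict:
    """Toy placement: node -> list of (shard_id, role).

    Only the "no two copies of one shard on the same node" rule is modelled.
    Copies that cannot be placed are listed under the key "UNASSIGNED".
    """
    placement = defaultdict(list)
    for shard in range(shards):
        copies = ["primary"] + ["replica"] * replicas
        for i, role in enumerate(copies):
            if i < len(nodes):
                # rotate the starting node per shard so primaries spread out;
                # (shard + i) % n is distinct for every i < n
                node = nodes[(shard + i) % len(nodes)]
                placement[node].append((shard, role))
            else:
                placement["UNASSIGNED"].append((shard, role))
    return dict(placement)
```

For example, `allocate(3, 2, ["es1", "es2", "es3"])` gives each node three copies of three different shards. Asking for 3 replicas on the same three nodes leaves one copy per shard unassigned, which would also turn the index yellow in a real cluster.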

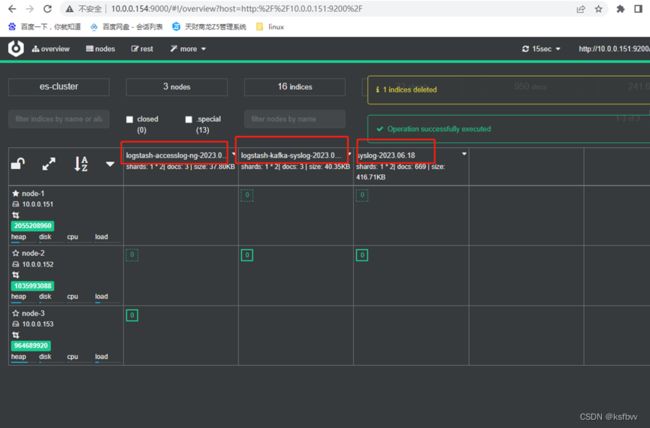

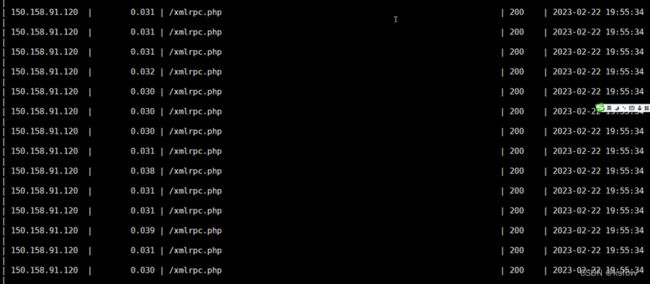

Install a plugin to inspect the cluster:

1.42 Cerebro (a web admin tool for Elasticsearch)

GitHub: https://github.com/lmenezes/cerebro

### 1.5 Filebeat cluster setup


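Official documentation (this link is for 8.3; the package installed below is 7.17.5, so switch the docs to the 7.17 version):

https://www.elastic.co/guide/en/beats/filebeat/8.3/configuration-generaloptions.html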

```
root@k8s-ha1:~# dpkg -i filebeat-7.17.5-amd64.deb    # dpkg: the Debian package manager
filebeat --help
```

1.51 Edit the configuration file

```
root@es-filebeat:~# grep -Ev "#|^$" /etc/filebeat/filebeat.yml
```

```yaml
filebeat.inputs:
- type: filestream
  id: my-filestream-id
  enabled: false
  paths:
    - /var/log/*.log
filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: false
setup.template.settings:
  index.number_of_shards: 1
setup.kibana:
output.elasticsearch:
  hosts: ["localhost:9200"]
processors:
  - add_host_metadata:
      when.not.contains.tags: forwarded
  - add_cloud_metadata: ~
  - add_docker_metadata: ~
  - add_kubernetes_metadata: ~
```

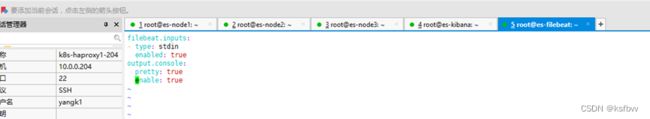

1.52 Write a test yml file

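```
root@es-filebeat:~# vim /etc/filebeat/stdin.yml
```

```yaml
filebeat.inputs:
- type: stdin
  enabled: true
output.console:
  pretty: true
  enabled: true
```

Start filebeat in the foreground with this file (for example `filebeat -e -c /etc/filebeat/stdin.yml`) and type a line; the event is printed to the console as JSON.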

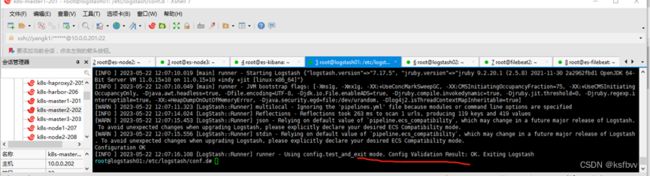

1.6 Logstash cluster setup

Power on the machines, complete initialization and network configuration, then set the hostname:

```
hostnamectl set-hostname logstash01
```

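Because the Logstash configuration has not been modified yet, the service is not expected to start successfully at this point.

Run the install script (included in the uploaded resources) on both machines at the same time:

```
bash install_logstash.sh
root@logstash01:~# apt update
```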

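Common options:

- `-e`: pass the pipeline configuration directly as a string
- `-f`: specify the configuration file
- `-t`: check the configuration syntax and exit
- `-r`: reload the configuration automatically when the file changes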

Drawback of Logstash: it runs on the JVM, so starting and restarting are slow; `-r` addresses exactly this pain point.

1.61 Edit the main Logstash configuration file

```
root@logstash01:~# grep -v "#" /etc/logstash/logstash.yml
```

```yaml
node.name: logstash01
pipeline.workers: 2
pipeline.batch.size: 1000    # events per batch written out; tune according to ES performance
pipeline.batch.delay: 5      # batching delay in ms; tune according to ES performance
```
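A rough way to reason about these settings: each worker takes up to `pipeline.batch.size` events at a time, so up to workers × batch size events (2 × 1000 = 2000 here) can be in flight in the pipeline. `pipeline.batch.delay` is the number of milliseconds Logstash waits for further events before dispatching an undersized batch. The Python sketch below is a simplified model of that behaviour, not Logstash's implementation; `inflight` and `batches` are hypothetical names.

```python
def inflight(workers: int, batch_size: int) -> int:
    """Upper bound on events held by the pipeline at once (workers x batch size)."""
    return workers * batch_size


def batches(arrivals_ms: list, batch_size: int, delay_ms: int) -> list:
    """Simplified batching model; returns the length of each dispatched batch.

    A batch is dispatched when it reaches batch_size, or when the next event
    arrives more than delay_ms after the previous one (the wait timed out).
    """
    out, current, last = [], 0, None
    for t in arrivals_ms:
        if current and t - last > delay_ms:
            out.append(current)   # waited too long: send the undersized batch
            current = 0
        current += 1
        last = t
        if current == batch_size:
            out.append(current)   # batch is full
            current = 0
    if current:
        out.append(current)
    return out
```

With the values above, `inflight(2, 1000)` is 2000. The JVM heap must be able to hold that many events, which is worth keeping in mind before raising either setting.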

1.62 Logstash stdin input plugin

https://www.elastic.co/guide/en/logstash/7.15/input-plugins.html

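Write a conf file:

```
root@logstash01:~# vim /etc/logstash/conf.d/stdin_to_stdout.conf
```

```
input {
  stdin {
    type => "stdin_type"    # custom event type, usable in later conditionals
    tags => "stdin_tag"     # custom event tag, usable in later conditionals
    codec => "json"         # parse each input line as JSON
  }
}

output {
  stdout {
    codec => "rubydebug"    # output format; this is the default and may be omitted
  }
}
```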

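Syntax check (`-t` exits after the check; run the same command without `-t` to start the pipeline with automatic reload):

```
logstash -f stdin_to_stdout.conf -r -t
```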
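The event this pipeline prints can be approximated as follows. A line that is a valid JSON object becomes the event's fields; otherwise the json codec keeps the raw text in `message` and adds the `_jsonparsefailure` tag. The input then adds the configured `type` and `tags`. The Python sketch below models only those parts (Logstash also adds `@timestamp`, `@version`, and `host`, omitted here). `decode_stdin_line` is a hypothetical name, and treating non-object JSON as a failure is a simplification of this sketch.

```python
import json


def decode_stdin_line(line: str, type_: str = "stdin_type", tags: tuple = ("stdin_tag",)) -> dict:
    """Rough model of the stdin input above: json codec plus type/tags decoration."""
    try:
        fields = json.loads(line)
        if not isinstance(fields, dict):
            # simplification: only JSON objects are treated as valid here
            raise ValueError("not a JSON object")
        event = dict(fields)
        event_tags = list(tags)
    except ValueError:  # json.JSONDecodeError is a subclass of ValueError
        event = {"message": line}
        event_tags = ["_jsonparsefailure", *tags]
    event["type"] = type_
    event["tags"] = event_tags
    return event
```

Typing `{"name": "tom", "age": 18}` should produce an event with `name` and `age` fields, while typing plain `hello` should produce a `message` field and the `_jsonparsefailure` tag.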

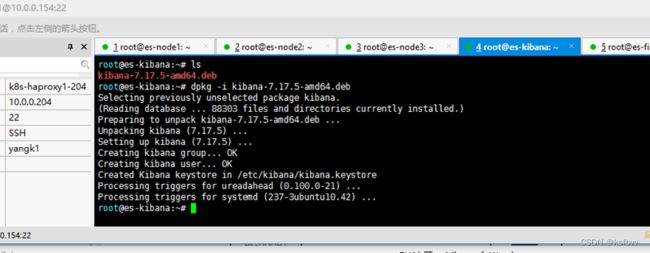

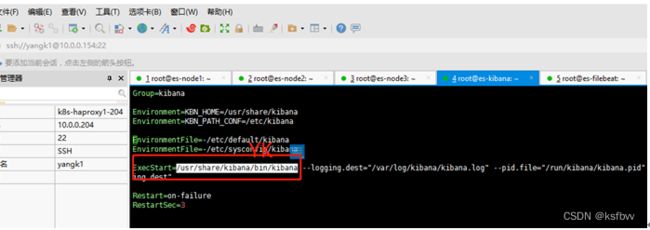

1.7 Install Kibana

Official download page: https://www.elastic.co/cn/downloads/past-releases#kibana

```
root@es-kibana:~# dpkg -i kibana-7.17.5-amd64.deb
```

Listening port: 5601

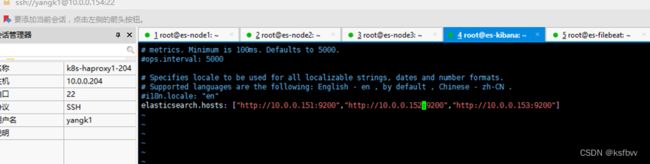

1.71 Edit the configuration file

These settings belong in kibana.yml, not in the systemd unit file:

```
vim /etc/kibana/kibana.yml
```

```yaml
elasticsearch.hosts: ["http://x.x.x.151:9200","http://x.x.x.152:9200","http://x.x.x.153:9200"]
server.port: 5601
server.host: "0.0.0.0"
i18n.locale: "zh-CN"
```

```
systemctl start kibana.service && systemctl enable kibana.service
```

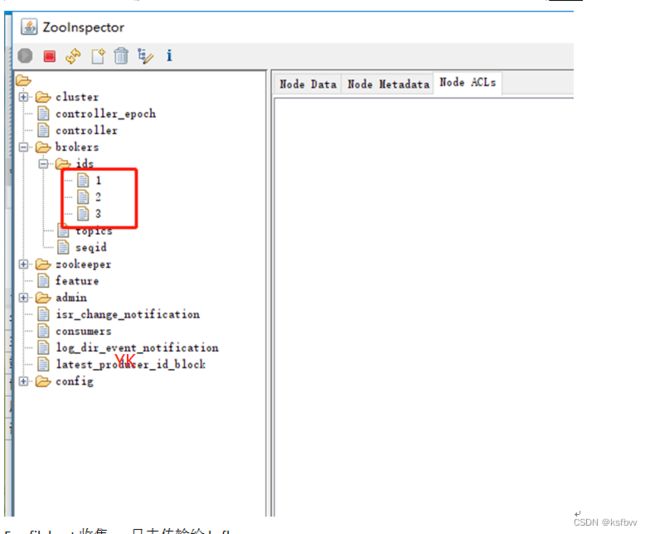

1.8 Create the Kafka cluster

(Omitted; see the earlier message-queue article: zookeeper + kafka.)

Final result:

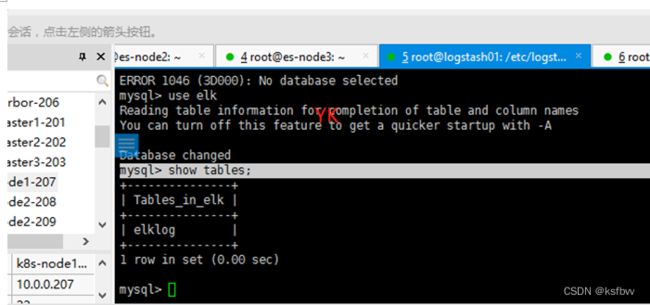

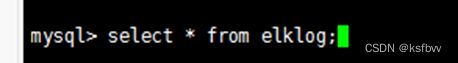

1.9 Install MySQL, create the database and table, and grant privileges

```
root@mysql_ELK:~# apt -y install mysql-server
vim /etc/mysql/mysql.conf.d/mysqld.cnf
```

```
bind-address = 0.0.0.0
```

Restart MySQL so the new bind-address takes effect (`systemctl restart mysql`), then create the database, user, and table:

```
root@mysql_ELK:~# mysql
mysql> create database elk character set utf8 collate utf8_bin;
mysql> create user elk@"10.0.0.%" identified by '123456';
mysql> grant all on elk.* to elk@"10.0.0.%";
mysql> flush privileges;

-- create the table; its columns match the fields to be stored
mysql> use elk
mysql> create table elklog (clientip varchar(39), responsetime float(10,3), uri varchar(256), status char(3), time timestamp default current_timestamp);
mysql> desc elklog;
```

Verify from the logstash node:

```
root@logstash01:/etc/logstash/conf.d# mysql -uelk -p123456 -h10.0.0.207 -e 'show databases'
root@logstash01:/etc/logstash/conf.d# mysql -uelk -p123456 -h10.0.0.207
mysql> use elk
mysql> show tables;
```

2. Collect nginx, tomcat, and app logs

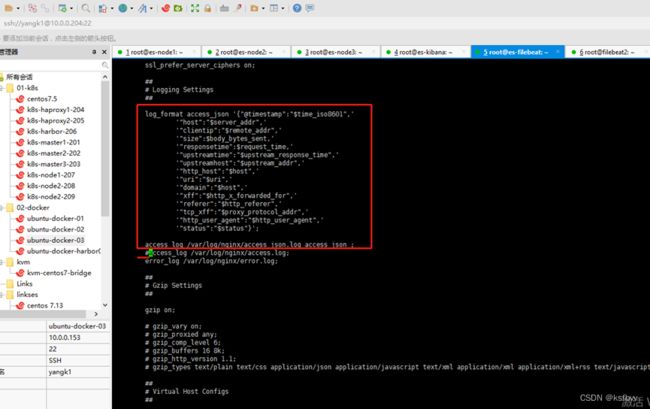

2.1 nginx configuration file

Add the following to the `http {}` block of the nginx configuration (e.g. /etc/nginx/nginx.conf):

```
log_format access_json '{"@timestamp":"$time_iso8601",'
    '"host":"$server_addr",'
    '"clientip":"$remote_addr",'
    '"size":$body_bytes_sent,'
    '"responsetime":$request_time,'
    '"upstreamtime":"$upstream_response_time",'
    '"upstreamhost":"$upstream_addr",'
    '"http_host":"$host",'
    '"uri":"$uri",'
    '"domain":"$host",'
    '"xff":"$http_x_forwarded_for",'
    '"referer":"$http_referer",'
    '"tcp_xff":"$proxy_protocol_addr",'
    '"http_user_agent":"$http_user_agent",'
    '"status":"$status"}';

access_log /var/log/nginx/access_json.log access_json;
#access_log /var/log/nginx/access.log;
error_log /var/log/nginx/error.log;
```

Once this is configured, restart nginx and import the access log (included in the uploaded resources).
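One caveat with building JSON by string substitution: nginx writes variable values verbatim by default. A user agent or URI that contains a double quote therefore produces a line that is no longer valid JSON, and filebeat's JSON decoding will fail on it. The `log_format` parameter `escape=json` (nginx 1.11.8 and later) escapes such characters. The Python sketch below reproduces the problem with a hypothetical user-agent string. `TEMPLATE` mirrors only part of the format above, and `json.dumps` is used as an approximation of nginx's escaping.

```python
import json

# Part of the access_json format: numeric fields unquoted, string fields quoted
TEMPLATE = '{{"clientip":"{ip}","responsetime":{rt},"http_user_agent":"{ua}","status":"{status}"}}'


def render(ip: str, rt: str, ua: str, status: str, escape_json: bool) -> str:
    """Substitute values into the template, optionally escaping the user agent."""
    if escape_json:
        # approximates escape=json: quotes and backslashes get a backslash
        ua = json.dumps(ua, ensure_ascii=False)[1:-1]
    return TEMPLATE.format(ip=ip, rt=rt, ua=ua, status=status)


def is_valid_json(line: str) -> bool:
    try:
        json.loads(line)
        return True
    except ValueError:
        return False
```

The sketch also shows why `size` and `responsetime` are left unquoted in the format: they decode as numbers (for example `0.005` becomes a float), so Elasticsearch can aggregate on them directly.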

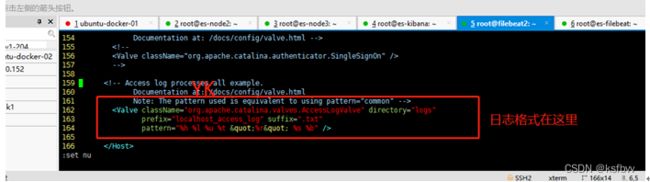

2.2 Tomcat: the configuration file that sets the access-log format

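```
vim /etc/tomcat9/server.xml
```

Inside the XML attribute, the quotes around `%r` must be written as `&quot;`:

```xml
<Valve className="org.apache.catalina.valves.AccessLogValve" directory="logs"
       prefix="localhost_access_log" suffix=".txt"
       pattern="%h %l %u %t &quot;%r&quot; %s %b" />
```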
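Unlike the nginx JSON format, this Tomcat pattern produces plain text (the Common Log Format), so the fields must be parsed before they can be used, for example with a grok filter in Logstash. The regex below is a hypothetical Python stand-in, not part of the original setup. It shows which fields the pattern yields: `%h` host, `%l` identity, `%u` user, `%t` time, `%r` request line, `%s` status, and `%b` body bytes, where `%b` is `-` when no body was sent.

```python
import re

# One named group per token of the Valve pattern %h %l %u %t "%r" %s %b
ACCESS_RE = re.compile(
    r'(?P<clientip>\S+) (?P<ident>\S+) (?P<user>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\d+|-)'
)


def parse_tomcat_line(line: str):
    """Return a dict of fields, or None if the line does not match the pattern."""
    m = ACCESS_RE.match(line)
    if m is None:
        return None
    rec = m.groupdict()
    # %b prints "-" instead of 0 when no body bytes were sent
    rec["size"] = 0 if rec["size"] == "-" else int(rec["size"])
    return rec
```

For example, `203.0.113.7 - - [03/Jun/2022:10:00:00 +0800] "GET /index.jsp HTTP/1.1" 200 11230` (a made-up line) parses into `clientip`, `request`, `status`, and an integer `size`.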

Restart Tomcat once this is configured.

2.3 filebeat configuration

```
root@filebeat2:/etc/filebeat# cp filebeat.yml filebeat.yml.bak
vim filebeat.yml
```

```yaml
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access_json.log
  json.keys_under_root: true
  json.overwrite_keys: true
  tags: ["nginx-access"]
- type: log
  enabled: true
  paths:
    - /var/log/nginx/error.log
  tags: ["nginx-error"]
- type: log
  enabled: true
  paths:
    - /var/log/syslog
  tags: ["syslog"]

output.kafka:
  hosts: ["x.x.x.206:9092", "x.x.x.208:9092", "x.x.x.209:9092"]
  topic: filebeat-log        # Kafka topic to publish to
  partition.round_robin:
    reachable_only: true     # true: publish only to reachable partitions; false: use all partitions, which blocks if a node is down
  required_acks: 1           # 0: no ack, messages may be lost on error; 1: wait for the leader partition (default); -1: wait for the replicas
  compression: gzip
  max_message_bytes: 1000000 # maximum size of one message in bytes; larger events are dropped
```
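The effect of `reachable_only` can be pictured with a toy model. With `true`, events rotate only over partitions whose leader is currently reachable. With `false`, events rotate over all partitions, and those routed to an unreachable partition wait, which is the blocking behaviour described in the comment above. The Python sketch below is a simplified illustration, not filebeat's code; `round_robin` is a hypothetical name.

```python
from itertools import cycle


def round_robin(events: list, partitions: list, reachable: set, reachable_only: bool) -> dict:
    """Toy model of partition.round_robin.

    Returns {partition: [events]} plus a "blocked" list holding events that
    were routed to an unreachable partition (only possible when reachable_only=False).
    """
    targets = [p for p in partitions if p in reachable] if reachable_only else list(partitions)
    result = {p: [] for p in partitions}
    result["blocked"] = []
    if not targets:
        result["blocked"] = list(events)
        return result
    for event, p in zip(events, cycle(targets)):
        (result[p] if p in reachable else result["blocked"]).append(event)
    return result
```

For example, with partitions `[0, 1, 2]` and partition 1 unreachable, `reachable_only=True` spreads four events over partitions 0 and 2 only, while `reachable_only=False` leaves every third event stuck.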

After restarting filebeat, messages showed up in Kafka right away.

2.4 logstash configuration

```
root@logstash01:/etc/logstash/conf.d# vim kafka-to-es.conf
```

```
input {
  kafka {
    bootstrap_servers => "x.x.x.206:9092,x.x.x.208:9092,x.x.x.209:9092"
    topics => "filebeat-log"
    codec => "json"
    #group_id => "logstash"         # consumer group name
    #consumer_threads => 3          # recommended: equal to the number of Kafka partitions
    #topics_pattern => "nginx-.*"   # match topics by regex instead of the fixed topics value above
  }
}

output {
  #stdout {}                        # for debugging
  if "nginx-access" in [tags] {
    elasticsearch {
      hosts => ["x.x.x.151:9200"]
      index => "logstash-kafka-nginx-accesslog-%{+YYYY.MM.dd}"
    }
  }
  if "nginx-error" in [tags] {
    elasticsearch {
      hosts => ["x.x.x.151:9200"]
      index => "logstash-kafka-nginx-errorlog-%{+YYYY.MM.dd}"
    }
  }
  if "syslog" in [tags] {
    elasticsearch {
      hosts => ["x.x.x.151:9200"]
      index => "logstash-kafka-syslog-%{+YYYY.MM.dd}"
    }
  }
}
```

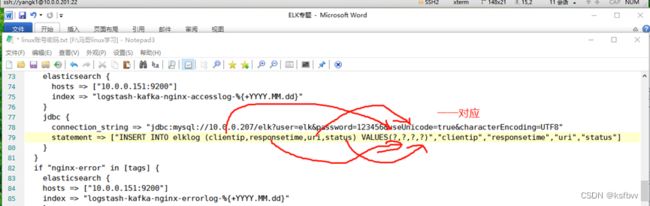
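How the output section routes events: the three `if "<tag>" in [tags]` blocks are independent (not `else if`), so an event carrying several tags is written to several indices. The `%{+YYYY.MM.dd}` suffix is rendered from the event's `@timestamp` in UTC, so on a UTC+8 host a new daily index starts at 08:00 local time. The Python sketch below mirrors that routing; `ROUTES` and `index_names` are hypothetical names.

```python
from datetime import datetime, timezone

# Tag -> index prefix, as in the output section of kafka-to-es.conf
ROUTES = {
    "nginx-access": "logstash-kafka-nginx-accesslog",
    "nginx-error": "logstash-kafka-nginx-errorlog",
    "syslog": "logstash-kafka-syslog",
}


def index_names(tags: list, timestamp: datetime) -> list:
    """Indices an event is written to; every matching branch fires."""
    # %{+YYYY.MM.dd} formats @timestamp in UTC
    day = timestamp.astimezone(timezone.utc).strftime("%Y.%m.%d")
    return [f"{prefix}-{day}" for tag, prefix in ROUTES.items() if tag in tags]
```

For example, an nginx access event logged at 07:30 on 2022-06-03 in UTC+8 is 23:30 on 2022-06-02 in UTC, so it lands in `logstash-kafka-nginx-accesslog-2022.06.02`.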

2.4.1 Writing to MySQL from logstash

```
input {
  kafka {
    bootstrap_servers => "10.0.0.206:9092,10.0.0.208:9092,10.0.0.209:9092"
    topics => "filebeat-log"
    codec => "json"
    #group_id => "logstash"         # consumer group name
    #consumer_threads => 3          # recommended: equal to the number of Kafka partitions
    #topics_pattern => "nginx-.*"   # match topics by regex instead of the fixed topics value above
  }
}

filter {
  if "nginx-access" in [tags] {
    geoip {
      source => "clientip"          # the log must be JSON and contain a clientip key
      target => "geoip"
      database => "/etc/logstash/conf.d/GeoLite2-City.mmdb"            # path to the GeoIP database file
      add_field => [ "[geoip][coordinates]", "%{[geoip][longitude]}" ]  # longitude
      add_field => [ "[geoip][coordinates]", "%{[geoip][latitude]}" ]   # latitude
    }
    mutate {
      convert => [ "[geoip][coordinates]", "float" ]
    }
  }
}

output {
  #stdout {}                        # for debugging
  if "nginx-access" in [tags] {
    elasticsearch {
      hosts => ["10.0.0.151:9200"]
      index => "logstash-kafka-nginx-accesslog-%{+YYYY.MM.dd}"
    }
    jdbc {
      connection_string => "jdbc:mysql://10.0.0.207/elk?user=elk&password=123456&useUnicode=true&characterEncoding=UTF8"
      statement => ["INSERT INTO elklog (clientip,responsetime,uri,status) VALUES(?,?,?,?)","clientip","responsetime","uri","status"]
    }
  }
  if "nginx-error" in [tags] {
    elasticsearch {
      hosts => ["10.0.0.151:9200"]
      index => "logstash-kafka-nginx-errorlog-%{+YYYY.MM.dd}"
    }
  }
  if "syslog" in [tags] {
    elasticsearch {
      hosts => ["10.0.0.151:9200"]
      index => "logstash-kafka-syslog-%{+YYYY.MM.dd}"
    }
  }
}
```

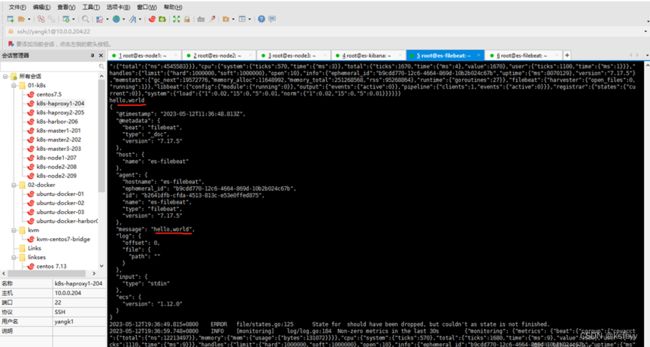
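The `statement` array binds event fields to the four `?` placeholders in order, so each nginx access event becomes one `elklog` row; the `time` column is filled by its DEFAULT. The column sizes matter. `clientip varchar(39)` fits a full-length IPv6 address (8 groups × 4 hex digits + 7 colons = 39), `uri` is capped at 256, and `status` at 3. Under MySQL's default strict SQL mode, a value that is too long makes the INSERT fail. The Python sketch below models the mapping and the length check; `COLUMNS`, `LIMITS`, `to_row`, and `fits` are hypothetical names, and mapping missing fields to `None` is this sketch's own assumption.

```python
# Event fields bound to the four "?" placeholders, in statement order
COLUMNS = ["clientip", "responsetime", "uri", "status"]

# Character limits taken from the elklog table definition
LIMITS = {"clientip": 39, "uri": 256, "status": 3}


def to_row(event: dict) -> tuple:
    """Values for INSERT INTO elklog (clientip,responsetime,uri,status) VALUES(?,?,?,?)."""
    return tuple(event.get(col) for col in COLUMNS)


def fits(row: tuple) -> bool:
    """True when every string column fits its declared width."""
    values = dict(zip(COLUMNS, row))
    return all(values[c] is None or len(str(values[c])) <= n for c, n in LIMITS.items())
```

Fields that are not listed in the statement (such as `size` or `geoip`) are simply not written to MySQL; they still go to Elasticsearch through the `elasticsearch` output.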

Result: the collected logs are converted directly to JSON.

2.5 filebeat ships nginx logs to Kafka; logstash reads them from Kafka and writes them to elasticsearch

```
root@logstash01:/etc/logstash/conf.d# vim kafka-to-es.conf
```

```
input {
  kafka {
    bootstrap_servers => "x.x.x.206:9092,x.x.x.208:9092,x.x.x.209:9092"
    topics => "filebeat-log"
    codec => "json"
    #group_id => "logstash"         # consumer group name
    #consumer_threads => 3          # recommended: equal to the number of Kafka partitions
    #topics_pattern => "nginx-.*"   # match topics by regex instead of the fixed topics value above
  }
}

output {
  #stdout {}                        # for debugging
  if "nginx-access" in [tags] {
    elasticsearch {
      hosts => ["10.0.0.151:9200"]
      index => "logstash-kafka-nginx-accesslog-%{+YYYY.MM.dd}"
    }
  }
  if "nginx-error" in [tags] {
    elasticsearch {
      hosts => ["10.0.0.151:9200"]
      index => "logstash-kafka-nginx-errorlog-%{+YYYY.MM.dd}"
    }
  }
  if "syslog" in [tags] {
    elasticsearch {
      hosts => ["10.0.0.151:9200"]
      index => "logstash-kafka-syslog-%{+YYYY.MM.dd}"
    }
  }
}
```

2.6 filebeat ships nginx logs to Kafka; modify the logstash config to also store them in MySQL

2.61 Install the jdbc output plugin so logstash can connect to MySQL (omitted)

```
root@filebeat2:/etc/filebeat# cat /root/mall_app.log > /var/log/nginx/access_json.log
```

Running the pipeline, however, fails with:

```
:message=>"Could not execute action: PipelineAction::Create, action_result: false", :backtrace=>nil}
```

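Fix: install MySQL Connector/J and copy its jar into Logstash's vendor/jar/jdbc directory, which is where the jdbc output plugin loads JDBC drivers from:

```
root@logstash01:~# dpkg -i mysql-connector-j_8.0.33-1ubuntu18.04_all.deb
dpkg -L mysql-connector-j        # list the package files to locate the jar
mkdir -p /usr/share/logstash/vendor/jar/jdbc
cp /usr/share/java/mysql-connector-j-8.0.33.jar /usr/share/logstash/vendor/jar/jdbc/
chown -R logstash:logstash /usr/share/logstash/vendor/jar/
ls -lh /usr/share/logstash/vendor/jar/jdbc/
```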

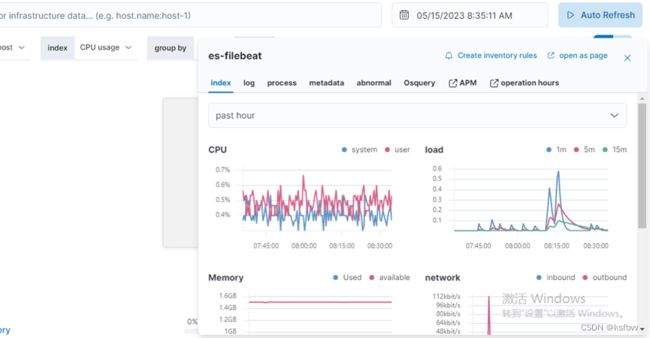

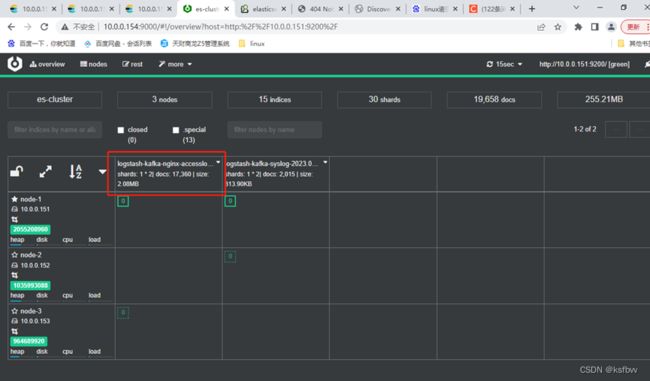

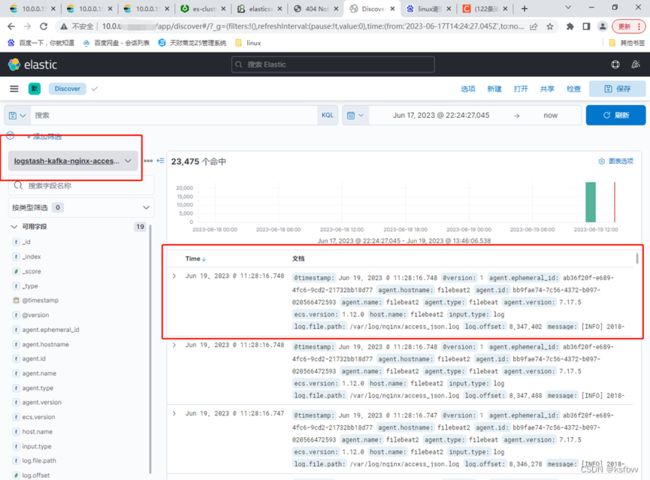

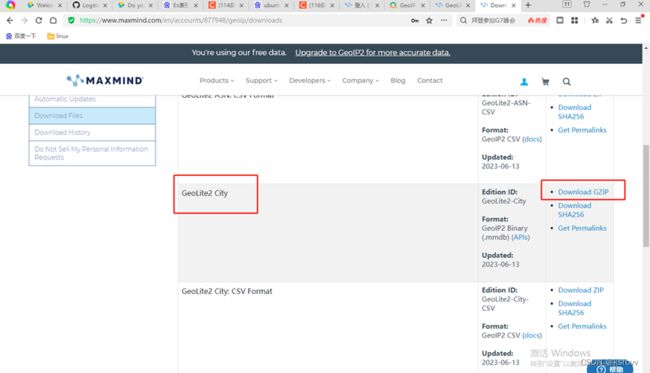

3. Kibana log visualization: show users' locations on a map based on their real IP addresses

Logstash uses the geoip filter plugin to show on a map where client IPs come from.

GeoIP database provider (MaxMind): https://www.maxmind.com/

3.1 Downloading the database requires registration (the file is included in the uploaded resources)

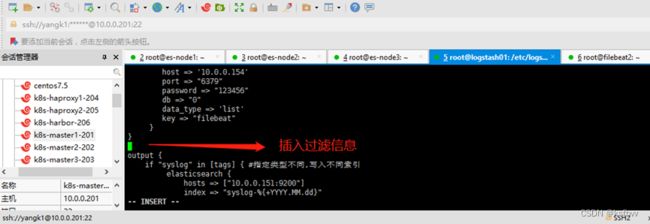

3.2 Modify the logstash configuration file:

```
root@logstash01:/etc/logstash/conf.d# mv redis_to_es.conf redis_geoip_to_es.conf
```

```
filter {
  if "nginx-access" in [tags] {
    geoip {
      source => "clientip"
      target => "geoip"
    }
  }
}
```

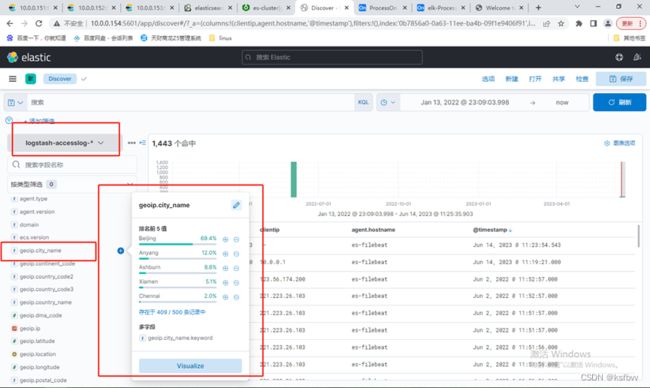
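A note on the coordinate order used in the 2.4.1 configuration, where `[geoip][coordinates]` gets the longitude first and the latitude second. That order matters because Elasticsearch reads a geo_point given as an array as `[lon, lat]` (GeoJSON order), the reverse of the `"lat,lon"` string form. Swapping them silently moves points elsewhere on the map, or pushes them out of range. A minimal Python sketch of the ordering with a range check; `coordinates` is a hypothetical helper.

```python
def coordinates(latitude: float, longitude: float) -> list:
    """Build [lon, lat], the array order Elasticsearch expects for a geo_point."""
    if not -90.0 <= latitude <= 90.0:
        raise ValueError(f"latitude out of range: {latitude}")
    if not -180.0 <= longitude <= 180.0:
        raise ValueError(f"longitude out of range: {longitude}")
    # longitude first, latitude second, same as the two add_field lines
    return [float(longitude), float(latitude)]
```

For a client located at latitude 31.23, longitude 121.47, the array is `[121.47, 31.23]`. Passing the values the other way round fails the latitude check, because 121.47 is outside -90..90.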

3.3 Import the access log on the nginx side

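Import the first 100 lines (use `-n` with `p` to print only those lines; `-i` would edit the source file in place and leave the target empty):

```
sed -n '1,100p' access_json.log-20220603 > /var/log/nginx/access_json.log
```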

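Note: the map feature requires index names that start with logstash. The index template that Logstash installs by default matches logstash-* and maps the geoip location field as geo_point, which the map visualization needs.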

Kibana receives the parsed city information.
