Jetson NX + yolov5 v5.0 + TensorRT加速+调用usb摄像头

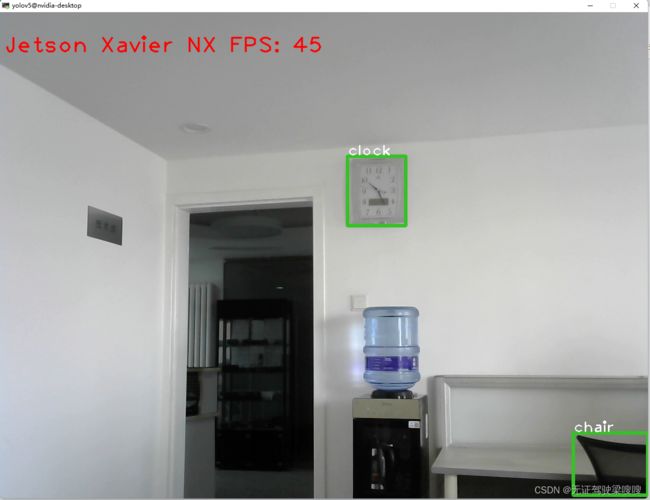

上一篇笔记记录了如何使用yolov5使用usb摄像头使用权重进行测试,测试效果如下

本篇文章具体操作步骤如下就可以了,切记版本要对应 ,我产生这个错误的原因就是版本问题,成功转换但是还是卡顿,估计是硬件usb问题,加速以后帧率得到了明显提升

git clone -b v5.0 https://github.com/ultralytics/yolov5.git

git clone -b yolov5-v5.0 https://github.com/wang-xinyu/tensorrtx.git cd yolov5/

nvidia@nvidia-desktop:~/yolov5$ ls

best.wts data detect.py Dockerfile gen_wts.py hubconf.py LICENSE models __pycache__ README.md requirements.txt runs test.py train.py tutorial.ipynb utils wandb weights

nvidia@nvidia-desktop:~/yolov5$ git clone -b yolov5-v5.0 https://github.com/wang-xinyu/tensorrtx.git

Cloning into 'tensorrtx'...

remote: Enumerating objects: 2238, done.

remote: Counting objects: 100% (5/5), done.

remote: Compressing objects: 100% (5/5), done.

remote: Total 2238 (delta 0), reused 1 (delta 0), pack-reused 2233

Receiving objects: 100% (2238/2238), 1.85 MiB | 313.00 KiB/s, done.

Resolving deltas: 100% (1431/1431), done.

Note: checking out '791c8a4484ac08ab8808c347f5b900bdf49e15c1'.

You are in 'detached HEAD' state. You can look around, make experimental

changes and commit them, and you can discard any commits you make in this

state without impacting any branches by performing another checkout.

If you want to create a new branch to retain commits you create, you may

do so (now or later) by using -b with the checkout command again. Example:

git checkout -b

nvidia@nvidia-desktop:~/yolov5$ ls

best.wts data detect.py Dockerfile gen_wts.py hubconf.py LICENSE models __pycache__ README.md requirements.txt runs tensorrtx test.py train.py tutorial.ipynb utils wandb weights

nvidia@nvidia-desktop:~/yolov5$ cd tensorrtx/

nvidia@nvidia-desktop:~/yolov5/tensorrtx$ ls

alexnet crnn detr googlenet inception lprnet psenet refinedet retinaface senet tsm unet yolov3-spp yolov5

arcface dbnet Dockerfile hrnet lenet mnasnet rcnn repvgg retinafaceAntiCov shufflenetv2 tutorials vgg yolov3-tiny

centernet densenet efficientnet ibnnet LICENSE mobilenet README.md resnet scaled-yolov4 squeezenet ufld yolov3 yolov4

nvidia@nvidia-desktop:~/yolov5/tensorrtx$ cd yolov5/

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5$ ls

calibrator.cpp calibrator.h CMakeLists.txt common.hpp cuda_utils.h gen_wts.py logging.h macros.h README.md samples utils.h yololayer.cu yololayer.h yolov5.cpp yolov5_trt.py

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5$ cp gen_wts.py ../../

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5$ ls

calibrator.cpp calibrator.h CMakeLists.txt common.hpp cuda_utils.h gen_wts.py logging.h macros.h README.md samples utils.h yololayer.cu yololayer.h yolov5.cpp yolov5_trt.py

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5$ cd ../..

nvidia@nvidia-desktop:~/yolov5$ ls

best.wts data detect.py Dockerfile gen_wts.py hubconf.py LICENSE models __pycache__ README.md requirements.txt runs tensorrtx test.py train.py tutorial.ipynb utils wandb weights

nvidia@nvidia-desktop:~/yolov5$

nvidia@nvidia-desktop:~/yolov5$ python3 gen_wts.py -w weights/yolov5s.pt -o yolov5s.wts

Matplotlib created a temporary config/cache directory at /tmp/matplotlib-ato3ywnd because the default path (/home/nvidia/.cache/matplotlib) is not a writable directory; it is highly recommended to set the MPLCONFIGDIR environment variable to a writable directory, in particular to speed up the import of Matplotlib and to better support multiprocessing.

nvidia@nvidia-desktop:~/yolov5$ ls

best.wts detect.py gen_wts.py LICENSE __pycache__ requirements.txt tensorrtx train.py utils weights

data Dockerfile hubconf.py models README.md runs test.py tutorial.ipynb wandb yolov5s.wts

nvidia@nvidia-desktop:~/yolov5$ ll

total 478892

drwxrwxr-x 12 nvidia nvidia 4096 12月 29 13:16 ./

drwxr-xr-x 46 nvidia nvidia 4096 12月 29 13:10 ../

-rw-rw-r-- 1 nvidia nvidia 424088219 12月 29 09:42 best.wts

drwxrwxr-x 4 nvidia nvidia 4096 12月 29 09:51 data/

-rw-rw-r-- 1 nvidia nvidia 8816 12月 26 17:12 detect.py

-rw-rw-r-- 1 nvidia nvidia 1809 12月 21 13:16 Dockerfile

-rw-rw-r-- 1 nvidia nvidia 3610 12月 21 13:16 .dockerignore

-rw-rw-r-- 1 nvidia nvidia 1358 12月 29 13:16 gen_wts.py

drwxrwxr-x 8 nvidia nvidia 4096 12月 28 13:01 .git/

-rw-rw-r-- 1 nvidia nvidia 75 12月 21 13:16 .gitattributes

drwxrwxr-x 4 nvidia nvidia 4096 12月 21 13:16 .github/

-rwxrwxr-x 1 nvidia nvidia 3976 12月 21 13:16 .gitignore*

-rw-rw-r-- 1 nvidia nvidia 5510 12月 21 13:16 hubconf.py

-rw-rw-r-- 1 nvidia nvidia 35126 12月 21 13:16 LICENSE

drwxrwxr-x 4 nvidia nvidia 4096 12月 21 14:16 models/

drwxrwxr-x 2 nvidia nvidia 4096 12月 21 14:16 __pycache__/

-rwxrwxr-x 1 nvidia nvidia 11191 12月 21 13:16 README.md*

-rwxrwxr-x 1 nvidia nvidia 599 12月 21 13:16 requirements.txt*

drwxr-xr-x 4 nvidia nvidia 4096 12月 22 16:39 runs/

drwxrwxr-x 41 nvidia nvidia 4096 12月 29 13:10 tensorrtx/

-rw-rw-r-- 1 nvidia nvidia 16976 12月 21 13:16 test.py

-rw-rw-r-- 1 nvidia nvidia 33779 12月 21 13:16 train.py

-rw-rw-r-- 1 nvidia nvidia 394029 12月 21 13:16 tutorial.ipynb

drwxrwxr-x 6 nvidia nvidia 4096 12月 27 13:29 utils/

drwxrwxr-x 15 nvidia nvidia 4096 12月 22 15:52 wandb/

drwxrwxr-x 2 nvidia nvidia 4096 12月 28 13:05 weights/

-rw-rw-r-- 1 nvidia nvidia 65675318 12月 29 13:17 yolov5s.wts

nvidia@nvidia-desktop:~/yolov5$ cp yolov5s.wts te

tensorrtx/ test.py

nvidia@nvidia-desktop:~/yolov5$ cp yolov5s.wts te

tensorrtx/ test.py

nvidia@nvidia-desktop:~/yolov5$ cp yolov5s.wts tensorrtx/

alexnet/ densenet/ .github/ inception/ mobilenet/ repvgg/ senet/ ufld/ yolov3-tiny/

arcface/ detr/ .gitignore lenet/ psenet/ resnet/ shufflenetv2/ unet/ yolov4/

centernet/ Dockerfile googlenet/ LICENSE rcnn/ retinaface/ squeezenet/ vgg/ yolov5/

crnn/ efficientnet/ hrnet/ lprnet/ README.md retinafaceAntiCov/ tsm/ yolov3/

dbnet/ .git/ ibnnet/ mnasnet/ refinedet/ scaled-yolov4/ tutorials/ yolov3-spp/

nvidia@nvidia-desktop:~/yolov5$ cp yolov5s.wts tensorrtx/yolov5/

nvidia@nvidia-desktop:~/yolov5$ ls

best.wts detect.py gen_wts.py LICENSE __pycache__ requirements.txt tensorrtx train.py utils weights

data Dockerfile hubconf.py models README.md runs test.py tutorial.ipynb wandb yolov5s.wts

nvidia@nvidia-desktop:~/yolov5$ mkdir build

nvidia@nvidia-desktop:~/yolov5$ cd tensorrtx/yolov5/

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5$ ls

calibrator.cpp calibrator.h CMakeLists.txt common.hpp cuda_utils.h gen_wts.py logging.h macros.h README.md samples utils.h yololayer.cu yololayer.h yolov5.cpp yolov5s.wts yolov5_trt.py

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5$ mkdir build

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5$ cd build/

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$ ls

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$ cp ../

build/ calibrator.h common.hpp gen_wts.py macros.h samples/ yololayer.cu yolov5.cpp yolov5_trt.py

calibrator.cpp CMakeLists.txt cuda_utils.h logging.h README.md utils.h yololayer.h yolov5s.wts

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$ cp ../yolov5s.wts .

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$ cmake ..

CMake Deprecation Warning at CMakeLists.txt:1 (cmake_minimum_required):

Compatibility with CMake < 2.8.12 will be removed from a future version of

CMake.

Update the VERSION argument value or use a ... suffix to tell

CMake that the project does not need compatibility with older versions.

-- The C compiler identification is GNU 7.5.0

-- The CXX compiler identification is GNU 7.5.0

-- Detecting C compiler ABI info

-- Detecting C compiler ABI info - done

-- Check for working C compiler: /usr/bin/cc - skipped

-- Detecting C compile features

-- Detecting C compile features - done

-- Detecting CXX compiler ABI info

-- Detecting CXX compiler ABI info - done

-- Check for working CXX compiler: /usr/bin/c++ - skipped

-- Detecting CXX compile features

-- Detecting CXX compile features - done

-- Found CUDA: /usr/local/cuda-10.2 (found version "10.2")

-- Found OpenCV: /usr (found version "4.1.1")

-- Configuring done

-- Generating done

-- Build files have been written to: /home/nvidia/yolov5/tensorrtx/yolov5/build

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$ make -j6

[ 20%] Building NVCC (Device) object CMakeFiles/myplugins.dir/myplugins_generated_yololayer.cu.o

/home/nvidia/yolov5/tensorrtx/yolov5/yololayer.h(86): warning: function "nvinfer1::IPluginV2Ext::configurePlugin(const nvinfer1::Dims *, int, const nvinfer1::Dims *, int, const nvinfer1::DataType *, const nvinfer1::DataType *, const __nv_bool *, const __nv_bool *, nvinfer1::PluginFormat, int)" is hidden by "nvinfer1::YoloLayerPlugin::configurePlugin" -- virtual function override intended?

/home/nvidia/yolov5/tensorrtx/yolov5/yololayer.h(86): warning: function "nvinfer1::IPluginV2Ext::configurePlugin(const nvinfer1::Dims *, int, const nvinfer1::Dims *, int, const nvinfer1::DataType *, const nvinfer1::DataType *, const bool *, const bool *, nvinfer1::PluginFormat, int)" is hidden by "nvinfer1::YoloLayerPlugin::configurePlugin" -- virtual function override intended?

[ 40%] Linking CXX shared library libmyplugins.so

[ 40%] Built target myplugins

[ 80%] Building CXX object CMakeFiles/yolov5.dir/yolov5.cpp.o

[ 80%] Building CXX object CMakeFiles/yolov5.dir/calibrator.cpp.o

[100%] Linking CXX executable yolov5

[100%] Built target yolov5

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$ ls

CMakeCache.txt CMakeFiles cmake_install.cmake libmyplugins.so Makefile yolov5 yolov5s.wts

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$ sudo ./yolov5 -s yolov5s.wts yolov5s.engine s

[sudo] password for nvidia:

Loading weights: yolov5s.wts

Building engine, please wait for a while...

Build engine successfully!

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$ ls

CMakeCache.txt CMakeFiles cmake_install.cmake libmyplugins.so Makefile yolov5 yolov5s.engine yolov5s.wts

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$

nvidia@nvidia-desktop:~/yolov5$ python3 train.py --img 640 --batch 8 --epochs 300 --data data/coco128.yaml --cfg models/yolov5s.yaml --weights weights/yolov5s.pt --device '0'

Matplotlib created a temporary config/cache directory at /tmp/matplotlib-hm4x9a5_ because the default path (/home/nvidia/.cache/matplotlib) is not a writable directory; it is highly recommended to set the MPLCONFIGDIR environment variable to a writable directory, in particular to speed up the import of Matplotlib and to better support multiprocessing.

github: skipping check (offline)

YOLOv5 v5.0-0-gf5b8f7d5 torch 1.9.0 CUDA:0 (Xavier, 31927.26953125MB)

Namespace(adam=False, artifact_alias='latest', batch_size=8, bbox_interval=-1, bucket='', cache_images=False, cfg='models/yolov5s.yaml', data='data/coco128.yaml', device='0', entity=None, epochs=300, evolve=False, exist_ok=False, global_rank=-1, hyp='data/hyp.scratch.yaml', image_weights=False, img_size=[640, 640], label_smoothing=0.0, linear_lr=False, local_rank=-1, multi_scale=False, name='exp', noautoanchor=False, nosave=False, notest=False, project='runs/train', quad=False, rect=False, resume=False, save_dir='runs/train/exp17', save_period=-1, single_cls=False, sync_bn=False, total_batch_size=8, upload_dataset=False, weights='weights/yolov5s.pt', workers=8, world_size=1)

tensorboard: Start with 'tensorboard --logdir runs/train', view at http://localhost:6006/

2022-12-29 14:30:11.401327: I tensorflow/stream_executor/platform/default/dso_loader.cc:48] Successfully opened dynamic library libcudart.so.10.2

hyperparameters: lr0=0.01, lrf=0.2, momentum=0.937, weight_decay=0.0005, warmup_epochs=3.0, warmup_momentum=0.8, warmup_bias_lr=0.1, box=0.05, cls=0.5, cls_pw=1.0, obj=1.0, obj_pw=1.0, iou_t=0.2, anchor_t=4.0, fl_gamma=0.0, hsv_h=0.015, hsv_s=0.7, hsv_v=0.4, degrees=0.0, translate=0.1, scale=0.5, shear=0.0, perspective=0.0, flipud=0.0, fliplr=0.5, mosaic=1.0, mixup=0.0

wandb: Currently logged in as: junxing. Use `wandb login --relogin` to force relogin

wandb: Tracking run with wandb version 0.13.7

wandb: Run data is saved locally in /home/nvidia/yolov5/wandb/run-20221229_143028-3d4ixpx4

wandb: Run `wandb offline` to turn off syncing.

wandb: Syncing run exp17

wandb: ⭐️ View project at https://wandb.ai/junxing/YOLOv5

wandb: View run at https://wandb.ai/junxing/YOLOv5/runs/3d4ixpx4

from n params module arguments

0 -1 1 3520 models.common.Focus [3, 32, 3]

1 -1 1 18560 models.common.Conv [32, 64, 3, 2]

2 -1 1 18816 models.common.C3 [64, 64, 1]

3 -1 1 73984 models.common.Conv [64, 128, 3, 2]

4 -1 1 156928 models.common.C3 [128, 128, 3]

5 -1 1 295424 models.common.Conv [128, 256, 3, 2]

6 -1 1 625152 models.common.C3 [256, 256, 3]

7 -1 1 1180672 models.common.Conv [256, 512, 3, 2]

8 -1 1 656896 models.common.SPP [512, 512, [5, 9, 13]]

9 -1 1 1182720 models.common.C3 [512, 512, 1, False]

10 -1 1 131584 models.common.Conv [512, 256, 1, 1]

11 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

12 [-1, 6] 1 0 models.common.Concat [1]

13 -1 1 361984 models.common.C3 [512, 256, 1, False]

14 -1 1 33024 models.common.Conv [256, 128, 1, 1]

15 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

16 [-1, 4] 1 0 models.common.Concat [1]

17 -1 1 90880 models.common.C3 [256, 128, 1, False]

18 -1 1 147712 models.common.Conv [128, 128, 3, 2]

19 [-1, 14] 1 0 models.common.Concat [1]

20 -1 1 296448 models.common.C3 [256, 256, 1, False]

21 -1 1 590336 models.common.Conv [256, 256, 3, 2]

22 [-1, 10] 1 0 models.common.Concat [1]

23 -1 1 1182720 models.common.C3 [512, 512, 1, False]

24 [17, 20, 23] 1 229245 models.yolo.Detect [80, [[10, 13, 16, 30, 33, 23], [30, 61, 62, 45, 59, 119], [116, 90, 156, 198, 373, 326]], [128, 256, 512]]

/usr/local/lib/python3.6/dist-packages/torch/nn/functional.py:718: UserWarning: Named tensors and all their associated APIs are an experimental feature and subject to change. Please do not use them for anything important until they are released as stable. (Triggered internally at /media/nvidia/NVME/pytorch/pytorch-v1.9.0/c10/core/TensorImpl.h:1156.)

return torch.max_pool2d(input, kernel_size, stride, padding, dilation, ceil_mode)

Model Summary: 283 layers, 7276605 parameters, 7276605 gradients, 17.2 GFLOPS

Transferred 360/362 items from weights/yolov5s.pt

Scaled weight_decay = 0.0005

Optimizer groups: 62 .bias, 62 conv.weight, 59 other

train: Scanning '../coco128/labels/train2017.cache' images and labels... 126 found, 2 missing, 0 empty, 0 corrupted: 100%|█████████████████████████████████████████████████████████| 128/128 [00:00模型转换

接下来:使用TensorRT加速部署YOLOv5!!!!基本流程如下:

- 使用tensorrtx/yolov5中的

gen_wts.py文件,在yolov5-5.0中将yolov5.pt转换为yolov5.wts文件 - 在tensorrtx/yolov5中进行编译,生成可执行文件yolov5

- 使用yolov5可执行文件来生成

yolov5.engine文件,即TensorRT模型

nvidia@nvidia-desktop:~/yolov5$ python3 gen_wts.py -w runs/train/exp17/weights/best.pt -o best.wts

Matplotlib created a temporary config/cache directory at /tmp/matplotlib-ed9n7r3z because the default path (/home /nvidia/.cache/matplotlib) is not a writable directory; it is highly recommended to set the MPLCONFIGDIR environm ent variable to a writable directory, in particular to speed up the import of Matplotlib and to better support mu ltiprocessing.

nvidia@nvidia-desktop:~/yolov5$ ls

best.wts data Dockerfile hubconf.py models README.md runs test.py tutorial.ipynb wandb yolov5s.wts

build detect.py gen_wts.py LICENSE __pycache__ requirements.txt tensorrtx train.py utils weights

nvidia@nvidia-desktop:~/yolov5$ ll

total 128876

drwxrwxr-x 13 nvidia nvidia 4096 12月 29 13:18 ./

drwxr-xr-x 46 nvidia nvidia 4096 12月 29 13:10 ../

-rw-rw-r-- 1 nvidia nvidia 65675318 12月 29 16:18 best.wts

drwxrwxr-x 2 nvidia nvidia 4096 12月 29 13:18 build/

drwxrwxr-x 4 nvidia nvidia 4096 12月 29 09:51 data/

-rw-rw-r-- 1 nvidia nvidia 8816 12月 26 17:12 detect.py

-rw-rw-r-- 1 nvidia nvidia 1809 12月 21 13:16 Dockerfile

-rw-rw-r-- 1 nvidia nvidia 3610 12月 21 13:16 .dockerignore

-rw-rw-r-- 1 nvidia nvidia 1358 12月 29 13:16 gen_wts.py

drwxrwxr-x 8 nvidia nvidia 4096 12月 28 13:01 .git/

-rw-rw-r-- 1 nvidia nvidia 75 12月 21 13:16 .gitattributes

drwxrwxr-x 4 nvidia nvidia 4096 12月 21 13:16 .github/

-rwxrwxr-x 1 nvidia nvidia 3976 12月 21 13:16 .gitignore*

-rw-rw-r-- 1 nvidia nvidia 5510 12月 21 13:16 hubconf.py

-rw-rw-r-- 1 nvidia nvidia 35126 12月 21 13:16 LICENSE

drwxrwxr-x 4 nvidia nvidia 4096 12月 21 14:16 models/

drwxrwxr-x 2 nvidia nvidia 4096 12月 21 14:16 __pycache__/

-rwxrwxr-x 1 nvidia nvidia 11191 12月 21 13:16 README.md*

-rwxrwxr-x 1 nvidia nvidia 599 12月 21 13:16 requirements.txt*

drwxr-xr-x 4 nvidia nvidia 4096 12月 22 16:39 runs/

drwxrwxr-x 41 nvidia nvidia 4096 12月 29 13:10 tensorrtx/

-rw-rw-r-- 1 nvidia nvidia 16976 12月 21 13:16 test.py

-rw-rw-r-- 1 nvidia nvidia 33779 12月 21 13:16 train.py

-rw-rw-r-- 1 nvidia nvidia 394029 12月 21 13:16 tutorial.ipynb

drwxrwxr-x 6 nvidia nvidia 4096 12月 27 13:29 utils/

drwxrwxr-x 16 nvidia nvidia 4096 12月 29 14:30 wandb/

drwxrwxr-x 2 nvidia nvidia 4096 12月 28 13:05 weights/

-rw-rw-r-- 1 nvidia nvidia 65675318 12月 29 13:17 yolov5s.wts

nvidia@nvidia-desktop:~/yolov5$ ll

total 128876

drwxrwxr-x 13 nvidia nvidia 4096 12月 29 13:18 ./

drwxr-xr-x 46 nvidia nvidia 4096 12月 29 13:10 ../

-rw-rw-r-- 1 nvidia nvidia 65675318 12月 29 16:18 best.wts

drwxrwxr-x 2 nvidia nvidia 4096 12月 29 13:18 build/

drwxrwxr-x 4 nvidia nvidia 4096 12月 29 09:51 data/

-rw-rw-r-- 1 nvidia nvidia 8816 12月 26 17:12 detect.py

-rw-rw-r-- 1 nvidia nvidia 1809 12月 21 13:16 Dockerfile

-rw-rw-r-- 1 nvidia nvidia 3610 12月 21 13:16 .dockerignore

-rw-rw-r-- 1 nvidia nvidia 1358 12月 29 13:16 gen_wts.py

drwxrwxr-x 8 nvidia nvidia 4096 12月 28 13:01 .git/

-rw-rw-r-- 1 nvidia nvidia 75 12月 21 13:16 .gitattributes

drwxrwxr-x 4 nvidia nvidia 4096 12月 21 13:16 .github/

-rwxrwxr-x 1 nvidia nvidia 3976 12月 21 13:16 .gitignore*

-rw-rw-r-- 1 nvidia nvidia 5510 12月 21 13:16 hubconf.py

-rw-rw-r-- 1 nvidia nvidia 35126 12月 21 13:16 LICENSE

drwxrwxr-x 4 nvidia nvidia 4096 12月 21 14:16 models/

drwxrwxr-x 2 nvidia nvidia 4096 12月 21 14:16 __pycache__/

-rwxrwxr-x 1 nvidia nvidia 11191 12月 21 13:16 README.md*

-rwxrwxr-x 1 nvidia nvidia 599 12月 21 13:16 requirements.txt*

drwxr-xr-x 4 nvidia nvidia 4096 12月 22 16:39 runs/

drwxrwxr-x 41 nvidia nvidia 4096 12月 29 13:10 tensorrtx/

-rw-rw-r-- 1 nvidia nvidia 16976 12月 21 13:16 test.py

-rw-rw-r-- 1 nvidia nvidia 33779 12月 21 13:16 train.py

-rw-rw-r-- 1 nvidia nvidia 394029 12月 21 13:16 tutorial.ipynb

drwxrwxr-x 6 nvidia nvidia 4096 12月 27 13:29 utils/

drwxrwxr-x 16 nvidia nvidia 4096 12月 29 14:30 wandb/

drwxrwxr-x 2 nvidia nvidia 4096 12月 28 13:05 weights/

-rw-rw-r-- 1 nvidia nvidia 65675318 12月 29 13:17 yolov5s.wts

nvidia@nvidia-desktop:~/yolov5$ ll

total 128876

drwxrwxr-x 13 nvidia nvidia 4096 12月 29 13:18 ./

drwxr-xr-x 46 nvidia nvidia 4096 12月 29 13:10 ../

-rw-rw-r-- 1 nvidia nvidia 65675318 12月 29 16:18 best.wts

drwxrwxr-x 2 nvidia nvidia 4096 12月 29 13:18 build/

drwxrwxr-x 4 nvidia nvidia 4096 12月 29 09:51 data/

-rw-rw-r-- 1 nvidia nvidia 8816 12月 26 17:12 detect.py

-rw-rw-r-- 1 nvidia nvidia 1809 12月 21 13:16 Dockerfile

-rw-rw-r-- 1 nvidia nvidia 3610 12月 21 13:16 .dockerignore

-rw-rw-r-- 1 nvidia nvidia 1358 12月 29 13:16 gen_wts.py

drwxrwxr-x 8 nvidia nvidia 4096 12月 28 13:01 .git/

-rw-rw-r-- 1 nvidia nvidia 75 12月 21 13:16 .gitattributes

drwxrwxr-x 4 nvidia nvidia 4096 12月 21 13:16 .github/

-rwxrwxr-x 1 nvidia nvidia 3976 12月 21 13:16 .gitignore*

-rw-rw-r-- 1 nvidia nvidia 5510 12月 21 13:16 hubconf.py

-rw-rw-r-- 1 nvidia nvidia 35126 12月 21 13:16 LICENSE

drwxrwxr-x 4 nvidia nvidia 4096 12月 21 14:16 models/

drwxrwxr-x 2 nvidia nvidia 4096 12月 21 14:16 __pycache__/

-rwxrwxr-x 1 nvidia nvidia 11191 12月 21 13:16 README.md*

-rwxrwxr-x 1 nvidia nvidia 599 12月 21 13:16 requirements.txt*

drwxr-xr-x 4 nvidia nvidia 4096 12月 22 16:39 runs/

drwxrwxr-x 41 nvidia nvidia 4096 12月 29 13:10 tensorrtx/

-rw-rw-r-- 1 nvidia nvidia 16976 12月 21 13:16 test.py

-rw-rw-r-- 1 nvidia nvidia 33779 12月 21 13:16 train.py

-rw-rw-r-- 1 nvidia nvidia 394029 12月 21 13:16 tutorial.ipynb

drwxrwxr-x 6 nvidia nvidia 4096 12月 27 13:29 utils/

drwxrwxr-x 16 nvidia nvidia 4096 12月 29 14:30 wandb/

drwxrwxr-x 2 nvidia nvidia 4096 12月 28 13:05 weights/

-rw-rw-r-- 1 nvidia nvidia 65675318 12月 29 13:17 yolov5s.wts

nvidia@nvidia-desktop:~/yolov5$ ll

total 128876

drwxrwxr-x 13 nvidia nvidia 4096 12月 29 13:18 ./

drwxr-xr-x 46 nvidia nvidia 4096 12月 29 13:10 ../

-rw-rw-r-- 1 nvidia nvidia 65675318 12月 29 16:18 best.wts

drwxrwxr-x 2 nvidia nvidia 4096 12月 29 13:18 build/

drwxrwxr-x 4 nvidia nvidia 4096 12月 29 09:51 data/

-rw-rw-r-- 1 nvidia nvidia 8816 12月 26 17:12 detect.py

-rw-rw-r-- 1 nvidia nvidia 1809 12月 21 13:16 Dockerfile

-rw-rw-r-- 1 nvidia nvidia 3610 12月 21 13:16 .dockerignore

-rw-rw-r-- 1 nvidia nvidia 1358 12月 29 13:16 gen_wts.py

drwxrwxr-x 8 nvidia nvidia 4096 12月 28 13:01 .git/

-rw-rw-r-- 1 nvidia nvidia 75 12月 21 13:16 .gitattributes

drwxrwxr-x 4 nvidia nvidia 4096 12月 21 13:16 .github/

-rwxrwxr-x 1 nvidia nvidia 3976 12月 21 13:16 .gitignore*

-rw-rw-r-- 1 nvidia nvidia 5510 12月 21 13:16 hubconf.py

-rw-rw-r-- 1 nvidia nvidia 35126 12月 21 13:16 LICENSE

drwxrwxr-x 4 nvidia nvidia 4096 12月 21 14:16 models/

drwxrwxr-x 2 nvidia nvidia 4096 12月 21 14:16 __pycache__/

-rwxrwxr-x 1 nvidia nvidia 11191 12月 21 13:16 README.md*

-rwxrwxr-x 1 nvidia nvidia 599 12月 21 13:16 requirements.txt*

drwxr-xr-x 4 nvidia nvidia 4096 12月 22 16:39 runs/

drwxrwxr-x 41 nvidia nvidia 4096 12月 29 13:10 tensorrtx/

-rw-rw-r-- 1 nvidia nvidia 16976 12月 21 13:16 test.py

-rw-rw-r-- 1 nvidia nvidia 33779 12月 21 13:16 train.py

-rw-rw-r-- 1 nvidia nvidia 394029 12月 21 13:16 tutorial.ipynb

drwxrwxr-x 6 nvidia nvidia 4096 12月 27 13:29 utils/

drwxrwxr-x 16 nvidia nvidia 4096 12月 29 14:30 wandb/

drwxrwxr-x 2 nvidia nvidia 4096 12月 28 13:05 weights/

-rw-rw-r-- 1 nvidia nvidia 65675318 12月 29 13:17 yolov5s.wts

nvidia@nvidia-desktop:~/yolov5$ ll

total 128876

drwxrwxr-x 13 nvidia nvidia 4096 12月 29 13:18 ./

drwxr-xr-x 46 nvidia nvidia 4096 12月 29 13:10 ../

-rw-rw-r-- 1 nvidia nvidia 65675318 12月 29 16:18 best.wts

drwxrwxr-x 2 nvidia nvidia 4096 12月 29 13:18 build/

drwxrwxr-x 4 nvidia nvidia 4096 12月 29 09:51 data/

-rw-rw-r-- 1 nvidia nvidia 8816 12月 26 17:12 detect.py

-rw-rw-r-- 1 nvidia nvidia 1809 12月 21 13:16 Dockerfile

-rw-rw-r-- 1 nvidia nvidia 3610 12月 21 13:16 .dockerignore

-rw-rw-r-- 1 nvidia nvidia 1358 12月 29 13:16 gen_wts.py

drwxrwxr-x 8 nvidia nvidia 4096 12月 28 13:01 .git/

-rw-rw-r-- 1 nvidia nvidia 75 12月 21 13:16 .gitattributes

drwxrwxr-x 4 nvidia nvidia 4096 12月 21 13:16 .github/

-rwxrwxr-x 1 nvidia nvidia 3976 12月 21 13:16 .gitignore*

-rw-rw-r-- 1 nvidia nvidia 5510 12月 21 13:16 hubconf.py

-rw-rw-r-- 1 nvidia nvidia 35126 12月 21 13:16 LICENSE

drwxrwxr-x 4 nvidia nvidia 4096 12月 21 14:16 models/

drwxrwxr-x 2 nvidia nvidia 4096 12月 21 14:16 __pycache__/

-rwxrwxr-x 1 nvidia nvidia 11191 12月 21 13:16 README.md*

-rwxrwxr-x 1 nvidia nvidia 599 12月 21 13:16 requirements.txt*

drwxr-xr-x 4 nvidia nvidia 4096 12月 22 16:39 runs/

drwxrwxr-x 41 nvidia nvidia 4096 12月 29 13:10 tensorrtx/

-rw-rw-r-- 1 nvidia nvidia 16976 12月 21 13:16 test.py

-rw-rw-r-- 1 nvidia nvidia 33779 12月 21 13:16 train.py

-rw-rw-r-- 1 nvidia nvidia 394029 12月 21 13:16 tutorial.ipynb

drwxrwxr-x 6 nvidia nvidia 4096 12月 27 13:29 utils/

drwxrwxr-x 16 nvidia nvidia 4096 12月 29 14:30 wandb/

drwxrwxr-x 2 nvidia nvidia 4096 12月 28 13:05 weights/

-rw-rw-r-- 1 nvidia nvidia 65675318 12月 29 13:17 yolov5s.wts

nvidia@nvidia-desktop:~/yolov5$ ll

total 128876

drwxrwxr-x 13 nvidia nvidia 4096 12月 29 13:18 ./

drwxr-xr-x 46 nvidia nvidia 4096 12月 29 13:10 ../

-rw-rw-r-- 1 nvidia nvidia 65675318 12月 29 16:18 best.wts

drwxrwxr-x 2 nvidia nvidia 4096 12月 29 13:18 build/

drwxrwxr-x 4 nvidia nvidia 4096 12月 29 09:51 data/

-rw-rw-r-- 1 nvidia nvidia 8816 12月 26 17:12 detect.py

-rw-rw-r-- 1 nvidia nvidia 1809 12月 21 13:16 Dockerfile

-rw-rw-r-- 1 nvidia nvidia 3610 12月 21 13:16 .dockerignore

-rw-rw-r-- 1 nvidia nvidia 1358 12月 29 13:16 gen_wts.py

drwxrwxr-x 8 nvidia nvidia 4096 12月 28 13:01 .git/

-rw-rw-r-- 1 nvidia nvidia 75 12月 21 13:16 .gitattributes

drwxrwxr-x 4 nvidia nvidia 4096 12月 21 13:16 .github/

-rwxrwxr-x 1 nvidia nvidia 3976 12月 21 13:16 .gitignore*

-rw-rw-r-- 1 nvidia nvidia 5510 12月 21 13:16 hubconf.py

-rw-rw-r-- 1 nvidia nvidia 35126 12月 21 13:16 LICENSE

drwxrwxr-x 4 nvidia nvidia 4096 12月 21 14:16 models/

drwxrwxr-x 2 nvidia nvidia 4096 12月 21 14:16 __pycache__/

-rwxrwxr-x 1 nvidia nvidia 11191 12月 21 13:16 README.md*

-rwxrwxr-x 1 nvidia nvidia 599 12月 21 13:16 requirements.txt*

drwxr-xr-x 4 nvidia nvidia 4096 12月 22 16:39 runs/

drwxrwxr-x 41 nvidia nvidia 4096 12月 29 13:10 tensorrtx/

-rw-rw-r-- 1 nvidia nvidia 16976 12月 21 13:16 test.py

-rw-rw-r-- 1 nvidia nvidia 33779 12月 21 13:16 train.py

-rw-rw-r-- 1 nvidia nvidia 394029 12月 21 13:16 tutorial.ipynb

drwxrwxr-x 6 nvidia nvidia 4096 12月 27 13:29 utils/

drwxrwxr-x 16 nvidia nvidia 4096 12月 29 14:30 wandb/

drwxrwxr-x 2 nvidia nvidia 4096 12月 28 13:05 weights/

-rw-rw-r-- 1 nvidia nvidia 65675318 12月 29 13:17 yolov5s.wts

nvidia@nvidia-desktop:~/yolov5$ ll

total 128876

drwxrwxr-x 13 nvidia nvidia 4096 12月 29 13:18 ./

drwxr-xr-x 46 nvidia nvidia 4096 12月 29 13:10 ../

-rw-rw-r-- 1 nvidia nvidia 65675318 12月 29 16:18 best.wts

drwxrwxr-x 2 nvidia nvidia 4096 12月 29 13:18 build/

drwxrwxr-x 4 nvidia nvidia 4096 12月 29 09:51 data/

-rw-rw-r-- 1 nvidia nvidia 8816 12月 26 17:12 detect.py

-rw-rw-r-- 1 nvidia nvidia 1809 12月 21 13:16 Dockerfile

-rw-rw-r-- 1 nvidia nvidia 3610 12月 21 13:16 .dockerignore

-rw-rw-r-- 1 nvidia nvidia 1358 12月 29 13:16 gen_wts.py

drwxrwxr-x 8 nvidia nvidia 4096 12月 28 13:01 .git/

-rw-rw-r-- 1 nvidia nvidia 75 12月 21 13:16 .gitattributes

drwxrwxr-x 4 nvidia nvidia 4096 12月 21 13:16 .github/

-rwxrwxr-x 1 nvidia nvidia 3976 12月 21 13:16 .gitignore*

-rw-rw-r-- 1 nvidia nvidia 5510 12月 21 13:16 hubconf.py

-rw-rw-r-- 1 nvidia nvidia 35126 12月 21 13:16 LICENSE

drwxrwxr-x 4 nvidia nvidia 4096 12月 21 14:16 models/

drwxrwxr-x 2 nvidia nvidia 4096 12月 21 14:16 __pycache__/

-rwxrwxr-x 1 nvidia nvidia 11191 12月 21 13:16 README.md*

-rwxrwxr-x 1 nvidia nvidia 599 12月 21 13:16 requirements.txt*

drwxr-xr-x 4 nvidia nvidia 4096 12月 22 16:39 runs/

drwxrwxr-x 41 nvidia nvidia 4096 12月 29 13:10 tensorrtx/

-rw-rw-r-- 1 nvidia nvidia 16976 12月 21 13:16 test.py

-rw-rw-r-- 1 nvidia nvidia 33779 12月 21 13:16 train.py

-rw-rw-r-- 1 nvidia nvidia 394029 12月 21 13:16 tutorial.ipynb

drwxrwxr-x 6 nvidia nvidia 4096 12月 27 13:29 utils/

drwxrwxr-x 16 nvidia nvidia 4096 12月 29 14:30 wandb/

drwxrwxr-x 2 nvidia nvidia 4096 12月 28 13:05 weights/

-rw-rw-r-- 1 nvidia nvidia 65675318 12月 29 13:17 yolov5s.wts

nvidia@nvidia-desktop:~/yolov5$ cd tensorrtx/yolov5/

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5$ ls

build calibrator.h common.hpp gen_wts.py macros.h samples yololayer.cu yolov5.cpp yolov5_trt.py

calibrator.cpp CMakeLists.txt cuda_utils.h logging.h README.md utils.h yololayer.h yolov5s.wts

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5$ cd build/

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$ ls

best.wts _bus.jpg CMakeCache.txt CMakeFiles cmake_install.cmake libmyplugins.so Makefile yolov5 yolov5s.engine yolov5s.wts _zidane.jpg

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$ rm best.wts

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$ cp ../../

alexnet/ densenet/ .github/ inception/ mobilenet/ repvgg/ senet/ ufld/ yolov3-tiny/

arcface/ detr/ .gitignore lenet/ psenet/ resnet/ shufflenetv2/ unet/ yolov4/

centernet/ Dockerfile googlenet/ LICENSE rcnn/ retinaface/ squeezenet/ vgg/ yolov5/

crnn/ efficientnet/ hrnet/ lprnet/ README.md retinafaceAntiCov/ tsm/ yolov3/

dbnet/ .git/ ibnnet/ mnasnet/ refinedet/ scaled-yolov4/ tutorials/ yolov3-spp/

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$ cp ../../../

best.wts detect.py gen_wts.py .github/ LICENSE README.md tensorrtx/ tutorial.ipynb weights/

build/ Dockerfile .git/ .gitignore models/ requirements.txt test.py utils/ yolov5s.wts

data/ .dockerignore .gitattributes hubconf.py __pycache__/ runs/ train.py wandb/

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$ cp ../../../

best.wts detect.py gen_wts.py .github/ LICENSE README.md tensorrtx/ tutorial.ipynb weights/

build/ Dockerfile .git/ .gitignore models/ requirements.txt test.py utils/ yolov5s.wts

data/ .dockerignore .gitattributes hubconf.py __pycache__/ runs/ train.py wandb/

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$ cp ../../../best.wts .

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$ sudo ./yolov5 -s best.wts best.engine s

[sudo] password for nvidia:

Loading weights: best.wts

Building engine, please wait for a while...

Build engine successfully!

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$

还有, 如果我们要转换自己训练的模型,需要在编译前修改yololayer.h,这里没改用的开源的数据。

static constexpr int CLASS_NUM =80; // 数据集的类别数//修改成自己的类别数

static constexpr int INPUT_H =608;

static constexpr int INPUT_W =608;查看使用的版本是否对应

/yolov5$ git log

commit f5b8f7d54c9fa69210da0177fec7ac2d9e4a627c (HEAD, tag: v5.0)

Author: Glenn Jocher

Date: Sun Apr 11 19:23:47 2021 +0200

YOLOv5 v5.0 Release (#2762)

commit e2b7bc0b32ecf306fc179bb87bad82216a470b37

Author: Ben Milanko

Date: Mon Apr 12 02:53:40 2021 +1000

YouTube Livestream Detection (#2752)

* Youtube livestream detection

* dependancy update to auto install pafy

* Remove print

* include youtube_dl in deps

* PEP8 reformat

* youtube url check fix

* reduce lines

* add comment

* update check_requirements

* stream framerate fix

* Update README.md

* cleanup

* PEP8

* remove cap.retrieve() failure code

Co-authored-by: Glenn Jocher

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$ git log

commit 791c8a4484ac08ab8808c347f5b900bdf49e15c1 (HEAD, tag: yolov5-v5.0)

Author: wang-xinyu

Date: Wed Oct 13 12:15:10 2021 +0000

update yolov5 readme

commit c70a33882796b5bb37f1a1bf548a551e8403ea85

Author: JumpPandaer <[email protected]>

Date: Wed Oct 13 19:04:39 2021 +0800

fix a bug with maskrcnn (#758)

commit 7c1a145c346241df048c0525de11580b3c45c756

Author: liufqing <[email protected]>

Date: Thu Sep 30 15:18:04 2021 +0800

add resnet34 (#741)

* hello

* add resnet34

commit 9fefbed77f2f8166cacc9d4063102072f501e653

Author: Armassarion <33727511+Armassarion@users USB摄像头实时检测

要修改代码,我这里用的是大佬们写好的,直接复制张贴就能跑。

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$ cat ../yolov5.cpp

#include

#include

#include"cuda_utils.h"

#include"logging.h"

#include"common.hpp"

#include"utils.h"

#include"calibrator.h"

#define USE_FP16// set USE_INT8 or USE_FP16 or USE_FP32

#define DEVICE 0// GPU id

#define NMS_THRESH 0.4

#define CONF_THRESH 0.5

#define BATCH_SIZE 1// stuff we know about the network and the input/output blobs

static const int INPUT_H = Yolo::INPUT_H;

static const int INPUT_W = Yolo::INPUT_W;

static const int CLASS_NUM = Yolo::CLASS_NUM;

static const int OUTPUT_SIZE = Yolo::MAX_OUTPUT_BBOX_COUNT *sizeof(Yolo::Detection)/sizeof(float)+1;// we assume the yololayer outputs no more than MAX_OUTPUT_BBOX_COUNT boxes that conf >= 0.1

const char* INPUT_BLOB_NAME ="data";

const char* OUTPUT_BLOB_NAME ="prob";

static Logger gLogger;// 数据集所有类别名称

char* my_classes[]={"person","bicycle","car","motorcycle","airplane","bus","train","truck","boat","traffic light","fire hydrant","stop sign","parking meter","bench","bird","cat","dog","horse","sheep","cow","elephant","bear","zebra","giraffe","backpack","umbrella","handbag","tie","suitcase","frisbee","skis","snowboard","sports ball","kite","baseball bat","baseball glove","skateboard","surfboard","tennis racket","bottle","wine glass","cup","fork","knife","spoon","bowl","banana","apple","sandwich","orange","broccoli","carrot","hot dog","pizza","donut","cake","chair","couch","potted plant","bed","dining table","toilet","tv","laptop","mouse","remote","keyboard","cell phone","microwave","oven","toaster","sink","refrigerator","book","clock","vase","scissors","teddy bear","hair drier","toothbrush"};

static int get_width(int x,float gw,int divisor =8){//return math.ceil(x / divisor) * divisor

if(int(x * gw)% divisor ==0){return int(x * gw);}

return (int(x * gw / divisor)+1)* divisor;

}

static int get_depth(int x,float gd){

if(x ==1){return 1;}

else{

return round(x * gd)>1?round(x * gd):1;}

}

ICudaEngine*build_engine(unsigned int maxBatchSize, IBuilder* builder, IBuilderConfig* config, DataType dt,float& gd,float& gw, std::string& wts_name){

INetworkDefinition* network = builder->createNetworkV2(0U);

// Create input tensor of shape {3, INPUT_H, INPUT_W} with name INPUT_BLOB_NAME

ITensor* data = network->addInput(INPUT_BLOB_NAME, dt, Dims3{3, INPUT_H, INPUT_W });assert(data);

std::map weightMap =loadWeights(wts_name);

/* ------ yolov5 backbone------ */

auto focus0 =focus(network, weightMap,*data,3,get_width(64, gw),3,"model.0");

auto conv1 =convBlock(network, weightMap,*focus0->getOutput(0),get_width(128, gw),3,2,1,"model.1");

auto bottleneck_CSP2 =C3(network, weightMap,*conv1->getOutput(0),get_width(128, gw),get_width(128, gw),get_depth(3, gd),true,1,0.5,"model.2");

auto conv3 =convBlock(network, weightMap,*bottleneck_CSP2->getOutput(0),get_width(256, gw),3,2,1,"model.3");

auto bottleneck_csp4 =C3(network, weightMap,*conv3->getOutput(0),get_width(256, gw),get_width(256, gw),get_depth(9, gd),true,1,0.5,"model.4");

auto conv5 =convBlock(network, weightMap,*bottleneck_csp4->getOutput(0),get_width(512, gw),3,2,1,"model.5");

auto bottleneck_csp6 =C3(network, weightMap,*conv5->getOutput(0),get_width(512, gw),get_width(512, gw),get_depth(9, gd),true,1,0.5,"model.6");

auto conv7 =convBlock(network, weightMap,*bottleneck_csp6->getOutput(0),get_width(1024, gw),3,2,1,"model.7");

auto spp8 =SPP(network, weightMap,*conv7->getOutput(0),get_width(1024, gw),get_width(1024, gw),5,9,13,"model.8");

/* ------ yolov5 head ------ */

auto bottleneck_csp9 =C3(network, weightMap,*spp8->getOutput(0),get_width(1024, gw),get_width(1024, gw),get_depth(3, gd),false,1,0.5,"model.9");

auto conv10 =convBlock(network, weightMap,*bottleneck_csp9->getOutput(0),get_width(512, gw),1,1,1,"model.10");

auto upsample11 = network->addResize(*conv10->getOutput(0));assert(upsample11);

upsample11->setResizeMode(ResizeMode::kNEAREST);

upsample11->setOutputDimensions(bottleneck_csp6->getOutput(0)->getDimensions());

ITensor* inputTensors12[]={ upsample11->getOutput(0), bottleneck_csp6->getOutput(0)};

auto cat12 = network->addConcatenation(inputTensors12,2);

auto bottleneck_csp13 =C3(network, weightMap,*cat12->getOutput(0),get_width(1024, gw),get_width(512, gw),get_depth(3, gd),false,1,0.5,"model.13");

auto conv14 =convBlock(network, weightMap,*bottleneck_csp13->getOutput(0),get_width(256, gw),1,1,1,"model.14");

auto upsample15 = network->addResize(*conv14->getOutput(0));assert(upsample15);

upsample15->setResizeMode(ResizeMode::kNEAREST);

upsample15->setOutputDimensions(bottleneck_csp4->getOutput(0)->getDimensions());

ITensor* inputTensors16[]={ upsample15->getOutput(0), bottleneck_csp4->getOutput(0)};

auto cat16 = network->addConcatenation(inputTensors16,2);

auto bottleneck_csp17 =C3(network, weightMap,*cat16->getOutput(0),get_width(512, gw),get_width(256, gw),get_depth(3, gd),false,1,0.5,"model.17");// yolo layer 0

IConvolutionLayer* det0 = network->addConvolutionNd(*bottleneck_csp17->getOutput(0),3*(Yolo::CLASS_NUM +5), DimsHW{1,1}, weightMap["model.24.m.0.weight"], weightMap["model.24.m.0.bias"]);

auto conv18 =convBlock(network, weightMap,*bottleneck_csp17->getOutput(0),get_width(256, gw),3,2,1,"model.18");

ITensor* inputTensors19[]={ conv18->getOutput(0), conv14->getOutput(0)};

auto cat19 = network->addConcatenation(inputTensors19,2);

auto bottleneck_csp20 =C3(network, weightMap,*cat19->getOutput(0),get_width(512, gw),get_width(512, gw),get_depth(3, gd),false,1,0.5,"model.20");//yolo layer 1

IConvolutionLayer* det1 = network->addConvolutionNd(*bottleneck_csp20->getOutput(0),3*(Yolo::CLASS_NUM +5), DimsHW{1,1}, weightMap["model.24.m.1.weight"], weightMap["model.24.m.1.bias"]);

auto conv21 =convBlock(network, weightMap,*bottleneck_csp20->getOutput(0),get_width(512, gw),3,2,1,"model.21");

ITensor* inputTensors22[]={ conv21->getOutput(0), conv10->getOutput(0)};

auto cat22 = network->addConcatenation(inputTensors22,2);

auto bottleneck_csp23 =C3(network, weightMap,*cat22->getOutput(0),get_width(1024, gw),get_width(1024, gw),get_depth(3, gd),false,1,0.5,"model.23");

IConvolutionLayer* det2 = network->addConvolutionNd(*bottleneck_csp23->getOutput(0),3*(Yolo::CLASS_NUM +5), DimsHW{1,1}, weightMap["model.24.m.2.weight"], weightMap["model.24.m.2.bias"]);

auto yolo =addYoLoLayer(network, weightMap,"model.24", std::vector{det0, det1, det2});

yolo->getOutput(0)->setName(OUTPUT_BLOB_NAME);

network->markOutput(*yolo->getOutput(0));

// Build engine

builder->setMaxBatchSize(maxBatchSize);

config->setMaxWorkspaceSize(16*(1<<20));// 16MB

#if defined(USE_FP16)

config->setFlag(BuilderFlag::kFP16);

#elif defined(USE_INT8)

std::cout <<"Your platform support int8: "<<(builder->platformHasFastInt8()?"true":"false")<< std::endl;

assert(builder->platformHasFastInt8());

config->setFlag(BuilderFlag::kINT8);

Int8EntropyCalibrator2* calibrator =newInt8EntropyCalibrator2(1, INPUT_W, INPUT_H,"./coco_calib/","int8calib.table", INPUT_BLOB_NAME);

config->setInt8Calibrator(calibrator);

#endif

std::cout <<"Building engine, please wait for a while..."<< std::endl;

ICudaEngine* engine = builder->buildEngineWithConfig(*network,*config);

std::cout <<"Build engine successfully!"<< std::endl;

// Don't need the network any more

network->destroy();

// Release host memory

for(auto& mem : weightMap)

{

free((void*)(mem.second.values));

}

return engine;

}

ICudaEngine*build_engine_p6(unsigned int maxBatchSize, IBuilder* builder, IBuilderConfig* config, DataType dt,float& gd,float& gw, std::string& wts_name){

INetworkDefinition* network = builder->createNetworkV2(0U);

// Create input tensor of shape {3, INPUT_H, INPUT_W} with name INPUT_BLOB_NAME

ITensor* data = network->addInput(INPUT_BLOB_NAME, dt, Dims3{3, INPUT_H, INPUT_W });assert(data);

std::map weightMap =loadWeights(wts_name);

/* ------ yolov5 backbone------ */

auto focus0 =focus(network, weightMap,*data,3,get_width(64, gw),3,"model.0");

auto conv1 =convBlock(network, weightMap,*focus0->getOutput(0),get_width(128, gw),3,2,1,"model.1");

auto c3_2 =C3(network, weightMap,*conv1->getOutput(0),get_width(128, gw),get_width(128, gw),get_depth(3, gd),true,1,0.5,"model.2");

auto conv3 =convBlock(network, weightMap,*c3_2->getOutput(0),get_width(256, gw),3,2,1,"model.3");

auto c3_4 =C3(network, weightMap,*conv3->getOutput(0),get_width(256, gw),get_width(256, gw),get_depth(9, gd),true,1,0.5,"model.4");

auto conv5 =convBlock(network, weightMap,*c3_4->getOutput(0),get_width(512, gw),3,2,1,"model.5");

auto c3_6 =C3(network, weightMap,*conv5->getOutput(0),get_width(512, gw),get_width(512, gw),get_depth(9, gd),true,1,0.5,"model.6");

auto conv7 =convBlock(network, weightMap,*c3_6->getOutput(0),get_width(768, gw),3,2,1,"model.7");

auto c3_8 =C3(network, weightMap,*conv7->getOutput(0),get_width(768, gw),get_width(768, gw),get_depth(3, gd),true,1,0.5,"model.8");

auto conv9 =convBlock(network, weightMap,*c3_8->getOutput(0),get_width(1024, gw),3,2,1,"model.9");

auto spp10 =SPP(network, weightMap,*conv9->getOutput(0),get_width(1024, gw),get_width(1024, gw),3,5,7,"model.10");

auto c3_11 =C3(network, weightMap,*spp10->getOutput(0),get_width(1024, gw),get_width(1024, gw),get_depth(3, gd),false,1,0.5,"model.11");

/* ------ yolov5 head ------ */

auto conv12 =convBlock(network, weightMap,*c3_11->getOutput(0),get_width(768, gw),1,1,1,"model.12");

auto upsample13 = network->addResize(*conv12->getOutput(0));assert(upsample13);

upsample13->setResizeMode(ResizeMode::kNEAREST);

upsample13->setOutputDimensions(c3_8->getOutput(0)->getDimensions());

ITensor* inputTensors14[]={ upsample13->getOutput(0), c3_8->getOutput(0)};

auto cat14 = network->addConcatenation(inputTensors14,2);

auto c3_15 =C3(network, weightMap,*cat14->getOutput(0),get_width(1536, gw),get_width(768, gw),get_depth(3, gd),false,1,0.5,"model.15");

auto conv16 =convBlock(network, weightMap,*c3_15->getOutput(0),get_width(512, gw),1,1,1,"model.16");

auto upsample17 = network->addResize(*conv16->getOutput(0));assert(upsample17);

upsample17->setResizeMode(ResizeMode::kNEAREST);

upsample17->setOutputDimensions(c3_6->getOutput(0)->getDimensions());

ITensor* inputTensors18[]={ upsample17->getOutput(0), c3_6->getOutput(0)};

auto cat18 = network->addConcatenation(inputTensors18,2);

auto c3_19 =C3(network, weightMap,*cat18->getOutput(0),get_width(1024, gw),get_width(512, gw),get_depth(3, gd),false,1,0.5,"model.19");

auto conv20 =convBlock(network, weightMap,*c3_19->getOutput(0),get_width(256, gw),1,1,1,"model.20");

auto upsample21 = network->addResize(*conv20->getOutput(0));assert(upsample21);

upsample21->setResizeMode(ResizeMode::kNEAREST);

upsample21->setOutputDimensions(c3_4->getOutput(0)->getDimensions());

ITensor* inputTensors21[]={ upsample21->getOutput(0), c3_4->getOutput(0)};

auto cat22 = network->addConcatenation(inputTensors21,2);

auto c3_23 =C3(network, weightMap,*cat22->getOutput(0),get_width(512, gw),get_width(256, gw),get_depth(3, gd),false,1,0.5,"model.23");

auto conv24 =convBlock(network, weightMap,*c3_23->getOutput(0),get_width(256, gw),3,2,1,"model.24");

ITensor* inputTensors25[]={ conv24->getOutput(0), conv20->getOutput(0)};

auto cat25 = network->addConcatenation(inputTensors25,2);

auto c3_26 =C3(network, weightMap,*cat25->getOutput(0),get_width(1024, gw),get_width(512, gw),get_depth(3, gd),false,1,0.5,"model.26");

auto conv27 =convBlock(network, weightMap,*c3_26->getOutput(0),get_width(512, gw),3,2,1,"model.27");

ITensor* inputTensors28[]={ conv27->getOutput(0), conv16->getOutput(0)};

auto cat28 = network->addConcatenation(inputTensors28,2);

auto c3_29 =C3(network, weightMap,*cat28->getOutput(0),get_width(1536, gw),get_width(768, gw),get_depth(3, gd),false,1,0.5,"model.29");

auto conv30 =convBlock(network, weightMap,*c3_29->getOutput(0),get_width(768, gw),3,2,1,"model.30");

ITensor* inputTensors31[]={ conv30->getOutput(0), conv12->getOutput(0)};

auto cat31 = network->addConcatenation(inputTensors31,2);

auto c3_32 =C3(network, weightMap,*cat31->getOutput(0),get_width(2048, gw),get_width(1024, gw),get_depth(3, gd),false,1,0.5,"model.32");

/* ------ detect ------ */

IConvolutionLayer* det0 = network->addConvolutionNd(*c3_23->getOutput(0),3*(Yolo::CLASS_NUM +5), DimsHW{1,1}, weightMap["model.33.m.0.weight"], weightMap["model.33.m.0.bias"]);

IConvolutionLayer* det1 = network->addConvolutionNd(*c3_26->getOutput(0),3*(Yolo::CLASS_NUM +5), DimsHW{1,1}, weightMap["model.33.m.1.weight"], weightMap["model.33.m.1.bias"]);

IConvolutionLayer* det2 = network->addConvolutionNd(*c3_29->getOutput(0),3*(Yolo::CLASS_NUM +5), DimsHW{1,1}, weightMap["model.33.m.2.weight"], weightMap["model.33.m.2.bias"]);

IConvolutionLayer* det3 = network->addConvolutionNd(*c3_32->getOutput(0),3*(Yolo::CLASS_NUM +5), DimsHW{1,1}, weightMap["model.33.m.3.weight"], weightMap["model.33.m.3.bias"]);

auto yolo =addYoLoLayer(network, weightMap,"model.33", std::vector{det0, det1, det2, det3});

yolo->getOutput(0)->setName(OUTPUT_BLOB_NAME);

network->markOutput(*yolo->getOutput(0));

// Build engine

builder->setMaxBatchSize(maxBatchSize);

config->setMaxWorkspaceSize(16*(1<<20));// 16MB

#if defined(USE_FP16)

config->setFlag(BuilderFlag::kFP16);

#elif defined(USE_INT8)

std::cout <<"Your platform support int8: "<<(builder->platformHasFastInt8()?"true":"false")<< std::endl;assert(builder->platformHasFastInt8());

config->setFlag(BuilderFlag::kINT8);

Int8EntropyCalibrator2* calibrator =newInt8EntropyCalibrator2(1, INPUT_W, INPUT_H,"./coco_calib/","int8calib.table", INPUT_BLOB_NAME);

config->setInt8Calibrator(calibrator);

#endif

std::cout <<"Building engine, please wait for a while..."<< std::endl;

ICudaEngine* engine = builder->buildEngineWithConfig(*network,*config);

std::cout <<"Build engine successfully!"<< std::endl;

// Don't need the network any more

network->destroy();

// Release host memory

for(auto& mem : weightMap)

{

free((void*)(mem.second.values));

}

return engine;

}

void APIToModel(unsigned int maxBatchSize, IHostMemory** modelStream,float& gd,float& gw, std::string& wts_name){// Create builder

IBuilder* builder =createInferBuilder(gLogger);

IBuilderConfig* config = builder->createBuilderConfig();

// Create model to populate the network, then set the outputs and create an engine

ICudaEngine* engine =build_engine(maxBatchSize, builder, config, DataType::kFLOAT, gd, gw, wts_name);

assert(engine !=nullptr);// Serialize the engine(*modelStream)= engine->serialize();// Close everything down

engine->destroy();

builder->destroy();

config->destroy();}

void doInference(IExecutionContext& context, cudaStream_t& stream,void** buffers,float* input,float* output,int batchSize){

// DMA input batch data to device, infer on the batch asynchronously, and DMA output back to host

CUDA_CHECK(cudaMemcpyAsync(buffers[0], input, batchSize *3* INPUT_H * INPUT_W *sizeof(float), cudaMemcpyHostToDevice, stream));

context.enqueue(batchSize, buffers, stream,nullptr);

CUDA_CHECK(cudaMemcpyAsync(output, buffers[1], batchSize * OUTPUT_SIZE *sizeof(float), cudaMemcpyDeviceToHost, stream));

cudaStreamSynchronize(stream);}

bool parse_args(int argc,char** argv, std::string& engine){

if(argc <3)

return false;

if(std::string(argv[1])=="-v"&& argc ==3){

engine = std::string(argv[2]);

}else{return false;}

return true;}

int main(int argc,char** argv){

cudaSetDevice(DEVICE);//

std::string wts_name = "";

std::string engine_name = "";//float gd = 0.0f, gw = 0.0f;//

std::string img_dir;if(!parse_args(argc, argv, engine_name)){

std::cerr <<"arguments not right!"<< std::endl;

std::cerr <<"./yolov5 -v [.engine] // run inference with camera"<< std::endl;

return-1;}

std::ifstream file(engine_name, std::ios::binary);

if(!file.good()){

std::cerr <<" read "<< engine_name <<" error! "<< std::endl;return-1;}char* trtModelStream{nullptr};

size_t size =0;

file.seekg(0, file.end);

size = file.tellg();

file.seekg(0, file.beg);

trtModelStream =new char[size];assert(trtModelStream);

file.read(trtModelStream, size);

file.close();// prepare input data ---------------------------

static float data[BATCH_SIZE *3* INPUT_H * INPUT_W];//for (int i = 0; i < 3 * INPUT_H * INPUT_W; i++)// data[i] = 1.0;

static float prob[BATCH_SIZE * OUTPUT_SIZE];

IRuntime* runtime =createInferRuntime(gLogger);assert(runtime !=nullptr);

ICudaEngine* engine = runtime->deserializeCudaEngine(trtModelStream, size);assert(engine !=nullptr);

IExecutionContext* context = engine->createExecutionContext();assert(context !=nullptr);delete[] trtModelStream;assert(engine->getNbBindings()==2);

void* buffers[2];

// In order to bind the buffers, we need to know the names of the input and output tensors.

// Note that indices are guaranteed to be less than IEngine::getNbBindings()

const int inputIndex = engine->getBindingIndex(INPUT_BLOB_NAME);

const int outputIndex = engine->getBindingIndex(OUTPUT_BLOB_NAME);

assert(inputIndex ==0);

assert(outputIndex ==1);// Create GPU buffers on device

CUDA_CHECK(cudaMalloc(&buffers[inputIndex], BATCH_SIZE *3* INPUT_H * INPUT_W *sizeof(float)));

CUDA_CHECK(cudaMalloc(&buffers[outputIndex], BATCH_SIZE * OUTPUT_SIZE *sizeof(float)));// Create stream

cudaStream_t stream;CUDA_CHECK(cudaStreamCreate(&stream));// 调用摄像头编号

cv::VideoCapture capture(0);//cv::VideoCapture capture("../overpass.mp4");//int fourcc = cv::VideoWriter::fourcc('M','J','P','G');//capture.set(cv::CAP_PROP_FOURCC, fourcc);

if(!capture.isOpened()){

std::cout <<"Error opening video stream or file"<< std::endl;return-1;}

int key;

int fcount =0;

while(1){

cv::Mat frame;

capture >> frame;if(frame.empty()){

std::cout <<"Fail to read image from camera!"<< std::endl;break;}

fcount++;//if (fcount < BATCH_SIZE && f + 1 != (int)file_names.size()) continue;

for(int b =0; b < fcount; b++){//cv::Mat img = cv::imread(img_dir + "/" + file_names[f - fcount + 1 + b]);

cv::Mat img = frame;

if(img.empty())continue;

cv::Mat pr_img =preprocess_img(img, INPUT_W, INPUT_H);// letterbox BGR to RGB

int i =0;for(int row =0; row < INPUT_H;++row){

uchar* uc_pixel = pr_img.data + row * pr_img.step;for(int col =0; col < INPUT_W;++col){

data[b *3* INPUT_H * INPUT_W + i]=(float)uc_pixel[2]/255.0;

data[b *3* INPUT_H * INPUT_W + i + INPUT_H * INPUT_W]=(float)uc_pixel[1]/255.0;

data[b *3* INPUT_H * INPUT_W + i +2* INPUT_H * INPUT_W]=(float)uc_pixel[0]/255.0;

uc_pixel +=3;++i;}}}

// Run inference

auto start = std::chrono::system_clock::now();

doInference(*context, stream, buffers, data, prob, BATCH_SIZE);

auto end = std::chrono::system_clock::now();// std::cout << std::chrono::duration_cast(end - start).count() << "ms" << std::endl;

int fps =1000.0/ std::chrono::duration_cast(end - start).count();

std::cout <<"fps: "<< fps << std::endl;

std::vector>batch_res(fcount);

for(int b =0; b < fcount; b++){

auto& res = batch_res[b];

nms(res,&prob[b * OUTPUT_SIZE], CONF_THRESH, NMS_THRESH);

}

for(int b =0; b < fcount; b++){

auto& res = batch_res[b];//std::cout << res.size() << std::endl;//cv::Mat img = cv::imread(img_dir + "/" + file_names[f - fcount + 1 + b]);

for(size_t j =0; j < res.size(); j++){

cv::Rect r =get_rect(frame, res[j].bbox);

cv::rectangle(frame, r, cv::Scalar(0x27,0xC1,0x36),6);

std::string label = my_classes[(int)res[j].class_id];

cv::putText(frame, label, cv::Point(r.x, r.y -1), cv::FONT_HERSHEY_PLAIN,2, cv::Scalar(0xFF,0xFF,0xFF),2);

std::string jetson_fps ="Jetson Xavier NX FPS: "+ std::to_string(fps);

cv::putText(frame, jetson_fps, cv::Point(11,80), cv::FONT_HERSHEY_PLAIN,3, cv::Scalar(0,0,255),2, cv::LINE_AA);}//cv::imwrite("_" + file_names[f - fcount + 1 + b], img);}

cv::imshow("yolov5", frame);

key = cv::waitKey(1);if(key =='q'){break;}

fcount =0;}}

capture.release();// Release stream and bufferscudaStreamDestroy(stream);CUDA_CHECK(cudaFree(buffers[inputIndex]));CUDA_CHECK(cudaFree(buffers[outputIndex]));// Destroy the engine

context->destroy();

engine->destroy();

runtime->destroy();return 0;} make -j6

sudo ./yolov5 -v yolov5s.engine

sudo ./yolov5 -v best.engine还有就是在原来的基础上加内容,命令还是用旧的

./yolov5 -d yolov5s.engine ../samples

nvidia@nvidia-desktop:~/yolov5/tensorrtx/yolov5/build$ cat ../yolov5.cpp

#include

#include

#include

#include "cuda_utils.h"

#include "logging.h"

#include "common.hpp"

#include "utils.h"

#include "calibrator.h"

#define USE_FP16 // set USE_INT8 or USE_FP16 or USE_FP32

#define DEVICE 0 // GPU id

#define NMS_THRESH 0.4

#define CONF_THRESH 0.5

#define BATCH_SIZE 1

// stuff we know about the network and the input/output blobs

static const int INPUT_H = Yolo::INPUT_H;

static const int INPUT_W = Yolo::INPUT_W;

static const int CLASS_NUM = Yolo::CLASS_NUM;

static const int OUTPUT_SIZE = Yolo::MAX_OUTPUT_BBOX_COUNT * sizeof(Yolo::Detection) / sizeof(float) + 1; // we assume the yololayer outputs no more than MAX_OUTPUT_BBOX_COUNT boxes that conf >= 0.1

const char* INPUT_BLOB_NAME = "data";

const char* OUTPUT_BLOB_NAME = "prob";

static Logger gLogger;

char* my_classes[]={"person","bicycle","car","motorcycle","airplane","bus","train","truck","boat","traffic light","fire hydrant","stop sign","parking meter","bench","bird","cat","dog","horse","sheep","cow","elephant","bear","zebra","giraffe","backpack","umbrella","handbag","tie","suitcase","frisbee","skis","snowboard","sports ball","kite","baseball bat","baseball glove","skateboard","surfboard","tennis racket","bottle","wine glass","cup","fork","knife","spoon","bowl","banana","apple","sandwich","orange","broccoli","carrot","hot dog","pizza","donut","cake","chair","couch","potted plant","bed","dining table","toilet","tv","laptop","mouse","remote","keyboard","cell phone","microwave","oven","toaster","sink","refrigerator","book","clock","vase","scissors","teddy bear","hair drier","toothbrush"};

static int get_width(int x, float gw, int divisor = 8) {

return int(ceil((x * gw) / divisor)) * divisor;

}

static int get_depth(int x, float gd) {

if (x == 1) return 1;

int r = round(x * gd);

if (x * gd - int(x * gd) == 0.5 && (int(x * gd) % 2) == 0) {

--r;

}

return std::max(r, 1);

}

ICudaEngine* build_engine(unsigned int maxBatchSize, IBuilder* builder, IBuilderConfig* config, DataType dt, float& gd, float& gw, std::string& wts_name) {

INetworkDefinition* network = builder->createNetworkV2(0U);

// Create input tensor of shape {3, INPUT_H, INPUT_W} with name INPUT_BLOB_NAME

ITensor* data = network->addInput(INPUT_BLOB_NAME, dt, Dims3{ 3, INPUT_H, INPUT_W });

assert(data);

std::map weightMap = loadWeights(wts_name);

/* ------ yolov5 backbone------ */

auto focus0 = focus(network, weightMap, *data, 3, get_width(64, gw), 3, "model.0");

auto conv1 = convBlock(network, weightMap, *focus0->getOutput(0), get_width(128, gw), 3, 2, 1, "model.1");

auto bottleneck_CSP2 = C3(network, weightMap, *conv1->getOutput(0), get_width(128, gw), get_width(128, gw), get_depth(3, gd), true, 1, 0.5, "model.2");

auto conv3 = convBlock(network, weightMap, *bottleneck_CSP2->getOutput(0), get_width(256, gw), 3, 2, 1, "model.3");

auto bottleneck_csp4 = C3(network, weightMap, *conv3->getOutput(0), get_width(256, gw), get_width(256, gw), get_depth(9, gd), true, 1, 0.5, "model.4");

auto conv5 = convBlock(network, weightMap, *bottleneck_csp4->getOutput(0), get_width(512, gw), 3, 2, 1, "model.5");

auto bottleneck_csp6 = C3(network, weightMap, *conv5->getOutput(0), get_width(512, gw), get_width(512, gw), get_depth(9, gd), true, 1, 0.5, "model.6");

auto conv7 = convBlock(network, weightMap, *bottleneck_csp6->getOutput(0), get_width(1024, gw), 3, 2, 1, "model.7");

auto spp8 = SPP(network, weightMap, *conv7->getOutput(0), get_width(1024, gw), get_width(1024, gw), 5, 9, 13, "model.8");

/* ------ yolov5 head ------ */

auto bottleneck_csp9 = C3(network, weightMap, *spp8->getOutput(0), get_width(1024, gw), get_width(1024, gw), get_depth(3, gd), false, 1, 0.5, "model.9");

auto conv10 = convBlock(network, weightMap, *bottleneck_csp9->getOutput(0), get_width(512, gw), 1, 1, 1, "model.10");

auto upsample11 = network->addResize(*conv10->getOutput(0));

assert(upsample11);

upsample11->setResizeMode(ResizeMode::kNEAREST);

upsample11->setOutputDimensions(bottleneck_csp6->getOutput(0)->getDimensions());

ITensor* inputTensors12[] = { upsample11->getOutput(0), bottleneck_csp6->getOutput(0) };

auto cat12 = network->addConcatenation(inputTensors12, 2);

auto bottleneck_csp13 = C3(network, weightMap, *cat12->getOutput(0), get_width(1024, gw), get_width(512, gw), get_depth(3, gd), false, 1, 0.5, "model.13");

auto conv14 = convBlock(network, weightMap, *bottleneck_csp13->getOutput(0), get_width(256, gw), 1, 1, 1, "model.14");

auto upsample15 = network->addResize(*conv14->getOutput(0));

assert(upsample15);

upsample15->setResizeMode(ResizeMode::kNEAREST);

upsample15->setOutputDimensions(bottleneck_csp4->getOutput(0)->getDimensions());

ITensor* inputTensors16[] = { upsample15->getOutput(0), bottleneck_csp4->getOutput(0) };

auto cat16 = network->addConcatenation(inputTensors16, 2);

auto bottleneck_csp17 = C3(network, weightMap, *cat16->getOutput(0), get_width(512, gw), get_width(256, gw), get_depth(3, gd), false, 1, 0.5, "model.17");

/* ------ detect ------ */

IConvolutionLayer* det0 = network->addConvolutionNd(*bottleneck_csp17->getOutput(0), 3 * (Yolo::CLASS_NUM + 5), DimsHW{ 1, 1 }, weightMap["model.24.m.0.weight"], weightMap["model.24.m.0.bias"]);

auto conv18 = convBlock(network, weightMap, *bottleneck_csp17->getOutput(0), get_width(256, gw), 3, 2, 1, "model.18");

ITensor* inputTensors19[] = { conv18->getOutput(0), conv14->getOutput(0) };

auto cat19 = network->addConcatenation(inputTensors19, 2);

auto bottleneck_csp20 = C3(network, weightMap, *cat19->getOutput(0), get_width(512, gw), get_width(512, gw), get_depth(3, gd), false, 1, 0.5, "model.20");

IConvolutionLayer* det1 = network->addConvolutionNd(*bottleneck_csp20->getOutput(0), 3 * (Yolo::CLASS_NUM + 5), DimsHW{ 1, 1 }, weightMap["model.24.m.1.weight"], weightMap["model.24.m.1.bias"]);

auto conv21 = convBlock(network, weightMap, *bottleneck_csp20->getOutput(0), get_width(512, gw), 3, 2, 1, "model.21");

ITensor* inputTensors22[] = { conv21->getOutput(0), conv10->getOutput(0) };

auto cat22 = network->addConcatenation(inputTensors22, 2);

auto bottleneck_csp23 = C3(network, weightMap, *cat22->getOutput(0), get_width(1024, gw), get_width(1024, gw), get_depth(3, gd), false, 1, 0.5, "model.23");

IConvolutionLayer* det2 = network->addConvolutionNd(*bottleneck_csp23->getOutput(0), 3 * (Yolo::CLASS_NUM + 5), DimsHW{ 1, 1 }, weightMap["model.24.m.2.weight"], weightMap["model.24.m.2.bias"]);

auto yolo = addYoLoLayer(network, weightMap, "model.24", std::vector{det0, det1, det2});

yolo->getOutput(0)->setName(OUTPUT_BLOB_NAME);

network->markOutput(*yolo->getOutput(0));

// Build engine

builder->setMaxBatchSize(maxBatchSize);

config->setMaxWorkspaceSize(16 * (1 << 20)); // 16MB

#if defined(USE_FP16)

config->setFlag(BuilderFlag::kFP16);

#elif defined(USE_INT8)

std::cout << "Your platform support int8: " << (builder->platformHasFastInt8() ? "true" : "false") << std::endl;

assert(builder->platformHasFastInt8());

config->setFlag(BuilderFlag::kINT8);

Int8EntropyCalibrator2* calibrator = new Int8EntropyCalibrator2(1, INPUT_W, INPUT_H, "./coco_calib/", "int8calib.table", INPUT_BLOB_NAME);

config->setInt8Calibrator(calibrator);

#endif

std::cout << "Building engine, please wait for a while..." << std::endl;

ICudaEngine* engine = builder->buildEngineWithConfig(*network, *config);

std::cout << "Build engine successfully!" << std::endl;

// Don't need the network any more

network->destroy();

// Release host memory

for (auto& mem : weightMap)

{

free((void*)(mem.second.values));

}

return engine;

}

ICudaEngine* build_engine_p6(unsigned int maxBatchSize, IBuilder* builder, IBuilderConfig* config, DataType dt, float& gd, float& gw, std::string& wts_name) {

INetworkDefinition* network = builder->createNetworkV2(0U);

// Create input tensor of shape {3, INPUT_H, INPUT_W} with name INPUT_BLOB_NAME

ITensor* data = network->addInput(INPUT_BLOB_NAME, dt, Dims3{ 3, INPUT_H, INPUT_W });

assert(data);

std::map weightMap = loadWeights(wts_name);

/* ------ yolov5 backbone------ */

auto focus0 = focus(network, weightMap, *data, 3, get_width(64, gw), 3, "model.0");

auto conv1 = convBlock(network, weightMap, *focus0->getOutput(0), get_width(128, gw), 3, 2, 1, "model.1");

auto c3_2 = C3(network, weightMap, *conv1->getOutput(0), get_width(128, gw), get_width(128, gw), get_depth(3, gd), true, 1, 0.5, "model.2");

auto conv3 = convBlock(network, weightMap, *c3_2->getOutput(0), get_width(256, gw), 3, 2, 1, "model.3");

auto c3_4 = C3(network, weightMap, *conv3->getOutput(0), get_width(256, gw), get_width(256, gw), get_depth(9, gd), true, 1, 0.5, "model.4");

auto conv5 = convBlock(network, weightMap, *c3_4->getOutput(0), get_width(512, gw), 3, 2, 1, "model.5");

auto c3_6 = C3(network, weightMap, *conv5->getOutput(0), get_width(512, gw), get_width(512, gw), get_depth(9, gd), true, 1, 0.5, "model.6");

auto conv7 = convBlock(network, weightMap, *c3_6->getOutput(0), get_width(768, gw), 3, 2, 1, "model.7");

auto c3_8 = C3(network, weightMap, *conv7->getOutput(0), get_width(768, gw), get_width(768, gw), get_depth(3, gd), true, 1, 0.5, "model.8");

auto conv9 = convBlock(network, weightMap, *c3_8->getOutput(0), get_width(1024, gw), 3, 2, 1, "model.9");

auto spp10 = SPP(network, weightMap, *conv9->getOutput(0), get_width(1024, gw), get_width(1024, gw), 3, 5, 7, "model.10");

auto c3_11 = C3(network, weightMap, *spp10->getOutput(0), get_width(1024, gw), get_width(1024, gw), get_depth(3, gd), false, 1, 0.5, "model.11");

/* ------ yolov5 head ------ */

auto conv12 = convBlock(network, weightMap, *c3_11->getOutput(0), get_width(768, gw), 1, 1, 1, "model.12");

auto upsample13 = network->addResize(*conv12->getOutput(0));

assert(upsample13);

upsample13->setResizeMode(ResizeMode::kNEAREST);

upsample13->setOutputDimensions(c3_8->getOutput(0)->getDimensions());

ITensor* inputTensors14[] = { upsample13->getOutput(0), c3_8->getOutput(0) };

auto cat14 = network->addConcatenation(inputTensors14, 2);

auto c3_15 = C3(network, weightMap, *cat14->getOutput(0), get_width(1536, gw), get_width(768, gw), get_depth(3, gd), false, 1, 0.5, "model.15");

auto conv16 = convBlock(network, weightMap, *c3_15->getOutput(0), get_width(512, gw), 1, 1, 1, "model.16");

auto upsample17 = network->addResize(*conv16->getOutput(0));

assert(upsample17);

upsample17->setResizeMode(ResizeMode::kNEAREST);

upsample17->setOutputDimensions(c3_6->getOutput(0)->getDimensions());

ITensor* inputTensors18[] = { upsample17->getOutput(0), c3_6->getOutput(0) };

auto cat18 = network->addConcatenation(inputTensors18, 2);

auto c3_19 = C3(network, weightMap, *cat18->getOutput(0), get_width(1024, gw), get_width(512, gw), get_depth(3, gd), false, 1, 0.5, "model.19");

auto conv20 = convBlock(network, weightMap, *c3_19->getOutput(0), get_width(256, gw), 1, 1, 1, "model.20");

auto upsample21 = network->addResize(*conv20->getOutput(0));

assert(upsample21);

upsample21->setResizeMode(ResizeMode::kNEAREST);

upsample21->setOutputDimensions(c3_4->getOutput(0)->getDimensions());

ITensor* inputTensors21[] = { upsample21->getOutput(0), c3_4->getOutput(0) };

auto cat22 = network->addConcatenation(inputTensors21, 2);

auto c3_23 = C3(network, weightMap, *cat22->getOutput(0), get_width(512, gw), get_width(256, gw), get_depth(3, gd), false, 1, 0.5, "model.23");

auto conv24 = convBlock(network, weightMap, *c3_23->getOutput(0), get_width(256, gw), 3, 2, 1, "model.24");

ITensor* inputTensors25[] = { conv24->getOutput(0), conv20->getOutput(0) };

auto cat25 = network->addConcatenation(inputTensors25, 2);

auto c3_26 = C3(network, weightMap, *cat25->getOutput(0), get_width(1024, gw), get_width(512, gw), get_depth(3, gd), false, 1, 0.5, "model.26");

auto conv27 = convBlock(network, weightMap, *c3_26->getOutput(0), get_width(512, gw), 3, 2, 1, "model.27");

ITensor* inputTensors28[] = { conv27->getOutput(0), conv16->getOutput(0) };

auto cat28 = network->addConcatenation(inputTensors28, 2);

auto c3_29 = C3(network, weightMap, *cat28->getOutput(0), get_width(1536, gw), get_width(768, gw), get_depth(3, gd), false, 1, 0.5, "model.29");

auto conv30 = convBlock(network, weightMap, *c3_29->getOutput(0), get_width(768, gw), 3, 2, 1, "model.30");

ITensor* inputTensors31[] = { conv30->getOutput(0), conv12->getOutput(0) };

auto cat31 = network->addConcatenation(inputTensors31, 2);

auto c3_32 = C3(network, weightMap, *cat31->getOutput(0), get_width(2048, gw), get_width(1024, gw), get_depth(3, gd), false, 1, 0.5, "model.32");

/* ------ detect ------ */

IConvolutionLayer* det0 = network->addConvolutionNd(*c3_23->getOutput(0), 3 * (Yolo::CLASS_NUM + 5), DimsHW{ 1, 1 }, weightMap["model.33.m.0.weight"], weightMap["model.33.m.0.bias"]);

IConvolutionLayer* det1 = network->addConvolutionNd(*c3_26->getOutput(0), 3 * (Yolo::CLASS_NUM + 5), DimsHW{ 1, 1 }, weightMap["model.33.m.1.weight"], weightMap["model.33.m.1.bias"]);

IConvolutionLayer* det2 = network->addConvolutionNd(*c3_29->getOutput(0), 3 * (Yolo::CLASS_NUM + 5), DimsHW{ 1, 1 }, weightMap["model.33.m.2.weight"], weightMap["model.33.m.2.bias"]);

IConvolutionLayer* det3 = network->addConvolutionNd(*c3_32->getOutput(0), 3 * (Yolo::CLASS_NUM + 5), DimsHW{ 1, 1 }, weightMap["model.33.m.3.weight"], weightMap["model.33.m.3.bias"]);

auto yolo = addYoLoLayer(network, weightMap, "model.33", std::vector{det0, det1, det2, det3});

yolo->getOutput(0)->setName(OUTPUT_BLOB_NAME);

network->markOutput(*yolo->getOutput(0));

// Build engine

builder->setMaxBatchSize(maxBatchSize);

config->setMaxWorkspaceSize(16 * (1 << 20)); // 16MB

#if defined(USE_FP16)

config->setFlag(BuilderFlag::kFP16);

#elif defined(USE_INT8)

std::cout << "Your platform support int8: " << (builder->platformHasFastInt8() ? "true" : "false") << std::endl;

assert(builder->platformHasFastInt8());

config->setFlag(BuilderFlag::kINT8);

Int8EntropyCalibrator2* calibrator = new Int8EntropyCalibrator2(1, INPUT_W, INPUT_H, "./coco_calib/", "int8calib.table", INPUT_BLOB_NAME);

config->setInt8Calibrator(calibrator);

#endif

std::cout << "Building engine, please wait for a while..." << std::endl;

ICudaEngine* engine = builder->buildEngineWithConfig(*network, *config);

std::cout << "Build engine successfully!" << std::endl;

// Don't need the network any more

network->destroy();

// Release host memory

for (auto& mem : weightMap)

{

free((void*)(mem.second.values));

}

return engine;

}

void APIToModel(unsigned int maxBatchSize, IHostMemory** modelStream, bool& is_p6, float& gd, float& gw, std::string& wts_name) {

// Create builder

IBuilder* builder = createInferBuilder(gLogger);

IBuilderConfig* config = builder->createBuilderConfig();

// Create model to populate the network, then set the outputs and create an engine

ICudaEngine *engine = nullptr;

if (is_p6) {

engine = build_engine_p6(maxBatchSize, builder, config, DataType::kFLOAT, gd, gw, wts_name);

} else {

engine = build_engine(maxBatchSize, builder, config, DataType::kFLOAT, gd, gw, wts_name);

}

assert(engine != nullptr);

// Serialize the engine

(*modelStream) = engine->serialize();

// Close everything down

engine->destroy();

builder->destroy();

config->destroy();

}

void doInference(IExecutionContext& context, cudaStream_t& stream, void **buffers, float* input, float* output, int batchSize) {

// DMA input batch data to device, infer on the batch asynchronously, and DMA output back to host

CUDA_CHECK(cudaMemcpyAsync(buffers[0], input, batchSize * 3 * INPUT_H * INPUT_W * sizeof(float), cudaMemcpyHostToDevice, stream));

context.enqueue(batchSize, buffers, stream, nullptr);

CUDA_CHECK(cudaMemcpyAsync(output, buffers[1], batchSize * OUTPUT_SIZE * sizeof(float), cudaMemcpyDeviceToHost, stream));

cudaStreamSynchronize(stream);

}

bool parse_args(int argc, char** argv, std::string& wts, std::string& engine, bool& is_p6, float& gd, float& gw, std::string& img_dir) {

if (argc < 4) return false;

if (std::string(argv[1]) == "-s" && (argc == 5 || argc == 7)) {

wts = std::string(argv[2]);

engine = std::string(argv[3]);

auto net = std::string(argv[4]);

if (net[0] == 's') {

gd = 0.33;

gw = 0.50;

} else if (net[0] == 'm') {

gd = 0.67;

gw = 0.75;

} else if (net[0] == 'l') {

gd = 1.0;

gw = 1.0;

} else if (net[0] == 'x') {

gd = 1.33;

gw = 1.25;

} else if (net[0] == 'c' && argc == 7) {

gd = atof(argv[5]);

gw = atof(argv[6]);

} else {

return false;

}

if (net.size() == 2 && net[1] == '6') {

is_p6 = true;

}

} else if (std::string(argv[1]) == "-d" && argc == 4) {

engine = std::string(argv[2]);

img_dir = std::string(argv[3]);

} else {

return false;

}

return true;

}

int main(int argc, char** argv) {

cudaSetDevice(DEVICE);

std::string wts_name = "";

std::string engine_name = "";

bool is_p6 = false;

float gd = 0.0f, gw = 0.0f;

std::string img_dir;

if (!parse_args(argc, argv, wts_name, engine_name, is_p6, gd, gw, img_dir)) {

std::cerr << "arguments not right!" << std::endl;

std::cerr << "./yolov5 -s [.wts] [.engine] [s/m/l/x/s6/m6/l6/x6 or c/c6 gd gw] // serialize model to plan file" << std::endl;

std::cerr << "./yolov5 -d [.engine] ../samples // deserialize plan file and run inference" << std::endl;

return -1;

}

// create a model using the API directly and serialize it to a stream

if (!wts_name.empty()) {

IHostMemory* modelStream{ nullptr };

APIToModel(BATCH_SIZE, &modelStream, is_p6, gd, gw, wts_name);

assert(modelStream != nullptr);

std::ofstream p(engine_name, std::ios::binary);

if (!p) {

std::cerr << "could not open plan output file" << std::endl;

return -1;

}

p.write(reinterpret_cast(modelStream->data()), modelStream->size());

modelStream->destroy();

return 0;

}

// deserialize the .engine and run inference

std::ifstream file(engine_name, std::ios::binary);

if (!file.good()) {

std::cerr << "read " << engine_name << " error!" << std::endl;

return -1;

}

char *trtModelStream = nullptr;

size_t size = 0;

file.seekg(0, file.end);

size = file.tellg();

file.seekg(0, file.beg);

trtModelStream = new char[size];

assert(trtModelStream);

file.read(trtModelStream, size);

file.close();

std::vector file_names;

if (read_files_in_dir(img_dir.c_str(), file_names) < 0)

{

std::cerr << "read_files_in_dir failed." << std::endl;

return -1;

}

// prepare input data ---------------------------

static float data[BATCH_SIZE * 3 * INPUT_H * INPUT_W];

//for (int i = 0; i < 3 * INPUT_H * INPUT_W; i++)

// data[i] = 1.0;

static float prob[BATCH_SIZE * OUTPUT_SIZE];

IRuntime* runtime = createInferRuntime(gLogger);

assert(runtime != nullptr);

ICudaEngine* engine = runtime->deserializeCudaEngine(trtModelStream, size);

assert(engine != nullptr);

IExecutionContext* context = engine->createExecutionContext();

assert(context != nullptr);

delete[] trtModelStream;

assert(engine->getNbBindings() == 2);

void* buffers[2];

// In order to bind the buffers, we need to know the names of the input and output tensors.

// Note that indices are guaranteed to be less than IEngine::getNbBindings()

const int inputIndex = engine->getBindingIndex(INPUT_BLOB_NAME);

const int outputIndex = engine->getBindingIndex(OUTPUT_BLOB_NAME);

assert(inputIndex == 0);

assert(outputIndex == 1);

// Create GPU buffers on device

CUDA_CHECK(cudaMalloc(&buffers[inputIndex], BATCH_SIZE * 3 * INPUT_H * INPUT_W * sizeof(float)));

CUDA_CHECK(cudaMalloc(&buffers[outputIndex], BATCH_SIZE * OUTPUT_SIZE * sizeof(float)));

// Create stream

cudaStream_t stream;

CUDA_CHECK(cudaStreamCreate(&stream));

cv::VideoCapture capture(0);//cv::VideoCapture capture("../overpass.mp4");//int fourcc = cv::VideoWriter::fourcc('M','J','P','G');//capture.set(cv::CAP_PROP_FOURCC, fourcc);

if(!capture.isOpened())

{

std::cout <<"Error opening video stream or file"<< std::endl;

return-1;

}

int key;

int fcount =0;

while(1){

cv::Mat frame;

capture >> frame;if(frame.empty())

{

std::cout <<"Fail to read image from camera!"<< std::endl;

break;

}

//int fcount = 0;

//for (int f = 0; f < (int)file_names.size(); f++) {

fcount++;

//if (fcount < BATCH_SIZE && f + 1 != (int)file_names.size()) continue;

for (int b = 0; b < fcount; b++) {

// cv::Mat img = cv::imread(img_dir + "/" + file_names[f - fcount + 1 + b]);

cv::Mat img = frame;

//if (img.empty()) continue;

if(frame.empty())continue;

cv::Mat pr_img = preprocess_img(img, INPUT_W, INPUT_H); // letterbox BGR to RGB

int i = 0;

for (int row = 0; row < INPUT_H; ++row) {

uchar* uc_pixel = pr_img.data + row * pr_img.step;

for (int col = 0; col < INPUT_W; ++col) {

data[b * 3 * INPUT_H * INPUT_W + i] = (float)uc_pixel[2] / 255.0;

data[b * 3 * INPUT_H * INPUT_W + i + INPUT_H * INPUT_W] = (float)uc_pixel[1] / 255.0;

data[b * 3 * INPUT_H * INPUT_W + i + 2 * INPUT_H * INPUT_W] = (float)uc_pixel[0] / 255.0;

uc_pixel += 3;

++i;

}

}

}

// Run inference

auto start = std::chrono::system_clock::now();

doInference(*context, stream, buffers, data, prob, BATCH_SIZE);

auto end = std::chrono::system_clock::now();

//std::cout << std::chrono::duration_cast(end - start).count() << "ms" << std::endl;

int fps =1000.0/ std::chrono::duration_cast(end - start).count();

std::cout <<"fps: "<< fps << std::endl;

std::vector> batch_res(fcount);

for (int b = 0; b < fcount; b++) {

auto& res = batch_res[b];

nms(res, &prob[b * OUTPUT_SIZE], CONF_THRESH, NMS_THRESH);

}

for (int b = 0; b < fcount; b++) {

auto& res = batch_res[b];

//std::cout << res.size() << std::endl;

// cv::Mat img = cv::imread(img_dir + "/" + file_names[f - fcount + 1 + b]);

for (size_t j = 0; j < res.size(); j++) {

// cv::Rect r = get_rect(img, res[j].bbox);

// cv::rectangle(img, r, cv::Scalar(0x27, 0xC1, 0x36), 2);

// cv::putText(img, std::to_string((int)res[j].class_id), cv::Point(r.x, r.y - 1), cv::FONT_HERSHEY_PLAIN, 1.2, cv::Scalar(0xFF, 0xFF, 0xFF), 2);

//}

//cv::imwrite("_" + file_names[f - fcount + 1 + b], img);

cv::Rect r =get_rect(frame, res[j].bbox);

cv::rectangle(frame, r, cv::Scalar(0x27,0xC1,0x36),6);

std::string label = my_classes[(int)res[j].class_id];

cv::putText(frame, label, cv::Point(r.x, r.y -1), cv::FONT_HERSHEY_PLAIN,2, cv::Scalar(0xFF,0xFF,0xFF),2);

std::string jetson_fps ="Jetson Xavier NX FPS: "+ std::to_string(fps);

cv::putText(frame, jetson_fps, cv::Point(11,80), cv::FONT_HERSHEY_PLAIN,3, cv::Scalar(0,0,255),2, cv::LINE_AA);} //cv::imwrite("_" + file_names[f - fcount + 1 + b], img);}

cv::imshow("yolov5", frame);

key = cv::waitKey(1);

if(key =='q')

{

break;

}

fcount = 0;

}}

// Release stream and buffers

cudaStreamDestroy(stream);

CUDA_CHECK(cudaFree(buffers[inputIndex]));

CUDA_CHECK(cudaFree(buffers[outputIndex]));

// Destroy the engine

context->destroy();

engine->destroy();

runtime->destroy();