Installing and configuring ZooKeeper and Kafka on Ubuntu 20.04, and using Flink to read a Kafka stream into MySQL


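Because of a work requirement I recently started learning big-data tooling, mainly Kafka and Flink. I took many detours and hit many pitfalls while setting up the environment, so this post lists every problem I ran into and records the whole process, from environment setup to a successfully running demo. It is meant as a reference for my future self, and I hope it is useful to other readers as well.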

Development environment

System: Ubuntu 20.04

Software stack: JDK 15.0.2 + ZooKeeper 3.6.2 + Kafka 2.7.0 + Flink 1.12.2 + MySQL 8 + Maven 3.6.3

IDE: IDEA + Navicat

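1. Installing the JDK

Download the JDK package from the official website; the latest release, 15, is fine.

Choose the bin.tar.gz build, save it under /opt, extract it there, and then set the environment variables.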


tar -xvf jdk-15.0.2_linux-x64_bin.tar.gz

sudo vim /etc/profile

export JAVA_HOME=/opt/jdk-15.0.2

export CLASSPATH=.

export PATH=$JAVA_HOME/bin:$PATH

source /etc/profile

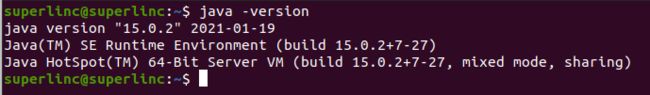

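Run java -version to check that the configuration took effect.

It is best to reboot at this point, because source /etc/profile only affects the current shell; otherwise the commands may not be found in later steps.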
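As a small cross-check of the JDK setup, the following sketch prints which JVM actually runs and whether JAVA_HOME is visible to it. The class name JdkCheck is hypothetical and not part of the original project; it assumes JDK 11 or later, so it can be started directly with java JdkCheck.java.

```java
class JdkCheck {
    /** Returns the feature (major) version of the running JVM, for example 15 for JDK 15.0.2. */
    static int featureVersion() {
        return Runtime.version().feature();
    }

    /** Summarises the running Java version and the JAVA_HOME variable seen by this process. */
    static String describe() {
        String javaHome = System.getenv("JAVA_HOME");
        return "java.version=" + System.getProperty("java.version")
                + ", JAVA_HOME=" + (javaHome == null ? "(not set)" : javaHome);
    }

    public static void main(String[] args) {
        System.out.println(describe());
        System.out.println("feature version: " + featureVersion());
    }
}
```

If JAVA_HOME prints as "(not set)", the exports in /etc/profile have not been picked up by the shell that launched the program.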

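2. Installing ZooKeeper

Extract the downloaded tar.gz package into a directory of your choice; I extracted it directly under /home with:

tar -xvf apache-zookeeper-3.6.2-bin.tar.gz

Go into the conf directory of the extracted tree and copy the sample configuration file zoo_sample.cfg to zoo.cfg.

Only the data directory and the log directory need to change; create the data and logs folders first.

# Set dataDir and dataLogDir to directories you have created; they hold the snapshot data and the transaction logs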

dataDir=/home/superlinc/zookeeper/data

dataLogDir=/home/superlinc/zookeeper/logs
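Since zoo.cfg uses the Java properties format, the two directories can also be created from the file itself rather than by hand. The sketch below is only an illustration: the class name ZooCfgDirs and the default path conf/zoo.cfg are assumptions, and it needs JDK 11 or later.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

class ZooCfgDirs {
    /** Reads dataDir and dataLogDir from zoo.cfg and creates them if they do not exist yet. */
    static List<Path> ensureDirs(Path zooCfg) {
        Properties cfg = new Properties();
        try (Reader in = Files.newBufferedReader(zooCfg)) {
            cfg.load(in);
            List<Path> dirs = new ArrayList<>();
            for (String key : new String[] {"dataDir", "dataLogDir"}) {
                String value = cfg.getProperty(key);
                if (value != null) {
                    // createDirectories is a no-op when the directory is already there
                    dirs.add(Files.createDirectories(Path.of(value.trim())));
                }
            }
            return dirs;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // Assumed default: run from the ZooKeeper installation directory
        Path zooCfg = Path.of(args.length > 0 ? args[0] : "conf/zoo.cfg");
        ensureDirs(zooCfg).forEach(dir -> System.out.println("ready: " + dir));
    }
}
```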

Configuring the system environment variables

sudo vim /etc/profile

Add:

export ZOOKEEPER_HOME=/home/superlinc/zookeeper

export PATH=$ZOOKEEPER_HOME/bin:$PATH

source /etc/profile

ZooKeeper is now installed and configured.

Starting ZooKeeper

sudo ./bin/zkServer.sh start

To see the detailed output, start it in the foreground instead:

sudo ./bin/zkServer.sh start-foreground

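If ZooKeeper is already running, or something else occupies port 2181, starting it again fails with an "address already in use" error:

Caused by: java.net.BindException: Address already in use

Find the process that holds the port and kill it, replacing 11051 with the PID that netstat reports on your machine:

sudo netstat -anp | grep 2181

sudo kill 11051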
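Whether a port is already taken can also be checked before starting a service. The following is a minimal sketch with a hypothetical class name, PortCheck; it simply tries to open a TCP connection and treats any failure as "nothing is listening".

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

class PortCheck {
    /** Returns true if something accepts TCP connections on host:port within timeoutMillis. */
    static boolean isListening(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused or timed out: treated as "nothing is listening"
            return false;
        }
    }

    public static void main(String[] args) {
        // 2181 is the ZooKeeper client port used in this article
        int port = args.length > 0 ? Integer.parseInt(args[0]) : 2181;
        boolean busy = isListening("localhost", port, 500);
        System.out.println("port " + port + (busy ? " is in use" : " is free"));
    }
}
```

The same check works for the other services in this setup by passing a different port, for example 9092 for the Kafka broker or 8081 for the Flink web UI.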

3. Installing Kafka

Installing Kafka is simple: extract it under /home as well.

tar -xvf kafka_2.12-2.7.0.tgz

In the config directory, only one line of server.properties needs to change:

log.dirs=/home/superlinc/kafka/logs

Since ZooKeeper is already running, start the broker directly from the Kafka directory:

sudo ./bin/kafka-server-start.sh config/server.properties

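After a forced shutdown of Kafka or ZooKeeper, restarting often fails with kafka.common.InconsistentClusterIdException. There are two ways to recover:

• Delete the data and logs directories under ZooKeeper together with the logs directory under Kafka, then restart ZooKeeper and Kafka. This discards all existing topics and messages.

• Or delete only the meta.properties file in Kafka's log directory (logs) and restart Kafka.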
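The exception means that the cluster.id stored in meta.properties no longer matches the one registered in ZooKeeper. Before deleting anything, it can help to look at the stored value. The sketch below reads it with java.util.Properties; the class name KafkaMetaProperties is hypothetical, and the default directory is the log.dirs value used in this article.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Optional;
import java.util.Properties;

class KafkaMetaProperties {
    /** Returns the cluster.id recorded in logDir/meta.properties, or empty if the file or key is absent. */
    static Optional<String> clusterId(Path logDir) {
        Path meta = logDir.resolve("meta.properties");
        if (!Files.isRegularFile(meta)) {
            return Optional.empty();
        }
        Properties props = new Properties();
        try (Reader in = Files.newBufferedReader(meta)) {
            props.load(in);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return Optional.ofNullable(props.getProperty("cluster.id"));
    }

    public static void main(String[] args) {
        // Default taken from log.dirs in server.properties as configured in this article
        Path logDir = Path.of(args.length > 0 ? args[0] : "/home/superlinc/kafka/logs");
        System.out.println(clusterId(logDir)
                .map(id -> "stored cluster.id: " + id)
                .orElse("no meta.properties or no cluster.id in " + logDir));
    }
}
```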

4. Installing Flink

Extract it under /home as well, then start the local cluster from the extracted directory.

tar -xvf flink-1.12.2-bin-scala_2.11.tgz

sudo ./bin/start-cluster.sh

Once it has started, open http://localhost:8081 to reach the Flink web UI.
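The same check can be done without a browser. This is a rough sketch using the JDK's built-in HTTP client (JDK 11 or later); the class name FlinkUiCheck is hypothetical, and the convention of returning -1 when the server cannot be reached is my own choice.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

class FlinkUiCheck {
    /** Returns the HTTP status code for url, or -1 if the server cannot be reached. */
    static int statusOf(String url) {
        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(2))
                .build();
        HttpRequest request = HttpRequest.newBuilder(URI.create(url))
                .timeout(Duration.ofSeconds(5))
                .GET()
                .build();
        try {
            // The body is not needed here, only the status code
            return client.send(request, HttpResponse.BodyHandlers.discarding()).statusCode();
        } catch (IOException e) {
            return -1;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return -1;
        }
    }

    public static void main(String[] args) {
        String url = args.length > 0 ? args[0] : "http://localhost:8081";
        int status = statusOf(url);
        System.out.println(status == -1 ? url + " is not reachable" : url + " answered with HTTP " + status);
    }
}
```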

5. Installing MySQL

You can follow a separate blog post for the installation itself.

The main issue is how to enable remote connections.

6. Writing a first Flink + Kafka program

Maven dependencies

<properties>
    <maven.compiler.source>15</maven.compiler.source>
    <maven.compiler.target>15</maven.compiler.target>
    <!-- The Kafka 0.11 connector used below was removed in Flink 1.12, so the client libraries stay on 1.11.0 -->
    <flink.version>1.11.0</flink.version>
    <java.version>15</java.version>
    <scala.binary.version>2.11</scala.binary.version>
</properties>

<dependencies>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-java</artifactId>
        <version>${flink.version}</version>
        <scope>provided</scope>
    </dependency>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-streaming-java_${scala.binary.version}</artifactId>
        <version>${flink.version}</version>
        <scope>provided</scope>
    </dependency>
    <dependency>
        <groupId>org.slf4j</groupId>
        <artifactId>slf4j-log4j12</artifactId>
        <version>1.7.30</version>
        <scope>runtime</scope>
    </dependency>
    <dependency>
        <groupId>log4j</groupId>
        <artifactId>log4j</artifactId>
        <version>1.2.17</version>
        <scope>runtime</scope>
    </dependency>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-connector-kafka-0.11_${scala.binary.version}</artifactId>
        <version>${flink.version}</version>
    </dependency>
    <dependency>
        <groupId>com.alibaba</groupId>
        <artifactId>fastjson</artifactId>
        <version>1.2.51</version>
    </dependency>
    <dependency>
        <groupId>mysql</groupId>
        <artifactId>mysql-connector-java</artifactId>
        <version>8.0.22</version>
    </dependency>
</dependencies>

First connect to MySQL with Navicat, then create a table named "student":

DROP TABLE IF EXISTS `student`;

CREATE TABLE `student` (

`id` int(11) unsigned NOT NULL AUTO_INCREMENT,

`name` varchar(25) COLLATE utf8_bin DEFAULT NULL,

`password` varchar(25) COLLATE utf8_bin DEFAULT NULL,

`age` int(10) DEFAULT NULL,

PRIMARY KEY (`id`)

) ENGINE=InnoDB AUTO_INCREMENT=5 DEFAULT CHARSET=utf8 COLLATE=utf8_bin;

Creating the Student class

package com.linc.flink;

public class Student {
    public int id;
    public String name;
    public String password;
    public int age;

    // No-argument constructor, needed by Fastjson and by Flink's POJO handling
    public Student() {
    }

    public Student(int id, String name, String password, int age) {
        this.id = id;
        this.name = name;
        this.password = password;
        this.age = age;
    }

    @Override
    public String toString() {
        return "Student{" +
                "id=" + id +
                ", name='" + name + '\'' +
                ", password='" + password + '\'' +
                ", age=" + age +
                '}';
    }

    public int getId() {
        return id;
    }

    public void setId(int id) {
        this.id = id;
    }

    public String getName() {
        return name;
    }

    public void setName(String name) {
        this.name = name;
    }

    public String getPassword() {
        return password;
    }

    public void setPassword(String password) {
        this.password = password;
    }

    public int getAge() {
        return age;
    }

    public void setAge(int age) {
        this.age = age;
    }
}

Creating the Kafka utility class

package com.linc.flink;

import com.alibaba.fastjson.JSON;
import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerRecord;

import java.util.Properties;

public class KafkaUtils {
    public static final String broker_list = "localhost:9092";
    // The Kafka topic must be the same one the Flink program reads from
    public static final String topic = "student";

    public static void writeToKafka() {
        Properties props = new Properties();
        props.put("bootstrap.servers", broker_list);
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

        // try-with-resources closes the producer once all records have been sent
        try (KafkaProducer<String, String> producer = new KafkaProducer<>(props)) {
            for (int i = 1; i <= 100; i++) {
                Student student = new Student(i, "linc" + i, "password" + i, 18 + i);
                String json = JSON.toJSONString(student);
                // No key and no explicit partition: only the JSON value is sent
                producer.send(new ProducerRecord<>(topic, json));
                System.out.println("Sent: " + json);
            }
            producer.flush();
        }
    }

    public static void main(String[] args) {
        writeToKafka();
    }
}

SinkToMySQL

package com.linc.flink;

import org.apache.flink.configuration.Configuration;
import org.apache.flink.streaming.api.functions.sink.RichSinkFunction;

import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.PreparedStatement;

public class SinkToMySQL extends RichSinkFunction<Student> {
    private transient PreparedStatement ps;
    private transient Connection connection;

    /**
     * Opens the connection in open(), so that invoke() does not have to
     * create and release a connection for every record.
     */
    @Override
    public void open(Configuration parameters) throws Exception {
        super.open(parameters);
        connection = getConnection();
        String sql = "insert into student(id, name, password, age) values(?, ?, ?, ?)";
        ps = connection.prepareStatement(sql);
    }

    @Override
    public void close() throws Exception {
        super.close();
        // Release resources: the statement first, then the connection that owns it
        if (ps != null) {
            ps.close();
        }
        if (connection != null) {
            connection.close();
        }
    }

    /**
     * invoke() is called once for every record to insert.
     */
    @Override
    public void invoke(Student value, Context context) throws Exception {
        // Bind the fields and execute the insert
        ps.setInt(1, value.getId());
        ps.setString(2, value.getName());
        ps.setString(3, value.getPassword());
        ps.setInt(4, value.getAge());
        ps.executeUpdate();
    }

    private static Connection getConnection() throws Exception {
        // Let a failure propagate, so the job fails here rather than with a NullPointerException in open()
        Class.forName("com.mysql.cj.jdbc.Driver");
        return DriverManager.getConnection("jdbc:mysql://localhost:3306/mysql?useUnicode=true&characterEncoding=UTF-8", "root", "root");
    }
}

Flink main program

package com.linc.flink;

import com.alibaba.fastjson.JSON;
import org.apache.flink.api.common.serialization.SimpleStringSchema;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer011;

import java.util.Properties;

public class Main {
    public static void main(String[] args) throws Exception {
        final StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

        // zookeeper.connect and the key/value deserializers are not needed here:
        // this consumer talks to the brokers directly and Flink deserializes with the schema passed below
        Properties props = new Properties();
        props.put("bootstrap.servers", "localhost:9092");
        props.put("group.id", "metric-group");
        // With no committed offsets for this group, start from the latest records
        props.put("auto.offset.reset", "latest");

        SingleOutputStreamOperator<Student> student = env.addSource(new FlinkKafkaConsumer011<>(
                        "student", // must match the topic used by the KafkaUtils class above
                        new SimpleStringSchema(),
                        props)).setParallelism(1)
                .map(string -> JSON.parseObject(string, Student.class)); // Fastjson parses each string into a Student

        student.addSink(new SinkToMySQL()); // write the records to MySQL

        env.execute("Flink add sink");
    }
}

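Results

Run the Flink main program first, then run the KafkaUtils.java utility class. The order matters because the consumer is configured with auto.offset.reset=latest, so on a first run, records sent before the job starts are not read.

If the following error appears:

java.lang.NoClassDefFoundError: org/apache/flink/api/common/serialization/DeserializationSchema

add the jar files from the lib directory of the Flink installation to the project's classpath. The Flink dependencies are declared with provided scope, so they are missing from the runtime classpath when the program is launched from the IDE.

Program output:

MySQL result: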