hive小操作·自定义函数 udtf

包含两个案例

1、hive多列操作----行转列

2、hive单列操作----使用split切分json数据

一、udtf的介绍

UDTF(User-Defined Table-Generating Functions) 用来解决 输入一行输出多行(On-to-many maping) 的需求

二、udtf的使用

1、使用规则必须继承org.apache.hadoop.hive.ql.udf.generic.GenericUDTF,实现initialize, process, close三个方法。

2、程序流程:

UDTF首先会调用initialize方法,此方法返回UDTF的返回行的信息(返回个数,类型)。

初始化完成后,会调用process方法,真正的处理过程在process函数中,在process中,每一次forward()调用产生一行;如果产生多列可以将多个列的值放在一个数组中,然后将该数组传入到forward()函数。

最后close()方法调用,对需要清理的方法进行清理。

三、UDTF 案例一

1、行转列

如下hive表数据

1 a,b,c 1,2

2 j,k NULL

3 NULL NULL将行通过UDTF转为列

如下

a 1

b 2

c NULL

j NULL

k NULL2、具体实现

pom.xml

4.0.0

org.springframework.boot

spring-boot-starter-parent

2.1.6.RELEASE

com.hello

hive

0.0.1-SNAPSHOT

hive

Demo project for Spring Boot

1.8

org.springframework.boot

spring-boot-starter-web

org.springframework.boot

spring-boot-starter-test

test

com.vaadin.external.google

android-json

org.apache.hive

hive-exec

3.1.1

org.apache.spark

spark-core_2.11

2.4.3

org.springframework.boot

spring-boot-maven-plugin

具体实现代码

package com.hello.hive;

/**

* @ProjectName: hive

* @Package: com.hello.hive

* @ClassName: ArrToMapUDTF

* @Author: dongsong

* @Description: UDTF-行变列

* @Date: 2019/8/12 9:19

* @Version: 1.0

*/

import java.util.List;

import com.google.common.collect.Lists;

import org.apache.hadoop.hive.ql.exec.UDFArgumentException;

import org.apache.hadoop.hive.ql.metadata.HiveException;

import org.apache.hadoop.hive.ql.udf.generic.GenericUDTF;

import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspector;

import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspectorFactory;

import org.apache.hadoop.hive.serde2.objectinspector.StructObjectInspector;

import org.apache.hadoop.hive.serde2.objectinspector.primitive.PrimitiveObjectInspectorFactory;

/**

*@program: hive

*@description: UDTF-行变列

*@author: by song

*@create: 2019-08-12 09:19

*/

public class ArrToMapUDTF extends GenericUDTF {

private String[] obj = new String[2];

@Override

public StructObjectInspector initialize(StructObjectInspector argOIs) throws UDFArgumentException {

//生成表的字段名数组

List colName = Lists.newLinkedList();

colName.add("key");

colName.add("value");

//生成表的字段对象监控器(object inspector)数组,即生成表的行对象每个字段的类型

List resType = Lists.newLinkedList();

resType.add(PrimitiveObjectInspectorFactory.javaStringObjectInspector);

resType.add(PrimitiveObjectInspectorFactory.javaStringObjectInspector);

// 返回分别为列名 和 列类型,通过ObjectInspectorFactory.getStandardStructObjectInspector方法

//获取到hive的值对object进行访问

return ObjectInspectorFactory.getStandardStructObjectInspector(colName, resType);

}

//在udtf中实际对hive的操作,如上面获取到的是在objectinspector对象中解耦的两个列值,然后下面的函数是对

//这两列的实际操作,通过字符串截取来将行转为列

//process方法中的参数Object[] args,我认为应该是ObjectInspectorFactory.getStandardStructObjectInspector

//对hive解耦后的列值

@Override

public void process(Object[] args) throws HiveException {

if (args[0] == null) {

return;

}

String arg1 = args[0].toString();

String[] arr1 = arg1.split(",");

String[] arr2 = null;

if (args[1] != null) {

arr2 = args[1].toString().split(",");

}

for (int i = 0; i < arr1.length; i++) {

obj[0] = arr1[i];

if (arr2 != null && arr2.length > i) {

obj[1] = arr2[i];

} else {

obj[1] = null;

}

//使用本机调forward会报异常,正好可以打印出不在hive环境运行时的结果

try{

forward(obj);

}catch (Exception e){

System.out.println("***********本机调试***********");

System.out.println(obj[0]+" "+obj[1]);

}

}

}

@Override

public void close() throws HiveException { }

public static void main(String[] args) throws HiveException {

Object[] arg0 = new Object[2];

arg0[0]="a,s,d";

arg0[1]="1,2";

ArrToMapUDTF arr=new ArrToMapUDTF();

arr.process(arg0);

}

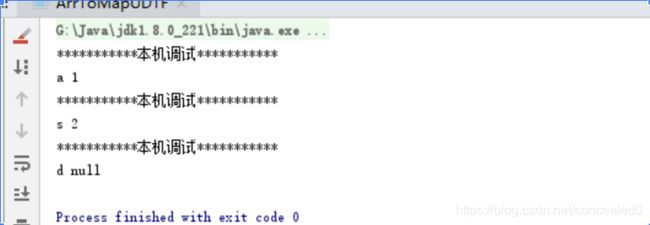

} 本机调试结果

3、执行过程

1、maven函数打包

2、打包好后放入Hadoop自定义目录中

3、进入hive页面中

-- 执行效果

hive> add jar /*/*.jar;

Added [/*/*.jar] to class path

Added resources: [/*/*.jar]

hive> create temporary function get_map as 'com.hello.hive.ArrToMapUDTF';

OK

Time taken: 0.005 seconds

hive> select get_map(col,col1) from test;

OK

a 1

b 2

c NULL

j NULL

k NULL

Time taken: 1.008 seconds, Fetched: 5 row(s)

以上为get_map函数的使用方法,该方法局限性为使用时无法引用其它列。结合lateral view关键词使用可以达到预期效果。

select t.ind,t.col,t.col1,t1.key,t1.value

from test t

lateral view get_map(col,col1) t1 as key,value;该使用方法中涉及到t、t1两张表。lateral view将两张表进行join操作,过程如下:

对输入的test表中的col、col1列进行udtf操作,将得到的数据集命名为t1,并对列命令为key,value

将t表和t1表进行join操作,得到结果数据集

可以看到lateral view 和join操作类似。另外lateral view也支持谓词下推和outer join操作,当udtf不返回值而左侧表有值,此时outer关键词登场了,类似于left outer join操作

四、UDTF 案例二

1、hive单列操作----使用split切分json数据

package com.hello.hive;/**

* @ProjectName: hive

* @Package: com.hello.hive

* @ClassName: SplitJsonUDTF

* @Author: dongsong

* @Description: 用来切分json是数据

* @Date: 2019/8/12 13:54

* @Version: 1.0

*/

import java.text.SimpleDateFormat;

import java.util.ArrayList;

import java.util.Date;

import java.util.Set;

import org.apache.hadoop.hive.ql.exec.UDFArgumentException;

import org.apache.hadoop.hive.ql.exec.UDFArgumentLengthException;

import org.apache.hadoop.hive.ql.metadata.HiveException;

import org.apache.hadoop.hive.ql.udf.generic.GenericUDTF;

import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspector;

import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspectorFactory;

import org.apache.hadoop.hive.serde2.objectinspector.StructObjectInspector;

import org.apache.hadoop.hive.serde2.objectinspector.primitive.PrimitiveObjectInspectorFactory;

import com.alibaba.fastjson.JSONArray;

import com.alibaba.fastjson.JSONObject;

/**

*@program: hive

*@description: 用来切分json是数据

*@author: by song

*@create: 2019-08-12 13:54

*/

public class SplitJsonUDTF extends GenericUDTF{

@Override

public StructObjectInspector initialize(ObjectInspector[] args)

throws UDFArgumentException {

if (args.length != 1) {//判断参数是否为1.

throw new UDFArgumentLengthException(

"Redis_Gmp takes only one argument");

}//判断参数是否是PRIMITIVE,LIST,MAP,STRUCT,UNION类型;

if (args[0].getCategory() != ObjectInspector.Category.PRIMITIVE) {

throw new UDFArgumentException(

"Redis_Gmp takes string as a parameter");

}

ArrayList fieldNames = new ArrayList();

ArrayList fieldOIs = new ArrayList();

fieldNames.add("content_id");

fieldOIs.add(PrimitiveObjectInspectorFactory.javaStringObjectInspector);

fieldNames.add("app");

fieldOIs.add(PrimitiveObjectInspectorFactory.javaStringObjectInspector);

fieldNames.add("click");

fieldOIs.add(PrimitiveObjectInspectorFactory.javaStringObjectInspector);

fieldNames.add("impression");

fieldOIs.add(PrimitiveObjectInspectorFactory.javaStringObjectInspector);

fieldNames.add("ctr");

fieldOIs.add(PrimitiveObjectInspectorFactory.javaStringObjectInspector);

return ObjectInspectorFactory.getStandardStructObjectInspector(

fieldNames, fieldOIs);

}

@Override

public void close() throws HiveException {

// TODO Auto-generated method stub

}

@Override

public void process(Object[] arg0) throws HiveException {

String input = arg0[0].toString();

String[] split = input.split(",\\[");

String content_id = split[0].replace("(", "");

String json_str = split[1].replace(")", "");

json_str = "["+json_str;

//(98337176,[{"-1":{"impression":167,"click":0.9933209,"ctr":0.02424849}},{"ali":{"impression":163,"click":0.9933209,"ctr":0.025131164}}])

//把Json格式数据转为数组形式

JSONArray json_arr = JSONArray.parseArray(json_str);

//[{"-1":{"ctr":0.006597938,"impression":150,"click":0.3438084}},{"coolpad":{"ctr":0.018344998,"impression":56,"click":0.3438084}},{"emui":{"ctr":0,"impression":64,"click":0}}]

for(int i =0 ;i < json_arr.size();i++){

String[] result = new String[5];

result[0] = content_id;

//获取到单个json数组

JSONObject ele = json_arr.getJSONObject(i);//{"-1":{"ctr":0.006597938,"impression":150,"click":0.3438084}}

//通过set对象获取到json的键

Set ks = ele.keySet(); //[-1]

for(String k : ks){

result[1] = k;//将键转为string字符串-1

}

result[2] = ele.getJSONObject(result[1]).getString("click");

result[3] = ele.getJSONObject(result[1]).getString("impression");

result[4] = ele.getJSONObject(result[1]).getString("ctr");

//forward(result);

//使用本机调forward会报异常,正好可以打印出不在hive环境运行时的结果

try{

forward(result);

}catch (Exception e){

System.out.println("***********本机调试***********");

System.out.println(result[0] + " " + result[1] + " " + result[2] +" "+result[3]+" "+result[4]);

}

}

}

public static void main(String[] args) throws HiveException {

SplitJsonUDTF redis_gmp = new SplitJsonUDTF();

String s1 = "(98337176,[{\"-1\":{\"impression\":167,\"click\":0.9933209,\"ctr\":0.02424849}},{\"ali\":{\"impression\":163,\"click\":0.9933209,\"ctr\":0.025131164}}])";

String s2 = "(119962233,[{\"-1\":{\"impression\":150,\"click\":0.3438084,\"ctr\":0.006597938}},{\"coolpad\":{\"impression\":56,\"click\":0.3438084,\"ctr\":0.018344998}},{\"emui\":{\"impression\":64,\"click\":0,\"ctr\":0}}])";

Object[] arg0 = new Object[]{s2};

redis_gmp.process(arg0);

}

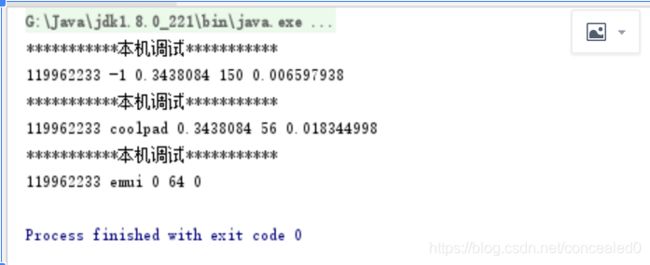

} 本机测试结果

2、使用方法

UDTF有两种使用方法,一种直接放到select后面,一种和lateral view一起使用。

1、直接select中使用

select explode_map(properties) as (col1,col2) from src;不可以添加其他字段使用

select a, explode_map(properties) as (col1,col2) from src不可以嵌套调用

select explode_map(explode_map(properties)) from src不可以和group by/cluster by/distribute by/sort by一起使用

select explode_map(properties) as (col1,col2) from src group by col1, col22、和lateral view一起使用

select src.id, mytable.col1, mytable.col2 from src lateral view explode_map(properties) mytable as col1, col2;