Python: reading a PDF to extract text and images

Problem description

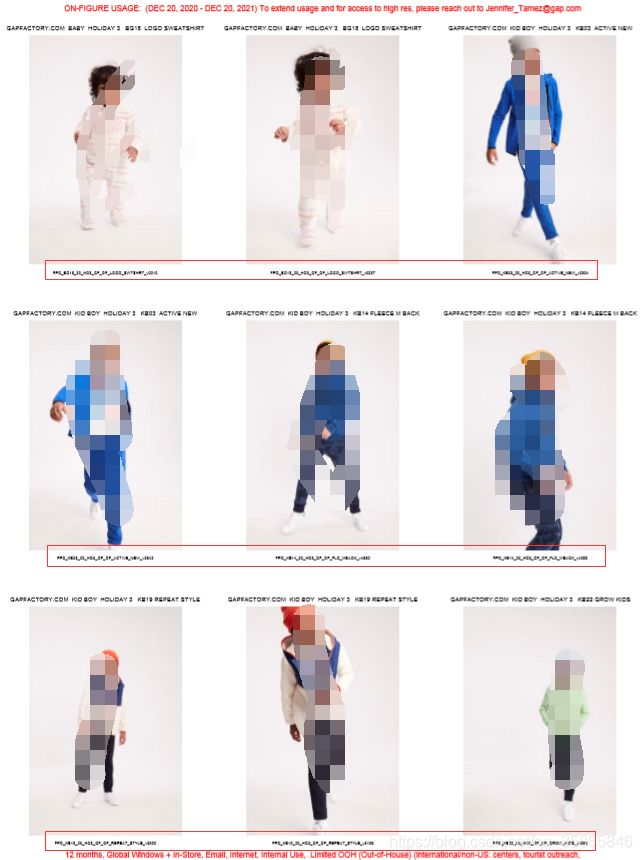

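As the figure above shows, the PDF runs to several dozen pages, with nine images on every page.

The goal is to extract the images and name each one after the text printed beneath it.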

The main issues involved:

- Image extraction

- Text recognition

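I drew on the text-recognition material referenced above, but the image-extraction approach above returned the images in an inconsistent order, so there was no way to combine the two to do what I needed.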
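One way to make the order predictable, regardless of the order a library returns objects in, is to sort items by position yourself. A minimal sketch, assuming every item comes with a bounding box `(left, top, right, bottom)` in a top-left-origin coordinate system (as in a rendered page image); the helper `reading_order` and its `row_tol` tolerance are hypothetical, not part of pdfminer or PyMuPDF:

```python
def reading_order(boxes, row_tol=10):
    """Sort (left, top, right, bottom) boxes top-to-bottom, then left-to-right.

    Boxes whose top edges are within row_tol of a row's first box share that row.
    """
    rows = []
    for box in sorted(boxes, key=lambda b: b[1]):
        # Join the current row if this box's top is close to the row's first box
        if rows and box[1] - rows[-1][0][1] <= row_tol:
            rows[-1].append(box)
        else:
            rows.append([box])
    # Inside each row, order boxes by their left edge
    return [box for row in rows for box in sorted(row, key=lambda b: b[0])]


# Boxes of a 2x2 grid, given in scrambled order
scrambled = [(237, 40, 375, 245), (45, 292, 183, 497), (45, 38, 183, 245), (237, 290, 375, 497)]
print(reading_order(scrambled))
```

pdfminer's own `bbox` values use a bottom-left origin, so their y coordinates would need flipping first (for example `top = page_height - y1`).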

#Anti-scraping marker: original post by CSDN user 诡途, https://blog.csdn.net/qq_35866846

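After digging through the pdfminer source code, I settled on saving each PDF page as a single image (in the code below the page rendering is done with PyMuPDF, imported as `fitz`), and then cropping each product image out of the page image by pixel coordinates with PIL's `Image`. This only works because the layout is fixed: every page places its images at the same positions. I haven't found a better method yet, and I couldn't find a reasonably complete solution to this problem online, so this may be the first write-up of it. If you know a better approach, please share it in the comments.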
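Because the layout is fixed, the nine crop boxes can be generated from three column ranges and three row ranges. A minimal sketch of that step; the helper `grid_boxes` is hypothetical, and the ranges are the ones the code below uses for this particular PDF (they only hold for this layout and rendering resolution):

```python
from itertools import product


def grid_boxes(x_ranges, y_ranges):
    """Return PIL-style (left, upper, right, lower) boxes, row by row."""
    # product() varies its last argument fastest, so rows come first and columns
    # run left to right inside each row: 1,2,3 / 4,5,6 / 7,8,9
    return [(left, upper, right, lower)
            for (upper, lower), (left, right) in product(y_ranges, x_ranges)]


x_list = [[45, 183], [237, 375], [429, 567]]
y_list = [[38, 245], [290, 497], [542, 749]]
boxes = grid_boxes(x_list, y_list)
print(boxes[0], boxes[1], boxes[3])
# (45, 38, 183, 245) (237, 38, 375, 245) (45, 290, 183, 497)
```

Each box can then be passed to Pillow's `Image.crop(box)`, which expects exactly this `(left, upper, right, lower)` order.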

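I have also written a few earlier posts on image processing with `Image`:

- Scaling images proportionally by width

- Cutting long images at a fixed pixel length

- Image cutting and stitching in Python: a creative use of numpy arrays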

Code implementation

```python
# Import libraries
import datetime
import os
import re

import fitz  # PyMuPDF, used to render pages as images
import pandas as pd
import pdfminer.layout
from PIL import Image
from pdfminer.converter import PDFPageAggregator
from pdfminer.layout import LAParams
from pdfminer.pdfdocument import PDFDocument, PDFTextExtractionNotAllowed
from pdfminer.pdfinterp import PDFPageInterpreter, PDFResourceManager
from pdfminer.pdfpage import PDFPage
from pdfminer.pdfparser import PDFParser
```

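```python
# Save every PDF page as a PNG image
def save_page_pic(pdf_path, page_path):
    # Empty the page-image folder before saving (create it first if needed)
    os.makedirs(page_path, exist_ok=True)
    for wj in os.listdir(page_path):
        os.remove(os.path.join(page_path, wj))
    # Open the PDF
    doc = fitz.open(pdf_path)
    # Process the document page by page
    for d in doc:
        # Page number (PyMuPDF counts from 0)
        page = d.number + 1
        # File name of the single-page image
        pic_name = f"page_{page}.png"
        page_pic_path = os.path.join(page_path, pic_name)
        # Render the page (older PyMuPDF releases call these getPixmap()/writePNG())
        pix = d.get_pixmap()
        if pix.n - pix.alpha < 4:  # GRAY or RGB: can be saved as PNG directly
            pix.save(page_pic_path)
        else:  # CMYK: convert to RGB first
            pix0 = fitz.Pixmap(fitz.csRGB, pix)
            pix0.save(page_pic_path)
            pix0 = None
        pix = None  # release resources
    doc.close()
```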
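`get_pixmap()` renders at 72 dpi by default, so one pixel of the page image equals one PDF point; that is why fixed pixel coordinates work for cropping. If you render at a higher resolution for sharper product images (recent PyMuPDF versions accept a `dpi=` argument to `get_pixmap`), the crop boxes must be scaled by the same factor. A minimal sketch; `scale_box` is a hypothetical helper:

```python
def scale_box(box, dpi, base_dpi=72):
    """Scale a (left, upper, right, lower) box measured at base_dpi to another dpi."""
    factor = dpi / base_dpi
    # Round to whole pixels, since crop boxes are pixel positions
    return tuple(round(v * factor) for v in box)


print(scale_box((45, 38, 183, 245), dpi=144))  # (90, 76, 366, 490)
```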

```python
# Parse the text content of the PDF
def parse_pdf_txt(pdf_path, code_str):
    # Open the PDF in binary mode; pages are read lazily, so all work stays inside the with-block
    with open(pdf_path, "rb") as fp:
        # Create a PDF parser object associated with the file object
        parser = PDFParser(fp)
        # Create a PDF document object that stores the document structure
        # (a password can be passed as the 2nd argument)
        document = PDFDocument(parser)
        # Check whether the document allows text extraction; abort if not
        if not document.is_extractable:
            raise PDFTextExtractionNotAllowed
        # Resource manager for shared resources such as fonts
        rsrcmgr = PDFResourceManager()
        # Layout-analysis parameters
        laparams = LAParams(
            char_margin=10.0,
            line_margin=0.2,
            boxes_flow=0.2,
            all_texts=False,
        )
        # Page aggregator device that collects the layout of each page
        device = PDFPageAggregator(rsrcmgr, laparams=laparams)
        # Interpreter that feeds page content into the device
        interpreter = PDFPageInterpreter(rsrcmgr, device)
        result = []
        # Loop over all pages in the document
        for page_count, page in enumerate(PDFPage.create_pages(document), start=1):
            # Read the page into a layout object
            interpreter.process_page(page)
            layout = device.get_result()
            txt_list = [page_count]
            for obj in layout:
                if isinstance(obj, pdfminer.layout.LTTextBoxHorizontal):
                    txt = obj.get_text()
                    # Decode characters pdfminer could not map (shown as "(cid:NN)")
                    for cid in re.findall(r"cid:\d+", txt):
                        cid_key = cid.split(":")[1]
                        if cid_key in code_str:
                            txt = txt.replace(f"({cid})", code_str[cid_key])
                    # After decoding, report codes still missing from the dictionary
                    cid_list = re.findall(r"cid:\d+", txt)
                    if cid_list:
                        print(f"Decoding dictionary needs new entries: {cid_list}")
                    txt_list.append(txt)
            result.append(txt_list)
    data = pd.DataFrame(result)
    # Column 0 is the page number ("页码"); the rest are text elements "元素1", "元素2", ...
    data.columns = ["页码" if col == 0 else f"元素{col}" for col in data.columns]
    return data
```
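When a font has no usable Unicode mapping, pdfminer emits placeholders such as `(cid:46)` instead of the character. The decoding step inside `parse_pdf_txt` can be pulled out into a small, testable function. A sketch, where `decode_cids` is a hypothetical name and the mapping is a subset of the hand-built `code_str` dictionary, which is specific to the fonts in this PDF:

```python
import re

CID_PATTERN = re.compile(r"\(cid:(\d+)\)")


def decode_cids(txt, code_str):
    """Replace "(cid:N)" placeholders using code_str; return (text, unknown codes)."""
    unknown = []

    def repl(match):
        key = match.group(1)
        if key in code_str:
            return code_str[key]
        # Keep the placeholder so the gap stays visible, and record the code
        unknown.append(key)
        return match.group(0)

    return CID_PATTERN.sub(repl, txt), unknown


code_str = {"46": "K", "49": "N", "25": "6", "23": "4"}
print(decode_cids("SKU-(cid:46)(cid:49)(cid:25)(cid:99)", code_str))
# ('SKU-KN6(cid:99)', ['99'])
```

A single `re.sub` pass decodes and collects missing codes at once, so a missing key is reported instead of raising `KeyError`.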

```python
# Crop the nine product images out of each page image and name them after their captions
def save_product_pic(txt_data, product_path, page_path):
    page_files = os.listdir(page_path)
    count, total_page = 0, len(page_files)
    data = txt_data.copy()
    # Collected image names
    result = []
    # Pixel ranges of the 9 images; they only fit this PDF's fixed layout at the default render resolution
    x_list = [[45, 183], [237, 375], [429, 567]]
    y_list = [[38, 245], [290, 497], [542, 749]]
    # Text elements holding the captions, in the order 1,2,3 / 4,5,6 / 7,8,9
    need_col = ["元素4", "元素5", "元素6", "元素10", "元素11", "元素12", "元素16", "元素17", "元素18"]
    for pic_name in page_files:
        count += 1
        pic_path = os.path.join(page_path, pic_name)
        # Page number in the PDF, parsed from "page_N.png"
        page = int(pic_name.split("_")[1].split(".")[0])
        product_pic_list = data.loc[data["页码"] == page, need_col].values.tolist()[0]
        # Read the single-page image
        with Image.open(pic_path) as im:
            # Number positions left to right, top to bottom: 1,2,3 / 4,5,6 / 7,8,9
            i = 0
            for upper, lower in y_list:
                for left, right in x_list:
                    # Pixel box of the current image
                    box = (left, upper, right, lower)
                    _product_pic_name = product_pic_list[i]
                    i += 1
                    # The last page may have fewer than 9 images, so skip empty captions
                    if isinstance(_product_pic_name, str) and _product_pic_name.strip():
                        # Name of the single product image
                        product_pic_name = _product_pic_name.strip() + ".png"
                        result.append(product_pic_name[:-4])
                        product_pic_path = os.path.join(product_path, product_pic_name)
                        # Crop image i (i in 1..9) and save it
                        im.crop(box).save(product_pic_path)
        print(f"Page {count} extracted, {total_page - count} page(s) remaining!")
    # One column "图片名称" (image name)
    pd_result = pd.DataFrame(result, columns=["图片名称"])
    return pd_result
```
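The hard-coded caption columns (`元素4`, `元素5`, ...) depend on the exact order in which pdfminer groups the page's text boxes. A more layout-driven alternative, swapped in here and not taken from the code above, is to pair each image box with the nearest text box directly beneath it. A minimal sketch, assuming image boxes and text boxes are `(left, top, right, bottom)` tuples in the same top-left-origin pixel coordinates; `match_captions` and `max_gap` are hypothetical:

```python
def match_captions(image_boxes, text_boxes, max_gap=40):
    """For each image box, return the text whose box starts closest below it.

    text_boxes is a list of ((left, top, right, bottom), text) pairs.
    Returns one caption (or None) per image box.
    """
    captions = []
    for left, top, right, bottom in image_boxes:
        best, best_gap = None, None
        for (t_left, t_top, t_right, _t_bottom), text in text_boxes:
            gap = t_top - bottom
            # Candidate must start below the image (within max_gap) and overlap it horizontally
            if 0 <= gap <= max_gap and t_left < right and t_right > left:
                if best_gap is None or gap < best_gap:
                    best, best_gap = text.strip(), gap
        captions.append(best)
    return captions


images = [(45, 38, 183, 245), (237, 38, 375, 245)]
texts = [((50, 250, 180, 262), "A-100\n"), ((240, 251, 370, 263), "B-200\n"), ((50, 5, 180, 20), "Header")]
print(match_captions(images, texts))  # ['A-100', 'B-200']
```

The text boxes could come from pdfminer's `LTTextBoxHorizontal.bbox` after converting its bottom-left origin to top-left (`top = page_height - y1`, `bottom = page_height - y0`).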

```python
# Input: the first file in the "pdf" folder
pdf_path = os.path.join("pdf", os.listdir("pdf")[0])
today = datetime.date.today().isoformat()
# Output folders: 存档/<date> ("存档" = archive) and its pic subfolder
fina_path = os.path.join("存档", today)
product_path = os.path.join(fina_path, "pic")
# Folder for the single-page images
page_path = "page_pic"
# Custom decoding dictionary: maps cid codes pdfminer could not recognize to characters;
# extend it whenever a new unrecognized code is reported
code_str = {"46": "K", "49": "N", "25": "6", "23": "4", "28": "9", "57": "V", "45": "J", "24": "5", "56": "U"}
# Create the folders (no error if they already exist)
os.makedirs(product_path, exist_ok=True)
os.makedirs(page_path, exist_ok=True)
# Save each page as an image
save_page_pic(pdf_path, page_path)
# Extract the text
txt_data = parse_pdf_txt(pdf_path, code_str)
# Optionally save the raw extracted text
# txt_data.to_excel(os.path.join(fina_path, "pdf文字信息.xlsx"), index=False)
pic_name = save_product_pic(txt_data, product_path, page_path)
# Save the image names (a single column, image captions only)
pic_name.to_excel(os.path.join(fina_path, "pdf文字信息.xlsx"), index=False)
```
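Naming files after captions has two practical pitfalls: a caption can contain characters that are illegal in file names (`/` anywhere, and `:` or `?` on Windows), and two products with the same caption would overwrite each other's image, since saving to an existing path replaces the file. A minimal guard as a sketch; `safe_unique_name` is a hypothetical helper, and the forbidden-character set is the Windows one, assumed here because it is the strictest common case:

```python
import re

# Characters Windows does not allow in file names (also covers "/" on POSIX)
FORBIDDEN = re.compile(r'[<>:"/\\|?*\x00-\x1f]')


def safe_unique_name(caption, used, ext=".png"):
    """Turn a caption into a file name that is legal and not yet in `used`."""
    base = FORBIDDEN.sub("_", caption.strip()) or "unnamed"
    name, n = base + ext, 1
    # Append _1, _2, ... until the name is unused
    while name in used:
        name = f"{base}_{n}{ext}"
        n += 1
    used.add(name)
    return name


used = set()
print(safe_unique_name("A/100", used), safe_unique_name("A/100", used), safe_unique_name("A:100?", used))
# A_100.png A_100_1.png A_100_.png
```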