Reposted: Computer Vision Paper with Code, 2023.10.31

Original post: Computer Vision Paper with Code, 2023.10.31

Saved for future reference.

1. [Backbone Architecture] (NeurIPS2023) Fast Trainable Projection for Robust Fine-Tuning

- Paper: https://arxiv.org/pdf/2310.19182
- Code: https://github.com/GT-RIPL/FTP

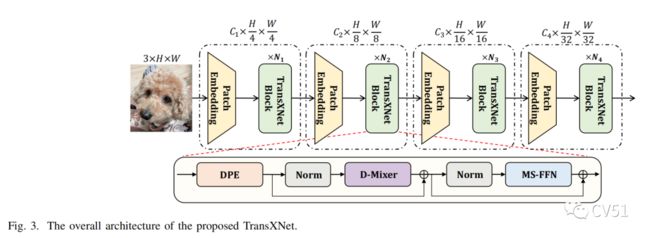

2. [Backbone Architecture: Transformer] TransXNet: Learning Both Global and Local Dynamics with a Dual Dynamic Token Mixer for Visual Recognition

- Paper: https://arxiv.org/pdf/2310.19380
- Code (coming soon): https://github.com/LMMMEng/TransXNet

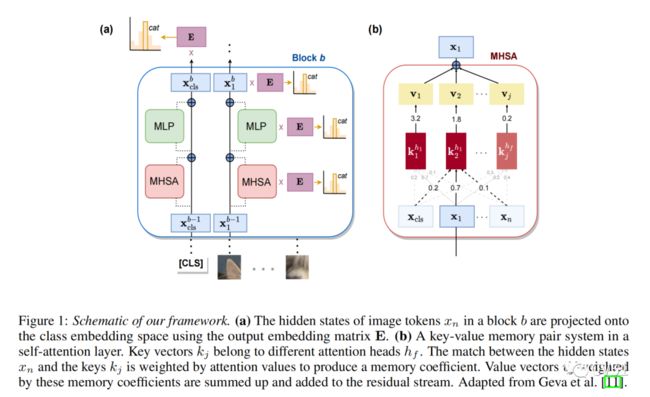

3. [Image Classification] (NeurIPS2023) Analyzing Vision Transformers for Image Classification in Class Embedding Space

- Paper: https://arxiv.org/pdf/2310.18969
- Code: https://github.com/martinagvilas/vit-cls_emb

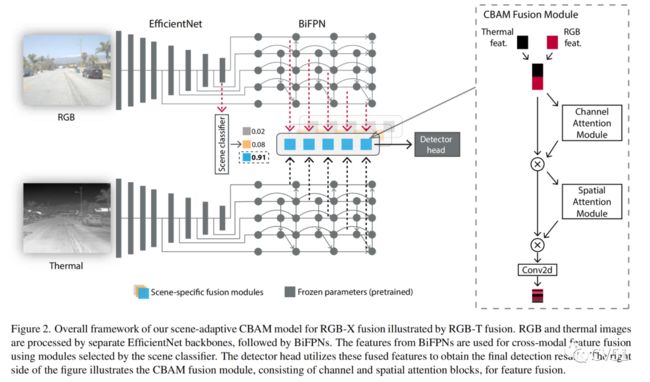

4. [Object Detection] RGB-X Object Detection via Scene-Specific Fusion Modules

- Paper: https://arxiv.org/pdf/2310.19372
- Code: https://github.com/dsriaditya999/RGBXFusion

5. [Object Detection] A High-Resolution Dataset for Instance Detection with Multi-View Instance Capture

- Paper: https://arxiv.org/pdf/2310.19257
- Code: https://github.com/insdet/instance-detection

6. [Object Detection] PrObeD: Proactive Object Detection Wrapper

- Paper: https://arxiv.org/pdf/2310.18788
- Code (coming soon): https://github.com/vishal3477/Proactive-Object-Detection#proactive-object-detection

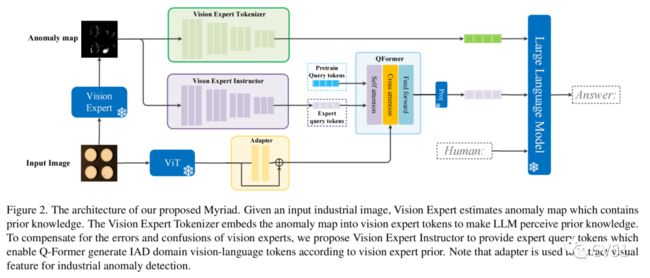

7. [Anomaly Detection] Myriad: Large Multimodal Model by Applying Vision Experts for Industrial Anomaly Detection

- Paper: https://arxiv.org/pdf/2310.19070
- Code (coming soon): https://github.com/tzjtatata/Myriad

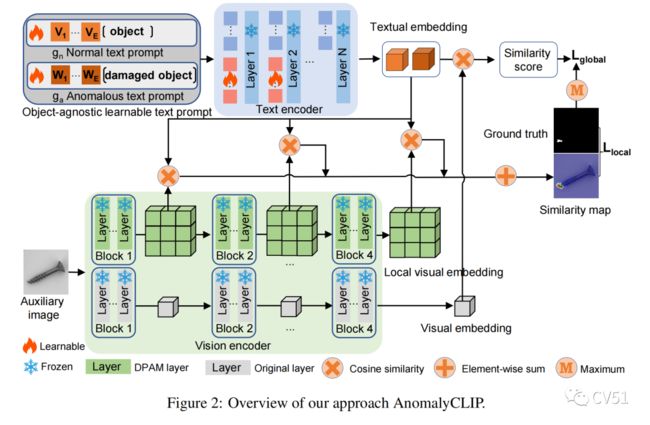

8. [Anomaly Detection] AnomalyCLIP: Object-agnostic Prompt Learning for Zero-shot Anomaly Detection

- Paper: https://arxiv.org/pdf/2310.18961
- Code (coming soon): https://github.com/zqhang/AnomalyCLIP

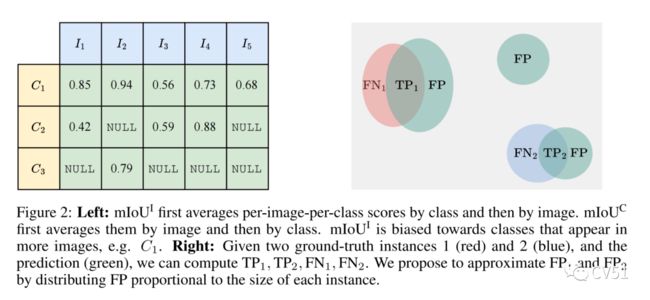

9. [Semantic Segmentation] (NeurIPS2023) Revisiting Evaluation Metrics for Semantic Segmentation: Optimization and Evaluation of Fine-grained Intersection over Union

- Paper: https://arxiv.org/pdf/2310.19252
- Code: https://github.com/zifuwanggg/JDTLosses

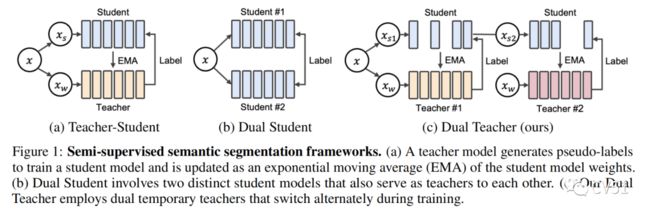

10. [Semantic Segmentation] (NeurIPS2023) Switching Temporary Teachers for Semi-Supervised Semantic Segmentation

- Paper: https://arxiv.org/pdf/2310.18640
- Code (coming soon): https://github.com/naver-ai/dual-teacher

11. [Open-Vocabulary Segmentation] (NeurIPS2023) Uncovering Prototypical Knowledge for Weakly Open-Vocabulary Semantic Segmentation

- Paper: https://arxiv.org/pdf/2310.19001
- Code (coming soon): https://github.com/Ferenas/PGSeg

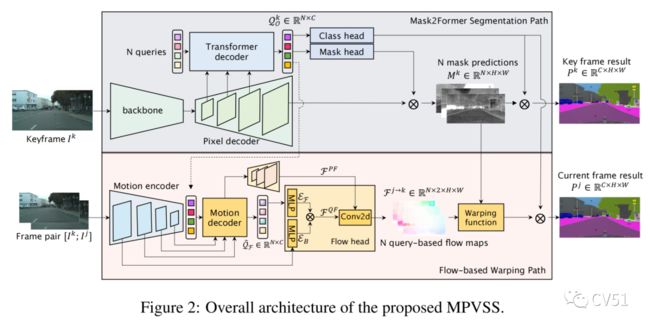

12. [Video Semantic Segmentation] (NeurIPS2023) Mask Propagation for Efficient Video Semantic Segmentation

- Paper: https://arxiv.org/pdf/2310.18954
- Code (coming soon): https://github.com/ziplab/MPVSS

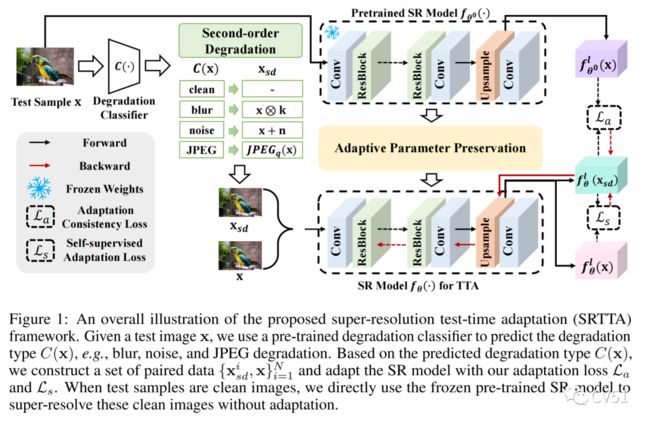

13. [Super-Resolution] (NeurIPS2023) Efficient Test-Time Adaptation for Super-Resolution with Second-Order Degradation and Reconstruction

- Paper: https://arxiv.org/pdf/2310.19011
- Code (coming soon): https://github.com/DengZeshuai/SRTTA

14. [Super-Resolution] EDiffSR: An Efficient Diffusion Probabilistic Model for Remote Sensing Image Super-Resolution

- Paper: https://arxiv.org/pdf/2310.19288
- Code (coming soon): https://github.com/XY-boy/EDiffSR

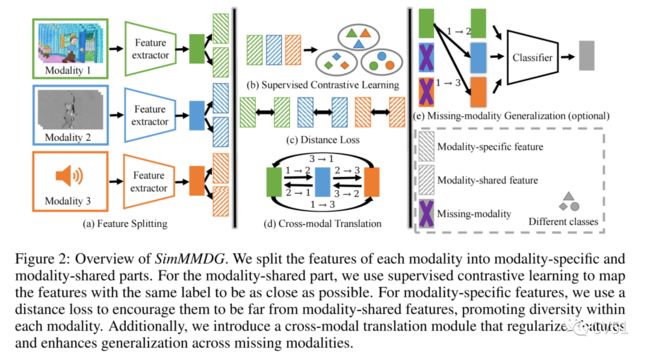

15. [Domain Generalization] (NeurIPS2023) SimMMDG: A Simple and Effective Framework for Multi-modal Domain Generalization

- Paper: https://arxiv.org/pdf/2310.19795
- Code (coming soon): https://github.com/donghao51/SimMMDG

16. [Domain Generalization] (WACV2024) Domain Generalisation via Risk Distribution Matching

- Paper: https://arxiv.org/pdf/2310.18598
- Code: https://github.com/nktoan/risk-distribution-matching

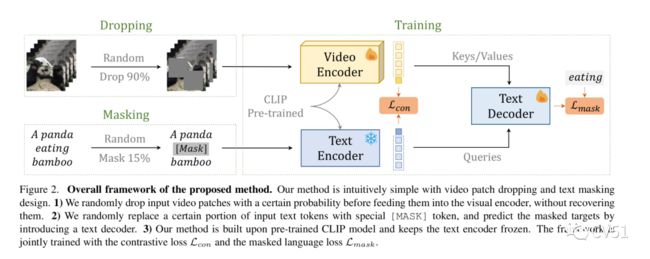

17. [Multimodal] Harvest Video Foundation Models via Efficient Post-Pretraining

- Paper: https://arxiv.org/pdf/2310.19554
- Code: https://github.com/OpenGVLab/InternVideo

18. [Multimodal] IterInv: Iterative Inversion for Pixel-Level T2I Models

- Paper: https://arxiv.org/pdf/2310.19540
- Code (coming soon): https://github.com/Tchuanm/IterInv

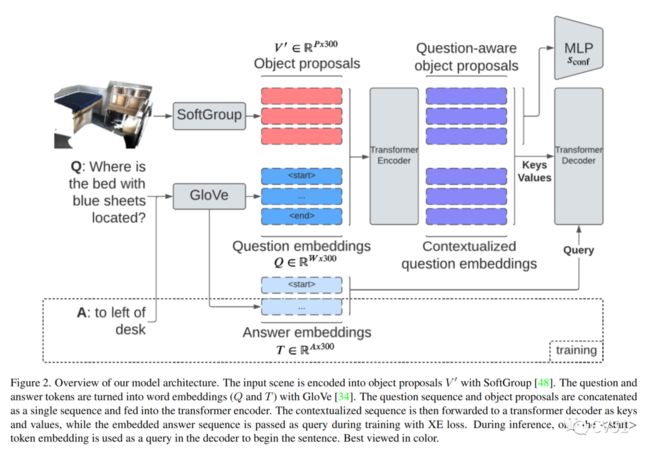

19. [Multimodal] Generating Context-Aware Natural Answers for Questions in 3D Scenes

- Paper: https://arxiv.org/pdf/2310.19516
- Code (coming soon): https://github.com/MunzerDw/Gen3DQA

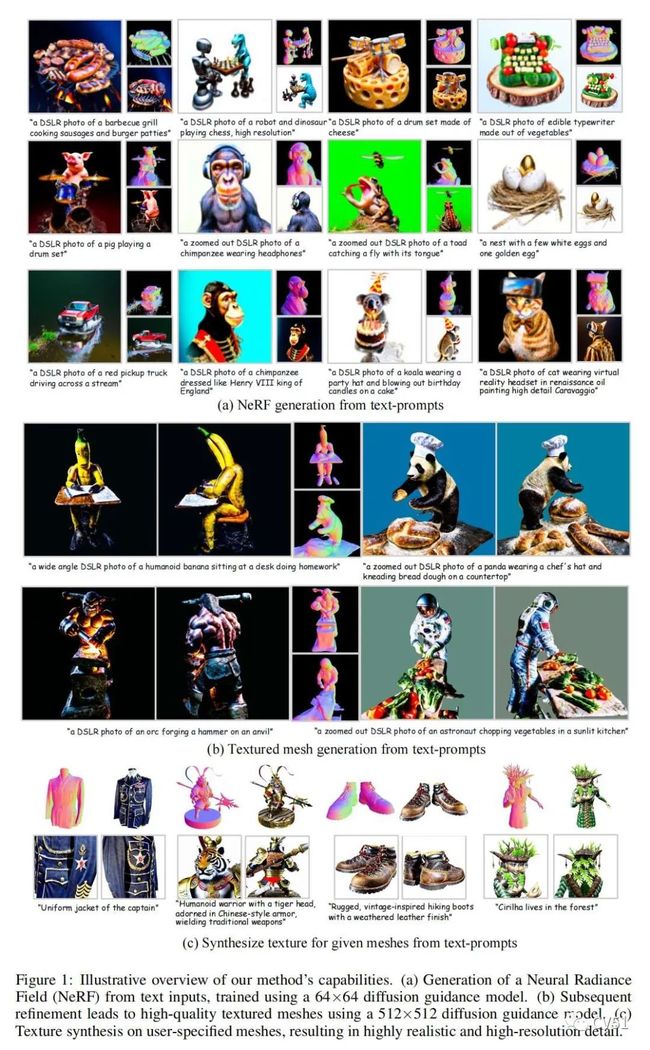

20. [Multimodal] Text-to-3D with Classifier Score Distillation

- Paper: https://arxiv.org/pdf/2310.19415
- Project page: https://xinyu-andy.github.io/Classifier-Score-Distillation/
- Code: coming soon

21. [Multimodal] Dynamic Task and Weight Prioritization Curriculum Learning for Multimodal Imagery

- Paper: https://arxiv.org/pdf/2310.19109
- Code: https://github.com/fualsan/DATWEP

22. [Multimodal] TESTA: Temporal-Spatial Token Aggregation for Long-form Video-Language Understanding

- Paper: https://arxiv.org/pdf/2310.19060
- Code: https://github.com/RenShuhuai-Andy/TESTA

23. [Multimodal] Customizing 360-Degree Panoramas through Text-to-Image Diffusion Models

- Paper: https://arxiv.org/pdf/2310.18840
- Code: https://github.com/littlewhitesea/StitchDiffusion

24. [Multimodal] ROME: Evaluating Pre-trained Vision-Language Models on Reasoning beyond Visual Common Sense

- Paper: https://arxiv.org/pdf/2310.19301
- Code (coming soon): https://github.com/K-Square-00/ROME

25. [Multimodal] Apollo: Zero-shot MultiModal Reasoning with Multiple Experts

- Paper: https://arxiv.org/pdf/2310.18369
- Code: https://github.com/danielabd/Apollo-Cap

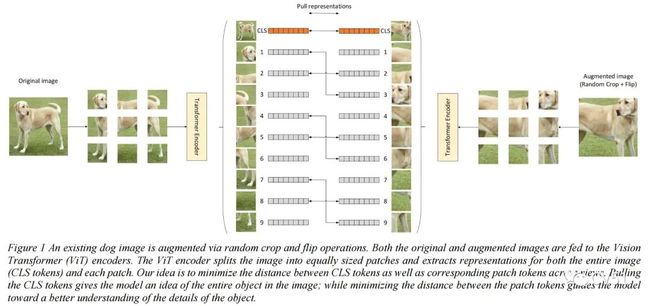

26. [Self-Supervised Learning] Local-Global Self-Supervised Visual Representation Learning

- Paper: https://arxiv.org/pdf/2310.18651
- Code: https://github.com/alijavidani/Local_Global_Representation_Learning

27. [Semi-Supervised Learning] (NeurIPS2023) InstanT: Semi-supervised Learning with Instance-dependent Thresholds

- Paper: https://arxiv.org/pdf/2310.18910
- Code (coming soon): https://github.com/tmllab/2023_NeurIPS_InstanT

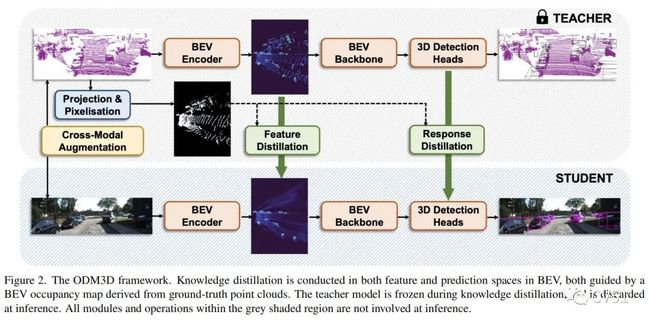

28. [Monocular 3D Object Detection] ODM3D: Alleviating Foreground Sparsity for Enhanced Semi-Supervised Monocular 3D Object Detection

- Paper: https://arxiv.org/pdf/2310.18620
- Code (coming soon): https://github.com/arcaninez/odm3d

29. [Autonomous Driving: Cooperative Perception] Dynamic V2X Autonomous Perception from Road-to-Vehicle Vision

- Paper: https://arxiv.org/pdf/2310.19113
- Code (coming soon): https://github.com/tjy1423317192/AP2VP

30. [Autonomous Driving: Depth Estimation] (NeurIPS2023) Dynamo-Depth: Fixing Unsupervised Depth Estimation for Dynamical Scenes

- Paper: https://arxiv.org/pdf/2310.18887
- Project page: https://dynamo-depth.github.io/
- Code (coming soon): https://github.com/YihongSun/Dynamo-Depth

31. [Image Editing] (EMNLP2023) Learning to Follow Object-Centric Image Editing Instructions Faithfully

- Paper: https://arxiv.org/pdf/2310.19145
- Code: https://github.com/tuhinjubcse/FaithfulEdits_EMNLP2023

32. [Video Generation] VideoCrafter1: Open Diffusion Models for High-Quality Video Generation

- Paper: https://arxiv.org/pdf/2310.19512
- Project page: https://ailab-cvc.github.io/videocrafter/
- Code: https://github.com/AILab-CVC/VideoCrafter

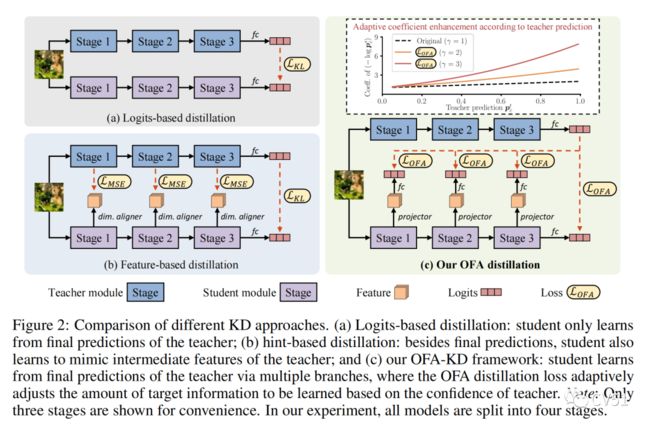

33. [Knowledge Distillation] One-for-All: Bridge the Gap Between Heterogeneous Architectures in Knowledge Distillation

- Paper: https://arxiv.org/pdf/2310.19444
- Code: https://github.com/Hao840/OFAKD

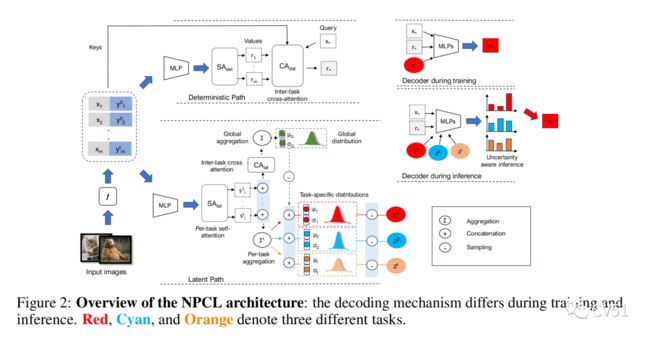

34. [Continual Learning] (NeurIPS2023) NPCL: Neural Processes for Uncertainty-Aware Continual Learning

- Paper: https://arxiv.org/pdf/2310.19272
- Code (coming soon): https://github.com/srvCodes/NPCL
