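Contents

- 1. Kubernetes network types
- 2. Kubernetes interface types
- 3. CNI network plugin: Flannel introduction and deployment
  - 3.1 Overview
  - 3.2 The three Flannel modes
  - 3.3 How Flannel's UDP mode works
  - 3.4 How Flannel's VXLAN mode works
  - 3.5 Deploying the Flannel CNI network plugin
    - 3.5.1 Upload the Flannel image files and plugin package to the node hosts
    - 3.5.2 Deploy the CNI network on master01
    - 3.5.3 Check the cluster node status
- 4. CoreDNS: brief introduction and deployment
  - 4.1 Overview
  - 4.2 Deploying CoreDNS
    - 4.2.1 Load the coredns image on all node hosts
    - 4.2.2 Write the CoreDNS configuration file
    - 4.2.3 Deploy CoreDNS on master01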
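1. Kubernetes network types

![[Cloud Native Case 2.2] Kubernetes deployment and installation [single-master cluster architecture ---- (binary installation and deployment)] network plugin part, image 1](http://img.e-com-net.com/image/info8/5da95c4cd9674c749358ce314b7b6258.jpg)

- Node network: nodeIP, the address of each node host
- Pod network: podIP, the address assigned to each Pod
- Service network: clusterIP, the virtual address of each Service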

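2. Kubernetes interface types

![[Cloud Native Case 2.2] Kubernetes deployment and installation [single-master cluster architecture ---- (binary installation and deployment)] network plugin part, image 2](http://img.e-com-net.com/image/info8/3e386fc6410e422f925208dc22c9a180.jpg)

- CRI: Container Runtime Interface
- CNI: Container Network Interface
- CSI: Container Storage Interface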

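3. CNI network plugin: Flannel introduction and deployment

3.1 Overview

Flannel gives the Docker containers created on different node hosts in the cluster virtual IP addresses that are unique across the whole cluster. Flannel is a kind of overlay network: it encapsulates the original packet inside another network packet, which is then routed and forwarded between hosts.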
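To make the "cluster-wide unique IP" idea concrete, the sketch below does the subnet arithmetic for the 10.244.0.0/16 Pod network that the manifest later in this article configures. It assumes every node is handed a /24 slice of that network, which is a common default but is not stated in this article; `subnet_count` and `hosts_per_subnet` are hypothetical helper names.

```shell
# Subnet arithmetic for an overlay network split into per-node subnets.
# Assumption: the cluster network is a /16 and every node receives a /24.

# Number of per-node subnets that fit in the cluster network.
# $1 = cluster prefix length, $2 = per-node prefix length
subnet_count() {
  echo $(( 1 << ($2 - $1) ))
}

# Host addresses in one per-node subnet,
# excluding the network and broadcast addresses.
# $1 = per-node prefix length
hosts_per_subnet() {
  echo $(( (1 << (32 - $1)) - 2 ))
}

echo "node subnets: $(subnet_count 16 24)"
echo "addresses per node subnet: $(hosts_per_subnet 24)"
```

Because each node draws Pod addresses only from its own slice, two nodes can never hand out the same podIP. One address in each slice is typically taken by the node's own bridge, so slightly fewer are left for Pods.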

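3.2 The three Flannel modes

- UDP: the earliest mode, with the poorest performance; packets are encapsulated and decapsulated by the flanneld application in user space
- VXLAN: the default and recommended mode; it performs better than UDP mode because frames are encapsulated and decapsulated in the kernel, and it is simple to configure and use
- HOST-GW: the best-performing mode, but it is more complex to configure and cannot cross network segments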
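The mode is selected through the `Backend.Type` field of Flannel's `net-conf.json`, the same field that is set to `vxlan` in the manifest used in this article. The sketch below prints that JSON for one of the three modes covered here. `render_net_conf` is a hypothetical helper, and it deliberately rejects any other value, even though Flannel itself has further backends.

```shell
# Print a net-conf.json body for one of the three modes described above.
# $1 = backend type (udp, vxlan or host-gw)
# $2 = Pod network, defaulting to the one used in this article
render_net_conf() (
  backend=$1
  network=${2:-10.244.0.0/16}
  case "$backend" in
    udp|vxlan|host-gw) ;;
    *) echo "not one of the three modes covered here: $backend" >&2
       exit 1 ;;
  esac
  printf '{\n  "Network": "%s",\n  "Backend": {\n    "Type": "%s"\n  }\n}\n' \
    "$network" "$backend"
)

render_net_conf host-gw
```

Switching modes is then a matter of changing this one field in the ConfigMap and restarting the Flannel Pods.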

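3.3 How Flannel's UDP mode works

![[Cloud Native Case 2.2] Kubernetes deployment and installation [single-master cluster architecture ---- (binary installation and deployment)] network plugin part, image 3](http://img.e-com-net.com/image/info8/1c54376409ce4bc2a107b1b32556b13e.jpg)

- The original packet leaves the Pod container on the source host for the cni0 bridge interface, and cni0 forwards it to the flannel0 virtual interface
- The flanneld service process listens for data arriving on flannel0 and encapsulates the original packet in a UDP datagram
- flanneld looks up the nodeIP of the node hosting the target Pod in the routing table maintained in etcd, wraps the UDP datagram in an IP header carrying that nodeIP and then in a MAC header, and sends it through the physical NIC to the target node
- The UDP datagram arrives on port 8285 at the flanneld process on the target node, which decapsulates it; the packet then passes through flannel0 to the cni0 bridge, and cni0 forwards it to the target Pod container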
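The lookup step can be pictured as a small table from per-node Pod subnets to node addresses. The sketch below is a toy model only: the /24 subnet size and the node addresses (taken from the 192.0.2.0/24 documentation range) are made up for illustration, and `lookup_node` and `describe_encap` are hypothetical names, not part of flanneld.

```shell
# Toy model of the UDP-mode lookup: map a destination Pod IP to the
# node that owns its subnet. Assumes /24 per-node subnets; the table
# entries are invented for illustration.
lookup_node() (
  pod_ip=$1
  subnet=${pod_ip%.*}.0/24          # 10.244.1.5 -> 10.244.1.0/24
  case "$subnet" in
    10.244.0.0/24) echo "192.0.2.11" ;;   # hypothetical node01
    10.244.1.0/24) echo "192.0.2.12" ;;   # hypothetical node02
    *) echo "no route to $pod_ip" >&2; exit 1 ;;
  esac
)

# Describe the outer and inner destination for a given target Pod.
describe_encap() (
  node_ip=$(lookup_node "$1") || exit 1
  echo "outer dst=$node_ip udp/8285 | inner dst=$1"
)

describe_encap 10.244.1.5
```

The outer header is what the physical network sees; the inner destination only matters again once the target node has removed the encapsulation.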

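3.4 How Flannel's VXLAN mode works

![[Cloud Native Case 2.2] Kubernetes deployment and installation [single-master cluster architecture ---- (binary installation and deployment)] network plugin part, image 4](http://img.e-com-net.com/image/info8/427327461060477993df1ec6e5916051.jpg)

- The original frame leaves the Pod container on the source host for the cni0 bridge interface, and cni0 forwards it to the flannel.1 virtual interface
- On receiving the frame, flannel.1 adds a VXLAN header, and the kernel encapsulates the original frame in a UDP datagram
- Using the routing table that flanneld maintains in etcd, the UDP datagram is sent through the physical NIC to the target node
- The UDP datagram arrives on port 8472 at the flannel.1 interface on the target node and is decapsulated in the kernel; the frame then passes through flannel.1 to the cni0 bridge, and cni0 forwards it to the target Pod container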
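One practical consequence of the two encapsulation schemes is the MTU left for Pod traffic. UDP mode wraps an IP packet, while VXLAN mode wraps a whole Ethernet frame plus a VXLAN header, so VXLAN costs more bytes per packet. The arithmetic below is a sketch that assumes an IPv4 underlay and a 1500-byte physical MTU; the function names are hypothetical.

```shell
# Encapsulation overhead in bytes (IPv4 underlay assumed).
OUTER_IP=20      # outer IPv4 header
OUTER_UDP=8      # outer UDP header
VXLAN_HDR=8      # VXLAN header
INNER_ETH=14     # inner Ethernet header carried inside VXLAN

# UDP mode carries the inner IP packet: outer IP + UDP only.
# $1 = MTU of the physical NIC
udp_mode_mtu() {
  echo $(( $1 - OUTER_IP - OUTER_UDP ))
}

# VXLAN mode carries the whole inner Ethernet frame.
# $1 = MTU of the physical NIC
vxlan_mode_mtu() {
  echo $(( $1 - OUTER_IP - OUTER_UDP - VXLAN_HDR - INNER_ETH ))
}

echo "UDP mode MTU:   $(udp_mode_mtu 1500)"
echo "VXLAN mode MTU: $(vxlan_mode_mtu 1500)"
```

On a 1500-byte network these come out to the values usually seen on the flannel0 and flannel.1 interfaces respectively.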

3.5 Flannel CNI 网络插件部署

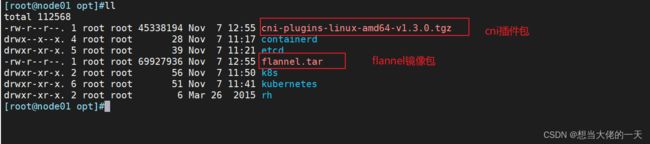

3.5.1 上传flannel镜像文件和插件包到node节点

node01节点

cd /opt/

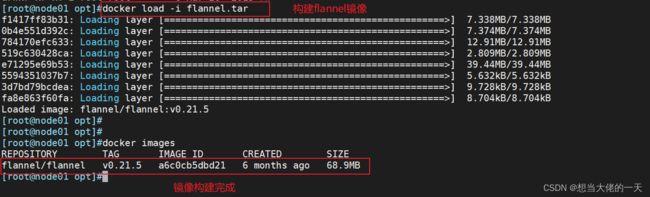

docker load -i flannel.tar

docker load -i flannel-cni-plugin.tar

![[云原生案例2.2 ] Kubernetes的部署安装 【单master集群架构 ---- (二进制安装部署)】网络插件部分_第5张图片](http://img.e-com-net.com/image/info8/8ebcaf8911ca48eebfab509a2985a975.jpg)

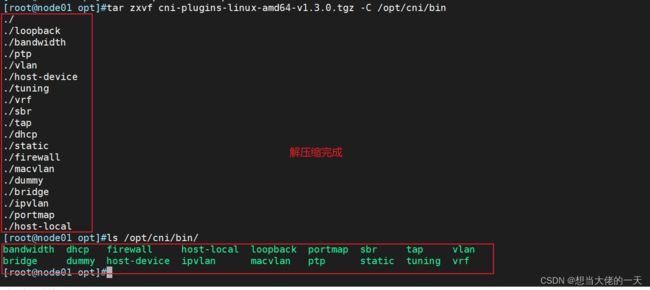

mkdir /opt/cni/bin -p

tar zxvf cni-plugins-linux-amd64-v1.3.0.tgz -C /opt/cni/bin

scp *.tar *.tgz node02:`pwd`

![[云原生案例2.2 ] Kubernetes的部署安装 【单master集群架构 ---- (二进制安装部署)】网络插件部分_第6张图片](http://img.e-com-net.com/image/info8/5ea0a6d8bd114f878e3164aee6870064.jpg)

node02

for i in `ls *.tar`

> do

> docker load -i $i

> done

![[云原生案例2.2 ] Kubernetes的部署安装 【单master集群架构 ---- (二进制安装部署)】网络插件部分_第7张图片](http://img.e-com-net.com/image/info8/8c465212dfda481ba9d5d161efe1e020.jpg)

mkdir /opt/cni/bin -p

tar zxvf cni-plugins-linux-amd64-v1.3.0.tgz -C /opt/cni/bin

![[云原生案例2.2 ] Kubernetes的部署安装 【单master集群架构 ---- (二进制安装部署)】网络插件部分_第8张图片](http://img.e-com-net.com/image/info8/71d8a8f977334e0daf7a5fca877ae12f.jpg)
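After unpacking, it can be worth confirming on each node that the plugin binaries really landed in /opt/cni/bin. The sketch below checks for a handful of them. The list (bridge, host-local, loopback, portmap) is an assumption about which plugins this setup relies on, and `check_cni_plugins` is a hypothetical helper name.

```shell
# Report CNI plugin binaries that are missing or not executable.
# $1 = plugin directory (defaults to the path used in this article)
# Returns 0 when every listed plugin is present, 1 otherwise.
check_cni_plugins() (
  dir=${1:-/opt/cni/bin}
  status=0
  # Assumed set of plugins needed by this setup.
  for plugin in bridge host-local loopback portmap; do
    if [ ! -x "$dir/$plugin" ]; then
      echo "missing: $plugin"
      status=1
    fi
  done
  exit "$status"
)
```

A typical call on a node would be `check_cni_plugins /opt/cni/bin && echo "CNI plugins OK"`. The `flannel` binary itself is not in the list because the manifest's init container copies it into the same directory later.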

3.5.2 Deploy the CNI network on master01

    cd /opt/k8s
    vim kube-flannel.yml

The content of kube-flannel.yml is as follows:

    apiVersion: v1
    kind: Namespace
    metadata:
      labels:
        k8s-app: flannel
        pod-security.kubernetes.io/enforce: privileged
      name: kube-flannel
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      labels:
        k8s-app: flannel
      name: flannel
      namespace: kube-flannel
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        k8s-app: flannel
      name: flannel
    rules:
    - apiGroups:
      - ""
      resources:
      - pods
      verbs:
      - get
    - apiGroups:
      - ""
      resources:
      - nodes
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - nodes/status
      verbs:
      - patch
    - apiGroups:
      - networking.k8s.io
      resources:
      - clustercidrs
      verbs:
      - list
      - watch
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      labels:
        k8s-app: flannel
      name: flannel
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: flannel
    subjects:
    - kind: ServiceAccount
      name: flannel
      namespace: kube-flannel
    ---
    apiVersion: v1
    data:
      cni-conf.json: |
        {
          "name": "cbr0",
          "cniVersion": "0.3.1",
          "plugins": [
            {
              "type": "flannel",
              "delegate": {
                "hairpinMode": true,
                "isDefaultGateway": true
              }
            },
            {
              "type": "portmap",
              "capabilities": {
                "portMappings": true
              }
            }
          ]
        }
      net-conf.json: |
        {
          "Network": "10.244.0.0/16",
          "Backend": {
            "Type": "vxlan"
          }
        }
    kind: ConfigMap
    metadata:
      labels:
        app: flannel
        k8s-app: flannel
        tier: node
      name: kube-flannel-cfg
      namespace: kube-flannel
    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      labels:
        app: flannel
        k8s-app: flannel
        tier: node
      name: kube-flannel-ds
      namespace: kube-flannel
    spec:
      selector:
        matchLabels:
          app: flannel
          k8s-app: flannel
      template:
        metadata:
          labels:
            app: flannel
            k8s-app: flannel
            tier: node
        spec:
          affinity:
            nodeAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                nodeSelectorTerms:
                - matchExpressions:
                  - key: kubernetes.io/os
                    operator: In
                    values:
                    - linux
          containers:
          - args:
            - --ip-masq
            - --kube-subnet-mgr
            command:
            - /opt/bin/flanneld
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: EVENT_QUEUE_DEPTH
              value: "5000"
            image: docker.io/flannel/flannel:v0.21.5
            name: kube-flannel
            resources:
              requests:
                cpu: 100m
                memory: 50Mi
            securityContext:
              capabilities:
                add:
                - NET_ADMIN
                - NET_RAW
              privileged: false
            volumeMounts:
            - mountPath: /run/flannel
              name: run
            - mountPath: /etc/kube-flannel/
              name: flannel-cfg
            - mountPath: /run/xtables.lock
              name: xtables-lock
          hostNetwork: true
          initContainers:
          - args:
            - -f
            - /flannel
            - /opt/cni/bin/flannel
            command:
            - cp
            image: docker.io/flannel/flannel-cni-plugin:v1.1.2
            name: install-cni-plugin
            volumeMounts:
            - mountPath: /opt/cni/bin
              name: cni-plugin
          - args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
            command:
            - cp
            image: docker.io/flannel/flannel:v0.21.5
            name: install-cni
            volumeMounts:
            - mountPath: /etc/cni/net.d
              name: cni
            - mountPath: /etc/kube-flannel/
              name: flannel-cfg
          priorityClassName: system-node-critical
          serviceAccountName: flannel
          tolerations:
          - effect: NoSchedule
            operator: Exists
          volumes:
          - hostPath:
              path: /run/flannel
            name: run
          - hostPath:
              path: /opt/cni/bin
            name: cni-plugin
          - hostPath:
              path: /etc/cni/net.d
            name: cni
          - configMap:
              name: kube-flannel-cfg
            name: flannel-cfg
          - hostPath:
              path: /run/xtables.lock
              type: FileOrCreate
            name: xtables-lock

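Save the file, then apply the manifest:

    kubectl apply -f kube-flannel.yml

![[Cloud Native Case 2.2] Kubernetes deployment and installation [single-master cluster architecture ---- (binary installation and deployment)] network plugin part, image 9](http://img.e-com-net.com/image/info8/dca6982ce8c74641b35f1b07aec9f4bf.jpg)

Applying this YAML file creates the resource objects that the Flannel network plugin needs. You can use `kubectl get` to view the status and details of these resources:

    kubectl get pods -n kube-flannel

or

    kubectl get pods -A

![[Cloud Native Case 2.2] Kubernetes deployment and installation [single-master cluster architecture ---- (binary installation and deployment)] network plugin part, image 10](http://img.e-com-net.com/image/info8/6e98e35754e5431bba4658f97eb102c4.jpg)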
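Instead of re-running `kubectl get pods` until everything is Running, the rollout of the DaemonSet can be waited on directly. The sketch below wraps `kubectl rollout status` for the `kube-flannel-ds` DaemonSet in the `kube-flannel` namespace, both taken from the manifest above. The function names and the 120-second default timeout are assumptions.

```shell
# Block until the Flannel DaemonSet has rolled out on every node,
# or until the timeout expires.
# $1 = timeout (optional, defaults to 120s)
wait_for_flannel() {
  kubectl rollout status daemonset/kube-flannel-ds \
    -n kube-flannel --timeout="${1:-120s}"
}

# Wait for the rollout, then show which node each Flannel Pod runs on.
flannel_status() {
  wait_for_flannel "$@" && kubectl get pods -n kube-flannel -o wide
}
```

Calling `flannel_status 60s` on master01 would then either print the Pod list or fail with the rollout error, which is easier to script than polling by hand.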

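If you need to remove the Flannel resources again (for example, before redeploying), delete them with the same manifest:

    kubectl delete -f kube-flannel.yml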

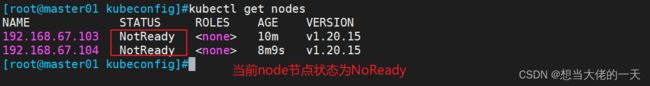

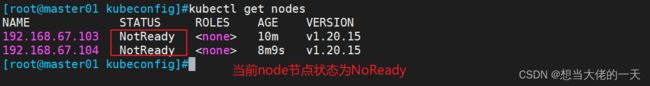

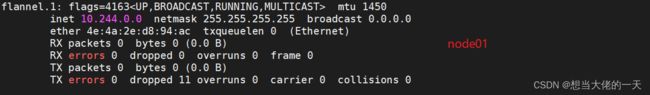

3.5.3 查看集群的节点状态

kubectl get nodes

![[云原生案例2.2 ] Kubernetes的部署安装 【单master集群架构 ---- (二进制安装部署)】网络插件部分_第11张图片](http://img.e-com-net.com/image/info8/3d721392fa7944e980657a604b16c395.jpg)

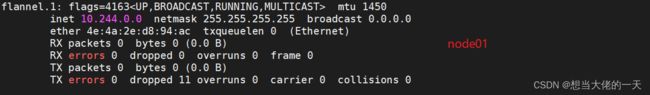

ifconfig
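When there are more than a couple of nodes, scanning the `kubectl get nodes` table by eye gets tedious. The sketch below filters the same output down to the nodes whose STATUS column is anything other than `Ready`. `not_ready_nodes` is a hypothetical helper; note that it also reports cordoned nodes, whose status reads `Ready,SchedulingDisabled`.

```shell
# Print the names of nodes whose STATUS is not exactly "Ready".
# Relies on the default column layout of `kubectl get nodes`:
# NAME STATUS ROLES AGE VERSION
not_ready_nodes() {
  kubectl get nodes --no-headers | awk '$2 != "Ready" { print $1 }'
}
```

An empty result from `not_ready_nodes` means every node has picked up the CNI configuration and reports Ready.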

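4. CoreDNS: brief introduction and deployment

4.1 Overview

CoreDNS is the default DNS implementation for K8S:

- It resolves a Service resource name to the corresponding clusterIP
- It resolves the name of a Pod created by a StatefulSet controller to the corresponding podIP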
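The names being resolved follow a fixed pattern under the cluster domain, which is `cluster.local` in the Corefile used in this article. The sketch below builds the fully qualified names for both cases. The helper names are hypothetical, and `web-0` and `nginx` are made-up examples of a StatefulSet Pod and its headless Service.

```shell
# Cluster domain; cluster.local matches the Corefile in this article.
CLUSTER_DOMAIN=${CLUSTER_DOMAIN:-cluster.local}

# FQDN of a Service, which resolves to its clusterIP.
# $1 = service name, $2 = namespace
service_fqdn() {
  echo "$1.$2.svc.$CLUSTER_DOMAIN"
}

# FQDN of a StatefulSet Pod, which resolves to its podIP.
# $1 = pod name, $2 = governing headless service, $3 = namespace
statefulset_pod_fqdn() {
  echo "$1.$2.$3.svc.$CLUSTER_DOMAIN"
}

service_fqdn kubernetes default
statefulset_pod_fqdn web-0 nginx default
```

Inside a Pod the short forms usually work as well, because the Pod's resolver search path appends the namespace and cluster domain.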

4.2 CoreDNS的部署

4.2.1 构建coredns镜像 ---- 所有node节点

cd /opt

docker load -i coredns.tar

![[云原生案例2.2 ] Kubernetes的部署安装 【单master集群架构 ---- (二进制安装部署)】网络插件部分_第12张图片](http://img.e-com-net.com/image/info8/9fe0945fb6094fa7b9c761cd796a0a36.jpg)

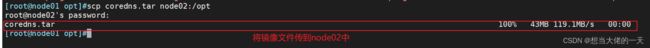

scp coredns.tar node02:/opt

cd /opt

docker load -i coredns.tar

4.2.2 编写CoreDNS配置文件

vim coredns.yaml

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: coredns
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        kubernetes.io/bootstrapping: rbac-defaults
        addonmanager.kubernetes.io/mode: Reconcile
      name: system:coredns
    rules:
    - apiGroups:
      - ""
      resources:
      - endpoints
      - services
      - pods
      - namespaces
      verbs:
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - nodes
      verbs:
      - get
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      annotations:
        rbac.authorization.kubernetes.io/autoupdate: "true"
      labels:
        kubernetes.io/bootstrapping: rbac-defaults
        addonmanager.kubernetes.io/mode: EnsureExists
      name: system:coredns
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:coredns
    subjects:
    - kind: ServiceAccount
      name: coredns
      namespace: kube-system
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: coredns
      namespace: kube-system
      labels:
        addonmanager.kubernetes.io/mode: EnsureExists
    data:
      Corefile: |
        .:53 {
            errors
            health {
                lameduck 5s
            }
            ready
            kubernetes cluster.local in-addr.arpa ip6.arpa {
                pods insecure
                fallthrough in-addr.arpa ip6.arpa
                ttl 30
            }
            prometheus :9153
            forward . /etc/resolv.conf {
                max_concurrent 1000
            }
            cache 30
            loop
            reload
            loadbalance
        }
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: coredns
      namespace: kube-system
      labels:
        k8s-app: kube-dns
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/name: "CoreDNS"
    spec:
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxUnavailable: 1
      selector:
        matchLabels:
          k8s-app: kube-dns
      template:
        metadata:
          labels:
            k8s-app: kube-dns
        spec:
          securityContext:
            seccompProfile:
              type: RuntimeDefault
          priorityClassName: system-cluster-critical
          serviceAccountName: coredns
          affinity:
            podAntiAffinity:
              preferredDuringSchedulingIgnoredDuringExecution:
              - weight: 100
                podAffinityTerm:
                  labelSelector:
                    matchExpressions:
                    - key: k8s-app
                      operator: In
                      values: ["kube-dns"]
                  topologyKey: kubernetes.io/hostname
          tolerations:
          - key: "CriticalAddonsOnly"
            operator: "Exists"
          nodeSelector:
            kubernetes.io/os: linux
          containers:
          - name: coredns
            image: registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.7.0
            imagePullPolicy: IfNotPresent
            resources:
              limits:
                memory: 170Mi
              requests:
                cpu: 100m
                memory: 70Mi
            args: [ "-conf", "/etc/coredns/Corefile" ]
            volumeMounts:
            - name: config-volume
              mountPath: /etc/coredns
              readOnly: true
            ports:
            - containerPort: 53
              name: dns
              protocol: UDP
            - containerPort: 53
              name: dns-tcp
              protocol: TCP
            - containerPort: 9153
              name: metrics
              protocol: TCP
            livenessProbe:
              httpGet:
                path: /health
                port: 8080
                scheme: HTTP
              initialDelaySeconds: 60
              timeoutSeconds: 5
              successThreshold: 1
              failureThreshold: 5
            readinessProbe:
              httpGet:
                path: /ready
                port: 8181
                scheme: HTTP
            securityContext:
              allowPrivilegeEscalation: false
              capabilities:
                add:
                - NET_BIND_SERVICE
                drop:
                - all
              readOnlyRootFilesystem: true
          dnsPolicy: Default
          volumes:
          - name: config-volume
            configMap:
              name: coredns
              items:
              - key: Corefile
                path: Corefile
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: kube-dns
      namespace: kube-system
      annotations:
        prometheus.io/port: "9153"
        prometheus.io/scrape: "true"
      labels:
        k8s-app: kube-dns
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/name: "CoreDNS"
    spec:
      selector:
        k8s-app: kube-dns
      clusterIP: 10.0.0.2
      ports:
      - name: dns
        port: 53
        protocol: UDP
      - name: dns-tcp
        port: 53
        protocol: TCP
      - name: metrics
        port: 9153
        protocol: TCP

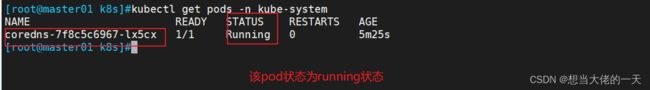

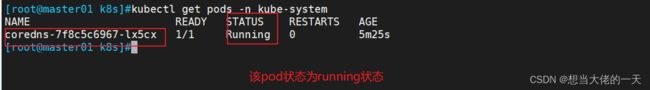
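The `clusterIP: 10.0.0.2` set on the kube-dns Service has to fall inside the Service network range configured for the cluster, and it should be the DNS address that kubelet hands to Pods. The sketch below checks the first condition with plain integer arithmetic. The range 10.0.0.0/24 is an assumed Service network, not a value stated in this article, and `ip_to_int` and `in_cidr` are hypothetical helpers.

```shell
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() (
  IFS=.
  set -- $1                       # split the address into four octets
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# Succeed if an address lies inside a CIDR range.
# $1 = IPv4 address, $2 = range in a.b.c.d/len form
in_cidr() (
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  bits=${2#*/}
  mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
  [ $(( ip & mask )) -eq $(( net & mask )) ]
)

# 10.0.0.0/24 is an assumed Service range; substitute the real one.
if in_cidr 10.0.0.2 10.0.0.0/24; then
  echo "10.0.0.2 is inside 10.0.0.0/24"
fi
```

If the check fails for the real Service range, the API server will reject the Service, so it is cheaper to catch the mismatch before applying the file.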

4.2.3 Deploy CoreDNS on master01

    cd /opt/k8s
    kubectl apply -f coredns.yaml
    kubectl get pods -n kube-system

Start a temporary busybox Pod to test DNS resolution from inside the cluster:

    kubectl run -it --rm dns-test --image=busybox:1.28.4 sh
    If you don't see a command prompt, try pressing enter.
    / #

![[Cloud Native Case 2.2] Kubernetes deployment and installation [single-master cluster architecture ---- (binary installation and deployment)] network plugin part, image 13](http://img.e-com-net.com/image/info8/ac1681cc63624e788dd8fcf1f5b77aca.jpg)
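The same check can be run without an interactive shell. The sketch below starts a throwaway busybox Pod, performs one lookup and removes the Pod again. `dns_lookup_in_cluster` is a hypothetical helper, and `kubernetes` (the API server's Service in the default namespace) is used here as an assumed default name to resolve.

```shell
# Resolve a name from inside the cluster using a temporary busybox Pod.
# $1 = name to look up (optional, defaults to the kubernetes Service)
dns_lookup_in_cluster() {
  kubectl run dns-test --rm -i --restart=Never \
    --image=busybox:1.28.4 -- nslookup "${1:-kubernetes}"
}
```

If CoreDNS is working, `dns_lookup_in_cluster` should report the kube-dns Service address as the server and return the clusterIP of the queried Service.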
