Flink的并行度和Kafka的partition的结合

flink kafka实现反序列化:

package Flink_Kafka;

import com.alibaba.fastjson.JSON;

import org.apache.flink.api.common.serialization.DeserializationSchema;

import org.apache.flink.api.common.typeinfo.TypeInformation;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer010;

import org.apache.flink.streaming.util.serialization.SimpleStringSchema;

import java.io.IOException;

import java.util.Properties;

//kafka的反序列化

public class KafkaSimpleSche {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

Properties consumerProperties = new Properties();

//设置服务

consumerProperties.setProperty("bootstrap.servers","s101:9092");

//设置消费者组

consumerProperties.setProperty("group.id","con56");

//自动提交偏移量

consumerProperties.setProperty("enable.auto.commit","true");

consumerProperties.setProperty("auto.commit.interval.ms","2000");

//SimpleStringSchema()这是系统提供了一个kafka反序列化,我们也可以自定义一个反序列化类

// DataStream dataStreamSource = env.addSource(new FlinkKafkaConsumer010<>("browse_topic",new SimpleStringSchema(),consumerProperties));

DataStreamSource browse_topic = env.addSource(new FlinkKafkaConsumer010<>("browse_topic", new BrowseLogDeserializationSchema(), consumerProperties));

browse_topic.print();

env.execute("FlinkKafkaConsumer");

}

//自定义一个kafka的反序列化类

public static class BrowseLogDeserializationSchema implements DeserializationSchema {

@Override

public BrowseLog deserialize(byte[] bytes) throws IOException {

return JSON.parseObject(new String(bytes),BrowseLog.class);

}

@Override

public boolean isEndOfStream(BrowseLog browseLogDeserializationSchema) {

return false;

}

@Override

public TypeInformation getProducedType() {

return TypeInformation.of(BrowseLog.class);

}

}

public static class BrowseLog{

private String userID;

private String eventTime;

private int productPrice;

public BrowseLog(){

}

public BrowseLog(String userID, String eventTime, int productPrice) {

this.userID = userID;

this.eventTime = eventTime;

this.productPrice = productPrice;

}

public String getUserID() {

return userID;

}

public void setUserID(String userID) {

this.userID = userID;

}

public String getEventTime() {

return eventTime;

}

public void setEventTime(String eventTime) {

this.eventTime = eventTime;

}

public int getProductPrice() {

return productPrice;

}

public void setProductPrice(int productPrice) {

this.productPrice = productPrice;

}

@Override

public String toString() {

return "BrowseLog{" +

"userID='" + userID + '\'' +

", eventTime='" + eventTime + '\'' +

", productPrice=" + productPrice +

'}';

}

}

}

Flink的kafka写一个消费者consumer:

package Flink_Kafka;

import com.alibaba.fastjson.JSON;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.common.serialization.DeserializationSchema;

import org.apache.flink.api.common.typeinfo.TypeInformation;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.ProcessFunction;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer010;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaProducer010;

import org.apache.flink.streaming.util.serialization.SimpleStringSchema;

import org.apache.flink.util.Collector;

import java.util.Properties;

public class KafkaConsumer {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

Properties consumerProperties = new Properties();

//设置服务

consumerProperties.setProperty("bootstrap.servers","s101:9092");

//设置消费者组

consumerProperties.setProperty("group.id","con56");

//自动提交偏移量

consumerProperties.setProperty("enable.auto.commit","true");

consumerProperties.setProperty("auto.commit.interval.ms","2000");

//SimpleStringSchema()这是系统提供了一个kafka反序列化,我们也可以自定义一个反序列化类

FlinkKafkaConsumer010 consumer10 = new FlinkKafkaConsumer010<>("browse_topic",new SimpleStringSchema(),consumerProperties);

// consumer10.setStartFromLatest();

//获取数据

consumer10.setStartFromEarliest();

DataStreamSource dataStreamSource = env.addSource(consumer10).setParallelism(6);

DataStream process = dataStreamSource.process(new ProcessFunction() {

@Override

public void processElement(String s, Context context, Collector collector) throws Exception {

try{

BrowseLog browseLog = JSON.parseObject(s,BrowseLog.class);

if(browseLog !=null){

collector.collect(browseLog);

}

}catch (Exception e){

System.out.print("解析Json异常,异常信息是:"+e.getMessage());

}

}

}).setParallelism(10);

DataStream map = process.map(new MapFunction() {

@Override

public String map(BrowseLog browseLog) throws Exception {

String jsonString=JSON.toJSONString(browseLog);

return jsonString;

}

});

Properties producerProperties = new Properties();

producerProperties.setProperty("bootstrap.servers","s101:9092");

map.addSink(new FlinkKafkaProducer010("topic_flinktest",new SimpleStringSchema(),producerProperties));

env.execute("KafkaConsumer");

}

public static class BrowseLog{

private String userID;

private String eventTime;

private int productPrice;

public BrowseLog(){

}

public BrowseLog(String userID, String eventTime, int productPrice) {

this.userID = userID;

this.eventTime = eventTime;

this.productPrice = productPrice;

}

public String getUserID() {

return userID;

}

public void setUserID(String userID) {

this.userID = userID;

}

public String getEventTime() {

return eventTime;

}

public void setEventTime(String eventTime) {

this.eventTime = eventTime;

}

public int getProductPrice() {

return productPrice;

}

public void setProductPrice(int productPrice) {

this.productPrice = productPrice;

}

@Override

public String toString() {

return "BrowseLog{" +

"userID='" + userID + '\'' +

", eventTime='" + eventTime + '\'' +

", productPrice=" + productPrice +

'}';

}

}

}

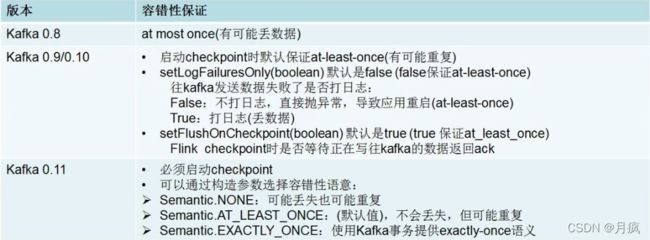

Kafka的容错性机制:

上面的程序当中,我们可以实现将数据写入到Kafka当中,但是不支持exactly-once语义,因此不能保证数据的绝对安全性,在分析Kafka Producer的容错性时,

需要根据Kafka的不同版本来进行分析。

1、Kafka 0.8版本

只能保证 at most once:因为只能保证最多一次,所以可能会造成数据丢失;

2、Kafka0.9版本or0.10版本

如果Flink开启了CheckPoint机制,默认可以保证at least once语义,即至少消费一次,但是发送的数据有可能造成重复,虽然可以保证at least once语义,

但是需要开启2个参数(不开也行,默认就是响应的作用):myProducer.setLogFailuresOnly(false):默认该值就是false,表示当producer向kafka发送数据失败是,是否需要打印日志。

false:不打印日志,直接抛出异常,导致应用重启,从而实现at least once语义;

true:发送数据失败的时候,打印日志(数据丢失),不能实现at least once

myProducer.setFlushOnCheckpoint(true):默认值是true,true可以保证 at least once

true:当kafka写数据的时候,只有返回ack,Flink才会执行checkpoint

false:不需要等待返回ack,Flink就会执行checkpoint

因为myProduce.setLogFailuresOnly(false)这个参数的缘故,建议修改Kafka生产者的重试次数,retries这个参数默认是0,建议修改为3

3、kafka 0.11版本

在Kafka011版本当中,当Flink开启了Checkpoint机制,则针对FlinkKafkaProducer011就可以提供exactly-once语义

在具体使用的时候,可以选择具体语义,支持以下三项:

semantic.NONE:可能丢失重复

semantic.AT_LEAST_ONCE:(默认值),不会丢失,但可能重复

semantic.EXACTLY_ONCE:使用Kafka事物提供exactly-once语义