基于轻量级卷积神经网络CNN开发构建打架斗殴识别分析系统

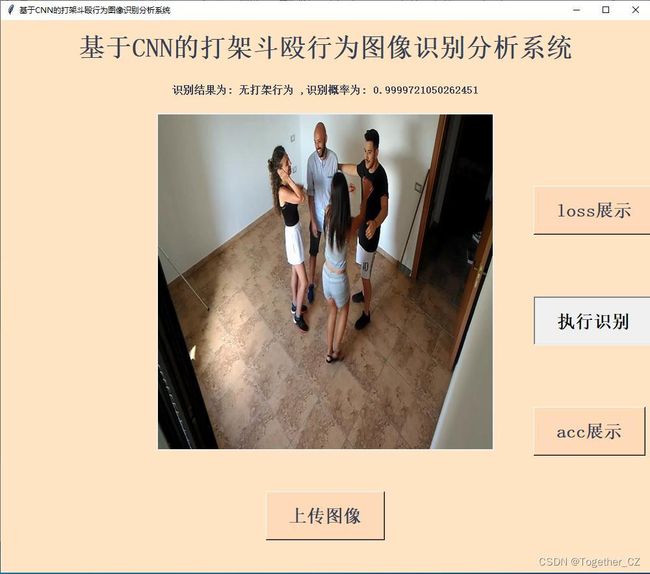

在很多公共场合中,因为一些不可控因素导致最终爆发打架斗殴或者大规则冲突事件的案例层出不穷,基于视频监控等技术手段智能自动化地识别出已有或者潜在的危险行为对于维护公共场合的安全稳定有着重要的意义。本文的核心目的就是想要基于CNN模型来尝试开发构建公众场景下的打架斗殴行为识别系统,这里我们从互联网中采集了相当批量的聚众数据,首先看下效果:

接下来看下数据集:

【有打架斗殴】

【无打架斗殴】

数据主要来源于互联网数据采集和后期的人工处理标注。

可以借助我编写的数据集随机划分函数,便捷地生成训练集-测试集,如下所示:

def randomSplit(dataDir="data/",train_ratio=0.80):

"""

数据集随机划分

"""

if not os.path.exists("labels.json") or not os.path.exists("dataset.json"):

pic_list=[]

labels_list = os.listdir(dataDir)

labels_list.sort()

print("labels_list: ", labels_list)

with open("labels.json", "w") as f:

f.write(json.dumps(labels_list))

for one_label in os.listdir(dataDir):

oneDir=dataDir+one_label+"/"

one_list=[oneDir+one for one in os.listdir(oneDir)]

pic_list+=one_list

length=len(pic_list)

print("length: ", length)

train_num=int(length*train_ratio)

test_num=length-train_num

print("train_num: ", train_num, ", test_num: ", test_num)

train_list=random.sample(pic_list, train_num)

test_list=[one for one in pic_list if one not in train_list]

dataset={}

dataset["train"]=train_list

dataset["test"]=test_list

with open("dataset.json","w") as f:

f.write(json.dumps(dataset))

else:

print("Not Need To SplitData Again!!!!!")接下来需要加载本地图像数据来解析创建可直接用于后续模型训练计算的数据集,核心实现如下所示:

def buildDataset():

"""

加载本地数据集创建数据集

"""

X_train, y_train = [], []

X_test, y_test = [], []

train_list=dataset["train"]

test_list=dataset["test"]

picDir = "data/train/"

#训练集

for one_path in train_list:

try:

print("one_path: ", one_path)

one_img = parse4Img(one_path)

one_y = parse4Label(one_pic_classes,labels_list)

X_train.append(one_img)

y_train.append(one_y)

except Exception as e:

print("train Exception: ", e)

X_train = np.array(X_train)

#测试集

for one_path in test_list:

try:

print("one_path: ", one_path)

one_img = parse4Img(one_path)

one_y = parse4Label(one_pic_classes,labels_list)

X_test.append(one_img)

y_test.append(one_y)

except Exception as e:

print("test Exception: ", e)

X_test = np.array(X_test)完成数据集的解析构建之后就可以进行模型的开发训练工作了。

这部分可以参考我前面的博文的实现即可,这里就不再赘述了。本文中搭建的轻量级模型结构详情如下所示:

{

"class_name": "Sequential",

"config": {

"name": "sequential_1",

"layers": [{

"class_name": "Conv2D",

"config": {

"name": "conv2d_1",

"trainable": true,

"batch_input_shape": [null, 100, 100, 3],

"dtype": "float32",

"filters": 64,

"kernel_size": [3, 3],

"strides": [2, 2],

"padding": "same",

"data_format": "channels_last",

"dilation_rate": [1, 1],

"activation": "relu",

"use_bias": true,

"kernel_initializer": {

"class_name": "RandomUniform",

"config": {

"minval": -0.05,

"maxval": 0.05,

"seed": null

}

},

"bias_initializer": {

"class_name": "Zeros",

"config": {}

},

"kernel_regularizer": null,

"bias_regularizer": null,

"activity_regularizer": null,

"kernel_constraint": null,

"bias_constraint": null

}

}, {

"class_name": "MaxPooling2D",

"config": {

"name": "max_pooling2d_1",

"trainable": true,

"pool_size": [2, 2],

"padding": "valid",

"strides": [2, 2],

"data_format": "channels_last"

}

}, {

"class_name": "Conv2D",

"config": {

"name": "conv2d_2",

"trainable": true,

"filters": 128,

"kernel_size": [3, 3],

"strides": [2, 2],

"padding": "same",

"data_format": "channels_last",

"dilation_rate": [1, 1],

"activation": "relu",

"use_bias": true,

"kernel_initializer": {

"class_name": "RandomUniform",

"config": {

"minval": -0.05,

"maxval": 0.05,

"seed": null

}

},

"bias_initializer": {

"class_name": "Zeros",

"config": {}

},

"kernel_regularizer": null,

"bias_regularizer": null,

"activity_regularizer": null,

"kernel_constraint": null,

"bias_constraint": null

}

}, {

"class_name": "MaxPooling2D",

"config": {

"name": "max_pooling2d_2",

"trainable": true,

"pool_size": [2, 2],

"padding": "valid",

"strides": [2, 2],

"data_format": "channels_last"

}

}, {

"class_name": "Conv2D",

"config": {

"name": "conv2d_3",

"trainable": true,

"filters": 256,

"kernel_size": [3, 3],

"strides": [2, 2],

"padding": "same",

"data_format": "channels_last",

"dilation_rate": [1, 1],

"activation": "relu",

"use_bias": true,

"kernel_initializer": {

"class_name": "RandomUniform",

"config": {

"minval": -0.05,

"maxval": 0.05,

"seed": null

}

},

"bias_initializer": {

"class_name": "Zeros",

"config": {}

},

"kernel_regularizer": null,

"bias_regularizer": null,

"activity_regularizer": null,

"kernel_constraint": null,

"bias_constraint": null

}

}, {

"class_name": "MaxPooling2D",

"config": {

"name": "max_pooling2d_3",

"trainable": true,

"pool_size": [2, 2],

"padding": "valid",

"strides": [2, 2],

"data_format": "channels_last"

}

}, {

"class_name": "Flatten",

"config": {

"name": "flatten_1",

"trainable": true,

"data_format": "channels_last"

}

}, {

"class_name": "Dense",

"config": {

"name": "dense_1",

"trainable": true,

"units": 256,

"activation": "relu",

"use_bias": true,

"kernel_initializer": {

"class_name": "VarianceScaling",

"config": {

"scale": 1.0,

"mode": "fan_avg",

"distribution": "uniform",

"seed": null

}

},

"bias_initializer": {

"class_name": "Zeros",

"config": {}

},

"kernel_regularizer": null,

"bias_regularizer": null,

"activity_regularizer": null,

"kernel_constraint": null,

"bias_constraint": null

}

}, {

"class_name": "Dropout",

"config": {

"name": "dropout_1",

"trainable": true,

"rate": 0.1,

"noise_shape": null,

"seed": null

}

}, {

"class_name": "Dense",

"config": {

"name": "dense_2",

"trainable": true,

"units": 512,

"activation": "relu",

"use_bias": true,

"kernel_initializer": {

"class_name": "VarianceScaling",

"config": {

"scale": 1.0,

"mode": "fan_avg",

"distribution": "uniform",

"seed": null

}

},

"bias_initializer": {

"class_name": "Zeros",

"config": {}

},

"kernel_regularizer": null,

"bias_regularizer": null,

"activity_regularizer": null,

"kernel_constraint": null,

"bias_constraint": null

}

}, {

"class_name": "Dropout",

"config": {

"name": "dropout_2",

"trainable": true,

"rate": 0.15,

"noise_shape": null,

"seed": null

}

}, {

"class_name": "Dense",

"config": {

"name": "dense_3",

"trainable": true,

"units": 2,

"activation": "softmax",

"use_bias": true,

"kernel_initializer": {

"class_name": "VarianceScaling",

"config": {

"scale": 1.0,

"mode": "fan_avg",

"distribution": "uniform",

"seed": null

}

},

"bias_initializer": {

"class_name": "Zeros",

"config": {}

},

"kernel_regularizer": null,

"bias_regularizer": null,

"activity_regularizer": null,

"kernel_constraint": null,

"bias_constraint": null

}

}]

},

"keras_version": "2.2.4",

"backend": "tensorflow"

}默认200次epoch的迭代计算,训练完成后,对loss曲线和acc曲线进行了对比可视化展示,如下所示:

【loss对比曲线】

【acc对比曲线】

可以看到:模型的效果还是很不错的。

最后编写专用的可视化系统界面,进行实例化推理展示,实例结果如下所示:

后续的工作考虑结合视频连续帧的特点来进一步提升打架斗殴行为的识别精度,感兴趣的话也都可以自行尝试实践一下。