实战:kubeadm方式搭建k8s集群(containerd)-20211102

![]()

目录

文章目录

-

- 目录

- 文档版本

- 实验环境

- 实验软件

- 1、集群环境配置(all节点均要配置)

-

- 1.1配置主机名

- 1.2关闭防火墙,selinux

- 1.3关闭swap分区

- 1.4配置dns解析

- 1.5将桥接的IPv4流量传递到iptables的链

- 1.6安装 ipvs

- 1.7同步服务器时间

- 1.8配置免密(方便后期从master节点传文件到node节点)

- 2、安装 Containerd(all节点均要配置)

-

- 2.1安装containerd

- 2.2将 containerd 的 cgroup driver 配置为 systemd

- 2.3配置镜像加速器地址

- 2.4启动containerd服务

- 2.5验证

- 3、使用 kubeadm 部署 Kubernetes

-

- 3.1添加阿里云YUM软件源(all节点均要配置)

- 3.2安装 kubeadm、kubelet、kubectl(all节点均要配置)

- 3.3初始化集群(master1节点操作)

- 3.4添加节点

- 3.5安装网络插件flannel

- 4、Dashboard

-

- 4.1下载kube-dashboard的yaml文件

- 4.2修改kube-dashboard.yaml文件

- 4.3部署kube-dashboard.yaml文件

- 4.4配置cni

- 5、清理

- 需要注意的问题

-

- 1.coredns镜像和pause镜像问题

- 2.有的yaml文件无法下载问题

- 关于我

- 总结

文档版本

| 版本 | 创建者 | 更新时间 | 备注 |

|---|---|---|---|

| v1 | 黄岗 | 2021年11月2日14:19:51 | 创建文档 |

实验环境

1、硬件环境

3台虚机 2c2g,20g。(nat模式,可访问外网)

| 角色 | 主机名 | ip |

|---|---|---|

| master节点 | master1 | 172.29.9.51 |

| node节点 | node1 | 172.29.9.52 |

| node节点 | node2 | 172.29.9.53 |

2、软件环境

| 软件 | 版本 |

|---|---|

| 操作系统 | centos7.6_x64 1810 mini(其他centos7.x版本也行) |

| containerd | v1.5.5 |

| kubernetes | v1.22.1 |

实验软件

链接:https://pan.baidu.com/s/124kie2_6u3JMN6yolZlZZw

提取码:le5w

–来自百度网盘超级会员V6的分享

1、集群环境配置(all节点均要配置)

使用 containerd 作为容器运行时搭建 Kubernetes 集群。

现在我们使用 kubeadm 从头搭建一个使用 containerd 作为容器运行时的 Kubernetes 集群,这里我们安装最新的 v1.22.2 版本。

1.1配置主机名

hostnamectl --static set-hostname master1

bash

hostnamectl --static set-hostname node1

bash

hostnamectl --static set-hostname node2

bash

注意:

节点的 hostname 必须使用标准的 DNS 命名,另外千万不用什么默认的localhost的 hostname,会导致各种错误出现的。在 Kubernetes 项目里,机器的名字以及一切存储在 Etcd 中的 API 对象,都必须使用标准的 DNS 命名(RFC 1123)。可以使用命令hostnamectl set-hostname node1来修改 hostname。

1.2关闭防火墙,selinux

systemctl stop firewalld && systemctl disable firewalld

systemctl stop NetworkManager && systemctl disable NetworkManager

setenforce 0

sed -i s/SELINUX=enforcing/SELINUX=disabled/ /etc/selinux/config

1.3关闭swap分区

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab

问题:k8s集群安装为什么需要关闭swap分区?

swap必须关,否则kubelet起不来,进而导致k8s集群起不来;

可能kublet考虑到用swap做数据交换的话,对性能影响比较大;

1.4配置dns解析

cat >> /etc/hosts << EOF

172.29.9.51 master1

172.29.9.52 node1

172.29.9.53 node2

EOF

问题:k8s集群安装时节点是否需要配置dns解析?

就是后面的kubectl如果需要连接运行在node上面的容器的话,它是通过kubectl get node出来的名称去连接的,所以那个的话,我们需要在宿主机上能够解析到它。如果它解析不到的话,那么他就可能连不上;

1.5将桥接的IPv4流量传递到iptables的链

modprobe br_netfilter

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

sysctl --system

注意:将桥接的IPv4流量传递到iptables的链

由于开启内核 ipv4 转发需要加载 br_netfilter 模块,所以加载下该模块:

modprobe br_netfilter

bridge-nf说明:bridge-nf 使得 netfilter 可以对 Linux 网桥上的 IPv4/ARP/IPv6 包过滤。比如,设置

net.bridge.bridge-nf-call-iptables=1后,二层的网桥在转发包时也会被 iptables的 FORWARD 规则所过滤。常用的选项包括:

- net.bridge.bridge-nf-call-arptables:是否在 arptables 的 FORWARD 中过滤网桥的 ARP 包

- net.bridge.bridge-nf-call-ip6tables:是否在 ip6tables 链中过滤 IPv6 包

- net.bridge.bridge-nf-call-iptables:是否在 iptables 链中过滤 IPv4 包

- net.bridge.bridge-nf-filter-vlan-tagged:是否在 iptables/arptables 中过滤打了 vlan 标签的包。

1.6安装 ipvs

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

yum install ipset -y

yum install ipvsadm -y

说明:

01、上面脚本创建了的/etc/sysconfig/modules/ipvs.modules文件,保证在节点重启后能自动加载所需模块。使用lsmod | grep -e ip_vs -e nf_conntrack_ipv4命令查看是否已经正确加载所需的内核模块;

02、要确保各个节点上已经安装了 ipset 软件包,因此需要:yum install ipset -y;

03、为了便于查看 ipvs 的代理规则,最好安装一下管理工具 ipvsadm:yum install ipvsadm -y

1.7同步服务器时间

yum install chrony -y

systemctl enable chronyd --now

chronyc sources

1.8配置免密(方便后期从master节点传文件到node节点)

#在master1节点执行如下命令,按2次回车

ssh-keygen

#在master1节点执行

ssh-copy-id -i ~/.ssh/id_rsa.pub [email protected]

ssh-copy-id -i ~/.ssh/id_rsa.pub [email protected]

2、安装 Containerd(all节点均要配置)

2.1安装containerd

cd /root/

yum install libseccomp -y

wget https://download.fastgit.org/containerd/containerd/releases/download/v1.5.5/cri-containerd-cni-1.5.5-linux-amd64.tar.gz

tar -C / -xzf cri-containerd-cni-1.5.5-linux-amd64.tar.gz

echo "export PATH=$PATH:/usr/local/bin:/usr/local/sbin" >> ~/.bashrc

source ~/.bashrc

mkdir -p /etc/containerd

containerd config default > /etc/containerd/config.toml

systemctl enable containerd --now

ctr version

说明:centos7上具体如何安装containerd,请看文章:[实战:centos7上containerd的安装-20211023](https://www.onlyonexl.cn/2021/10/24/55 实战:centos7上containerd的安装-20211023/),本次只提供具体shell命令。

2.2将 containerd 的 cgroup driver 配置为 systemd

对于使用 systemd 作为 init system 的 Linux 的发行版,使用 systemd 作为容器的 cgroup driver 可以确保节点在资源紧张的情况更加稳定,所以推荐将 containerd 的 cgroup driver 配置为 systemd。

修改前面生成的配置文件 /etc/containerd/config.toml,在 plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options 配置块下面将 SystemdCgroup 设置为 true:

#通过搜索SystemdCgroup进行定位

vim /etc/containerd/config.toml

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]

...

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]

SystemdCgroup = true

....

#注意:最终输出shell命令:

sed -i "s/SystemdCgroup = false/SystemdCgroup = true/g" /etc/containerd/config.toml

2.3配置镜像加速器地址

然后再为镜像仓库配置一个加速器,需要在 cri 配置块下面的 registry 配置块下面进行配置 registry.mirrors:(注意缩进)

[root@master1 ~]#vim /etc/containerd/config.toml

[plugins."io.containerd.grpc.v1.cri".registry]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]

endpoint = ["https://kvuwuws2.mirror.aliyuncs.com"]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."k8s.gcr.io"]

endpoint = ["https://registry.aliyuncs.com/k8sxio"]

2.4启动containerd服务

由于上面我们下载的 containerd 压缩包中包含一个 etc/systemd/system/containerd.service 的文件,这样我们就可以通过 systemd 来配置 containerd 作为守护进程运行了,现在我们就可以启动 containerd 了,直接执行下面的命令即可:

systemctl daemon-reload

systemctl enable containerd --now

2.5验证

启动完成后就可以使用 containerd 的本地 CLI 工具 ctr 和 crictl 了,比如查看版本:

➜ ~ ctr version

➜ ~ crictl version

至此,containerd安装完成。

3、使用 kubeadm 部署 Kubernetes

3.1添加阿里云YUM软件源(all节点均要配置)

我们使用阿里云的源进行安装:

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

3.2安装 kubeadm、kubelet、kubectl(all节点均要配置)

yum makecache fast

yum install -y kubelet-1.22.2 kubeadm-1.22.2 kubectl-1.22.2 --disableexcludes=kubernetes

kubeadm version

systemctl enable --now kubelet

说明:–disableexcludes 禁掉除了kubernetes之外的别的仓库

符合预期:

3.3初始化集群(master1节点操作)

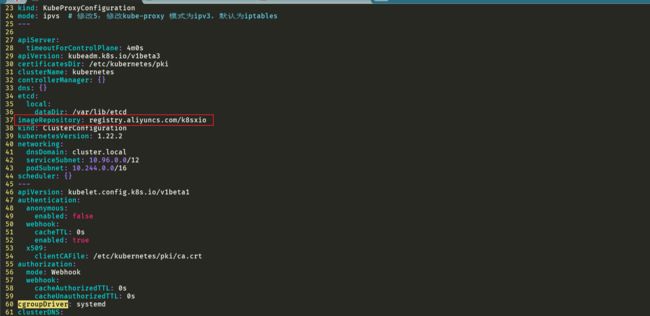

当我们执行 kubelet --help 命令的时候可以看到原来大部分命令行参数都被 DEPRECATED了,这是因为官方推荐我们使用 --config 来指定配置文件,在配置文件中指定原来这些参数的配置,可以通过官方文档 Set Kubelet parameters via a config file 了解更多相关信息,这样 Kubernetes 就可以支持**动态 Kubelet 配置(Dynamic Kubelet Configuration)**了,参考 Reconfigure a Node’s Kubelet in a Live Cluster。

然后我们可以通过下面的命令在 master 节点上输出集群初始化默认使用的配置:

[root@master1 ~]#kubeadm config print init-defaults --component-configs KubeletConfiguration > kubeadmyaml

然后根据我们自己的需求修改配置,比如修改 imageRepository 指定集群初始化时拉取 Kubernetes 所需镜像的地址,kube-proxy 的模式为 ipvs,另外需要注意的是我们这里是准备安装 flannel 网络插件的,需要将 networking.podSubnet 设置为10.244.0.0/16:

[root@master1 ~]#vim kubeadmyaml

apiVersion: kubeadm.k8s.io/v1beta3

bootstrapTokens:

- groups:

- system:bootstrappers:kubeadm:default-node-token

token: abcdef.0123456789abcdef

ttl: 24h0m0s

usages:

- signing

- authentication

kind: InitConfiguration

localAPIEndpoint:

advertiseAddress: 172.29.9.51 # 修改1:指定master节点内网IP

bindPort: 6443

nodeRegistration:

criSocket: /run/containerd/containerd.sock # 修改2:使用 containerd的Unix socket 地址

imagePullPolicy: IfNotPresent

name: master1 #name #修改3:修改master节点名称

taints: # 修改4:给master添加污点,master节点不能调度应用

- effect: "NoSchedule"

key: "node-role.kubernetes.io/master"

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

mode: ipvs # 修改5:修改kube-proxy 模式为ipvs,默认为iptables

---

apiServer:

timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta3

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns: {}

etcd:

local:

dataDir: /var/lib/etcd

imageRepository: registry.aliyuncs.com/k8sxio #修改6:image地址

kind: ClusterConfiguration

kubernetesVersion: 1.22.2 #修改7:指定k8s版本号,默认这里忽略了小版本号

networking:

dnsDomain: cluster.local

serviceSubnet: 10.96.0.0/12

podSubnet: 10.244.0.0/16 # 修改8:指定 pod 子网

scheduler: {}

---

apiVersion: kubelet.config.k8s.io/v1beta1

authentication:

anonymous:

enabled: false

webhook:

cacheTTL: 0s

enabled: true

x509:

clientCAFile: /etc/kubernetes/pki/ca.crt

authorization:

mode: Webhook

webhook:

cacheAuthorizedTTL: 0s

cacheUnauthorizedTTL: 0s

clusterDNS:

- 10.96.0.10

clusterDomain: cluster.local

cpuManagerReconcilePeriod: 0s

evictionPressureTransitionPeriod: 0s

fileCheckFrequency: 0s

healthzBindAddress: 127.0.0.1

healthzPort: 10248

httpCheckFrequency: 0s

imageMinimumGCAge: 0s

kind: KubeletConfiguration

cgroupDriver: systemd # 修改9:配置 cgroup driver

logging: {}

memorySwap: {}

nodeStatusReportFrequency: 0s

nodeStatusUpdateFrequency: 0s

rotateCertificates: true

runtimeRequestTimeout: 0s

shutdownGracePeriod: 0s

shutdownGracePeriodCriticalPods: 0s

staticPodPath: /etc/kubernetes/manifests

streamingConnectionIdleTimeout: 0s

syncFrequency: 0s

volumeStatsAggPeriod: 0s

配置提示

对于上面的资源清单的文档比较杂,要想完整了解上面的资源对象对应的属性,可以查看对应的 godoc 文档,地址: https://godoc.org/k8s.io/kubernetes/cmd/kubeadm/app/apis/kubeadm/v1beta3。

在开始初始化集群之前可以使用 kubeadm config images pull --config kubeadm.yaml 预先在各个服务器节点上拉取所k8s需要的容器镜像。

我们可以先list一下:

[root@master1 ~]#kubeadm config images list

[root@master1 ~]#kubeadm config images list --config kubeadm.yaml

#记得昨天测试都没报错啊,现在怎么报错了。。。可以忽略,不影响后续使用的。

配置文件准备好过后,可以使用如下命令先将相关镜像 pull 下面:

[root@master1 ~]#kubeadm config images pull --config kubeadm.yaml

W1031 06:59:20.922890 25580 strict.go:55] error unmarshaling configuration schema.GroupVersionKind{Group:"kubelet.config.k8s.io", Version:"v1beta1", Kind:"KubeletConfiguration"}: error converting YAML to JSON: yaml: unmarshal errors:

line 27: key "cgroupDriver" already set in map

[config/images] Pulled registry.aliyuncs.com/k8sxio/kube-apiserver:v1.22.2

[config/images] Pulled registry.aliyuncs.com/k8sxio/kube-controller-manager:v1.22.2

[config/images] Pulled registry.aliyuncs.com/k8sxio/kube-scheduler:v1.22.2

[config/images] Pulled registry.aliyuncs.com/k8sxio/kube-proxy:v1.22.2

[config/images] Pulled registry.aliyuncs.com/k8sxio/pause:3.5

[config/images] Pulled registry.aliyuncs.com/k8sxio/etcd:3.5.0-0

failed to pull image "registry.aliyuncs.com/k8sxio/coredns:v1.8.4": output: time="2021-10-31T07:04:50+08:00" level=fatal msg="pulling image: rpc error: code = NotFound desc = failed to pull and unpack image \"registry.aliyuncs.com/k8sxio/coredns:v1.8.4\": failed to resolve reference \"registry.aliyuncs.com/k8sxio/coredns:v1.8.4\": registry.aliyuncs.com/k8sxio/coredns:v1.8.4: not found"

, error: exit status 1

To see the stack trace of this error execute with --v=5 or higher

[root@master1 ~]#

上面在拉取 coredns 镜像的时候出错了,阿里云仓库里没有找到这个镜像,我们可以手动到官方仓库 pull 该镜像,然后重新 tag 下镜像地址即可:

[root@master1 ~]#ctr -n k8s.io i pull docker.io/coredns/coredns:1.8.4

docker.io/coredns/coredns:1.8.4: resolved |++++++++++++++++++++++++++++++++++++++|

index-sha256:6e5a02c21641597998b4be7cb5eb1e7b02c0d8d23cce4dd09f4682d463798890: done |++++++++++++++++++++++++++++++++++++++|

manifest-sha256:10683d82b024a58cc248c468c2632f9d1b260500f7cd9bb8e73f751048d7d6d4: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:bc38a22c706b427217bcbd1a7ac7c8873e75efdd0e59d6b9f069b4b243db4b4b: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:8d147537fb7d1ac8895da4d55a5e53621949981e2e6460976dae812f83d84a44: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:c6568d217a0023041ef9f729e8836b19f863bcdb612bb3a329ebc165539f5a80: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 15.9s total: 12.1 M (780.6 KiB/s)

unpacking linux/amd64 sha256:6e5a02c21641597998b4be7cb5eb1e7b02c0d8d23cce4dd09f4682d463798890...

done: 684.151259ms

[root@master1 ~]#ctr -n k8s.io i ls -q

docker.io/coredns/coredns:1.8.4

registry.aliyuncs.com/k8sxio/etcd:3.5.0-0

registry.aliyuncs.com/k8sxio/etcd@sha256:9ce33ba33d8e738a5b85ed50b5080ac746deceed4a7496c550927a7a19ca3b6d

registry.aliyuncs.com/k8sxio/kube-apiserver:v1.22.2

registry.aliyuncs.com/k8sxio/kube-apiserver@sha256:eb4fae890583e8d4449c1e18b097aec5574c25c8f0323369a2df871ffa146f41

registry.aliyuncs.com/k8sxio/kube-controller-manager:v1.22.2

registry.aliyuncs.com/k8sxio/kube-controller-manager@sha256:91ccb477199cdb4c63fb0c8fcc39517a186505daf4ed52229904e6f9d09fd6f9

registry.aliyuncs.com/k8sxio/kube-proxy:v1.22.2

registry.aliyuncs.com/k8sxio/kube-proxy@sha256:561d6cb95c32333db13ea847396167e903d97cf6e08dd937906c3dd0108580b7

registry.aliyuncs.com/k8sxio/kube-scheduler:v1.22.2

registry.aliyuncs.com/k8sxio/kube-scheduler@sha256:c76cb73debd5e37fe7ad42cea9a67e0bfdd51dd56be7b90bdc50dd1bc03c018b

registry.aliyuncs.com/k8sxio/pause:3.5

registry.aliyuncs.com/k8sxio/pause@sha256:1ff6c18fbef2045af6b9c16bf034cc421a29027b800e4f9b68ae9b1cb3e9ae07

sha256:0048118155842e4c91f0498dd298b8e93dc3aecc7052d9882b76f48e311a76ba

sha256:5425bcbd23c54270d9de028c09634f8e9a014e9351387160c133ccf3a53ab3dc

sha256:873127efbc8a791d06e85271d9a2ec4c5d58afdf612d490e24fb3ec68e891c8d

sha256:8d147537fb7d1ac8895da4d55a5e53621949981e2e6460976dae812f83d84a44

sha256:b51ddc1014b04295e85be898dac2cd4c053433bfe7e702d7e9d6008f3779609b

sha256:e64579b7d8862eff8418d27bf67011e348a5d926fa80494a6475b3dc959777f5

sha256:ed210e3e4a5bae1237f1bb44d72a05a2f1e5c6bfe7a7e73da179e2534269c459

[root@master1 ~]#

[root@master1 ~]#ctr -n k8s.io i tag docker.io/coredns/coredns:1.8.4 registry.aliyuncs.com/k8sxio/coredns:v1.8.4

registry.aliyuncs.com/k8sxio/coredns:v1.8.4

[root@master1 ~]#

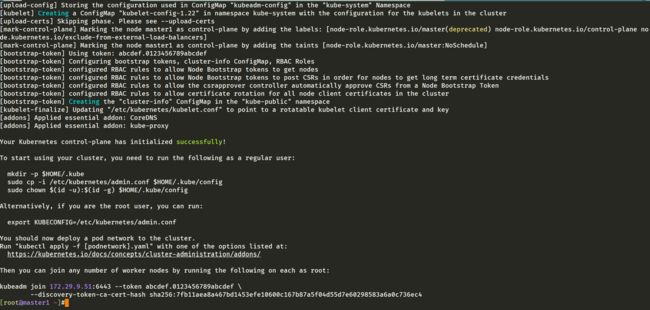

然后就可以使用上面的配置文件在 master 节点上进行初始化:

这里需要特别注意下:会报错。。。

#注意:可以通过加上--v 5来进一步打印更多的log信息

kubeadm init --config kubeadm.yaml --v 5

[root@master1 ~]#kubeadm init --config kubeadm.yaml

W1031 07:14:21.837059 26278 strict.go:55] error unmarshaling configuration schema.GroupVersionKind{Group:"kubelet.config.k8s.io", V ersion:"v1beta1", Kind:"KubeletConfiguration"}: error converting YAML to JSON: yaml: unmarshal errors:

line 27: key "cgroupDriver" already set in map

[init] Using Kubernetes version: v1.22.2

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.c luster.local master1] and IPs [10.96.0.1 172.29.9.51]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost master1] and IPs [172.29.9.51 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost master1] and IPs [172.29.9.51 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[kubelet-check] Initial timeout of 40s passed.

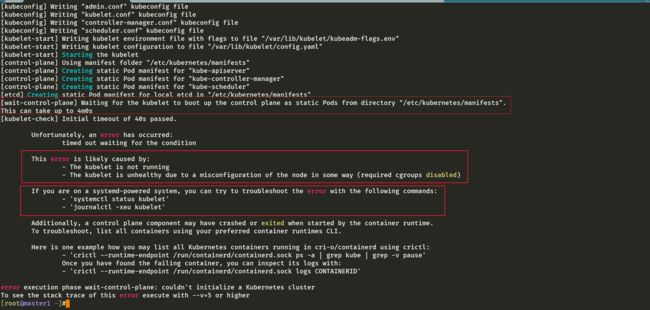

Unfortunately, an error has occurred:

timed out waiting for the condition

This error is likely caused by:

- The kubelet is not running

- The kubelet is unhealthy due to a misconfiguration of the node in some way (required cgroups disabled)

If you are on a systemd-powered system, you can try to troubleshoot the error with the following commands:

- 'systemctl status kubelet'

- 'journalctl -xeu kubelet'

Additionally, a control plane component may have crashed or exited when started by the container runtime.

To troubleshoot, list all containers using your preferred container runtimes CLI.

Here is one example how you may list all Kubernetes containers running in cri-o/containerd using crictl:

- 'crictl --runtime-endpoint /run/containerd/containerd.sock ps -a | grep kube | grep -v pause'

Once you have found the failing container, you can inspect its logs with:

- 'crictl --runtime-endpoint /run/containerd/containerd.sock logs CONTAINERID'

error execution phase wait-control-plane: couldn't initialize a Kubernetes cluster

To see the stack trace of this error execute with --v=5 or higher

[root@master1 ~]#

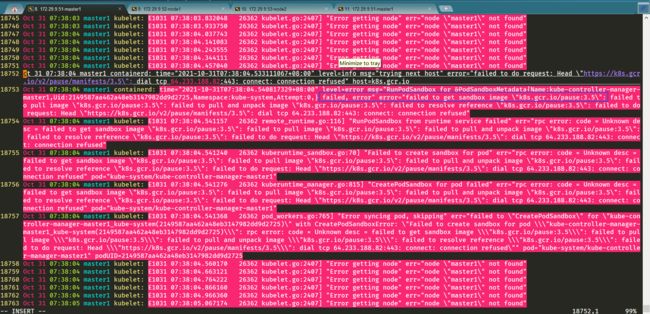

我们进一步排查报错log:

[root@master1 ~]#systemctl status kubelet

[root@master1 ~]#journalctl -xeu kubelet

[root@master1 ~]#vim /var/log/messages

通过上述排查,从vim /var/log/messages可以看出是error="failed to get sandbox image \"k8s.gcr.io/pause:3.5\"问题。

咦,奇怪了,不是本地都已经下载好了阿里云pause镜像了吗,这里怎么提示还从默认k8s仓库拉pause镜像呢?

这里我们再根据报错提示再次从k8s官方仓库拉取下pause镜像,看下效果:

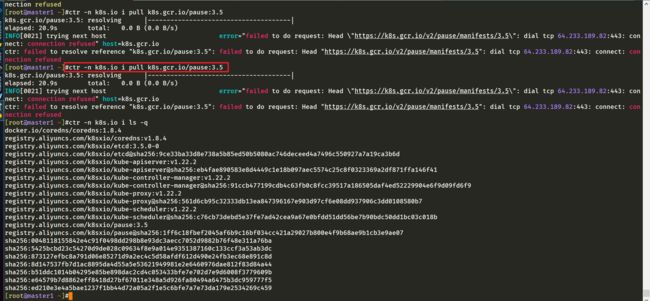

[root@master1 ~]#ctr -n k8s.io i pull k8s.gcr.io/pause:3.5

尝试了几次,发现从k8s官方仓库拉取pause镜像一直失败,即使科学上网也还是有问题。

咦,我们不是可以直接把阿里云仓库下载的镜像直接打下tag不就可以了吗,下面测试下:

[root@master1 ~]#ctr -n k8s.io i tag registry.aliyuncs.com/k8sxio/pause:3.5 k8s.gcr.io/pause:3.5

[root@master1 ~]#ctr -n k8s.io i ls -q

此时,我们用kubeadm reset命令清空下刚才master1节点,再次初始化集群看下下效果:

[root@master1 ~]#kubeadm reset

[root@master1 ~]#kubeadm init --config kubeadm.yaml

W1031 07:56:49.681727 27288 strict.go:55] error unmarshaling configuration schema.GroupVersionKind{Group:"kubelet.config.k8s.io", Version:"v1beta1", Kind:"KubeletConfiguration"}: error converting YAML to JSON: yaml: unmarshal errors:

line 27: key "cgroupDriver" already set in map

[init] Using Kubernetes version: v1.22.2

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master1] and IPs [10.96.0.1 172.29.9.51]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost master1] and IPs [172.29.9.51 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost master1] and IPs [172.29.9.51 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[kubelet-check] Initial timeout of 40s passed.

[apiclient] All control plane components are healthy after 210.014030 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.22" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node master1 as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master1 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: abcdef.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.29.9.51:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:7fb11aea8a467bd1453efe10600c167b87a5f04d55d7e60298583a6a0c736ec4

[root@master1 ~]#

注意:

I1030 07:26:13.898398 18436 checks.go:205] validating availability of port 10250 kubelet端口

I1030 07:26:13.898547 18436 checks.go:205] validating availability of port 2379 etcd端口

I1030 07:26:13.898590 18436 checks.go:205] validating availability of port 2380 etcd端口

master1节点初始化成功。

根据安装提示拷贝 kubeconfig 文件:

[root@master1 ~]#mkdir -p $HOME/.kube

[root@master1 ~]#sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master1 ~]#sudo chown $(id -u):$(id -g) $HOME/.kube/config

然后可以使用 kubectl 命令查看 master 节点已经初始化成功了:

[root@master1 ~]#kubectl get node

NAME STATUS ROLES AGE VERSION

master1 Ready control-plane,master 114s v1.22.2

[root@master1 ~]#

3.4添加节点

记住初始化集群上面的配置和操作要提前做好,将 master 节点上面的 $HOME/.kube/config 文件拷贝到 node 节点对应的文件中,安装 kubeadm、kubelet、kubectl(可选),然后执行上面初始化完成后提示的 join 命令即可:

kubeadm join 172.29.9.51:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:7fb11aea8a467bd1453efe10600c167b87a5f04d55d7e60298583a6a0c736ec4

join 命令:如果忘记了上面的 join 命令可以使用命令 kubeadm token create --print-join-command 重新获取。

执行成功后运行 get nodes 命令:

[root@master1 ~]#kubectl get node

NAME STATUS ROLES AGE VERSION

master1 Ready control-plane,master 31m v1.22.2

node1 Ready 102s v1.22.2

node2 Ready 95s v1.22.2

[root@master1 ~]#

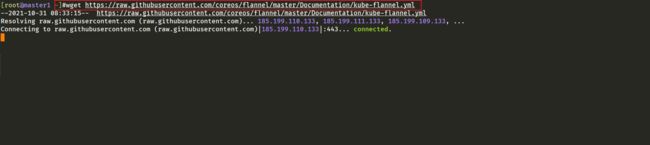

3.5安装网络插件flannel

这个时候其实集群还不能正常使用,因为还没有安装网络插件,接下来安装网络插件,可以在文档 https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm/ 中选择我们自己的网络插件,这里我们安装 flannel:

➜ ~ wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

注意:

# 如果有节点是多网卡,则需要在资源清单文件中指定内网网卡

# 搜索到名为 kube-flannel-ds 的 DaemonSet,在kube-flannel容器下面

➜ ~ vi kube-flannel.yml

......

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.15.0

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

- --iface=eth0 # 如果是多网卡的话,指定内网网卡的名称

......

- 注意:这个文件需要科学上网才可以,你们可以直接用我这里的下载好的文件

[root@master1 ~]#ll -h

total 122M

-rw-r--r-- 1 root root 122M Jul 30 01:16 cri-containerd-cni-1.5.5-linux-amd64.tar.gz

-rw-r--r-- 1 root root 2.0K Oct 31 06:54 kubeadm.yaml

-rw-r--r-- 1 root root 7.4K Oct 31 23:01 kube-dashboard.yaml

-rw-r--r-- 1 root root 5.1K Oct 31 08:38 kube-flannel.yml

[root@master1 ~]#

https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

![]()

[root@master1 ~]#vim kube-flannel.yml

---

apiVersion: policy/v1beta1

kind: PodSecurityPolicy

metadata:

name: psp.flannel.unprivileged

annotations:

seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default

seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default

apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default

apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default

spec:

privileged: false

volumes:

- configMap

- secret

- emptyDir

- hostPath

allowedHostPaths:

- pathPrefix: "/etc/cni/net.d"

- pathPrefix: "/etc/kube-flannel"

- pathPrefix: "/run/flannel"

readOnlyRootFilesystem: false

# Users and groups

runAsUser:

rule: RunAsAny

supplementalGroups:

rule: RunAsAny

fsGroup:

rule: RunAsAny

# Privilege Escalation

allowPrivilegeEscalation: false

defaultAllowPrivilegeEscalation: false

# Capabilities

allowedCapabilities: ['NET_ADMIN', 'NET_RAW']

defaultAddCapabilities: []

requiredDropCapabilities: []

# Host namespaces

hostPID: false

hostIPC: false

hostNetwork: true

hostPorts:

- min: 0

max: 65535

# SELinux

seLinux:

# SELinux is unused in CaaSP

rule: 'RunAsAny'

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: flannel

rules:

- apiGroups: ['extensions']

resources: ['podsecuritypolicies']

verbs: ['use']

resourceNames: ['psp.flannel.unprivileged']

- apiGroups:

- ""

resources:

- pods

verbs:

- get

- apiGroups:

- ""

resources:

- nodes

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes/status

verbs:

- patch

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: flannel

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: flannel

subjects:

- kind: ServiceAccount

name: flannel

namespace: kube-system

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: flannel

namespace: kube-system

---

kind: ConfigMap

apiVersion: v1

metadata:

name: kube-flannel-cfg

namespace: kube-system

labels:

tier: node

app: flannel

data:

cni-conf.json: |

{

"name": "cbr0",

"cniVersion": "0.3.1",

"plugins": [

{

"type": "flannel",

"delegate": {

"hairpinMode": true,

"isDefaultGateway": true

}

},

{

"type": "portmap",

"capabilities": {

"portMappings": true

}

}

]

}

net-conf.json: |

{

"Network": "10.244.0.0/16",

"Backend": {

"Type": "vxlan"

}

}

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/os

operator: In

values:

- linux

hostNetwork: true

priorityClassName: system-node-critical

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni-plugin

image: rancher/mirrored-flannelcni-flannel-cni-plugin:v1.2

command:

- cp

args:

- -f

- /flannel

- /opt/cni/bin/flannel

volumeMounts:

- name: cni-plugin

mountPath: /opt/cni/bin

- name: install-cni

image: quay.io/coreos/flannel:v0.15.0

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.15.0

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN", "NET_RAW"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni-plugin

hostPath:

path: /opt/cni/bin

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

- 这个文件不用修改什么,直接apply即可:

[root@master1 ~]#kubectl apply -f kube-flannel.yml # 安装 flannel 网络插件

Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

[root@master1 ~]#

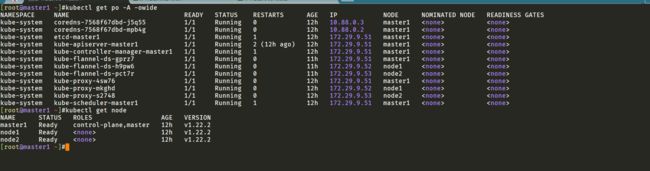

- 隔一会儿查看 Pod 运行状态:

[root@master1 ~]#kubectl get pod -nkube-system -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-7568f67dbd-j5q55 1/1 Running 0 12h 10.88.0.3 master1 <none> <none>

coredns-7568f67dbd-mpb4g 1/1 Running 0 12h 10.88.0.2 master1 <none> <none>

etcd-master1 1/1 Running 1 12h 172.29.9.51 master1 <none> <none>

kube-apiserver-master1 1/1 Running 2 (12h ago) 12h 172.29.9.51 master1 <none> <none>

kube-controller-manager-master1 1/1 Running 1 12h 172.29.9.51 master1 <none> <none>

kube-flannel-ds-gprz7 1/1 Running 0 11h 172.29.9.51 master1 <none> <none>

kube-flannel-ds-h9pw6 0/1 Init:0/2 0 11h 172.29.9.52 node1 <none> <none>

kube-flannel-ds-pct7r 0/1 Init:0/2 0 11h 172.29.9.53 node2 <none> <none>

kube-proxy-4sw76 1/1 Running 0 12h 172.29.9.51 master1 <none> <none>

kube-proxy-mkghd 0/1 ContainerCreating 0 11h 172.29.9.52 node1 <none> <none>

kube-proxy-s2748 0/1 ContainerCreating 0 11h 172.29.9.53 node2 <none> <none>

kube-scheduler-master1 1/1 Running 1 12h 172.29.9.51 master1 <none> <none>

[root@master1 ~]#

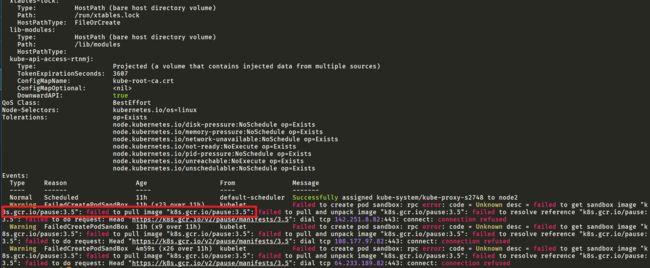

- 这时候遇到了个问题:

可以看出:node1和node2的 kube-flannel、kube-proxy pod均没有启动成功!

这些组件都是以daemonset方式启动的,即每一个节点均会起一个pod;

我们通过kubectl describe pod xxx命令来看下这些pod部署失败的原因:

[root@master1 ~]#kubectl describe pod kube-flannel-ds-h9pw6 -nkube-system

[root@master1 ~]#kubectl describe pod kube-proxy-s2748 -nkube-system

经查看发现导致node1、node2节点部署kube-flannel、kube-proxy pod失败的原因是没有k8s.gcr.io/pause:3.5镜像,这个应该和在master节点遇到的现象一样。

接下来我们在node1节点上测试下:

我们先来看下本地存在哪些镜像?

[root@node1 ~]#ctr -n k8s.io i ls -q

[root@node1 ~]#ctr i ls -q

[root@node1 ~]#

查看发现本地无任何镜像。

根据报错提示,我们手动拉取下k8s.gcr.io/pause:3.5镜像:

[root@node1 ~]#ctr -n k8s.io i pull k8s.gcr.io/pause:3.5

k8s.gcr.io/pause:3.5: resolving |--------------------------------------|

elapsed: 20.9s total: 0.0 B (0.0 B/s)

INFO[0021] trying next host error="failed to do request: Head \"https://k8s.gcr.io/v2/pause/manifests/3.5\": dial tcp 64.233.188.82:443: connect: connection refused" host=k8s.gcr.io

ctr: failed to resolve reference "k8s.gcr.io/pause:3.5": failed to do request: Head "https://k8s.gcr.io/v2/pause/manifests/3.5": dial tcp 64.233.188.82:443: connect: connection refused

[root@node1 ~]#

结果报错,看这个报错是这个镜像无法拉取。

此时,我们采用阿里源拉取下这个镜像,然后再重新打下tag,和master1节点一样:

[root@node1 ~]#ctr -n k8s.io i pull registry.aliyuncs.com/k8sxio/pause:3.5

registry.aliyuncs.com/k8sxio/pause:3.5: resolved |++++++++++++++++++++++++++++++++++++++|

index-sha256:1ff6c18fbef2045af6b9c16bf034cc421a29027b800e4f9b68ae9b1cb3e9ae07: done |++++++++++++++++++++++++++++++++++++++|

manifest-sha256:369201a612f7b2b585a8e6ca99f77a36bcdbd032463d815388a96800b63ef2c8: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:019d8da33d911d9baabe58ad63dea2107ed15115cca0fc27fc0f627e82a695c1: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:ed210e3e4a5bae1237f1bb44d72a05a2f1e5c6bfe7a7e73da179e2534269c459: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 1.0 s total: 4.8 Ki (4.8 KiB/s)

unpacking linux/amd64 sha256:1ff6c18fbef2045af6b9c16bf034cc421a29027b800e4f9b68ae9b1cb3e9ae07...

done: 48.82759ms

[root@node1 ~]#ctr -n k8s.io i tag registry.aliyuncs.com/k8sxio/pause:3.5 k8s.gcr.io/pause:3.5

k8s.gcr.io/pause:3.5

[root@node1 ~]#ctr -n k8s.io i ls -q

k8s.gcr.io/pause:3.5

registry.aliyuncs.com/k8sxio/pause:3.5

sha256:ed210e3e4a5bae1237f1bb44d72a05a2f1e5c6bfe7a7e73da179e2534269c459

[root@node1 ~]#

重新打好tag后,我们再次回到master1节点观察下pod启动情况:

现在发现node1节点的kube-flannel、kube-peoxy pod都启动完成了,就剩node2的了,那就是这个问题了。

我们按照相同方法在node2节点上进行相同操作:

[root@node2 ~]# ctr -n k8s.io i pull registry.aliyuncs.com/k8sxio/pause:3.5

registry.aliyuncs.com/k8sxio/pause:3.5: resolved |++++++++++++++++++++++++++++++++++++++|

index-sha256:1ff6c18fbef2045af6b9c16bf034cc421a29027b800e4f9b68ae9b1cb3e9ae07: done |++++++++++++++++++++++++++++++++++++++|

manifest-sha256:369201a612f7b2b585a8e6ca99f77a36bcdbd032463d815388a96800b63ef2c8: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:019d8da33d911d9baabe58ad63dea2107ed15115cca0fc27fc0f627e82a695c1: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:ed210e3e4a5bae1237f1bb44d72a05a2f1e5c6bfe7a7e73da179e2534269c459: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 0.9 s total: 4.8 Ki (5.3 KiB/s)

unpacking linux/amd64 sha256:1ff6c18fbef2045af6b9c16bf034cc421a29027b800e4f9b68ae9b1cb3e9ae07...

done: 53.021995ms

[root@node2 ~]#ctr -n k8s.io i tag registry.aliyuncs.com/k8sxio/pause:3.5 k8s.gcr.io/pause:3.5

k8s.gcr.io/pause:3.5

[root@node2 ~]#ctr -n k8s.io i ls -q

k8s.gcr.io/pause:3.5

registry.aliyuncs.com/k8sxio/pause:3.5

sha256:ed210e3e4a5bae1237f1bb44d72a05a2f1e5c6bfe7a7e73da179e2534269c459

[root@node2 ~]#

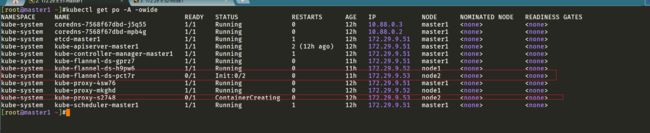

最终,在master1节点上再次确认下pod启动情况:

[root@master1 ~]#kubectl get po -A -owide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system coredns-7568f67dbd-j5q55 1/1 Running 0 12h 10.88.0.3 master1 <none> <none>

kube-system coredns-7568f67dbd-mpb4g 1/1 Running 0 12h 10.88.0.2 master1 <none> <none>

kube-system etcd-master1 1/1 Running 1 12h 172.29.9.51 master1 <none> <none>

kube-system kube-apiserver-master1 1/1 Running 2 (12h ago) 12h 172.29.9.51 master1 <none> <none>

kube-system kube-controller-manager-master1 1/1 Running 1 12h 172.29.9.51 master1 <none> <none>

kube-system kube-flannel-ds-gprz7 1/1 Running 0 11h 172.29.9.51 master1 <none> <none>

kube-system kube-flannel-ds-h9pw6 1/1 Running 0 11h 172.29.9.52 node1 <none> <none>

kube-system kube-flannel-ds-pct7r 1/1 Running 0 11h 172.29.9.53 node2 <none> <none>

kube-system kube-proxy-4sw76 1/1 Running 0 12h 172.29.9.51 master1 <none> <none>

kube-system kube-proxy-mkghd 1/1 Running 0 12h 172.29.9.52 node1 <none> <none>

kube-system kube-proxy-s2748 1/1 Running 0 12h 172.29.9.53 node2 <none> <none>

kube-system kube-scheduler-master1 1/1 Running 1 12h 172.29.9.51 master1 <none> <none>

[root@master1 ~]#

目前,一切正常。

- 注意:Flannel 网络插件

当我们部署完网络插件后执行 ifconfig 命令,正常会看到新增的cni0与flannel1这两个虚拟设备,但是如果没有看到cni0这个设备也不用太担心,我们可以观察/var/lib/cni目录是否存在,如果不存在并不是说部署有问题,而是该节点上暂时还没有应用运行,我们只需要在该节点上运行一个 Pod 就可以看到该目录会被创建,并且cni0设备也会被创建出来。

用同样的方法添加另外一个节点即可。

4、Dashboard

v1.22.2 版本的集群需要安装最新的 2.0+ 版本的 Dashboard:

4.1下载kube-dashboard的yaml文件

# 推荐使用下面这种方式

[root@master1 ~]#wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.3.1/aio/deploy/recommended.yaml

--2021-10-31 22:56:47-- https://raw.githubusercontent.com/kubernetes/dashboard/v2.3.1/aio/deploy/recommended.yaml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.110.133, 185.199.111.133, 185.199.108.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.110.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 7552 (7.4K) [text/plain]

Saving to: ‘recommended.yaml’

100%[===============================================================================================================================>] 7,552 3.76KB/s in 2.0s

2021-10-31 22:56:55 (3.76 KB/s) - ‘recommended.yaml’ saved [7552/7552]

[root@master1 ~]#ll

total 124120

-rw-r--r-- 1 root root 127074581 Jul 30 01:16 cri-containerd-cni-1.5.5-linux-amd64.tar.gz

-rw-r--r-- 1 root root 1976 Oct 31 06:54 kubeadm.yaml

-rw-r--r-- 1 root root 5175 Oct 31 08:38 kube-flannel.yml

-rw-r--r-- 1 root root 7552 Oct 31 22:56 recommended.yaml

[root@master1 ~]#mv recommended.yaml kube-dashboard.yaml

4.2修改kube-dashboard.yaml文件

[root@master1 ~]#vim kube-dashboard.yaml

# 修改Service为NodePort类型

......

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

ports:

- port: 443

targetPort: 8443

selector:

k8s-app: kubernetes-dashboard

type: NodePort # 加上type=NodePort变成NodePort类型的服务

......

4.3部署kube-dashboard.yaml文件

在 YAML 文件中可以看到新版本 Dashboard 集成了一个 metrics-scraper 的组件,可以通过 Kubernetes 的 Metrics API 收集一些基础资源的监控信息,并在 web 页面上展示,所以要想在页面上展示监控信息就需要提供 Metrics API,比如安装 Metrics Server。

直接创建:

[root@master1 ~]#kubectl apply -f kube-dashboard.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

Warning: spec.template.metadata.annotations[seccomp.security.alpha.kubernetes.io/pod]: deprecated since v1.19; use the "seccompProfile" field instead

deployment.apps/dashboard-metrics-scraper created

[root@master1 ~]#

新版本的 Dashboard 会被默认安装在 kubernetes-dashboard 这个命名空间下面:

[root@master1 ~]#kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-7568f67dbd-559d2 1/1 Running 0 14m

kube-system coredns-7568f67dbd-b95fg 1/1 Running 0 14m

kube-system etcd-master1 1/1 Running 1 15h

kube-system kube-apiserver-master1 1/1 Running 2 (15h ago) 15h

kube-system kube-controller-manager-master1 1/1 Running 1 15h

kube-system kube-flannel-ds-gprz7 1/1 Running 0 14h

kube-system kube-flannel-ds-h9pw6 1/1 Running 0 14h

kube-system kube-flannel-ds-pct7r 1/1 Running 0 14h

kube-system kube-proxy-4sw76 1/1 Running 0 15h

kube-system kube-proxy-mkghd 1/1 Running 0 14h

kube-system kube-proxy-s2748 1/1 Running 0 14h

kube-system kube-scheduler-master1 1/1 Running 1 15h

kubernetes-dashboard dashboard-metrics-scraper-856586f554-zwv6r 1/1 Running 0 34s

kubernetes-dashboard kubernetes-dashboard-67484c44f6-hh5zj 1/1 Running 0 34s

[root@master1 ~]#

4.4配置cni

我们仔细看可以发现上面的 Pod 分配的 IP 段是 10.88.xx.xx,包括前面自动安装的 CoreDNS 也是如此,我们前面不是配置的 podSubnet 为 10.244.0.0/16 吗?

我们先去查看下 CNI 的配置文件:

[root@node1 ~]#ll /etc/cni/net.d/

total 8

-rw-r--r-- 1 1001 116 604 Jul 30 01:13 10-containerd-net.conflist

-rw-r--r-- 1 root root 292 Oct 31 20:30 10-flannel.conflist

[root@node1 ~]#

可以看到里面包含两个配置,一个是 10-containerd-net.conflist,另外一个是我们上面创建的 Flannel 网络插件生成的配置,我们的需求肯定是想使用 Flannel 的这个配置,我们可以查看下 containerd 这个自带的 cni 插件配置:

[root@node1 net.d]#cat 10-containerd-net.conflist

{

"cniVersion": "0.4.0",

"name": "containerd-net",

"plugins": [

{

"type": "bridge",

"bridge": "cni0",

"isGateway": true,

"ipMasq": true,

"promiscMode": true,

"ipam": {

"type": "host-local",

"ranges": [

[{

"subnet": "10.88.0.0/16"

}],

[{

"subnet": "2001:4860:4860::/64"

}]

],

"routes": [

{ "dst": "0.0.0.0/0" },

{ "dst": "::/0" }

]

}

},

{

"type": "portmap",

"capabilities": {"portMappings": true}

}

]

}

[root@node1 net.d]#

可以看到上面的 IP 段恰好就是 10.88.0.0/16,但是这个 cni 插件类型是 bridge 网络,网桥的名称为 cni0:

但是使用 bridge 网络的容器无法跨多个宿主机进行通信,跨主机通信需要借助其他的 cni 插件,比如上面我们安装的 Flannel,或者 Calico 等等,由于我们这里有两个 cni 配置,所以我们需要将 10-containerd-net.conflist 这个配置删除,因为如果这个目录中有多个 cni 配置文件,kubelet 将会使用按文件名的字典顺序排列的第一个作为配置文件,所以前面默认选择使用的是 containerd-net 这个插件。

[root@node1 ~]#mv /etc/cni/net.d/10-containerd-net.conflist{,.bak}

[root@node1 ~]#ifconfig cni0 down && ip link delete cni0

[root@node1 ~]#systemctl daemon-reload

[root@node1 ~]#systemctl restart containerd kubelet

#命令汇总

mv /etc/cni/net.d/10-containerd-net.conflist{,.bak}

ifconfig cni0 down && ip link delete cni0

systemctl daemon-reload

systemctl restart containerd kubelet

按删除方法把node2节点也配置下:

[root@node2 ~]#mv /etc/cni/net.d/10-containerd-net.conflist{,.bak}

[root@node2 ~]#ifconfig cni0 down && ip link delete cni0

[root@node2 ~]#systemctl daemon-reload

[root@node2 ~]#systemctl restart containerd kubelet

然后记得重建 coredns 和 dashboard 的 Pod,重建后 Pod 的 IP 地址就正常了:

[root@master1 ~]#kubectl delete pod coredns-7568f67dbd-ftnlb coredns-7568f67dbd-scdb5 -nkube-system

pod "coredns-7568f67dbd-ftnlb" deleted

pod "coredns-7568f67dbd-scdb5" deleted

[root@master1 ~]#kubectl delete pod dashboard-metrics-scraper-856586f554-r5qvs kubernetes-dashboard-67484c44f6-9dg85 -nkubernetes-dashboard

pod "dashboard-metrics-scraper-856586f554-r5qvs" deleted

pod "kubernetes-dashboard-67484c44f6-9dg85" deleted

查看 Dashboard 的 NodePort 端口:

[root@master1 ~]#kubectl get svc -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 15h

kube-system kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 15h

kubernetes-dashboard dashboard-metrics-scraper ClusterIP 10.99.185.146 <none> 8000/TCP 18m

kubernetes-dashboard kubernetes-dashboard NodePort 10.105.110.130 <none> 443:30498/TCP 18m

[root@master1 ~]#

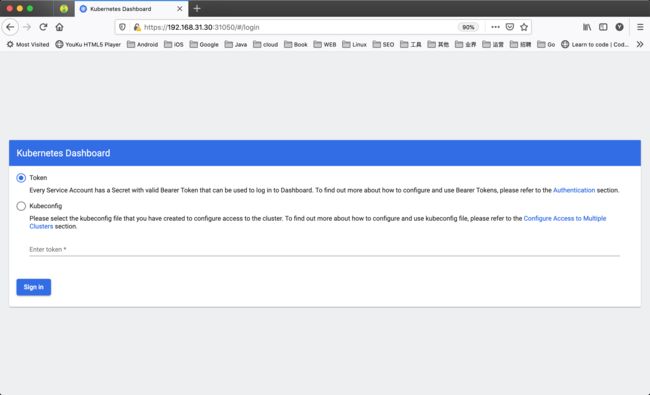

然后可以通过上面的 31498端口去访问 Dashboard,要记住使用 https,Chrome 不生效可以使用Firefox 测试,如果没有 Firefox 下面打不开页面,可以点击下页面中的信任证书即可:

信任后就可以访问到 Dashboard 的登录页面了:

然后创建一个具有全局所有权限的用户来登录 Dashboard:(admin.yaml)

vim admin.yaml

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: admin

roleRef:

kind: ClusterRole

name: cluster-admin

apiGroup: rbac.authorization.k8s.io

subjects:

- kind: ServiceAccount

name: admin

namespace: kubernetes-dashboard

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: admin

namespace: kubernetes-dashboard

直接创建:

[root@master1 ~]#kubectl apply -f admin.yaml

clusterrolebinding.rbac.authorization.k8s.io/admin created

serviceaccount/admin created

[root@master1 ~]#

[root@master1 ~]#kubectl get secret admin-token-sbh8v -o jsonpath={.data.token} -n kubernetes-dashboard |base64 -d

eyJhbGciOiJSUzI1NiIsImtpZCI6Im9hOERROHhuWTRSeVFweG1kdnpXSTRNNXg3bDZ0ZkRDVWNzN0l5Z0haT1UifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi10b2tlbi1zYmg4diIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJhZG1pbiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjYwMDVmZjc5LWJlYzItNDE5MC1iMmNmLWMwOGVhNDRmZTVmMCIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDphZG1pbiJ9.FyxLcGjtxI5Asl07Z21FzAFFcgwVFI7zc-2ITU6uQV3dzQaUNEl642MyIkhkEvdqd6He0eKgR79xm1ly9PL0exD2YUVFfeCnt-M-LiJwM59YGEQfQHYcku6ozikyQ7YeooV2bQ6EsAsyseVcCrHfa4ZXjmBb9L5rRX9Kds49yVsVFWbgbhe3LzNFMy1wm4fTN4EXPvegmA6mUaMLlLsrQJ2oNokx9TYuifIXjQDATZMTFc-YQMZahAbT-rUz8KccOt1O59NebitAHW0YKVFTkxbvBQwghe_yf25_j07LRbygSnHV5OrMEqZXl82AhdnXsvqdjjes6AxejGuDtwiiyw[root@master1 ~]#

# 会生成一串很长的base64后的字符串

然后用上面的 base64 解码后的字符串作为 token 登录 Dashboard 即可,新版本还新增了一个暗黑模式:

5、清理

如果你的集群安装过程中遇到了其他问题,我们可以使用下面的命令来进行重置:

➜ ~ kubeadm reset

➜ ~ ifconfig cni0 down && ip link delete cni0

➜ ~ ifconfig flannel.1 down && ip link delete flannel.1

➜ ~ rm -rf /var/lib/cni/

最终我们就完成了使用 kubeadm 搭建 v1.22.1 版本的 kubernetes 集群、coredns、ipvs、flannel、containerd。

最后记得做下3台虚机的快照,方便后期还原。

需要注意的问题

1.coredns镜像和pause镜像问题

01.coredns镜像拉取失败问题

报错现象:在拉取registry.aliyuncs.com/k8sxio/coredns:v1.8.4时报错,说阿里云的k8s.io仓库里没这个镜像。

因此只能先从官方拉取这个镜像,然后再打上tag即可:

ctr -n k8s.io i pull docker.io/coredns/coredns:1.8.4

ctr -n k8s.io i tag docker.io/coredns/coredns:1.8.4 registry.aliyuncs.com/k8sxio/coredns:v1.8.4

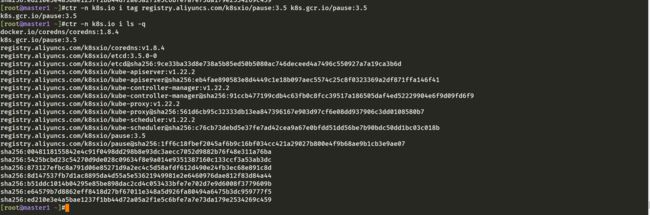

02.从阿里仓库k8sxio仓库拉取的pause镜像无法使用问题

从阿里云仓库下载的3个节点的pause镜像,k8s不识别,还依然去官网拉取pasue急需耐心那个,需要重新打成官网k8s.gcr.io/pause:3.5才可以。

可能是个bug问题,先搁置,或者使用最新版的v1.22.3去测试下。

[root@master1 ~]#systemctl cat kubelet

[root@master1 ~]#cat /var/lib/kubelet/kubeadm-flags.env

可以看到kubelet拉取镜像的仓库地址已经修改成了阿里云k8sxio仓库:

[root@master1 ~]#vim kubeadm.yaml

修改方法:

ctr -n k8s.io i pull registry.aliyuncs.com/k8sxio/pause:3.5

ctr -n k8s.io i tag registry.aliyuncs.com/k8sxio/pause:3.5 k8s.gcr.io/pause:3.5

[root@master1 ~]#ctr -n k8s.io i ls -q

docker.io/coredns/coredns:1.8.4

docker.io/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.2

docker.io/rancher/mirrored-flannelcni-flannel-cni-plugin@sha256:b69fb2dddf176edeb7617b176543f3f33d71482d5d425217f360eca5390911dc

k8s.gcr.io/pause:3.5

quay.io/coreos/flannel:v0.15.0

quay.io/coreos/flannel@sha256:bf24fa829f753d20b4e36c64cf9603120c6ffec9652834953551b3ea455c4630

registry.aliyuncs.com/k8sxio/coredns:v1.8.4

registry.aliyuncs.com/k8sxio/etcd:3.5.0-0

registry.aliyuncs.com/k8sxio/etcd@sha256:9ce33ba33d8e738a5b85ed50b5080ac746deceed4a7496c550927a7a19ca3b6d

registry.aliyuncs.com/k8sxio/kube-apiserver:v1.22.2

registry.aliyuncs.com/k8sxio/kube-apiserver@sha256:eb4fae890583e8d4449c1e18b097aec5574c25c8f0323369a2df871ffa146f41

registry.aliyuncs.com/k8sxio/kube-controller-manager:v1.22.2

registry.aliyuncs.com/k8sxio/kube-controller-manager@sha256:91ccb477199cdb4c63fb0c8fcc39517a186505daf4ed52229904e6f9d09fd6f9

registry.aliyuncs.com/k8sxio/kube-proxy:v1.22.2

registry.aliyuncs.com/k8sxio/kube-proxy@sha256:561d6cb95c32333db13ea847396167e903d97cf6e08dd937906c3dd0108580b7

registry.aliyuncs.com/k8sxio/kube-scheduler:v1.22.2

registry.aliyuncs.com/k8sxio/kube-scheduler@sha256:c76cb73debd5e37fe7ad42cea9a67e0bfdd51dd56be7b90bdc50dd1bc03c018b

registry.aliyuncs.com/k8sxio/pause:3.5

registry.aliyuncs.com/k8sxio/pause@sha256:1ff6c18fbef2045af6b9c16bf034cc421a29027b800e4f9b68ae9b1cb3e9ae07

sha256:0048118155842e4c91f0498dd298b8e93dc3aecc7052d9882b76f48e311a76ba

sha256:09b38f011a29c697679aa10918b7514e22136b50ceb6cf59d13151453fe8b7a0

sha256:5425bcbd23c54270d9de028c09634f8e9a014e9351387160c133ccf3a53ab3dc

sha256:873127efbc8a791d06e85271d9a2ec4c5d58afdf612d490e24fb3ec68e891c8d

sha256:8d147537fb7d1ac8895da4d55a5e53621949981e2e6460976dae812f83d84a44

sha256:98660e6e4c3ae49bf49cd640309f79626c302e1d8292e1971dcc2e6a6b7b8c4d

sha256:b51ddc1014b04295e85be898dac2cd4c053433bfe7e702d7e9d6008f3779609b

sha256:e64579b7d8862eff8418d27bf67011e348a5d926fa80494a6475b3dc959777f5

sha256:ed210e3e4a5bae1237f1bb44d72a05a2f1e5c6bfe7a7e73da179e2534269c459

[root@master1 ~]#

2.有的yaml文件无法下载问题

因网络问题,kube-dashboard.yaml和kube-flannel.yml文件无法下载,大家可直接使用我提供的yaml文件即可。

[root@master1 ~]#ll

total 124124

-rw-r--r-- 1 root root 7569 Oct 31 23:01 kube-dashboard.yaml

-rw-r--r-- 1 root root 5175 Oct 31 08:38 kube-flannel.yml

关于我

我的博客主旨:我希望每一个人拿着我的博客都可以做出实验现象,先把实验做出来,然后再结合理论知识更深层次去理解技术点,这样学习起来才有乐趣和动力。并且,我的博客内容步骤是很完整的,也分享源码和实验用到的软件,希望能和大家一起共同进步!

各位小伙伴在实际操作过程中如有什么疑问,可随时联系本人免费帮您解决问题:

-

个人微信二维码:x2675263825 (舍得), qq:2675263825。

-

个人博客地址:www.onlyonexl.cn

-

个人微信公众号:云原生架构师实战

-

个人csdn

https://blog.csdn.net/weixin_39246554?spm=1010.2135.3001.5421

总结

好了,关于kubeadm方式搭建k8s集群(containerd)实验就到这里了,感谢大家阅读,最后贴上我的美圆photo一张,祝大家生活快乐,每天都过的有意义哦,我们下期见!