COVID-19 Data Visualization

Visualizing Domestic (China) COVID-19 Data

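Contents

- Visualizing Domestic (China) COVID-19 Data
- 1. Fetching the epidemic data and writing it to the database
- Code for fetching the data and writing it to the database
- Analyzing the URL that holds the data
- 2. Reading and visualizing the data
- 3. Results
- 4. Real-time monitoring
- 5. Summary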

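1. Fetching the epidemic data and writing it to the database

For an epidemic visualization, getting the data is perhaps the most important and also the hardest part. Official figures are published on the website of the National Health Commission, but its anti-scraping measures are known to be strict. The data can instead be taken from other sources such as Tencent or Alibaba. This article uses the data published by Tencent.

Code for fetching the data and writing it to the database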

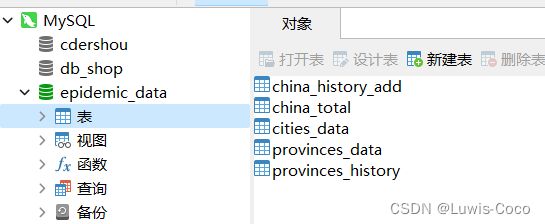

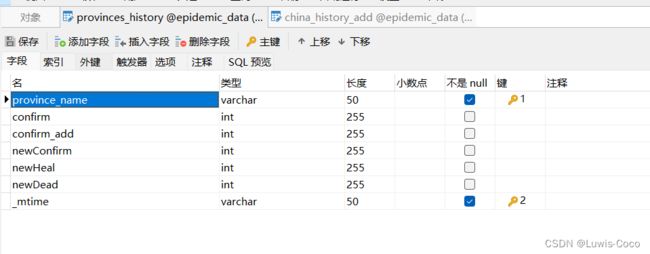

The database is MySQL. Fill in your own database name and password, and create the tables in the database before running the code.
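Because the connection settings differ from machine to machine, one option is to read them from environment variables instead of editing the source. The following is only a sketch: the helper `load_db_config` and the `EPIDEMIC_DB_*` variable names are invented for illustration and are not part of the original code. The defaults mirror the values used in this article.

```python
import os


def load_db_config(env=None):
    """Build keyword arguments for MySQLdb.connect() from environment variables.

    The EPIDEMIC_DB_* names are hypothetical; pick whatever names suit your setup.
    """
    env = os.environ if env is None else env
    return {
        "host": env.get("EPIDEMIC_DB_HOST", "localhost"),
        "user": env.get("EPIDEMIC_DB_USER", "root"),
        "password": env.get("EPIDEMIC_DB_PASSWORD", ""),
        "db": env.get("EPIDEMIC_DB_NAME", "epidemic_data"),
        "charset": "utf8",
    }


if __name__ == "__main__":
    config = load_db_config({"EPIDEMIC_DB_USER": "reader"})
    # Print everything except the password
    print({key: value for key, value in config.items() if key != "password"})
```

The resulting dictionary can be passed on as `MySQLdb.connect(**load_db_config())`.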

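The SQL for creating the tables is shown below. It only creates the tables, so the database itself (epidemic_data) must already exist.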

/*
 Navicat Premium Data Transfer

 Source Server         : MySQL
 Source Server Type    : MySQL
 Source Server Version : 50553
 Source Host           : localhost:3306
 Source Schema         : epidemic_data

 Target Server Type    : MySQL
 Target Server Version : 50553
 File Encoding         : 65001

 Date: 27/09/2022 12:26:05
*/

SET NAMES utf8mb4;
SET FOREIGN_KEY_CHECKS = 0;

-- ----------------------------
-- Table structure for china_history_add
-- ----------------------------
DROP TABLE IF EXISTS `china_history_add`;
CREATE TABLE `china_history_add` (
  `confirm` int(255) NULL DEFAULT NULL,
  `nowConfirm` int(255) NULL DEFAULT NULL,
  `localConfirm` int(255) NULL DEFAULT NULL,
  `date` varchar(255) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL,
  `importedCase` int(255) NULL DEFAULT NULL,
  `heal` int(255) NULL DEFAULT NULL,
  `dead` int(255) NULL DEFAULT NULL,
  UNIQUE INDEX `noRepeat`(`date`) USING BTREE
) ENGINE = MyISAM CHARACTER SET = utf8 COLLATE = utf8_general_ci ROW_FORMAT = Dynamic;

-- ----------------------------
-- Table structure for china_total
-- ----------------------------
DROP TABLE IF EXISTS `china_total`;
CREATE TABLE `china_total` (
  `confirm` int(255) NULL DEFAULT NULL,
  `nowConfirm` int(255) NULL DEFAULT NULL,
  `localWzzAdd` int(255) NULL DEFAULT NULL,
  `localConfirmAdd` int(255) NULL DEFAULT NULL,
  `heal` int(255) NULL DEFAULT NULL,
  `dead` int(255) NULL DEFAULT NULL
) ENGINE = MyISAM CHARACTER SET = utf8 COLLATE = utf8_general_ci ROW_FORMAT = Fixed;

-- ----------------------------
-- Table structure for cities_data
-- ----------------------------
DROP TABLE IF EXISTS `cities_data`;
CREATE TABLE `cities_data` (
  `province_name` varchar(255) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL,
  `confirm` int(255) NULL DEFAULT NULL,
  `nowConfirm` int(255) NULL DEFAULT NULL,
  `local_confirm_add` int(255) NULL DEFAULT NULL,
  `wzz_add` int(255) NULL DEFAULT NULL,
  `heal` int(255) NULL DEFAULT NULL,
  `dead` int(255) NULL DEFAULT NULL,
  `city_name` varchar(255) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL
) ENGINE = MyISAM CHARACTER SET = utf8 COLLATE = utf8_general_ci ROW_FORMAT = Dynamic;

-- ----------------------------
-- Table structure for provinces_data
-- ----------------------------
DROP TABLE IF EXISTS `provinces_data`;
CREATE TABLE `provinces_data` (
  `province_name` varchar(255) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL,
  `confirm` int(255) NULL DEFAULT NULL,
  `nowConfirm` int(255) NULL DEFAULT NULL,
  `local_confirm_add` int(255) NULL DEFAULT NULL,
  `wzz_add` int(255) NULL DEFAULT NULL,
  `heal` int(255) NULL DEFAULT NULL,
  `dead` int(255) NULL DEFAULT NULL
) ENGINE = MyISAM CHARACTER SET = utf8 COLLATE = utf8_general_ci ROW_FORMAT = Dynamic;

-- ----------------------------
-- Table structure for provinces_history
-- ----------------------------
DROP TABLE IF EXISTS `provinces_history`;
CREATE TABLE `provinces_history` (
  `province_name` varchar(50) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,
  `confirm` int(255) NULL DEFAULT NULL,
  `confirm_add` int(255) NULL DEFAULT NULL,
  `newConfirm` int(255) NULL DEFAULT NULL,
  `newHeal` int(255) NULL DEFAULT NULL,
  `newDead` int(255) NULL DEFAULT NULL,
  `_mtime` varchar(50) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,
  PRIMARY KEY (`province_name`, `_mtime`) USING BTREE
) ENGINE = MyISAM CHARACTER SET = utf8 COLLATE = utf8_general_ci ROW_FORMAT = Dynamic;

SET FOREIGN_KEY_CHECKS = 1;
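Two of these tables carry uniqueness constraints: `china_history_add` has the unique index `noRepeat` on `date`, and `provinces_history` uses (`province_name`, `_mtime`) as its primary key. These constraints are what let the collection script run repeatedly without storing the same day twice. The sketch below illustrates the idea with Python's built-in `sqlite3` module as a stand-in for MySQL, an assumption made so the example runs without a server. The table is reduced to two columns and the numbers are invented.

```python
import sqlite3


def insert_daily_rows(conn, rows):
    """Insert (date, confirm) rows, skipping dates that are already stored."""
    # SQLite spells this INSERT OR IGNORE; the MySQL counterpart is INSERT IGNORE.
    conn.executemany(
        "INSERT OR IGNORE INTO china_history_add(date, confirm) VALUES (?, ?)",
        rows,
    )
    conn.commit()


def demo():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE china_history_add (date TEXT, confirm INTEGER)")
    conn.execute("CREATE UNIQUE INDEX noRepeat ON china_history_add(date)")
    insert_daily_rows(conn, [("2022-09-26", 10), ("2022-09-27", 12)])
    # The second run overlaps with the first; the repeated date is ignored.
    insert_daily_rows(conn, [("2022-09-27", 12), ("2022-09-28", 15)])
    count = conn.execute("SELECT COUNT(*) FROM china_history_add").fetchone()[0]
    conn.close()
    return count


if __name__ == "__main__":
    print(demo())
```

Letting the database reject duplicates keeps the Python side simple: the script does not have to look up which days are already stored.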

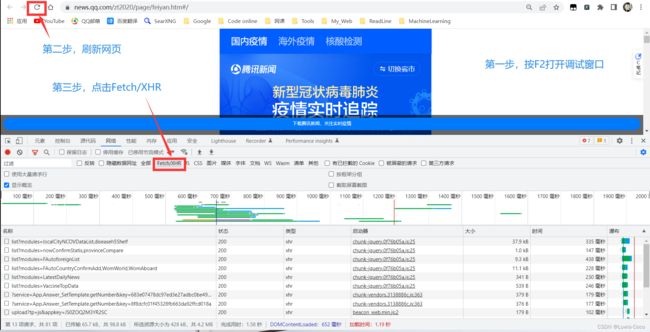

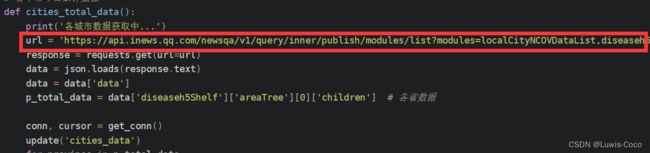

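Analyzing the URL that holds the data

In the browser's developer tools it is easy to see that the first XHR request holds the per-province data. Open its Headers tab and copy the request URL into the code.

The URLs for the remaining data can be found in the same way, so they are not covered again here.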
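To make the shape of that response concrete, here is a minimal sketch that flattens the per-province part of the payload into rows. The key names are the ones the collection code in this article reads. The helper `extract_province_rows` and the hand-written `SAMPLE` payload with its invented numbers are only illustrative. The real response contains many more fields and may have changed since this was written.

```python
def extract_province_rows(payload):
    """Flatten the per-province part of the response into tuples."""
    provinces = payload["data"]["diseaseh5Shelf"]["areaTree"][0]["children"]
    rows = []
    for province in provinces:
        total, today = province["total"], province["today"]
        rows.append((
            province["name"],
            total["confirm"],
            total["nowConfirm"],
            today["local_confirm_add"],
            today["wzz_add"],
            total["heal"],
            total["dead"],
        ))
    return rows


# Hand-written stand-in for the real response; the numbers are invented.
SAMPLE = {
    "data": {
        "diseaseh5Shelf": {
            "areaTree": [{
                "name": "中国",
                "children": [{
                    "name": "北京",
                    "total": {"confirm": 100, "nowConfirm": 5, "heal": 90, "dead": 5},
                    "today": {"local_confirm_add": 2, "wzz_add": 1},
                }],
            }]
        }
    }
}

if __name__ == "__main__":
    print(extract_province_rows(SAMPLE))
```

Keeping the parsing separate from the network request and the database insert makes it possible to check the parsing against a saved response.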

The code that fetches the data and writes it to the database is as follows

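import json

import MySQLdb
import requests


def get_conn():
    # Open a database connection; replace the credentials with your own
    conn = MySQLdb.connect(host='localhost', user='root', password='root', db='epidemic_data', charset='utf8')
    cursor = conn.cursor()
    return conn, cursor


def close(conn, cursor):
    cursor.close()
    conn.close()


def update(table_name):
    conn, cursor = get_conn()
    # Empty the table so that it only ever holds the latest snapshot.
    # table_name is concatenated into the SQL, so only pass fixed, trusted names.
    sql = 'truncate table ' + table_name
    cursor.execute(sql)
    conn.commit()
    close(conn, cursor)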

# Nationwide cumulative data as of today
def china_total_data():
    print('Fetching nationwide data...')
    url = 'https://api.inews.qq.com/newsqa/v1/query/inner/publish/modules/list?modules=localCityNCOVDataList,diseaseh5Shelf'
    response = requests.get(url=url)
    data = json.loads(response.text)
    data = data['data']
    total_data = data['diseaseh5Shelf']['chinaTotal']  # nationwide figures
    confirm = total_data['confirm']
    nowConfirm = total_data['nowConfirm']
    heal = total_data['heal']
    dead = total_data['dead']
    localWzzAdd = total_data['localWzzAdd']
    localConfirmAdd = total_data['localConfirmAdd']
    # Data extracted; write it to the database
    conn, cursor = get_conn()
    update('china_total')
    sql = 'insert into china_total(confirm,nowConfirm,heal,dead,localWzzAdd,localConfirmAdd) values (%s, %s, %s, %s, %s, %s)'
    cursor.execute(sql, args=[confirm, nowConfirm, heal, dead, localWzzAdd, localConfirmAdd])
    conn.commit()
    close(conn, cursor)
    print('Nationwide cumulative data fetched', end='\n\n')

# Cumulative data as of today for each province (or municipality)
def province_total_data():
    print('Fetching data for each province (municipality)...')
    url = 'https://api.inews.qq.com/newsqa/v1/query/inner/publish/modules/list?modules=localCityNCOVDataList,diseaseh5Shelf'
    response = requests.get(url=url)
    data = json.loads(response.text)
    data = data['data']
    p_total_data = data['diseaseh5Shelf']['areaTree'][0]['children']  # per-province data
    conn, cursor = get_conn()
    update('provinces_data')
    for province in p_total_data:
        province_name = province['name']
        total_data = province['total']
        today_data = province['today']
        confirm = total_data['confirm']
        nowConfirm = total_data['nowConfirm']
        local_confirm_add = today_data['local_confirm_add']
        wzz_add = today_data['wzz_add']
        heal = total_data['heal']
        dead = total_data['dead']
        # Data extracted; write it to the database
        sql = 'insert into provinces_data(province_name,confirm,nowConfirm,local_confirm_add,wzz_add,heal,dead) values (%s, %s, %s, %s, %s, %s,%s)'
        cursor.execute(sql, args=[province_name, confirm, nowConfirm, local_confirm_add, wzz_add, heal, dead])
    conn.commit()
    close(conn, cursor)
    print('Cumulative data for each province (municipality) fetched', end='\n\n')

# Cumulative data as of today for each city
def cities_total_data():
    print('Fetching city data...')
    url = 'https://api.inews.qq.com/newsqa/v1/query/inner/publish/modules/list?modules=localCityNCOVDataList,diseaseh5Shelf'
    response = requests.get(url=url)
    data = json.loads(response.text)
    data = data['data']
    p_total_data = data['diseaseh5Shelf']['areaTree'][0]['children']  # per-province data
    conn, cursor = get_conn()
    update('cities_data')
    for province in p_total_data:
        province_name = province['name']
        for city in province['children']:
            city_name = city['name']
            total_data = city['total']
            today_data = city['today']
            confirm = total_data['confirm']
            nowConfirm = total_data['nowConfirm']
            local_confirm_add = today_data['local_confirm_add']
            wzz_add = today_data['wzz_add']
            heal = total_data['heal']
            dead = total_data['dead']
            # Data extracted; write it to the database
            sql = 'insert into cities_data(province_name,confirm,nowConfirm,local_confirm_add,wzz_add,heal,dead,city_name) values (%s, %s,%s, %s, %s, %s, %s,%s)'
            cursor.execute(sql, args=[province_name, confirm, nowConfirm, local_confirm_add, wzz_add, heal, dead, city_name])
    conn.commit()
    close(conn, cursor)
    print('City data fetched', end='\n\n')

# Nationwide daily history
def china_history_daily_data():
    print('Fetching nationwide daily history...')
    url = 'https://api.inews.qq.com/newsqa/v1/query/inner/publish/modules/list?modules=chinaDayListNew,chinaDayAddListNew&limit=30'
    response = requests.get(url=url)
    data = json.loads(response.text)
    data = data['data']
    conn, cursor = get_conn()
    for daily_data in data['chinaDayListNew']:
        confirm = daily_data['confirm']
        nowConfirm = daily_data['nowConfirm']
        heal = daily_data['heal']
        dead = daily_data['dead']
        localConfirm = daily_data['localConfirm']
        importedCase = daily_data['importedCase']
        # 'y' holds the year and 'date' holds 'MM.DD'; combine them into 'YYYY-MM-DD'
        date = daily_data['y'] + '-' + daily_data['date'].replace('.', '-')
        # Data extracted; write it to the database
        sql = 'insert into china_history_add(confirm, nowConfirm, localConfirm,heal, dead, importedCase, date) values (%s, %s, %s, %s, %s, %s, %s)'
        try:
            cursor.execute(sql, args=[confirm, nowConfirm, localConfirm, heal, dead, importedCase, date])
        except MySQLdb.IntegrityError:
            # The date is already stored (unique index noRepeat); skip it
            pass
    conn.commit()
    close(conn, cursor)
    print('Nationwide daily history fetched', end='\n\n')

# Daily history for each province
def provinces_history_data():
    print('Fetching daily history for each province...')
    # Administrative division codes (adCode) of the 34 province-level regions
    url_keys = ['110000', '120000', '130000', '140000', '150000', '210000', '220000', '230000', '310000', '320000', '330000', '340000', '350000', '360000', '370000', '410000', '420000', '430000', '440000', '450000', '460000', '500000', '510000', '520000', '530000', '540000', '610000', '620000', '630000', '640000', '650000', '710000', '810000', '820000']
    url = 'https://api.inews.qq.com/newsqa/v1/query/pubished/daily/list?adCode=%s&limit=30'
    conn, cursor = get_conn()
    i = 0
    provinces_count = 34
    for url_key in url_keys:
        response = requests.get(url=url % url_key)
        data = json.loads(response.text)
        data = data['data']
        for date_data in data:
            province_name = date_data['province']
            confirm = date_data['confirm']
            confirm_add = date_data['confirm_add']
            newConfirm = date_data['newConfirm']
            newHeal = date_data['newHeal']
            newDead = date_data['newDead']
            _mtime = date_data['_mtime']
            # Data extracted; write it to the database
            sql = 'insert into provinces_history(province_name, confirm, confirm_add,newConfirm, newHeal, newDead, _mtime) values (%s, %s, %s, %s, %s, %s, %s)'
            try:
                cursor.execute(sql, args=[province_name, confirm, confirm_add, newConfirm, newHeal, newDead, _mtime])
            except MySQLdb.IntegrityError:
                # This (province_name, _mtime) pair is already stored; skip it
                pass
        conn.commit()
        i += 1
        # Redraw the progress bar on the same console line
        print("\r[{}{}]{:^3.0f}% ".format('■■' * i, ' ' * (provinces_count - i), (i / provinces_count) * 100), end="")
    close(conn, cursor)
    print()
    print('Daily history for each province fetched')

if __name__ == '__main__':
    china_total_data()
    province_total_data()
    cities_total_data()
    china_history_daily_data()
    provinces_history_data()

After running the code above, the data can be queried in the database.
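One quick way to confirm that every table was filled is to count its rows. The helper below is a sketch rather than part of the original project: `row_counts` is a made-up name, and the demo uses the built-in `sqlite3` module with empty stand-in tables so that it runs without a MySQL server. The function itself only relies on the standard DB-API cursor methods `execute` and `fetchone`.

```python
import sqlite3

# The five tables created by the schema in this article
TABLES = ("china_total", "china_history_add", "provinces_data",
          "cities_data", "provinces_history")


def row_counts(cursor, tables=TABLES):
    """Return {table_name: row_count} for the known tables."""
    counts = {}
    for table in tables:
        # Table names cannot be bound as query parameters, so check them
        # against the fixed list before building the statement.
        if table not in TABLES:
            raise ValueError("unexpected table name: {!r}".format(table))
        cursor.execute("SELECT COUNT(*) FROM " + table)
        counts[table] = cursor.fetchone()[0]
    return counts


def demo():
    conn = sqlite3.connect(":memory:")
    cursor = conn.cursor()
    for table in TABLES:
        cursor.execute("CREATE TABLE " + table + " (confirm INTEGER)")
    cursor.execute("INSERT INTO china_total(confirm) VALUES (1)")
    counts = row_counts(cursor)
    conn.close()
    return counts


if __name__ == "__main__":
    print(demo())
```

With the article's own helpers, the equivalent call would be `conn, cursor = get_conn()` followed by `row_counts(cursor)`.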

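2. Reading and visualizing the data

Remember to install the third-party libraries first: Flask, pyecharts, and MySQLdb (provided by the mysqlclient package).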
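A small check such as the following can report which of the required libraries are still missing before the scripts are started. It is a sketch under the assumption that the packages are installed with pip under the names shown. `missing_packages` is a helper invented here, and the mapping lists the module names imported in this article together with the pip package that provides each one.

```python
from importlib.util import find_spec

# Module name as imported in the code -> package name to install with pip
REQUIRED = {
    "requests": "requests",
    "flask": "flask",
    "pyecharts": "pyecharts",
    "MySQLdb": "mysqlclient",
}


def missing_packages(required=REQUIRED):
    """Return the pip package names whose modules cannot be imported."""
    return sorted(
        package for module, package in required.items()
        if find_spec(module) is None
    )


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("pip install " + " ".join(missing))
    else:
        print("all required libraries are installed")
```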

import datetime
from typing import List

from flask import Flask, render_template
import MySQLdb
import pyecharts.options as opts
from pyecharts.globals import ThemeType
from pyecharts.commons.utils import JsCode
from pyecharts.charts import Timeline, Grid, Bar, Map, Pie, Line

app = Flask(__name__)


def get_conn():
    conn = MySQLdb.connect(host='localhost', user='root', password='root', db='epidemic_data', charset='utf8')
    cursor = conn.cursor()
    return conn, cursor


def close(conn, cursor):
    cursor.close()
    conn.close()

# Provinces shown on the map. The Chinese names are kept because they must match
# province_name in the database and the region names of the map.
PROVINCE_NAMES = ['广东', '江苏', '山东', '浙江', '河南', '四川', '湖北', '湖南',
                  '河北', '福建', '上海', '北京', '安徽', '辽宁', '陕西', '江西',
                  '重庆', '广西', '天津', '云南', '内蒙古', '山西', '黑龙江', '吉林',
                  '贵州', '新疆', '甘肃', '海南', '宁夏', '青海', '西藏']


def read_data():
    conn, cursor = get_conn()
    temp_date = datetime.datetime.now()
    data = []
    time_list = []
    total_num = []
    # Named max_num/min_num so the built-in max() and min() are not shadowed
    max_num = 0
    min_num = 10 ** 10
    for i in range(0, 25):
        mtime = temp_date + datetime.timedelta(days=-i)  # the date i days before today
        time_list.append(mtime.strftime("%m-%d"))
        # Pass the date prefix as a query parameter rather than concatenating it into the SQL
        sql = "select province_name,newConfirm,confirm from provinces_history where _mtime LIKE %s"
        cursor.execute(sql, (mtime.strftime("%Y-%m-%d") + "%",))
        datas = cursor.fetchall()
        # Each entry is [new confirmed, cumulative confirmed, name], defaulting to 0
        province_datas = [{'name': name, 'value': [0, 0, name]} for name in PROVINCE_NAMES]
        for p_data in province_datas:
            name = p_data['name']
            for p in datas:
                if min_num > p[1]:
                    min_num = p[1]
                if max_num < p[1] and p[0] not in ('台湾', '香港', '澳门'):
                    max_num = p[1]
                if name == p[0]:
                    p_data['value'][0] = p[1]
                    p_data['value'][1] = p[2]
                    break
        data.append({'time': mtime.strftime("%m-%d"), 'data': province_datas})
        sql2 = "select localConfirm from china_history_add where date=%s"
        cursor.execute(sql2, (mtime.strftime("%Y-%m-%d"),))
        try:
            total_num.append(cursor.fetchall()[0][0])
        except IndexError:
            # No nationwide row for this date yet
            total_num.append(0)
    close(conn, cursor)
    # Reverse so that both lists run from the oldest day to today
    time_list = time_list[::-1]
    total_num = total_num[::-1]
    return data, time_list, total_num, max_num, min_num


data, time_list, total_num, maxNum, minNum = read_data()

def get_year_chart(year: str):
    # Despite its name, `year` holds one day in "MM-DD" form
    map_data = [
        [[x["name"], x["value"]] for x in d["data"]] for d in data if d["time"] == year
    ][0]
    min_data, max_data = (minNum, maxNum)
    # Only the selected day gets a value, so the line chart can mark that point
    data_mark: List = []
    i = 0
    for x in time_list:
        if x == year:
            data_mark.append(total_num[i])
        else:
            data_mark.append("")
        i = i + 1

    map_chart = (
        Map()
        .add(
            series_name="",
            data_pair=map_data,
            zoom=1,
            center=[119.5, 34.5],
            is_map_symbol_show=False,
            itemstyle_opts={
                "normal": {"areaColor": "#323c48", "borderColor": "#404a59"},
                "emphasis": {
                    # The original passed the Timeline class here; a boolean is what is meant
                    "label": {"show": True},
                    "areaColor": "rgba(255,255,255, 0.5)",
                },
            },
        )
        .set_global_opts(
            title_opts=opts.TitleOpts(
                title=str(int(year[:2])) + "/" + str(int(year[3:])) + " Confirmed cases by region in China (last 25 days), data source: Tencent",
                subtitle="",
                pos_left="center",
                pos_top="top",
                title_textstyle_opts=opts.TextStyleOpts(
                    font_size=25, color="rgba(255,255,255, 0.9)"
                ),
            ),
            tooltip_opts=opts.TooltipOpts(
                is_show=True,
                formatter=JsCode(
                    """function(params) {
                    if ('value' in params.data) {
                        return params.data.value[2] + ': ' + params.data.value[0];
                    }
                }"""
                ),
            ),
            visualmap_opts=opts.VisualMapOpts(
                is_calculable=True,
                dimension=0,
                pos_left="30",
                pos_top="center",
                range_text=["High", "Low"],
                range_color=["lightskyblue", "yellow", "orangered"],
                textstyle_opts=opts.TextStyleOpts(color="#ddd"),
                min_=min_data,
                max_=max_data,
                is_piecewise=True
            ),
        )
    )

    line_chart = (
        Line()
        .add_xaxis(time_list)
        .add_yaxis("", total_num)
        .add_yaxis(
            "",
            data_mark,
            markpoint_opts=opts.MarkPointOpts(data=[opts.MarkPointItem(type_="max")]),
        )
        .set_series_opts(label_opts=opts.LabelOpts(is_show=False))
        .set_global_opts(
            title_opts=opts.TitleOpts(
                title="Confirmed cases nationwide (last 25 days)", pos_left="72%", pos_top="5%"
            )
        )
    )

    bar_x_data = [x[0] for x in map_data]
    bar_y_data = [{"name": x[0], "value": x[1][0]} for x in map_data]
    bar = (
        Bar()
        .add_xaxis(xaxis_data=bar_x_data)
        .add_yaxis(
            series_name="",
            y_axis=bar_y_data,
            label_opts=opts.LabelOpts(
                is_show=True, position="right", formatter="{b} : {c}"
            ),
        )
        .reversal_axis()
        .set_global_opts(
            xaxis_opts=opts.AxisOpts(
                max_=maxNum, axislabel_opts=opts.LabelOpts(is_show=False)
            ),
            yaxis_opts=opts.AxisOpts(axislabel_opts=opts.LabelOpts(is_show=False)),
            tooltip_opts=opts.TooltipOpts(is_show=False),
            visualmap_opts=opts.VisualMapOpts(
                is_calculable=True,
                dimension=0,
                pos_left="10",
                pos_top="top",
                range_text=["High", "Low"],
                range_color=["lightskyblue", "yellow", "orangered"],
                textstyle_opts=opts.TextStyleOpts(color="#ddd"),
                min_=min_data,
                max_=max_data,
            ),
        )
    )

    pie_data = [[x[0], x[1][0]] for x in map_data]
    pie = (
        Pie()
        .add(
            series_name="",
            data_pair=pie_data,
            radius=["15%", "35%"],
            center=["80%", "82%"],
            itemstyle_opts=opts.ItemStyleOpts(
                border_width=1, border_color="rgba(0,0,0,0.3)"
            ),
        )
        .set_global_opts(
            tooltip_opts=opts.TooltipOpts(is_show=True, formatter="{b} {d}%"),
            legend_opts=opts.LegendOpts(is_show=False),
        )
    )

    # Lay out the four charts on one canvas
    grid_chart = (
        Grid()
        .add(
            bar,
            grid_opts=opts.GridOpts(
                pos_left="10", pos_right="45%", pos_top="50%", pos_bottom="5"
            ),
        )
        .add(
            line_chart,
            grid_opts=opts.GridOpts(
                pos_left="65%", pos_right="80", pos_top="10%", pos_bottom="50%"
            ),
        )
        .add(pie, grid_opts=opts.GridOpts(pos_left="45%", pos_top="60%"))
        .add(map_chart, grid_opts=opts.GridOpts())
    )
    return grid_chart

@app.route('/')
def fun():
    timeline = Timeline(
        init_opts=opts.InitOpts(width="2000px", height="1000px", theme=ThemeType.DARK)
    )
    # One frame per day, oldest first
    for y in time_list:
        g = get_year_chart(year=y)
        timeline.add(g, time_point=str(y))
    timeline.add_schema(
        orient="vertical",
        is_auto_play=True,
        is_inverse=True,
        play_interval=5000,
        pos_left="null",
        pos_right="5",
        pos_top="20",
        pos_bottom="20",
        width="60",
        label_opts=opts.LabelOpts(is_show=True, color="#fff"),
    )
    # The templates directory must already exist next to this script
    timeline.render('./templates/charts.html')
    return render_template('charts.html')


if __name__ == "__main__":
    app.run()
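`read_data` walks back over the last 25 days and matches `_mtime` by its date prefix. The date window can be computed separately from the database access, which makes it easy to check. The sketch below is an illustration, not the article's code: `recent_days` is a hypothetical helper that returns the chart label and the LIKE pattern for each day, oldest first, and `QUERY` shows how such a pattern could be bound to a `%s` placeholder so that quoting is left to the driver.

```python
import datetime


def recent_days(today, days=25):
    """Return (label, like_pattern) pairs for the last `days` days, oldest first."""
    window = []
    for offset in range(days - 1, -1, -1):
        day = today - datetime.timedelta(days=offset)
        window.append((day.strftime("%m-%d"), day.strftime("%Y-%m-%d") + "%"))
    return window


# With MySQLdb the pattern would be bound as a parameter, for example:
#     cursor.execute(QUERY, (pattern,))
QUERY = ("select province_name, newConfirm, confirm "
         "from provinces_history where _mtime like %s")

if __name__ == "__main__":
    for label, pattern in recent_days(datetime.date(2022, 9, 27), days=3):
        print(label, pattern)
```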

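3. Results

The result is not especially pretty. You can pick whichever charts you like from the pyecharts website, copy their code, and adapt it with a few small changes.

4. Real-time monitoring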

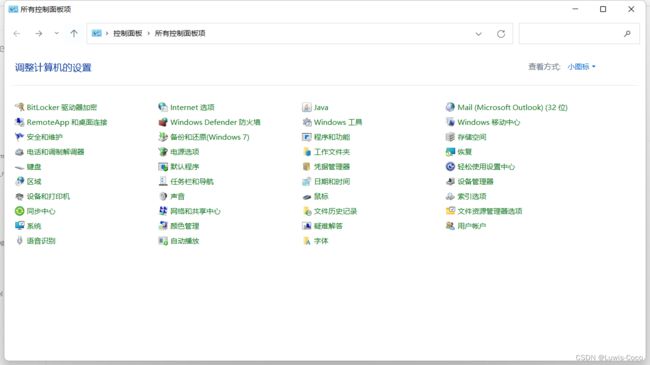

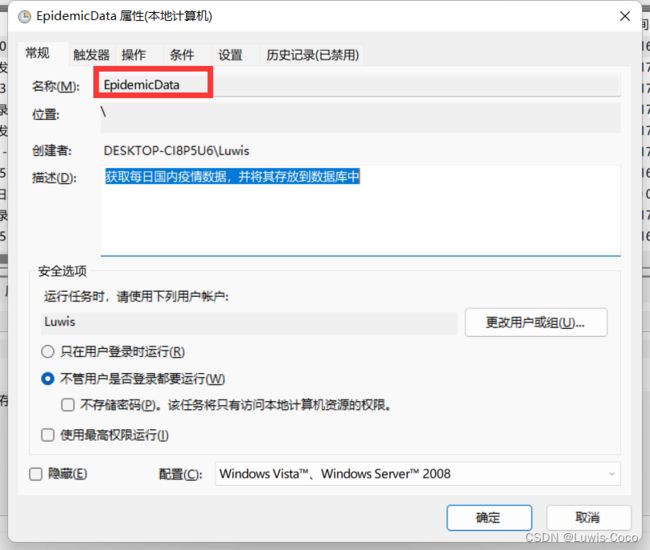

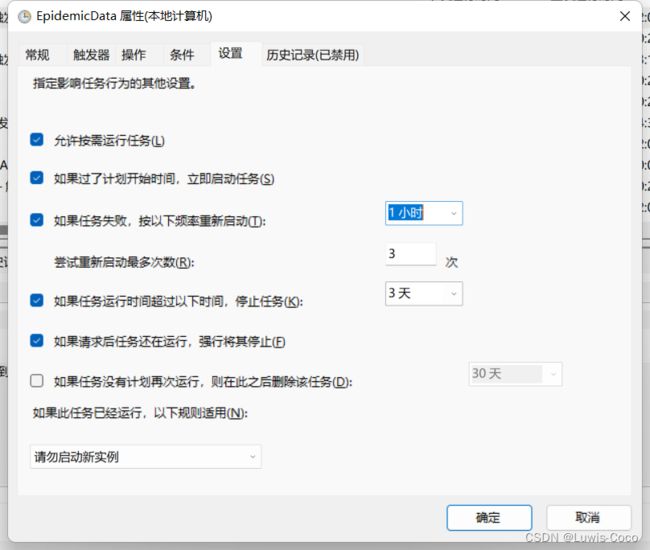

Search for "Scheduled Tasks" in the Control Panel and create a task there.

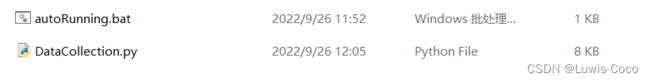

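In the same directory as the data-collection script, create a .bat file (renaming a .txt file's extension to .bat is enough) with the following content:

python <name of the data-collection script>.py (in my case, python DataCollection.py).

Note: the file name must not contain Chinese characters, otherwise the scheduled task will not run!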
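The batch file can also be generated with a few lines of Python, which makes the file-name rule explicit. This is a sketch under some assumptions: `bat_content` is a made-up helper, and the extra `cd /d "%~dp0"` line (which switches to the directory containing the batch file) is an addition that is not in the original instructions. It is there so that `python` finds the script even when the task starts in a different working directory.

```python
def bat_content(script_name):
    """Return the text of a .bat file that runs script_name with python."""
    # Mirror the note above: refuse names containing non-ASCII characters
    if not script_name.isascii():
        raise ValueError("the script file name should contain only ASCII characters")
    # %~dp0 expands to the drive and directory of the batch file itself
    return 'cd /d "%~dp0"\r\npython "{}"\r\n'.format(script_name)


if __name__ == "__main__":
    print(bat_content("DataCollection.py"))
```

To save the result, open the target file with `newline=""` so the `\r\n` line endings are written unchanged.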

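That is how to keep the data up to date automatically: the data is as fresh as the interval at which the task is scheduled.

The visualization code can be scheduled in the same way, after which the page can be opened by entering the IP address in a browser. Note that Flask's app.run() listens on 127.0.0.1 and port 5000 by default, so to reach the page from another machine the server has to be started with host='0.0.0.0'.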

5. Summary

The code still has many shortcomings. Feedback and discussion are welcome.