Transforming the Chinese Vocabulary of LLaMA Models

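Among today's open-source models, LLaMA is undoubtedly a shining star ⭐️, but compared with Chinese-developed large models such as ChatGLM and BaiChuan, its support for Chinese is weak. The original LLaMA vocabulary contains 32K tokens, of which only a few hundred are Chinese. Most Chinese characters therefore have no token of their own and are split into several smaller pieces, which makes encoding and decoding Chinese text inefficient.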
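To make the inefficiency concrete, the sketch below shows what byte fallback does to a character that is missing from the vocabulary: it is emitted as one piece per UTF-8 byte, so a typical Chinese character costs three tokens. The helper name `byte_fallback_pieces` is hypothetical, and the sketch assumes every character in the input is out of vocabulary.

```python
def byte_fallback_pieces(text):
    """Return the byte-level pieces a byte-fallback tokenizer would emit
    for text whose characters are all missing from the vocabulary."""
    # Each UTF-8 byte becomes one piece written as <0xNN>.
    return [f"<0x{b:02X}>" for b in text.encode("utf-8")]


if __name__ == "__main__":
    pieces = byte_fallback_pieces("中")
    print(pieces, len(pieces))
```

A dedicated token for the same character would cost one id instead of three, which is the motivation for extending the vocabulary.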

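To use LLaMA-family models for Chinese, the vocabulary needs to be extended: train a new vocabulary on Chinese text with the sentencepiece tool, then merge it with the original vocabulary to obtain the final one.

This article splits the vocabulary expansion into four steps: training data preparation, vocabulary training, vocabulary merging, and vocabulary testing.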

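Training data preparation

The training text used here is the novel 天龙八部 (Demi-Gods and Semi-Devils) from MedicalGPT [1].

The data is a txt file in which each line of text is one sample.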
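Before training, it can help to confirm that the corpus really holds one sample per line and that lines fit within the `max_sentence_length` byte limit passed to the trainer (2048 in this article). A minimal sketch, assuming the lines have been read into memory; `summarize_corpus` is a hypothetical helper, not part of SentencePiece.

```python
def summarize_corpus(lines, max_bytes=2048):
    """Count non-empty samples and how many exceed the byte limit."""
    samples = [ln.strip() for ln in lines if ln.strip()]
    sizes = [len(s.encode("utf-8")) for s in samples]  # length in UTF-8 bytes
    return {
        "samples": len(samples),
        "too_long": sum(1 for n in sizes if n > max_bytes),
        "max_bytes_seen": max(sizes, default=0),
    }


if __name__ == "__main__":
    demo = ["第一行文本\n", "\n", "abc\n"]
    print(summarize_corpus(demo))
```

For the real corpus, the same function can be called on an open file handle, for example `summarize_corpus(open('tianlongbabu.txt', encoding='utf-8'))`.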

Vocabulary training code

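import sentencepiece as spm

# Train a BPE vocabulary on the novel.
# Writes bpe_llama.model and bpe_llama.vocab to the working directory.
spm.SentencePieceTrainer.train(
    input='tianlongbabu.txt',            # one sample per line
    model_prefix='bpe_llama',            # prefix of the output files
    shuffle_input_sentence=False,
    train_extremely_large_corpus=True,
    max_sentence_length=2048,            # measured in bytes
    pad_id=3,                            # enable the PAD token with id 3
    model_type='BPE',
    vocab_size=5000,
    split_digits=True,                   # every digit becomes its own piece
    split_by_unicode_script=True,
    byte_fallback=True,                  # unknown characters fall back to UTF-8 bytes
    allow_whitespace_only_pieces=True,
    remove_extra_whitespaces=False,
    normalization_rule_name="nfkc",
)

print('Training complete')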
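Training writes `bpe_llama.model` together with a human-readable `bpe_llama.vocab`, in which each line holds a piece and its score separated by a tab. The sketch below parses such content and counts how many pieces contain Chinese characters. `parse_vocab`, `has_cjk` and `count_cjk_pieces` are hypothetical helpers, and the sample lines are made up for illustration rather than taken from a real training run.

```python
def parse_vocab(text):
    """Parse .vocab content into a list of (piece, score) tuples."""
    entries = []
    for line in text.splitlines():
        if not line:
            continue
        # Split on the last tab so the piece itself may contain any character.
        piece, _, score = line.rpartition("\t")
        entries.append((piece, float(score)))
    return entries


def has_cjk(piece):
    """True if the piece contains a CJK Unified Ideograph (U+4E00 to U+9FFF)."""
    return any("\u4e00" <= ch <= "\u9fff" for ch in piece)


def count_cjk_pieces(entries):
    """Number of pieces that contain at least one Chinese character."""
    return sum(1 for piece, _ in entries if has_cjk(piece))


if __name__ == "__main__":
    sample = "<unk>\t0\n<s>\t0\n▁段誉\t-1\n说道\t-2\n"  # illustrative content
    entries = parse_vocab(sample)
    print(len(entries), count_cjk_pieces(entries))
```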

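SentencePiece training parameters:

Usage: …/build/src/spm_train [options] files

- --input (comma-separated list of input sentences); type: string; default: ""
- --input_format (input format; supported formats are text or tsv); type: string; default: ""
- --model_prefix (output model prefix); type: string; default: ""
- --model_type (model algorithm: unigram, bpe, word or char); type: string; default: "unigram"
- --vocab_size (vocabulary size); type: int32; default: 8000
- --accept_language (comma-separated list of languages this model can accept); type: string; default: ""
- --self_test_sample_size (size of the self-test samples); type: int32; default: 0
- --character_coverage (character coverage used to determine the minimum symbols); type: double; default: 0.9995
- --input_sentence_size (maximum number of sentences the trainer loads); type: uint64_t; default: 0
- --shuffle_input_sentence (randomly sample input sentences in advance; valid when --input_sentence_size > 0); type: bool; default: true
- --seed_sentencepiece_size (size of the seed sentencepieces); type: int32; default: 1000000
- --shrinking_factor (keep the top shrinking_factor pieces with respect to the loss); type: double; default: 0.75
- --num_threads (number of threads for training); type: int32; default: 16
- --num_sub_iterations (number of EM sub-iterations); type: int32; default: 2
- --max_sentencepiece_length (maximum length of a sentence piece); type: int32; default: 16
- --max_sentence_length (maximum length of a sentence, in bytes); type: int32; default: 4192
- --split_by_unicode_script (use Unicode script to split sentence pieces); type: bool; default: true
- --split_by_number (split tokens by numbers (0-9)); type: bool; default: true
- --split_by_whitespace (use whitespace to split sentence pieces); type: bool; default: true
- --split_digits (split all digits (0-9) into separate pieces); type: bool; default: false
- --treat_whitespace_as_suffix (treat the whitespace marker as a suffix instead of a prefix); type: bool; default: false
- --allow_whitespace_only_pieces (allow pieces that contain only (consecutive) whitespace tokens); type: bool; default: false
- --control_symbols (comma-separated list of control symbols); type: string; default: ""
- --control_symbols_file (load control symbols from a file); type: string; default: ""
- --user_defined_symbols (comma-separated list of user-defined symbols); type: string; default: ""
- --user_defined_symbols_file (load user_defined_symbols from a file); type: string; default: ""
- --required_chars (UTF-8 characters that are always included in the character set regardless of --character_coverage); type: string; default: ""
- --required_chars_file (load required_chars from a file); type: string; default: ""
- --byte_fallback (decompose unknown pieces into UTF-8 byte pieces); type: bool; default: false
- --vocabulary_output_piece_score (write the score in the vocab file); type: bool; default: true
- --normalization_rule_name (normalization rule name; choose from nfkc or identity); type: string; default: "nmt_nfkc"
- --normalization_rule_tsv (normalization rule TSV file); type: string; default: ""
- --denormalization_rule_tsv (denormalization rule TSV file); type: string; default: ""
- --add_dummy_prefix (add a dummy whitespace at the beginning of the text); type: bool; default: true
- --remove_extra_whitespaces (remove leading, trailing and duplicate internal whitespace); type: bool; default: true
- --hard_vocab_limit (if set to false, --vocab_size is treated as a soft limit); type: bool; default: true
- --use_all_vocab (if set to true, use all tokens as vocab; valid for word/char models); type: bool; default: false
- --unk_id (override the UNK (<unk>) id); type: int32; default: 0
- --bos_id (override the BOS (<s>) id; set to -1 to disable BOS); type: int32; default: 1
- --eos_id (override the EOS (</s>) id; set to -1 to disable EOS); type: int32; default: 2
- --pad_id (override the PAD (<pad>) id; set to -1 to disable PAD); type: int32; default: -1
- --unk_piece (override the UNK (<unk>) piece); type: string; default: "<unk>"
- --bos_piece (override the BOS (<s>) piece); type: string; default: "<s>"
- --eos_piece (override the EOS (</s>) piece); type: string; default: "</s>"
- --pad_piece (override the PAD (<pad>) piece); type: string; default: "<pad>"
- --unk_surface (dummy surface string for <unk>; in decoding, <unk> is decoded to unk_surface); type: string; default: " ⁇ "
- --train_extremely_large_corpus (increase the bit depth for unigram tokenization); type: bool; default: false
- --random_seed (seed value for the random generator); type: uint32; default: 4294967295
- --enable_differential_privacy (whether to add differential privacy during training; currently supported only by the UNIGRAM model); type: bool; default: false
- --differential_privacy_noise_level (amount of noise to add for DP); type: float; default: 0
- --differential_privacy_clipping_threshold (threshold for clipping the counts for DP); type: uint64_t; default: 0
- --help (show help); type: bool; default: false
- --version (show version); type: bool; default: false
- --minloglevel (messages logged at a lower level than this are not actually logged anywhere); type: int; default: 0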
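The command-line flags listed above correspond to the keyword arguments used in the Python training call, and `spm.SentencePieceTrainer.train` also accepts a single flag string. A small sketch of that mapping, assuming booleans are written as `true`/`false`; `to_flag_string` is a hypothetical helper, not part of SentencePiece.

```python
def to_flag_string(options):
    """Render a dict of trainer options as space-separated --key=value flags."""
    parts = []
    for key, value in options.items():
        if isinstance(value, bool):
            # The CLI expects lowercase true/false rather than Python's True/False.
            value = "true" if value else "false"
        parts.append(f"--{key}={value}")
    return " ".join(parts)


if __name__ == "__main__":
    opts = {
        "input": "tianlongbabu.txt",
        "model_prefix": "bpe_llama",
        "vocab_size": 5000,
        "byte_fallback": True,
    }
    print(to_flag_string(opts))
```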

Test

import sentencepiece as spm

# Load the newly trained model and tokenize a sentence from the training text.
sp = spm.SentencePieceProcessor()
sp.load("bpe_llama.model")

text = "这老者姓左,名叫子穆,是“无量剑”东宗的掌门。那道姑姓辛,道号双清,是“无量剑”西宗掌门。"
print(sp.encode_as_pieces(text))  # subword pieces
print(sp.encode_as_ids(text))     # corresponding token ids
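SentencePiece pieces use `▁` (U+2581) to mark where a space preceded the piece. The sketch below turns a list of pieces back into text under that convention. It is a simplification that ignores byte-fallback pieces such as `<0xE4>`; in the real library `sp.decode_pieces` does the full job. `pieces_to_text` is a hypothetical helper and the sample pieces are illustrative, not actual model output.

```python
def pieces_to_text(pieces):
    """Join pieces and convert the U+2581 marker back into spaces."""
    text = "".join(pieces).replace("\u2581", " ")
    # Drop the single leading space introduced by the dummy prefix.
    return text[1:] if text.startswith(" ") else text


if __name__ == "__main__":
    print(pieces_to_text(["▁这", "老者", "姓", "左"]))
    print(pieces_to_text(["▁hello", "▁world"]))
```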

Vocabulary merging code

import os

# Use the pure-Python protobuf implementation; set this before protobuf is imported.
os.environ["PROTOCOL_BUFFERS_PYTHON_IMPLEMENTATION"] = "python"

from transformers import LlamaTokenizer
from sentencepiece import sentencepiece_model_pb2 as sp_pb2_model
import sentencepiece as spm

llama_tokenizer_dir = 'llama-2-7b-bin'
chinese_sp_model_file = 'bpe_llama.model'

# Load both tokenizers
llama_tokenizer = LlamaTokenizer.from_pretrained(llama_tokenizer_dir)
chinese_sp_model = spm.SentencePieceProcessor()
chinese_sp_model.Load(chinese_sp_model_file)

# Parse both models into protobuf objects
llama_spm = sp_pb2_model.ModelProto()
llama_spm.ParseFromString(llama_tokenizer.sp_model.serialized_model_proto())
chinese_spm = sp_pb2_model.ModelProto()
chinese_spm.ParseFromString(chinese_sp_model.serialized_model_proto())

# Vocabulary sizes
print(len(llama_tokenizer), len(chinese_sp_model))

# Add the new tokens to the LLaMA vocabulary
llama_spm_tokens_set = set(p.piece for p in llama_spm.pieces)
print(f"Before:{len(llama_spm_tokens_set)}")
for p in chinese_spm.pieces:
    piece = p.piece
    if piece not in llama_spm_tokens_set:
        # Pieces that LLaMA does not have yet are appended with score 0.
        new_p = sp_pb2_model.ModelProto.SentencePiece()
        new_p.piece = piece
        new_p.score = 0
        llama_spm.pieces.append(new_p)
print(f"New model pieces: {len(llama_spm.pieces)}")

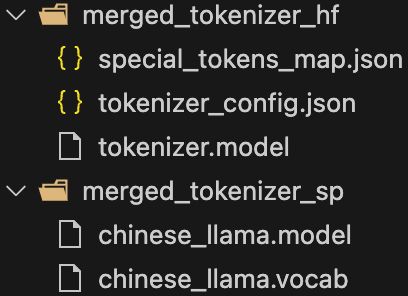

output_sp_dir = '../merged_tokenizer_sp'
output_hf_dir = '../merged_tokenizer_hf'

# Create the output directory first; open() below fails if it does not exist.
os.makedirs(output_sp_dir, exist_ok=True)

# Save the vocabulary as text, one "piece score" pair per line
vocab_content = ''
for p in llama_spm.pieces:
    vocab_content += f"{p.piece} {p.score}\n"
with open(output_sp_dir + '/chinese_llama.vocab', "w", encoding="utf-8") as f:
    f.write(vocab_content)

# Save the merged SentencePiece model
with open(output_sp_dir + '/chinese_llama.model', 'wb') as f:
    f.write(llama_spm.SerializeToString())

# Save the new LLaMA tokenizer in Hugging Face format
tokenizer = LlamaTokenizer(vocab_file=output_sp_dir + '/chinese_llama.model')
tokenizer.save_pretrained(output_hf_dir)
print(f"Chinese-LLaMA tokenizer has been saved to {output_hf_dir}")
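The merge loop can also be read as a plain list operation: pieces missing from the base vocabulary are appended at the end with score 0, so every original piece keeps its position and therefore its id. The sketch below reproduces that logic on lists of `(piece, score)` tuples without protobuf; `merge_vocab` is a hypothetical helper and the sample vocabularies are made up.

```python
def merge_vocab(base, extra):
    """Append pieces from `extra` that are missing in `base`, with score 0."""
    seen = {piece for piece, _ in base}
    merged = list(base)  # original order, and therefore original ids, are kept
    for piece, _ in extra:
        if piece not in seen:
            merged.append((piece, 0.0))
            seen.add(piece)
    return merged


if __name__ == "__main__":
    base = [("<unk>", 0.0), ("▁the", -1.0), ("的", -5.0)]
    extra = [("<unk>", 0.0), ("的", -1.0), ("掌门", -2.0)]
    print(merge_vocab(base, extra))
```

Appending rather than inserting is the design choice that keeps the pretrained embedding rows aligned with their original tokens.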

Vocabulary test code

from transformers import LlamaTokenizer

# llama_tokenizer_dir and output_hf_dir are the paths defined in the merging code.
llama_tokenizer = LlamaTokenizer.from_pretrained(llama_tokenizer_dir)
chinese_llama_tokenizer = LlamaTokenizer.from_pretrained(output_hf_dir)

# Special tokens of the merged tokenizer
print(chinese_llama_tokenizer.all_special_tokens)
print(chinese_llama_tokenizer.all_special_ids)
print(chinese_llama_tokenizer.special_tokens_map)

# Alternative sample: text = '''白日依山尽,黄河入海流。欲穷千里目,更上一层楼。'''
text = '''大模型是指具有非常大的参数数量的人工神经网络模型。 在深度学习领域,大模型通常是指具有数亿到数万亿参数的模型。'''
print("Test text:\n", text)

# Compare the token count and the tokens produced by each tokenizer
print(f"Tokenized by LLaMA tokenizer:{len(llama_tokenizer.tokenize(text))},{llama_tokenizer.tokenize(text)}")
print(f"Tokenized by Chinese-LLaMA tokenizer:{len(chinese_llama_tokenizer.tokenize(text))},{chinese_llama_tokenizer.tokenize(text)}")

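The results show that the number of tokens for Chinese text drops significantly after the merge, while English tokenization is unaffected.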
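One way to put a number on that reduction is to compare the token counts from the two tokenizers. A minimal sketch with hypothetical helpers `token_reduction` and `chars_per_token`; the counts in the demo are placeholders, not measured results.

```python
def token_reduction(before, after):
    """Fraction by which the token count shrinks (0.25 means 25% fewer tokens)."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before


def chars_per_token(text, tokens):
    """Average number of characters covered by one token."""
    return len(text) / len(tokens)


if __name__ == "__main__":
    # Placeholder counts for illustration only.
    print(token_reduction(100, 40))
    print(chars_per_token("abcd", ["ab", "cd"]))
```

With the tokenizers from the test code, the inputs would be `len(llama_tokenizer.tokenize(text))` and `len(chinese_llama_tokenizer.tokenize(text))`.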

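Note: when doing incremental pretraining on a LLaMA model with the extended Chinese vocabulary, the embedding layer must be resized with model.resize_token_embeddings(len(tokenizer)), because the vocabulary size has changed.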
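Conceptually, resizing keeps the existing embedding rows and appends one new row per added token. The pure-Python sketch below illustrates this on a list-of-lists matrix. Initializing the new rows to the mean of the existing rows is an assumption chosen for illustration (a common heuristic), not necessarily what `resize_token_embeddings` does by default; `resize_embeddings` is a hypothetical helper.

```python
def resize_embeddings(matrix, new_size):
    """Return a copy of `matrix` with `new_size` rows.

    Existing rows are kept unchanged; extra rows are set to the
    column-wise mean of the existing rows (an illustrative choice).
    """
    old_size = len(matrix)
    if new_size <= old_size:
        return [row[:] for row in matrix[:new_size]]
    dim = len(matrix[0])
    mean = [sum(row[j] for row in matrix) / old_size for j in range(dim)]
    new_rows = [mean[:] for _ in range(new_size - old_size)]
    return [row[:] for row in matrix] + new_rows


if __name__ == "__main__":
    old = [[1.0, 2.0], [3.0, 4.0]]
    print(resize_embeddings(old, 4))
```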

References

[1] https://github.com/shibing624/MedicalGPT/tree/main

[2] https://github.com/yanqiangmiffy/how-to-train-tokenizer/tree/main