8. Collecting Logs with Filebeat + Logstash (Part 1)

I. Getting Started with Filebeat

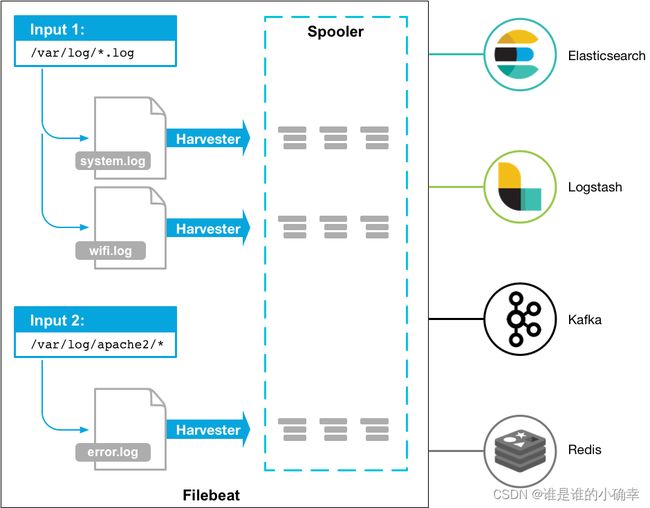

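Filebeat is a lightweight log shipper, described in detail in the official documentation. It is installed as an agent on a server, where it monitors files under the specified paths, collects log events, and forwards them to the configured output. The output can be Elasticsearch, Logstash, Redis, or Kafka, as well as Console, File, Cloud, and so on.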

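Filebeat's collection functionality is built from two components: harvesters and inputs. A harvester is responsible for opening and closing a file, with one harvester per file. When the monitored file grows, the harvester reads the new content and sends the new log data to libbeat, which aggregates the events and sends the aggregated data to the configured output. Inputs manage the harvesters. Several input types are supported, including log, stdin, redis, docker, udp, tcp, and syslog. When Filebeat starts, it starts one or more inputs, and each input looks for log data in the locations you configured for it. The official documentation provides a diagram that shows Filebeat's overall workflow simply and clearly (see the figure at the top of this section).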

To understand Filebeat more deeply, here are some of its important working mechanisms.

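Filebeat uses the newline character to detect the end of an event. If lines are written incrementally to a file that is being harvested, the last line must be followed by a newline; otherwise Filebeat will not read the last line of the file.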

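Filebeat tracks the state of every file and persists it to the registry. The state records the last offset the harvester read and is used to ensure that every line is sent successfully. If the output is briefly unavailable, Filebeat remembers the last line it sent and resumes reading once the output is available again; in other words, Filebeat keeps retrying until delivery is acknowledged. Note that while Filebeat is running, the state is also kept in memory for each input; when Filebeat restarts, the state is read back from the registry, so Filebeat continues reading from the last known position. This mechanism is how Filebeat avoids losing data. When a large number of log files are produced every day, the registry that holds the file states can also grow very large; in that case, consider configuring clean_removed and clean_inactive to remove stale entries.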
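As a rough illustration of the registry cleanup mentioned above, the following sketch adds both options to a `log` input. The path is the test file used in this article, and the durations are assumed values chosen only for illustration. Filebeat requires `clean_inactive` to be greater than `ignore_older` plus `scan_frequency`, which is why `ignore_older` is set alongside it.

```yaml
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /home/dataaudit/xxwei/test.log
  # Assumed value: ignore files not modified within the last 48 hours.
  ignore_older: 48h
  # Assumed value: drop a file's registry state after 72 hours of inactivity.
  # Must be greater than ignore_older + scan_frequency.
  clean_inactive: 72h
  # Drop registry state for files that no longer exist on disk.
  clean_removed: true
```

Choosing `clean_inactive` too small can cause a file that is still present to be read again from the beginning, so the value should comfortably exceed the time after which files stop being updated.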

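Filebeat ensures that collected data is not lost, but can it deliver duplicates? Yes. If Filebeat is shut down while events are being sent, it does not wait for the output to acknowledge them. After a restart, it resends every event that was not acknowledged. This guarantees that each event is delivered at least once and no collected data is lost, but some events may be output twice. To reduce such duplicates, configure the filebeat.shutdown_timeout option, which is the maximum time Filebeat waits on shutdown for the output to acknowledge pending events; if everything is acknowledged before the timeout expires, Filebeat shuts down immediately. The option is disabled by default and must be added manually.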
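A minimal sketch of that setting in filebeat.yml follows. The 5s value is an assumption for illustration; a suitable value depends on how quickly the output normally acknowledges events.

```yaml
# Assumed value: on shutdown, wait up to 5 seconds for the output
# to acknowledge events that are still in flight.
filebeat.shutdown_timeout: 5s
```

This shortens the window in which unacknowledged events are resent after a restart, but it does not remove the possibility of duplicates altogether.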

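II. Deploying and Testing Filebeat

Filebeat download page: https://elasticsearch.cn/download/. The version I chose is filebeat-6.8.6.tar.gz. Filebeat is usually extracted into the same directory level as the log files to be collected:

tar -zxvf ./opt/filebeat-6.8.6.tar.gz -C /path/to/target-directory

1. Test: single log input, Console output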

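In this example the input collects a log file and the output is the console. Modify filebeat.yml as follows (Elasticsearch is not used as the output in this test, so the default Elasticsearch output must be commented out):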

#=========================== Filebeat inputs =============================
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /home/dataaudit/xxwei/test.log

#================================ Outputs =====================================
output.console:
  pretty: true

#-------------------------- Elasticsearch output ------------------------------
#output.elasticsearch:
  # Array of hosts to connect to.
  # hosts: ["localhost:9200"]

  # Enabled ilm (beta) to use index lifecycle management instead daily indices.
  #ilm.enabled: false

  # Optional protocol and basic auth credentials.
  #protocol: "https"
  #username: "elastic"
  #password: "changeme"

Start Filebeat (in the foreground for now, not as a background process):

./filebeat -e -c filebeat.yml

Next, write a log line to the log file test.log from the command line:

$ echo "hello the world" >/home/dataaudit/xxwei/test.log

Switch back to the window where Filebeat is running; the console prints the following:

2022-07-10T13:16:13.835+0800 INFO log/harvester.go:255 Harvester started for file: /home/dataaudit/xxwei/test.log

{
  "@timestamp": "2022-07-10T05:16:13.836Z",
  "@metadata": {
    "beat": "filebeat",
    "type": "doc",
    "version": "6.8.6"
  },
  "input": {
    "type": "log"
  },
  "beat": {
    "name": "ZJHZ-CMREAD-XNTEST177-VINT-SQ",
    "hostname": "ZJHZ-CMREAD-XNTEST177-VINT-SQ",
    "version": "6.8.6"
  },
  "host": {
    "name": "ZJHZ-CMREAD-XNTEST177-VINT-SQ"
  },
  "log": {
    "file": {
      "path": "/home/dataaudit/xxwei/test.log"
    }
  },
  "message": "hello the world",
  "source": "/home/dataaudit/xxwei/test.log",
  "offset": 0,
  "prospector": {
    "type": "log"
  }
}

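Filebeat puts the collected data in message and also outputs @timestamp, @metadata, input, beat, host, log, source, offset, prospector, and so on. In practice we do not need all of these, so how do we output only the content we want? Modify filebeat.yml as follows: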

output.console:
  pretty: true
  codec.format:
    string: '%{[@timestamp]} %{[message]} %{[input.type]}'

After restarting Filebeat and repeating the steps above, the customized output looks like this:

2022-07-10T13:37:23.633+0800 INFO log/harvester.go:255 Harvester started for file: /home/dataaudit/xxwei/test.log

2022-07-10T13:37:23.633Z welcome to China log

If we want the output in JSON format, filebeat.yml needs one more change:

output.console:
  pretty: true
  codec.format:
    string: '{\"@timestamp\":\"%{[@timestamp]}\",\"message\":\"%{[message]}\",\"input\":\"%{[input.type]}\"}'

Restart Filebeat again and repeat the steps above; the console prints:

2022-07-10T13:48:38.417+0800 INFO log/harvester.go:255 Harvester started for file: /home/dataaudit/xxwei/test.log

{"@timestamp":"2022-07-10T13:48:38.417Z","message":"welcome to HangZhou","input":"log"}

2022-07-10T13:54:08.425+0800 INFO log/harvester.go:255 Harvester started for file: /home/dataaudit/xxwei/test.log

{"@timestamp":"2022-07-10T13:54:08.425Z","message":"welcome to China","input":"log"}

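As we can see, the harvester keeps watching test.log for changes and sends new log lines to the output as soon as they appear. Printing to the console was only a small warm-up test. Next, we collect files from different paths and send them to Logstash.

2. Test: multiple log inputs, Logstash output

First, deploy Logstash. The version used here is logstash-6.8.6.tar.gz; extract it to the target directory:

tar -zxvf ./opt/logstash-6.8.6.tar.gz -C /path/to/target-directory

Filebeat can collect logs from different paths, and those logs usually differ in type and format. If they are not distinguished, later processing in Logstash becomes awkward, so a tags entry is added to each input to tell them apart. In filebeat.yml, comment out the console output and enable the Logstash output. Because Logstash is deployed on a single machine, load balancing is unnecessary and loadbalance can stay at its default of false. Modify and save filebeat.yml as follows: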

#=========================== Filebeat inputs =============================
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /home/dataaudit/xxwei/test.log
  tags: ["my-test"]
- type: log
  enabled: true
  paths:
    - /home/dataaudit/data-audit/logs/audit_debug*.log
  tags: ["my-audit"]

#================================ Outputs =====================================
#output.console:
#  pretty: true
#  codec.format:
#    string: '{\"@timestamp\":\"%{[@timestamp]}\",\"message\":\"%{[message]}\",\"input\":\"%{[input.type]}\"}'

#----------------------------- Logstash output --------------------------------
output.logstash:
  hosts: ["10.129.41.163:5044"]
  loadbalance: false
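For comparison, if Logstash were running on more than one machine, the same output section could list several hosts and turn load balancing on. This is only a sketch: the second address below is hypothetical and does not exist in the test environment described here.

```yaml
output.logstash:
  # The second host is a made-up address, for illustration only.
  hosts: ["10.129.41.163:5044", "10.129.41.164:5044"]
  # Distribute events across all listed hosts instead of using a single one.
  loadbalance: true
```

With a single Logstash instance, as in this test, the setting makes no practical difference, which is why it is left at false.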

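Next, go to the config directory of logstash-6.8.6 and create a configuration file named logstash.conf, using the bundled logstash-simple.conf as a reference. The Logstash input is Filebeat, so the input block needs no changes. To see more directly what Logstash forwards, its output is set to print to the console. Add and save logstash.conf as follows: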

# testing filebeat --> logstash --> Console
input {
  beats {
    port => 5044
  }
}

# Output to the console
output {
  if "my-test" in [tags] {
    stdout { codec => rubydebug }
  }
  if "my-audit" in [tags] {
    stdout { codec => rubydebug }
  }
}

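Once all the configuration files have been updated, testing can begin. Note that Logstash must be started first, then Filebeat. Logstash bundles many plugins and is fairly heavyweight, so it starts slowly; be patient.

Start Logstash (in the foreground for now). The startup output is: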

$ bin/logstash -f config/logstash.conf

Sending Logstash logs to /home/dataaudit/logstash-6.8.6/logs which is now configured via log4j2.properties

[2022-07-10T18:17:25,401][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

[2022-07-10T18:17:25,417][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"6.8.6"}

[2022-07-10T18:17:32,681][INFO ][logstash.pipeline ] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>8, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50}

[2022-07-10T18:17:33,053][INFO ][logstash.inputs.beats ] Beats inputs: Starting input listener {:address=>"0.0.0.0:5044"}

[2022-07-10T18:17:33,074][INFO ][logstash.pipeline ] Pipeline started successfully {:pipeline_id=>"main", :thread=>"#" }

[2022-07-10T18:17:33,135][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[2022-07-10T18:17:33,149][INFO ][org.logstash.beats.Server] Starting server on port: 5044

[2022-07-10T18:17:33,366][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

Start Filebeat (also in the foreground for now). The startup output is:

$ ./filebeat -e -c filebeat.yml

2022-07-10T18:27:10.914+0800 INFO instance/beat.go:611 Home path: [/home/dataaudit/filebeat-6.8.6] Config path: [/home/dataaudit/filebeat-6.8.6] Data path: [/home/dataaudit/filebeat-6.8.6/data] Logs path: [/home/dataaudit/filebeat-6.8.6/logs]

2022-07-10T18:27:10.914+0800 INFO instance/beat.go:618 Beat UUID: a792cbfd-c27e-41b7-ab1f-b9a32ceeb237

2022-07-10T18:27:10.915+0800 INFO [seccomp] seccomp/seccomp.go:116 Syscall filter successfully installed

(intermediate output omitted)

2022-07-10T18:27:10.917+0800 INFO [publisher] pipeline/module.go:110 Beat name: ZJHZ-CMREAD-XNTEST177-VINT-SQ

2022-07-10T18:27:10.917+0800 INFO instance/beat.go:402 filebeat start running.

2022-07-10T18:27:10.917+0800 INFO [monitoring] log/log.go:117 Starting metrics logging every 30s

2022-07-10T18:27:10.917+0800 INFO registrar/registrar.go:134 Loading registrar data from /home/dataaudit/filebeat-6.8.6/data/registry

2022-07-10T18:27:10.918+0800 INFO registrar/registrar.go:141 States Loaded from registrar: 3

2022-07-10T18:27:10.918+0800 WARN beater/filebeat.go:367 Filebeat is unable to load the Ingest Node pipelines for the configured modules because the Elasticsearch output is not configured/enabled. If you have already loaded the Ingest Node pipelines or are using Logstash pipelines, you can ignore this warning.

2022-07-10T18:27:10.918+0800 INFO crawler/crawler.go:72 Loading Inputs: 2

2022-07-10T18:27:10.918+0800 INFO log/input.go:148 Configured paths: [/home/dataaudit/xxwei/test.log]

2022-07-10T18:27:10.918+0800 INFO input/input.go:114 Starting input of type: log; ID: 2427290630460198775

2022-07-10T18:27:10.919+0800 INFO log/input.go:148 Configured paths: [/home/dataaudit/data-audit/logs/audit_debug.log]

2022-07-10T18:27:10.919+0800 INFO input/input.go:114 Starting input of type: log; ID: 5156196218961992287

2022-07-10T18:27:10.919+0800 INFO crawler/crawler.go:106 Loading and starting Inputs completed. Enabled inputs: 2

2022-07-10T18:27:10.919+0800 INFO cfgfile/reload.go:150 Config reloader started

2022-07-10T18:27:10.919+0800 INFO cfgfile/reload.go:205 Loading of config files completed.

Note that every 30s, even when the monitored files have not changed, Filebeat prints INFO messages like the following:

2022-07-10T18:27:40.920+0800 INFO [monitoring] log/log.go:144 Non-zero metrics in the last 30s {"monitoring": {"metrics": {"beat":{"cpu":{"system":{"ticks":20,"time":{"ms":28}},"total":{"ticks":40,"time":{"ms":49},"value":40},"user":{"ticks":20,"time":{"ms":21}}},"handles":{"limit":{"hard":65535,"soft":65535},"open":5},"info":{"ephemeral_id":"201eeeb4-f281-4b35-807d-6e5ccd3325e0","uptime":{"ms":30017}},"memstats":{"gc_next":4194304,"memory_alloc":1913504,"memory_total":4809224,"rss":15142912}},"filebeat":{"events":{"added":2,"done":2},"harvester":{"open_files":0,"running":0}},"libbeat":{"config":{"module":{"running":0},"reloads":1},"output":{"type":"logstash"},"pipeline":{"clients":2,"events":{"active":0,"filtered":2,"total":2}}},"registrar":{"states":{"current":3,"update":2},"writes":{"success":2,"total":2}},"system":{"cpu":{"cores":8},"load":{"1":0,"15":0.05,"5":0.01,"norm":{"1":0,"15":0.0063,"5":0.0013}}}}}}

2022-07-10T18:28:10.919+0800 INFO [monitoring] log/log.go:144 Non-zero metrics in the last 30s {"monitoring": {"metrics": {"beat":{"cpu":{"system":{"ticks":20,"time":{"ms":1}},"total":{"ticks":40,"time":{"ms":4},"value":40},"user":{"ticks":20,"time":{"ms":3}}},"handles":{"limit":{"hard":65535,"soft":65535},"open":5},"info":{"ephemeral_id":"201eeeb4-f281-4b35-807d-6e5ccd3325e0","uptime":{"ms":60016}},"memstats":{"gc_next":4194304,"memory_alloc":2132680,"memory_total":5028400}},"filebeat":{"harvester":{"open_files":0,"running":0}},"libbeat":{"config":{"module":{"running":0}},"pipeline":{"clients":2,"events":{"active":0}}},"registrar":{"states":{"current":3}},"system":{"load":{"1":0,"15":0.05,"5":0.01,"norm":{"1":0,"15":0.0063,"5":0.0013}}}}}}

2022-07-10T18:28:40.919+0800 INFO [monitoring] log/log.go:144 Non-zero metrics in the last 30s {"monitoring": {"metrics": {"beat":{"cpu":{"system":{"ticks":30,"time":{"ms":4}},"total":{"ticks":50,"time":{"ms":6},"value":50},"user":{"ticks":20,"time":{"ms":2}}},"handles":{"limit":{"hard":65535,"soft":65535},"open":5},"info":{"ephemeral_id":"201eeeb4-f281-4b35-807d-6e5ccd3325e0","uptime":{"ms":90016}},"memstats":{"gc_next":4194304,"memory_alloc":2486424,"memory_total":5382144}},"filebeat":{"harvester":{"open_files":0,"running":0}},"libbeat":{"config":{"module":{"running":0}},"pipeline":{"clients":2,"events":{"active":0}}},"registrar":{"states":{"current":3}},"system":{"load":{"1":0,"15":0.05,"5":0.01,"norm":{"1":0,"15":0.0063,"5":0.0013}}}}}}
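If these periodic metrics lines are too noisy during a test, Filebeat's logging settings can reduce or silence them. The sketch below shows two alternatives in filebeat.yml; the 5m period is an assumed value for illustration.

```yaml
# Option 1 (assumed value): log metrics every 5 minutes instead of every 30s.
logging.metrics.period: 5m

# Option 2: disable the periodic metrics log entirely.
#logging.metrics.enabled: false
```

Only one of the two is needed; the metrics themselves are harmless and can help when diagnosing whether events are being published.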

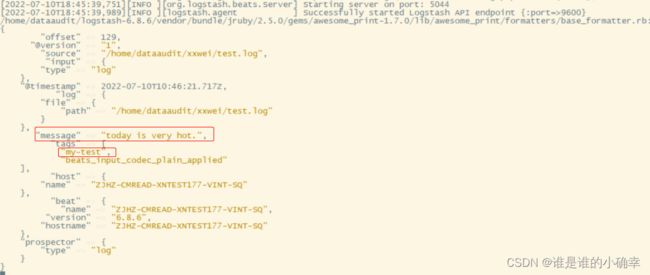

Append a line to one of the log files to simulate a newly produced log entry:

$ echo "today is very hot." >>/home/dataaudit/xxwei/test.log

Filebeat console output:


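Logstash console output:

Judging from the result, the Logstash output is not what I want; I only need the content of message. How can that be done? I thought of two approaches. The first is to control it in Filebeat, as mentioned in test 1, by configuring codec.format on the output: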

#----------------------------- Logstash output --------------------------------
output.logstash:
  hosts: ["10.129.41.163:5044"]
  loadbalance: false
  codec.format:
    string: '%{[message]}'

After restarting, testing shows that this has no effect; the output is the same as before.
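A likely reason is that, as far as I can tell from the Filebeat documentation, the codec setting is described for outputs such as console and file, not for the Logstash output. If the trimming should still happen on the Filebeat side, one option is the drop_fields processor. The following is a sketch under that assumption, not something verified in this test; the field list is my own selection of the input-level fields seen in the console output earlier.

```yaml
processors:
- drop_fields:
    # Assumed selection: fields added by the log input that are not needed downstream.
    # tags is kept so that Logstash can still route on it;
    # @timestamp and type cannot be dropped by this processor.
    fields: ["log", "input", "source", "offset", "prospector"]
```

Logstash adds a few fields of its own on receipt (for example @version), so a Logstash filter is still needed to reduce the event to message alone.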

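The second approach is to use a Logstash filter to remove @version, @timestamp, offset, source, input, host, tags, prospector, and so on, keeping only message. Because filters run before outputs, removing tags here means the tag conditionals in the output block above no longer match, so the output must then be made unconditional (or tags left in place). Add the following to logstash.conf: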

filter {
  mutate {
    remove_field => ["log","beat","host","input","source","tags","offset","prospector","@version","@timestamp"]
  }
}

Restart and test again, this time sending three simulated log lines:


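Logstash console output:

Logstash filters are very practical; when I get the chance to use them more, I will study them in depth and write up a summary.

Conclusion

If the Logstash output here is replaced with kafka, the result is a Filebeat + Logstash + kafka log collection pipeline; likewise, replacing kafka with elasticsearch gives Filebeat + Logstash + elasticsearch. It suddenly feels easy, but this architecture is meant to be deployed in production, which is far less simple than a test environment, so I will keep exploring in later parts.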