Implementing RNN, GRU, and LSTM in PyTorch

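Recurrent Neural Networks

This post reviews recurrent neural networks through a lyric-continuation example built with PyTorch.

It mainly uses an n-to-n network model, where every input step has a corresponding output step.

Everything was run locally in Jupyter.

For readability, some of the code has been trimmed and comments have been added.

Getting the Data

The lyrics data comes from Kaggle.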

import pandas as pd

import numpy as np

import time

import math

data = pd.read_csv('./input/songdata.csv')

data.head()

| | artist | song | link | text |
|---|---|---|---|---|
| 0 | ABBA | Ahe’s My Kind Of Girl | … | look at her face, it’s a wonderful face … |
| 1 | ABBA | Andante, Andante | … | Take it easy with me,please… |
| 2 | ABBA | As Good As New | … | I’ll never know why i had to go … |
| 3 | ABBA | Bang | … | Making somebody happy is a question… |
| 4 | ABBA | Bang-A-Boomerang | … | Making somebody happy is a question… |

# Keep only ABBA's lyrics

data = data[data['artist']=='ABBA']

# Some lyric texts are duplicated; drop the duplicates

data = data['text'].unique()

# data is now a NumPy array; join it into one long string to serve as the corpus

corpus = ' '.join(data.tolist())

corpus = corpus.replace('\n', ' ')

print(len(corpus)) # >>> 151551 # the corpus is long, so keep only a small slice for quick testing

corpus = corpus[:1000]

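# Build a character-level index (individual characters, not whole English words)

# Sort the characters once so both mappings share the same deterministic order

chars = sorted(set(corpus))

index2char = dict([(i, char) for i, char in enumerate(chars)])

char2index = dict([(char, i) for i, char in enumerate(chars)])

# Convert the whole corpus to integer indices

corpus_index = [char2index[char] for char in corpus]

# Number of distinct characters, i.e. the vocabulary size

vocab_size = len(char2index) # 38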
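To make the index mapping concrete, here is a minimal sketch on a made-up string. The names `toy_corpus`, `toy_index2char`, and `toy_char2index` are illustrative stand-ins and are not part of the original notebook; the point is only that encoding and then decoding gives back the original text.

```python
# Toy corpus standing in for the lyrics text (illustrative only)
toy_corpus = "take it easy"

# Sort the unique characters so the mapping is reproducible across runs
toy_chars = sorted(set(toy_corpus))
toy_index2char = dict(enumerate(toy_chars))
toy_char2index = {char: i for i, char in toy_index2char.items()}

# Encode the text as integer indices, then decode it back
encoded = [toy_char2index[char] for char in toy_corpus]
decoded = ''.join(toy_index2char[i] for i in encoded)

print(len(toy_char2index))    # number of distinct characters
print(decoded == toy_corpus)  # the round trip should be lossless
```

Working at the character level keeps the vocabulary tiny, at the cost of longer sequences than a word-level index would need.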

# Sampling the sequence data

# Use PyTorch to wrap the data in a DataLoader

import torch

import torch.nn as nn

from torch.utils.data import Dataset, DataLoader, TensorDataset

# Use the GPU if one is available

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# Convert the corpus to tensors

# Build X and Y, where Y is the same window as X moved one character later (n-to-n model)

def get_data(corpus_index, seq_len):
    X = []
    Y = []
    for i in range(len(corpus_index)-seq_len):
        x = corpus_index[i: i+seq_len]
        y = corpus_index[i+1: i+seq_len+1]
        X.append(x)
        Y.append(y)
    # torch.Tensor yields float32; the indices are cast with .long() where needed
    return torch.Tensor(X), torch.Tensor(Y)

# Use sequences of 50 characters

X_data, Y_data = get_data(corpus_index, 50)

print(X_data.shape)

# Wrap the data in a DataLoader for later use
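The windowing done by `get_data` is easier to see on a tiny list. The sketch below uses plain Python lists and a hypothetical helper named `make_windows` (not from the original notebook) that follows the same slicing logic.

```python
def make_windows(indices, seq_len):
    """Return (inputs, targets); each target is its input moved one step later."""
    inputs, targets = [], []
    for i in range(len(indices) - seq_len):
        inputs.append(indices[i: i + seq_len])
        targets.append(indices[i + 1: i + seq_len + 1])
    return inputs, targets

toy_indices = [0, 1, 2, 3, 4, 5]
toy_X, toy_Y = make_windows(toy_indices, seq_len=3)

# Each row pairs an input window with the window one step later
for x, y in zip(toy_X, toy_Y):
    print(x, '->', y)
```

With 6 indices and `seq_len=3` there are 3 windows, just as the 1000-character corpus with `seq_len=50` yields 950 training pairs.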

batch_size = 16

dataset = TensorDataset(X_data, Y_data)

train_loader = DataLoader(dataset=dataset, batch_size=batch_size, shuffle=True, drop_last=True)

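# Next, the data needs to be one-hot encoded

def one_hot(x, size):
    # x is a 1-D tensor of indices; to_onehot below passes one time step
    # of the whole batch, so its shape is (batch_size,)
    # size is the vocabulary size
    res = torch.zeros(x.shape[0], size, dtype=torch.float, device=x.device)
    # Set the entry at each index position to 1
    res.scatter_(1, x.long().view(-1, 1), 1)
    return res

# One-hot encode a whole batch, one time step at a time

def to_onehot(X, size):
    return [one_hot(X[:, i], size) for i in range(X.shape[1])]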

X_one_hot = to_onehot(X_data[:2], vocab_size)

print(len(X_one_hot)) # >>> 50

print(X_one_hot[0].shape) # >>> (2, 38)

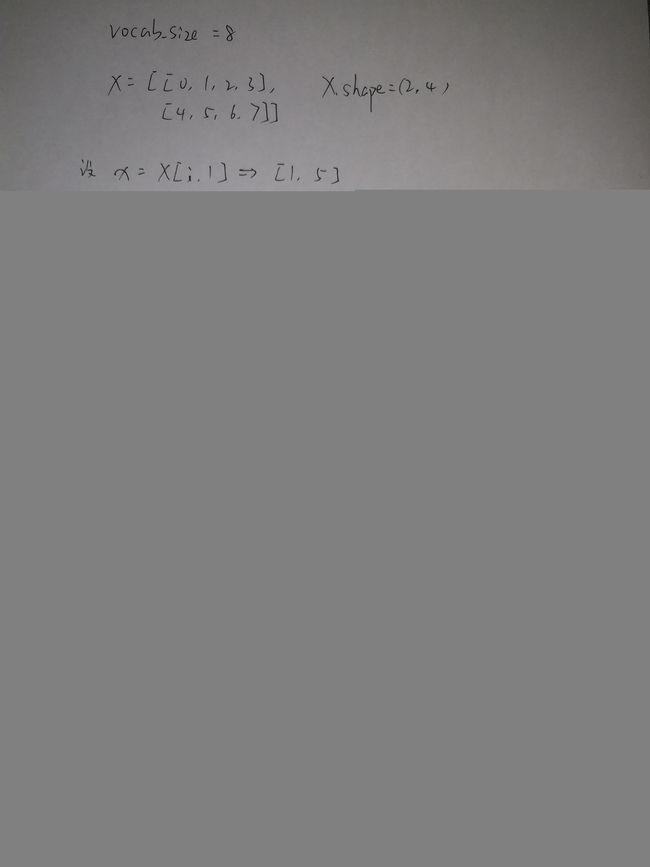

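Converting the sequence data above to one-hot form can feel abstract.

I was confused at first and only understood it after sketching it on paper.

I am a bit lazy, so I just write things on paper and take a photo.

The photo may be a little dark, but it is still readable.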
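For readers who prefer code to a sketch on paper, the same layout can be reproduced with nested Python lists. This is only an illustration under assumed names (`one_hot_row`, `to_onehot_lists`, `toy_batch`); the notebook itself uses tensors. The batch of shape (batch_size, seq_len) becomes a list of seq_len steps, each a (batch_size, vocab_size) matrix.

```python
def one_hot_row(index, size):
    """Return a list of zeros of length size with a 1.0 at position index."""
    row = [0.0] * size
    row[index] = 1.0
    return row

def to_onehot_lists(batch, size):
    """batch is batch_size x seq_len; the result has one entry per time step,
    and each entry is a batch_size x size matrix."""
    seq_len = len(batch[0])
    return [[one_hot_row(seq[t], size) for seq in batch] for t in range(seq_len)]

toy_batch = [[0, 2, 1],   # sequence 0
             [1, 1, 0]]   # sequence 1
steps = to_onehot_lists(toy_batch, size=3)

print(len(steps), len(steps[0]), len(steps[0][0]))  # seq_len, batch_size, vocab size
print(steps[1])  # time step 1 for both sequences
```

The outer list runs over time, which is why the recurrent loop can simply iterate over it and receive one batch-wide matrix per step.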

RNN

dim_input = vocab_size # input dimension: one-hot vectors go in

dim_hidden = 32 # hidden-layer dimension

dim_output = vocab_size # output dimension: one score per character (a classification problem)

# RNN model parameters

def get_rnn_params():
    def _init(shape):
        param = torch.zeros(shape, device=device, dtype=torch.float32)
        nn.init.normal_(param, 0, 0.1)
        return nn.Parameter(param)
    W_xh = _init((dim_input, dim_hidden))
    W_hh = _init((dim_hidden, dim_hidden))
    b_h = _init((1, dim_hidden))
    W_hq = _init((dim_hidden, dim_output))
    b_q = _init((1, dim_output))
    return W_xh, W_hh, b_h, W_hq, b_q

# Initialize H_0

def init_rnn_state(batch_size, dim_hidden):
    return torch.zeros((batch_size, dim_hidden), device=device)

# RNN forward computation

def rnn(inputs, state, params):
    # inputs and outputs are both lists of num_steps matrices of shape (batch_size, vocab_size)
    # vocab_size is the length of each one-hot vector
    W_xh, W_hh, b_h, W_hq, b_q = params
    H = state
    outputs = []
    for X in inputs:
        H = torch.tanh(torch.matmul(X, W_xh)+torch.matmul(H, W_hh)+b_h)
        Y = torch.matmul(H, W_hq) + b_q
        outputs.append(Y)
    return outputs, H

# Quick test

X = X_data[:2]

print(X.shape) # >>> (2, 50)

state = init_rnn_state(X.shape[0], dim_hidden) # initial state H_0

inputs = to_onehot(X.to(device), vocab_size)

params = get_rnn_params()

outputs, state_new = rnn(inputs, state, params)

print(len(inputs), inputs[0].shape) # >>> 50 (2, 38) # seq_len=50, vocab_size=38

print(len(outputs), outputs[0].shape) # >>> 50 (2, 38)

print(len(state), state[0].shape) # >>> 2 (32) # dim_hidden=32

print(len(state_new), state_new[0].shape) # >>> 2 (32)

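Gradient Clipping

Recurrent neural networks are prone to vanishing or exploding gradients, either of which can make the network nearly impossible to train.

Gradient clipping is one way to deal with exploding gradients.

Suppose we concatenate the gradients of all model parameters into a single vector $g$ and set the clipping threshold to $\theta$.

The clipped gradient

$$\min\left(\frac{\theta}{\|g\|}, 1\right) g$$

has an $L_2$ norm that does not exceed $\theta$.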

def grad_clipping(params, theta):
    norm = torch.tensor([0.0], device=device)
    for param in params:
        norm += (param.grad.data ** 2).sum()
    norm = norm.sqrt().item()
    if norm > theta:
        for param in params:
            param.grad.data *= (theta/norm)
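A small numeric check may help here. The sketch below applies the same rule to a plain list of numbers; `clip_by_global_norm` is a hypothetical helper written for this illustration, not a function from the notebook or from PyTorch.

```python
import math

def clip_by_global_norm(grads, theta):
    """Scale grads by min(theta / ||g||, 1) and also return the original norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    scale = min(theta / norm, 1.0) if norm > 0 else 1.0
    return [g * scale for g in grads], norm

# ||(3, 4)|| = 5, which exceeds theta = 1, so the vector is scaled by 1/5
clipped, norm_before = clip_by_global_norm([3.0, 4.0], theta=1.0)
norm_after = math.sqrt(sum(g * g for g in clipped))
print(norm_before, clipped, norm_after)

# A gradient already inside the threshold is left unchanged
small, _ = clip_by_global_norm([0.3, 0.4], theta=1.0)
print(small)
```

Clipping rescales the whole vector, so the direction of the update is preserved and only its length is capped.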

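Perplexity

Perplexity is the usual measure of how good a language model is. It is closely related to cross-entropy:

perplexity is the value obtained by exponentiating the cross-entropy loss. In particular,

- In the best case, the model always predicts probability 1 for the label class, and the perplexity is 1;

- In the worst case, the model always predicts probability 0 for the label class, and the perplexity is positive infinity;

- In the baseline case, the model always predicts the same probability for every class, and the perplexity equals the number of classes.

Clearly, any useful model must have a perplexity below the number of classes. In this example, the perplexity must be below the vocabulary size vocab_size.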
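The three cases can be checked numerically. This is a minimal sketch: the `perplexity` helper and the sample probabilities are assumptions made for illustration, with 38 classes chosen to match the vocabulary size in this post.

```python
import math

def perplexity(label_probs):
    """exp of the mean negative log-probability given to the correct labels."""
    nll = [-math.log(p) for p in label_probs]
    return math.exp(sum(nll) / len(nll))

num_classes = 38

best = perplexity([1.0, 1.0, 1.0])                # always certain and correct
baseline = perplexity([1.0 / num_classes] * 3)    # uniform guess over all classes
near_worst = perplexity([1e-12, 1e-12, 1e-12])    # almost zero probability on the label

print(best, baseline, near_worst)
```

The training loops in this post compute the same quantity as `math.exp(l_sum / n)`, where `l_sum / n` is the average cross-entropy per character.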

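Prediction Function

The function below predicts the next num_chars characters from a prefix (a string of several characters).

The recurrent unit rnn is passed in as a function argument, so different rnn functions can be plugged in, including the GRU and LSTM introduced later.

def predict_rnn(prefix, num_chars, rnn, params, init_rnn_state, dim_hidden):
    state = init_rnn_state(1, dim_hidden)
    output = [char2index[prefix[0]]] # output holds the prefix plus the num_chars predicted characters
    for t in range(num_chars+len(prefix)-1):
        # Feed the previous time step's output as the current time step's input
        X = to_onehot(torch.tensor([[output[-1]]], device=device), vocab_size)
        # Compute the output and update the hidden state
        Y, state = rnn(X, state, params)
        # The next input is either the next prefix character or the current best prediction
        if t<len(prefix)-1:
            output.append(char2index[prefix[t+1]])
        else:
            output.append(Y[0].argmax(dim=1).item())
    return ''.join([index2char[i] for i in output])

# Quick test

# The model parameters are random, so the prediction is random too.

predict_rnn('love', 10, rnn, params, init_rnn_state, dim_hidden) # >>> 'loveSv,Sv,Sv,S'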
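The control flow of the prediction loop (warm up on the prefix, then keep appending the highest-scoring character) can be followed without any network. In the sketch below, `toy_scores`, `toy_step`, and `greedy_continue` are hypothetical stand-ins: a fixed score table plays the role of the trained model, and a step counter plays the role of the hidden state.

```python
# Hypothetical stand-in for a trained model: fixed scores for the next character
toy_scores = {
    'a': {'a': 0.1, 'b': 0.7, 'c': 0.2},
    'b': {'a': 0.2, 'b': 0.1, 'c': 0.7},
    'c': {'a': 0.8, 'b': 0.1, 'c': 0.1},
}

def toy_step(char, state):
    """Return (scores for the next character, new state); the state counts steps."""
    return toy_scores[char], state + 1

def greedy_continue(prefix, num_chars, step_fn):
    state = 0
    output = [prefix[0]]
    for t in range(num_chars + len(prefix) - 1):
        scores, state = step_fn(output[-1], state)
        if t < len(prefix) - 1:
            # Warm-up: still inside the prefix, so feed the true next character
            output.append(prefix[t + 1])
        else:
            # Generation: take the highest-scoring character
            output.append(max(scores, key=scores.get))
    return ''.join(output), state

text, steps_run = greedy_continue('ab', 4, toy_step)
print(text, steps_run)
```

Always taking the argmax tends to fall into repeating loops, which is consistent with the repetitive sample output shown for the untrained model; sampling from the predicted distribution is a common alternative.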

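Model Training Function

- Use perplexity to evaluate the model.

- Clip the gradients before updating the model parameters.

- Different sampling methods for sequential data lead to different ways of initializing the hidden state (this post draws shuffled batches, so the from-scratch training loop re-initializes the state for every batch).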

# Gradient descent

def sgd(params, lr, batch_size):
    for param in params:
        param.data -= lr * param.grad / batch_size

# This function is also written with the later GRU and LSTM in mind

# clipping_theta: gradient-clipping threshold

# print_every: print information every this many epochs

# print_len: length of the continuation

# prefixes: list of prefixes to continue, so several can be continued at once

def train_and_predict(rnn, get_params, init_rnn_state, dim_hidden, num_epochs,
                      lr, clipping_theta, print_every, print_len, prefixes):
    params = get_params()
    loss = nn.CrossEntropyLoss()
    for epoch in range(num_epochs):
        l_sum, n, start = 0.0, 0, time.time()
        for X_batch, y_batch in train_loader:
            # Batches are shuffled, so every batch starts from a fresh state
            state = init_rnn_state(X_batch.shape[0], dim_hidden)
            inputs = to_onehot(X_batch.to(device), vocab_size)
            outputs, state = rnn(inputs, state, params)
            # Concatenate the per-step outputs: (seq_len * batch_size, vocab_size)
            outputs = torch.cat(outputs, dim=0)
            # Transpose so the labels are time-major, matching outputs
            y = torch.flatten(y_batch.T)
            l = loss(outputs, y.long().to(device))
            # Zero the gradients
            if params[0].grad is not None:
                for param in params:
                    param.grad.data.zero_()
            l.backward()
            grad_clipping(params, clipping_theta) # clip the gradients
            # The loss is already a mean, so the gradients need no further averaging
            sgd(params, lr, 1)
            l_sum += l.item() * y.shape[0]
            n += y.shape[0]
        if (epoch + 1) % print_every == 0:
            time1 = time.time()
            print('epoch %d, perplexity %f, time %.2f sec' % (epoch + 1, math.exp(l_sum / n), time1 - start))
            for prefix in prefixes:
                print(' -', predict_rnn(prefix, print_len, rnn, params, init_rnn_state, dim_hidden))
            print('predict_rnn time %.2f sec\n'%(time.time()-time1))

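# This is only a learning example, so the settings are kept simple

num_epochs = 10

lr = 1e2

clipping_theta = 1e-2

print_every = 2 # print predictions every 2 epochs

print_len = 200 # length of the continuation

prefixes = ['love', 'hello'] # prefixes to continue

# Start training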

train_and_predict(rnn, get_rnn_params, init_rnn_state, dim_hidden, num_epochs, lr, clipping_theta, print_every, print_len, prefixes)

PyTorch RNN Module

# rnn

class RNNModel(nn.Module):
    def __init__(self, vocab_size, dim_hidden):
        super(RNNModel, self).__init__()
        self.vocab_size = vocab_size
        self.hidden_size = dim_hidden
        self.rnn = nn.RNN(input_size=self.vocab_size, hidden_size=self.hidden_size)
        self.linear = nn.Linear(self.hidden_size, self.vocab_size)

    def forward(self, inputs, state):
        # inputs.shape: (batch_size, seq_len)
        X = to_onehot(inputs, self.vocab_size)
        X = torch.stack(X) # X.shape: (seq_len, batch_size, vocab_size)
        hiddens, state = self.rnn(X, state)
        # Merge the time and batch axes: (seq_len * batch_size, hidden_size)
        hiddens = hiddens.view(-1, hiddens.shape[-1])
        output = self.linear(hiddens)
        return output, state

# Prediction function

def predict_rnn_pytorch(prefix, num_chars, model):
    state = None
    output = [char2index[prefix[0]]] # output holds the prefix plus the num_chars predicted characters
    for t in range(num_chars + len(prefix) - 1):
        X = torch.tensor([output[-1]], device=device).view(1, 1)
        (Y, state) = model(X, state) # the forward pass does not need the parameters passed in
        if t < len(prefix) - 1:
            output.append(char2index[prefix[t + 1]])
        else:
            output.append(Y.argmax(dim=1).item())
    return ''.join([index2char[i] for i in output])

model = RNNModel(vocab_size, dim_hidden).to(device)

print(predict_rnn_pytorch('love', 10, model))

# Training function

def train_and_predict_pytorch(model, dim_hidden, num_epochs, lr, clipping_theta, print_every, print_len, prefixes):
    loss = nn.CrossEntropyLoss()
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    model.to(device)
    for epoch in range(num_epochs):
        l_sum, n, start = 0.0, 0, time.time()
        state = None
        for X_batch, y_batch in train_loader:
            X_batch = X_batch.to(device)
            y_batch = y_batch.to(device)
            if state is not None:
                # Detach the hidden state from the computation graph so that
                # backpropagation stops at the batch boundary
                # (train_loader shuffles, so the carried-over state comes from an unrelated batch)
                if isinstance(state, tuple): # LSTM, state: (h, c)
                    state[0].detach_()
                    state[1].detach_()
                else:
                    state.detach_()
            (output, state) = model(X_batch, state) # output.shape: (num_steps * batch_size, vocab_size)
            y = torch.flatten(y_batch.T)
            l = loss(output, y.long())
            optimizer.zero_grad()
            l.backward()
            # model.parameters() is a generator and grad_clipping loops over it twice,
            # so pass a list; otherwise the scaling loop would see nothing
            grad_clipping(list(model.parameters()), clipping_theta)
            optimizer.step()
            l_sum += l.item() * y.shape[0]
            n += y.shape[0]
        if (epoch + 1) % print_every == 0:
            time1 = time.time()
            print('epoch %d, perplexity %.2f, time %.2f sec' % (epoch + 1, math.exp(l_sum / n), time1 - start))
            for prefix in prefixes:
                print(' -', predict_rnn_pytorch(prefix, print_len, model))
            print('predict_rnn time %.2f sec\n'%(time.time()-time1))

# Train

num_epochs = 10

lr = 1e-2

clipping_theta = 1e-2

print_every= 2

print_len = 200

prefixes = ['love', 'hello']

train_and_predict_pytorch(model, dim_hidden, num_epochs, lr, clipping_theta, print_every, print_len, prefixes)

GRU

def get_gru_params():
    def _init(shape):
        param = torch.zeros(shape, device=device, dtype=torch.float32)
        nn.init.normal_(param, 0, 0.1)
        return torch.nn.Parameter(param)
    # Reset gate
    W_xr = _init((dim_input, dim_hidden))
    W_hr = _init((dim_hidden, dim_hidden))
    b_r = _init((1, dim_hidden))
    # Update gate
    W_xz = _init((dim_input, dim_hidden))
    W_hz = _init((dim_hidden, dim_hidden))
    b_z = _init((1, dim_hidden))
    # Candidate hidden state
    W_xh = _init((dim_input, dim_hidden))
    W_hh = _init((dim_hidden, dim_hidden))
    b_h = _init((1, dim_hidden))
    # Output layer
    W_hq = _init((dim_hidden, dim_output))
    b_q = _init((1, dim_output))
    return W_xr, W_hr, b_r, W_xz, W_hz, b_z, W_xh, W_hh, b_h, W_hq, b_q

def gru(inputs, state, params):
    W_xr, W_hr, b_r, W_xz, W_hz, b_z, W_xh, W_hh, b_h, W_hq, b_q = params
    H = state
    outputs = []
    for X in inputs:
        R = torch.sigmoid(torch.matmul(X, W_xr)+torch.matmul(H, W_hr)+b_r)
        Z = torch.sigmoid(torch.matmul(X, W_xz)+torch.matmul(H, W_hz)+b_z)
        H_hat = torch.tanh(torch.matmul(X, W_xh)+torch.matmul(R*H, W_hh)+b_h)
        # The update gate Z blends the old state with the candidate state
        H = Z*H + (1-Z) * H_hat
        Y = torch.matmul(H, W_hq) + b_q
        outputs.append(Y)
    return outputs, H
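To see what the update gate does in the `gru` function above, the same equations can be run with scalars instead of matrices. This is a sketch under assumed values: `gru_step_scalar` and the weight dictionaries are made up for illustration, and the biases of plus or minus 20 are chosen only to push the gate close to 1 or 0.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def gru_step_scalar(x, h, p):
    """One GRU step where the input, state, and all weights are scalars."""
    r = sigmoid(x * p['w_xr'] + h * p['w_hr'] + p['b_r'])   # reset gate
    z = sigmoid(x * p['w_xz'] + h * p['w_hz'] + p['b_z'])   # update gate
    h_hat = math.tanh(x * p['w_xh'] + (r * h) * p['w_hh'] + p['b_h'])
    return z * h + (1.0 - z) * h_hat

base = dict(w_xr=0.5, w_hr=0.5, b_r=0.0,
            w_xz=0.5, w_hz=0.5, b_z=0.0,
            w_xh=1.0, w_hh=1.0, b_h=0.0)
keep = dict(base, b_z=20.0)        # z close to 1: keep the old state
overwrite = dict(base, b_z=-20.0)  # z close to 0: take the candidate state

h_prev, x = 0.3, 1.0
h_keep = gru_step_scalar(x, h_prev, keep)
h_new = gru_step_scalar(x, h_prev, overwrite)
print(h_keep, h_new)
```

With the gate near 1 the state passes through almost unchanged, which is the mechanism that lets a GRU carry information across many steps.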

# Test

params = get_gru_params()

predict_rnn('love', 10, gru, params, init_rnn_state, dim_hidden) # >>> 'loveWnntntntnn'

# Train

num_epochs = 10

lr = 1e2

clipping_theta = 1e-2

print_every= 2

print_len = 200

prefixes = ['love', 'hello']

train_and_predict(gru, get_gru_params, init_rnn_state, dim_hidden, num_epochs, lr, clipping_theta, print_every, print_len, prefixes)

PyTorch GRU Module

class GRUModel(nn.Module):
    def __init__(self, vocab_size, dim_hidden):
        super(GRUModel, self).__init__()
        self.vocab_size = vocab_size
        self.hidden_size = dim_hidden
        self.rnn = nn.GRU(input_size=self.vocab_size, hidden_size=self.hidden_size)
        self.linear = nn.Linear(self.hidden_size, self.vocab_size)

    def forward(self, inputs, state):
        # inputs.shape: (batch_size, seq_len)
        X = to_onehot(inputs, self.vocab_size)
        X = torch.stack(X) # X.shape: (seq_len, batch_size, vocab_size)
        hiddens, state = self.rnn(X, state)
        hiddens = hiddens.view(-1, hiddens.shape[-1])
        output = self.linear(hiddens)
        return output, state

# Test

model = GRUModel(vocab_size, dim_hidden).to(device)

predict_rnn_pytorch('love', 10, model) # >>> loveHbH''H''H'

# Train

num_epochs = 10

lr = 1e-2

clipping_theta = 1e-2

print_every= 2

print_len = 200

prefixes = ['love', 'hello']

train_and_predict_pytorch(model, dim_hidden, num_epochs, lr, clipping_theta, print_every, print_len, prefixes)

LSTM

def get_lstm_params():
    def _init(shape):
        param = torch.zeros(shape, device=device, dtype=torch.float32)
        nn.init.normal_(param, 0, 0.1)
        return torch.nn.Parameter(param)
    # Forget gate
    W_xf = _init((dim_input, dim_hidden))
    W_hf = _init((dim_hidden, dim_hidden))
    b_f = _init((1, dim_hidden))
    # Input gate
    W_xi = _init((dim_input, dim_hidden))
    W_hi = _init((dim_hidden, dim_hidden))
    b_i = _init((1, dim_hidden))
    # Output gate
    W_xo = _init((dim_input, dim_hidden))
    W_ho = _init((dim_hidden, dim_hidden))
    b_o = _init((1, dim_hidden))
    # Candidate memory cell
    W_xc = _init((dim_input, dim_hidden))
    W_hc = _init((dim_hidden, dim_hidden))
    b_c = _init((1, dim_hidden))
    # Output layer
    W_hq = _init((dim_hidden, dim_output))
    b_q = _init((1, dim_output))
    return W_xf, W_hf, b_f, W_xi, W_hi, b_i, W_xo, W_ho, b_o, W_xc, W_hc, b_c, W_hq, b_q

# init_lstm_state initializes the hidden variables; it returns a tuple (H, C).

def init_lstm_state(batch_size, num_hiddens):
    return (torch.zeros((batch_size, num_hiddens), device=device),
            torch.zeros((batch_size, num_hiddens), device=device))

def lstm(inputs, state, params):
    W_xf, W_hf, b_f, W_xi, W_hi, b_i, W_xo, W_ho, b_o, W_xc, W_hc, b_c, W_hq, b_q = params
    (H, C) = state
    outputs = []
    for X in inputs:
        F = torch.sigmoid(torch.matmul(X, W_xf)+torch.matmul(H, W_hf)+b_f)
        I = torch.sigmoid(torch.matmul(X, W_xi)+torch.matmul(H, W_hi)+b_i)
        O = torch.sigmoid(torch.matmul(X, W_xo)+torch.matmul(H, W_ho)+b_o)
        C_hat = torch.tanh(torch.matmul(X, W_xc)+torch.matmul(H, W_hc)+b_c)
        # Forget part of the old cell and write part of the candidate cell
        C = F * C + I * C_hat
        H = O * torch.tanh(C)
        Y = torch.matmul(H, W_hq) + b_q
        outputs.append(Y)
    return outputs, (H, C)
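One way to compare the three from-scratch cells is to count their parameters. The helper below is an illustrative sketch (`count_params` is not part of the notebook) and counts only the layouts built by `get_rnn_params`, `get_gru_params`, and `get_lstm_params`: one, three, and four blocks of (W_x, W_h, b) plus a shared output layer. The built-in `nn.RNN`, `nn.GRU`, and `nn.LSTM` modules store their weights differently, so their totals will not match these numbers exactly.

```python
def count_params(dim_input, dim_hidden, dim_output, num_blocks):
    """Count parameters for the from-scratch layouts used in this post.

    Each block holds W_x (dim_input x dim_hidden), W_h (dim_hidden x dim_hidden)
    and a bias (dim_hidden); the output layer adds W_hq and b_q.
    """
    per_block = dim_input * dim_hidden + dim_hidden * dim_hidden + dim_hidden
    output_layer = dim_hidden * dim_output + dim_output
    return num_blocks * per_block + output_layer

vocab, hidden = 38, 32  # vocab_size and dim_hidden from this post
counts = {
    'rnn': count_params(vocab, hidden, vocab, 1),
    'gru': count_params(vocab, hidden, vocab, 3),
    'lstm': count_params(vocab, hidden, vocab, 4),
}
print(counts)
```

The GRU and LSTM carry roughly two to three times the weights of the plain RNN at the same hidden size, which is worth keeping in mind when comparing their training times.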

# Train

num_epochs = 10

lr = 1e2

clipping_theta = 1e-2

print_every= 2

print_len = 200

prefixes = ['love', 'hello']

train_and_predict(lstm, get_lstm_params, init_lstm_state, dim_hidden, num_epochs, lr, clipping_theta, print_every, print_len, prefixes)

PyTorch LSTM Module

class LSTMModel(nn.Module):
    def __init__(self, vocab_size, dim_hidden):
        super(LSTMModel, self).__init__()
        self.vocab_size = vocab_size
        self.hidden_size = dim_hidden
        self.lstm = nn.LSTM(input_size=self.vocab_size, hidden_size=self.hidden_size)
        self.linear = nn.Linear(self.hidden_size, self.vocab_size)

    def forward(self, inputs, state):
        # inputs.shape: (batch_size, seq_len)
        X = to_onehot(inputs, self.vocab_size)
        X = torch.stack(X) # X.shape: (seq_len, batch_size, vocab_size)
        # For nn.LSTM the state is a tuple (h, c)
        hiddens, state = self.lstm(X, state)
        hiddens = hiddens.view(-1, hiddens.shape[-1])
        output = self.linear(hiddens)
        return output, state

# Train

model = LSTMModel(vocab_size, dim_hidden).to(device)

num_epochs = 10

lr = 1e-2

clipping_theta = 1e-2

print_every= 2

print_len = 200

prefixes = ['love', 'hello']

train_and_predict_pytorch(model, dim_hidden, num_epochs, lr, clipping_theta, print_every, print_len, prefixes)

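Deep Recurrent Neural Networks

Bidirectional Recurrent Neural Networks

References

- Dive into Deep Learning (动手学深度学习)

- Andrew Ng's video lecture series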