Handwritten Digit Recognition with MindSpore

Without further ado, here is the environment I used:

Python 3.9

MindSpore 2.1

Jupyter Notebook

Step 1: Import the required packages

import os
from matplotlib import pyplot as plt
import numpy as np
import mindspore as ms
import mindspore.context as context
import mindspore.dataset as ds
# The c_transforms modules are deprecated in MindSpore 2.x; the unified modules provide the same operations
import mindspore.dataset.transforms as C
import mindspore.dataset.vision as CV
from mindspore import nn
from mindspore.train import Model, Accuracy
from mindspore.train.callback import ModelCheckpoint, CheckpointConfig, LossMonitor, TimeMonitor

# Run in PyNative (eager) mode on the CPU
context.set_context(mode=context.PYNATIVE_MODE, device_target='CPU')

Step 2: Download the official MindSpore handwritten-digit dataset:

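# Requires the third-party "download" package (pip install download)
from download import download

url = ("https://mindspore-website.obs.cn-north-4.myhuaweicloud.com/"
       "notebook/datasets/MNIST_Data.zip")
path = download(url, "./", kind="zip", replace=True)

Step 3: Print basic information about the data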

DATA_DIR_TRAIN = "MNIST_Data/train"  # training set directory
DATA_DIR_TEST = "MNIST_Data/test"  # test set directory

# Load the data
ds_train = ds.MnistDataset(DATA_DIR_TRAIN)
ds_test = ds.MnistDataset(DATA_DIR_TEST)

# Show some basic properties of the datasets
print('Number of training samples:', ds_train.get_dataset_size())
print('Number of test samples:', ds_test.get_dataset_size())
image = next(ds_train.create_dict_iterator())
print('Image height/width/channels:', image['image'].shape)
print('Label of one image:', image['label'])  # 10 classes in total, encoded as the digits 0-9

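Step 4: Define the preprocessing function. It normalizes the pixel values, resizes each image to the target size, converts the layout from HWC to CHW, and applies these operations with map. It also shuffles the dataset and sets the batch size.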
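To make the normalization and layout change concrete, here is a small NumPy-only sketch of what the rescale and HWC-to-CHW steps do to a single image. The helper names `rescale` and `hwc_to_chw` and the random test image are made up for illustration; the real pipeline uses the MindSpore operations `CV.Rescale` and `CV.HWC2CHW`.

```python
import numpy as np


def rescale(image, rescale=1 / 255, shift=0.0):
    """Hypothetical helper: output = image * rescale + shift, as float32."""
    return image.astype(np.float32) * rescale + shift


def hwc_to_chw(image):
    """Hypothetical helper: move the channel axis from last to first."""
    return np.transpose(image, (2, 0, 1))


# A random stand-in for one MNIST image: 28x28 pixels, 1 channel, uint8 values
fake_image = np.random.randint(0, 256, size=(28, 28, 1), dtype=np.uint8)
processed = hwc_to_chw(rescale(fake_image))

print(processed.shape, processed.dtype)  # channel axis is now first
print(processed.min(), processed.max())  # pixel values now lie in about [0, 1]
```

With rescale=1/255 and shift=0, the 0-255 pixel range is mapped to roughly 0-1, which is the input range the network sees during training.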

def create_dataset(training=True, batch_size=128, resize=(28, 28),

rescale=1/255, shift=0, buffer_size=64):

ds = ms.dataset.MnistDataset(DATA_DIR_TRAIN if training else DATA_DIR_TEST)

# 定义 Map 操作尺寸缩放,归一化和通道变换

resize_op = CV.Resize(resize)

rescale_op = CV.Rescale(rescale,shift)

hwc2chw_op = CV.HWC2CHW()

# 对数据集进行 map 操作

ds = ds.map(input_columns="image", operations=[rescale_op,resize_op, hwc2chw_op])

ds = ds.map(input_columns="label", operations=C.TypeCast(ms.int32))

#设定打乱操作参数和 batchsize 大小

ds = ds.shuffle(buffer_size=buffer_size)

ds = ds.batch(batch_size, drop_remainder=True)

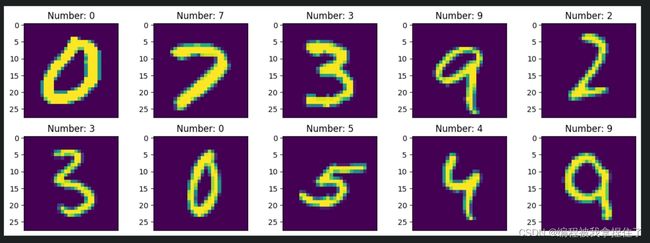

return dsStep5:画出来前10张·图片看看效果

# Show the first 10 images with their labels to check that the dataset is correct
dataset = create_dataset(training=False)
data = next(dataset.create_dict_iterator())
images = data['image'].asnumpy()
labels = data['label'].asnumpy()

plt.figure(figsize=(15, 5))
for i in range(10):
    plt.subplot(2, 5, i + 1)  # subplot indices start at 1, image indices at 0
    plt.imshow(np.squeeze(images[i]))
    plt.title('Number: %s' % labels[i])
    plt.xticks([])
plt.show()

Step 6: Build the network model

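# Build the model: a 3x3 convolution, batch norm, ReLU, and max pooling, followed by two
# residual blocks, global average pooling, and a dense layer with 10 outputs (digits 0-9).
# The softmax is applied inside the loss function, so the network returns raw logits.
# Note: _conv3x3, _bn, and ResidualBlock are helpers that must be defined before this cell runs.
class ForwardNN(nn.Cell):
    def __init__(self):
        super(ForwardNN, self).__init__()
        self.conv1 = _conv3x3(1, 64, stride=1)
        self.bn1 = _bn(64)
        self.relu = nn.ReLU()
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, pad_mode="same")
        self.resblock1 = ResidualBlock(64, 128, stride=1)
        self.resblock2 = ResidualBlock(128, 128, stride=1)
        self.flatten = nn.Flatten()
        self.GAP = nn.AdaptiveAvgPool2d((1, 1))
        self.fc = nn.Dense(128, 10)

    def construct(self, input_x):
        x = self.conv1(input_x)  # first convolution: 3x3, stride 1
        x = self.bn1(x)  # batch norm after the first convolution
        x = self.relu(x)  # ReLU activation
        x = self.maxpool(x)  # max pooling: 3x3, stride 2
        x = self.resblock1(x)
        x = self.resblock2(x)
        # Classification head: the loss expects 10 logits per image
        x = self.fc(self.flatten(self.GAP(x)))
        return x

# Sanity check: push a random batch through the network and inspect the shapes
in_ = ms.Tensor(np.random.randn(32, 1, 28, 28).astype(np.float32))
print(in_.shape)
test_net = ForwardNN()
aa = test_net(in_)
print(aa.shape)  # one row of 10 logits per image

Here I use a convolutional network that I built myself, with two residual blocks. Feel free to experiment with it, or try a fully connected network instead.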

Step 7: Set the hyperparameters and related metrics

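# Create the network, loss function, evaluation metric, and optimizer, and set the hyperparameters
lr = 0.001
num_epoch = 10
momentum = 0.9  # not used by nn.Adam below

net = ForwardNN()
loss = nn.SoftmaxCrossEntropyWithLogits(sparse=True, reduction='mean')
metrics = {"Accuracy": Accuracy()}
opt = nn.Adam(net.trainable_params(), lr)

With that, training can start.

# Assumed training call (not listed in the steps above), using Model and LossMonitor from Step 1
model = Model(net, loss_fn=loss, optimizer=opt, metrics=metrics)
ds_train = create_dataset(training=True)
model.train(num_epoch, ds_train, callbacks=[LossMonitor()])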
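For reference, the loss configured in this step (sparse labels, mean reduction) can be written out in a few lines of NumPy. This is only an illustrative sketch of the formula, not MindSpore's implementation; the function name `sparse_softmax_cross_entropy` and the toy inputs are made up.

```python
import numpy as np


def sparse_softmax_cross_entropy(logits, labels):
    """Mean cross-entropy between softmax(logits) and integer class labels."""
    # Subtract the row maximum first so that exp() cannot overflow
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    # Pick the log-probability of the correct class in each row
    picked = log_probs[np.arange(len(labels)), labels]
    return float(-picked.mean())


# With all-zero logits every class has probability 1/10,
# so the loss is ln(10) regardless of the labels
uniform_logits = np.zeros((4, 10), dtype=np.float32)
toy_labels = np.array([3, 1, 4, 1])
loss_value = sparse_softmax_cross_entropy(uniform_logits, toy_labels)
print(loss_value)
```

A loss close to ln(10), about 2.30, at the start of training therefore means the network is still guessing uniformly across the 10 digits.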

Step 8: Evaluate on the test set

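# Evaluate the model on the test set and print the overall accuracy
# (model is the mindspore.train.Model instance that wraps the trained network)
ds_eval = create_dataset(training=False)
metrics = model.eval(ds_eval)
print(metrics)

An accuracy of 97% is not bad.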
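The accuracy figure is simply the fraction of test images whose highest-scoring class matches the label. A minimal NumPy sketch of that calculation, with a made-up function name `top1_accuracy` and toy logits rather than real model output:

```python
import numpy as np


def top1_accuracy(logits, labels):
    """Fraction of rows whose arg-max class equals the integer label."""
    predictions = np.argmax(logits, axis=1)
    return float((predictions == labels).mean())


# Four toy samples with three classes each
toy_logits = np.array([
    [0.1, 2.0, 0.3],  # predicted class 1
    [1.5, 0.2, 0.1],  # predicted class 0
    [0.0, 0.1, 3.0],  # predicted class 2
    [0.9, 0.8, 0.7],  # predicted class 0
])
toy_labels = np.array([1, 0, 2, 1])

accuracy = top1_accuracy(toy_logits, toy_labels)
print(accuracy)  # 3 of the 4 predictions match, so 0.75
```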