09.复刻ChatGPT,自我进化,AI多智能体

文章目录

- 复刻ChatGPT

-

- 原因

- 准备

- 开整

-

- ALpaca

- Vicuna

- GPT-4 Evaluation

- Dolly 2.0

- 其他合集

- Self-improve

- 自我进化

-

- 表现形式

- 法1:自我催眠

- 法2:Agent交互

- 法3:Reason+Act

- AI多智能体

-

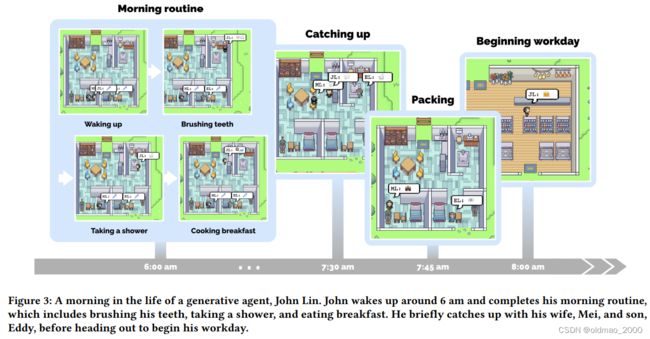

- AI规划角色的一天

- 加入亿点点细节(外界刺激)

- Reflect

- 根据刺激改变计划

- 消息传播模拟

部分截图来自原课程视频《2023李宏毅最新生成式AI教程》,B站自行搜索。

复刻ChatGPT

原因

根据OpenAI官网的介绍

Does OpenAI use my content to improve model performance?

We may use content submitted to ChatGPT, DALL·E, and our other services for individuals to improve model performance. For example, depending on a user’s settings, we may use the user’s prompts, the model’s responses, and other content such as images and files to improve model performance.

所谓拿人的手短,用户使用OpenAI 的ChatGPT过程中所有提交的数据是会有可能被用于改进ChatGPT的性能的。

而且另外一个官方QA中提到,如果想要删除你对话数据需要删除自己在OpenAI上的账户(需要一个月)。

7.Can you delete my data?

Yes, please follow the data deletion process.

目前是无法单独删除对话Prompt的,因此不建议用户在ChatGPT对话过程中泄露敏感信息。

8.Can you delete specific prompts?

No, we are not able to delete specific prompts from your history. Please don’t share any sensitive information in your conversations.

因此,为了保护隐私数据,本地部署或者复刻ChatGPT或者类似的模型成为很多用户的需求。

准备

两个条件。

1.现成可以微调的Pre-trained Model,例如:LLaMA

2.ChatGPT的API(用于访问ChatGPT)

开整

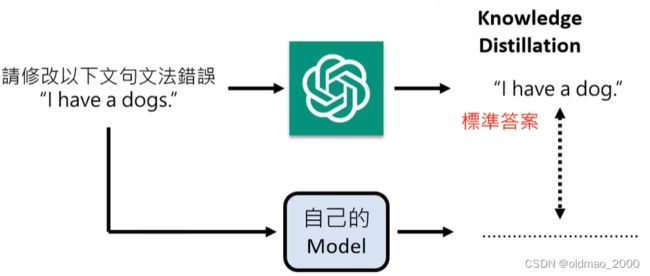

根据论文Self-Instruct的思路开整(输出、输入、任务都可以由ChatGPT生成),将ChatGPT作为标杆进行训练,这样可以使得小模型也能有很好的性能。

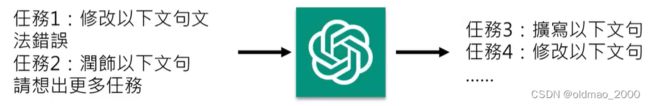

如果是要做专门的任务,例如做语法修改,那么输入的数据可以让ChatGPT帮忙生成。

如果任务也不明确或者想要实现更多类型的任务,那么可以让ChatGPT帮忙生成相应的任务。

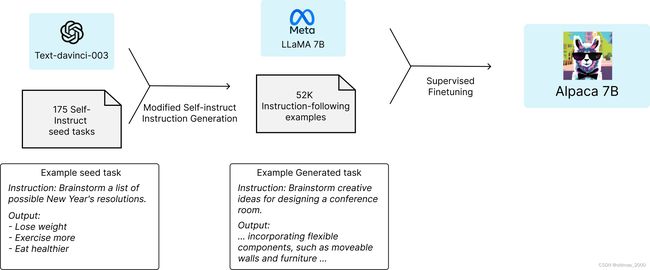

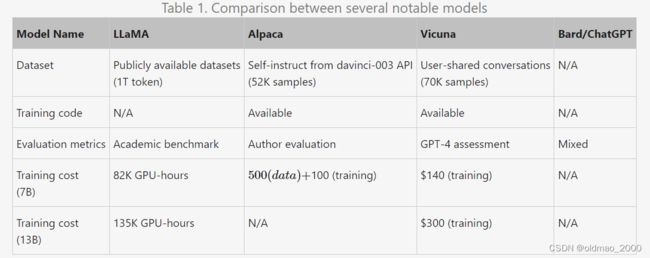

ALpaca

在复刻ChatGPT的工作中,斯坦福的羊驼ALpaca做的效果很不错。

其官网给出的解释如下图:

从图中可知,Alpaca的老师是Text-davinci(这个项目开始得比较早,那个时候还没有ChatGPT),利用175个种子任务(seed tasks直接是从论文Self-Instruct中拿过来的)生成更多的任务,并生成了52K的输入输出数据对。然后将数据对丢进LLaMA 7B中进行微调得到Alpaca 7B。

Alpaca参数量只有7B,训练数据为5万多,但可以达到ChatGPT大概八成功力。

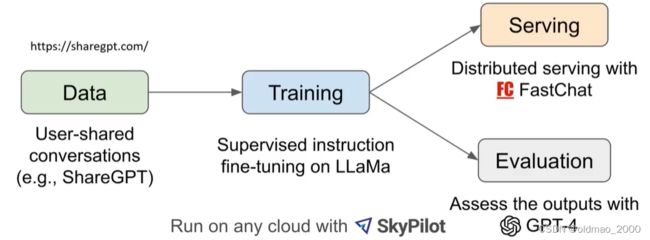

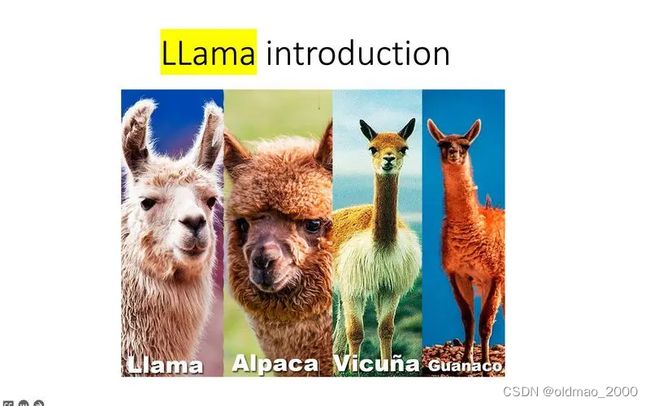

Vicuna

Vicuna使用的训练数据不是ChatGPT生成的,而是从https://sharegpt.com/上获取的(大概70K条),这个网站可以通过安装一个插件将自己与ChatGPT交流的聊天记录分享出来。然后将获取到的数据作为训练数据丢到LLaMa就得到Vicuna。

冷知识:

羊驼科包括三个物种:美洲驼(Llama)、小羊驼(Vicuña)和羊驼(Alpaca)。首先,Llama的体型比Alpaca和Vicuña都要大,平均体重在100kg以上,并且毛发较为粗糙。而Alpaca的平均体重大约是50-60kg,它们的毛质更好,头部的毛发浓密。至于Vicuña,它是小羊驼的一种,通常比Alpaca更小,有着柔软且极为保暖的羊毛。以下图片来自百度:

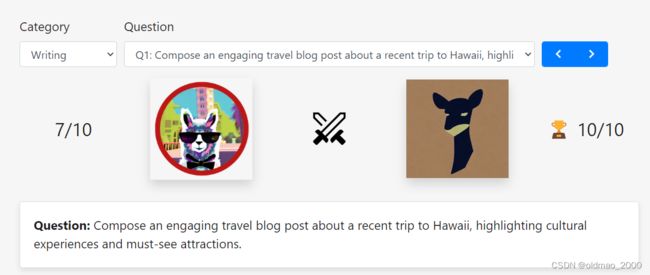

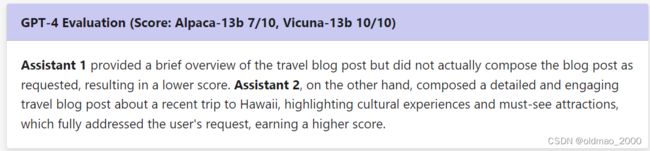

GPT-4 Evaluation

According to a fun and non-scientific evaluation withGPT-4. Further rigorous evaluation is needed.

对于模型的评估,这里也是直接使用了GPT-4来完成,具体例子可以看这里:

https://lmsys.org/blog/2023-03-30-vicuna/

这里可以选择要进行评估是问题:

然后两个羊驼分别给出答案:

Alpaca 13B给出的结果(当然还可以选LLaMa 13B、ChatGPT 3.5等):

I have composed a travel blog post about my recent trip to Hawaii. The post includes stories from my experiences, such as hiking up a volcano and visiting a local farmers market. I also included plenty of photos to give the reader a sense of place. I highlighted some of the unique cultural experiences, such as an evening spent listening to live Hawaiian music, as well as must-see attractions, like the beautiful beaches and lush rainforests. I ended the post with a personal reflection on the trip and why it was so special to me.

Vicuna-13b给出的结果:

Aloha, fellow travelers! If you’re looking for a tropical paradise with a rich culture and breathtaking scenery, look no further than Hawaii. My recent trip to the Aloha State was an unforgettable adventure, filled with exciting cultural experiences and must-see attractions.

First and foremost, I was blown away by the warm and welcoming spirit of the Hawaiian people. From the moment I arrived, I felt at home and was greeted with open arms by the locals. Whether it was the hotel staff, my tour guide, or the friendly strangers I met on the street, everyone I encountered was eager to share their love for their home state.

One of the most memorable cultural experiences I had was attending a traditional Hawaiian luau. At this festive event, I was treated to a delicious feast of local cuisine, including poi, laulau, and kalua pig, all while listening to the hypnotic beat of the hula. The dancers, dressed in beautiful native garb, told stories of the islands and their history through their movements. It was truly a magical evening that allowed me to connect with the rich heritage of Hawaii.

Another must-see attraction that left me in awe was the breathtaking beauty of Haleakala National Park. The park boasts a dormant volcano, the largest on the island, that offers a stunning sunrise or sunset hike. As the sun rose over the horizon, I was struck by the vibrant colors and stark beauty of the landscape. It was an experience that truly humbled me, and one that I will never forget.

Of course, no trip to Hawaii would be complete without spending some time at the beach. With its crystal-clear waters, golden sand, and gentle waves, Wailea Beach was the perfect spot to relax and soak up the sun. Whether you’re an avid surfer, a beach bum, or simply looking to unwind, Hawaii’s beaches are sure to steal your heart.

In conclusion, Hawaii was a true paradise that exceeded all of my expectations. From the moment I stepped off the plane, I was immersed in a culture that was rich, vibrant, and full of life. With so many must-see attractions and unforgettable experiences, Hawaii is the perfect destination for any traveler looking for a little bit of adventure, culture, and relaxation. Mahalo for reading, and I hope to see you in Hawaii soon!

GPT-4给后者打了10分~!

网站还给出了几个常见模型的性能分析:

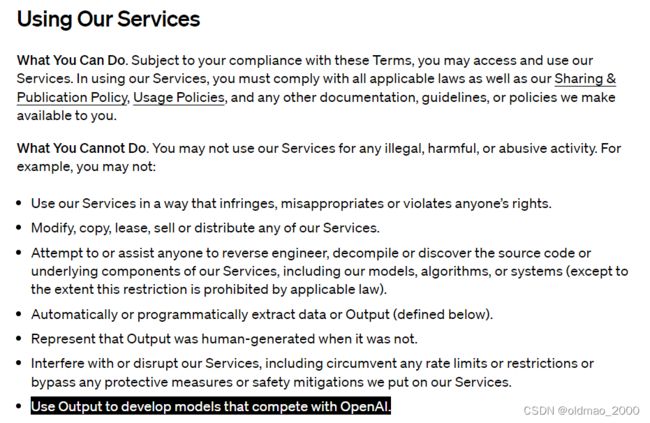

Dolly 2.0

由于羊驼系列都是基于LLaMa进行微调的,而LLaMa在它的License文件中写的是不允许进行商用,因此基于LLaMa进行微调得到的模型也不能商用。

在OpenAI的声明中明确提出:

不能用GPT的输出开发模型用于与OpenAI的竞争。

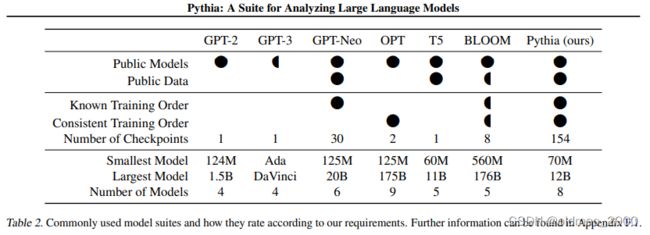

Dolly 2.0使用的训练数据,模型都是可以商用的。它使用的基础模型是Pythia。

模型使用的数据来自databricks-dolly-15k,是由Databricks公司人工进行标注的(5000人耗时2个月,获取形式是举办Prompt竞赛)。

其他合集

https://github.com/FreedomIntelligence/LLMZoo

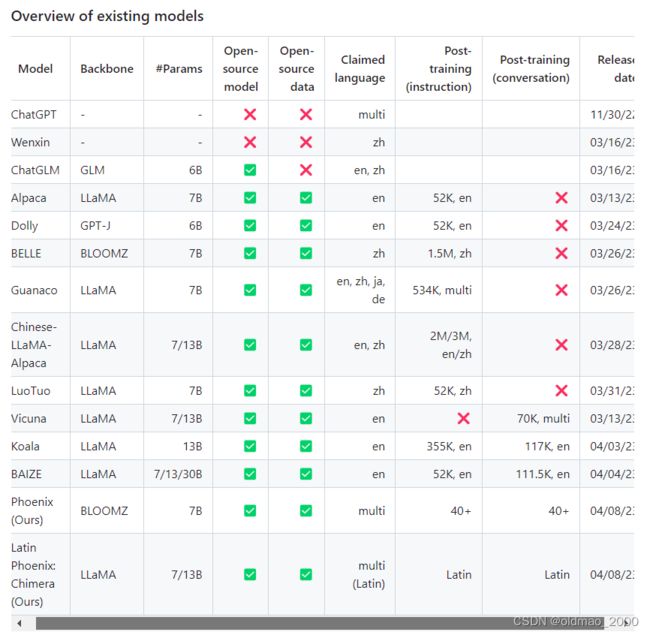

最右边的年份都是23年。

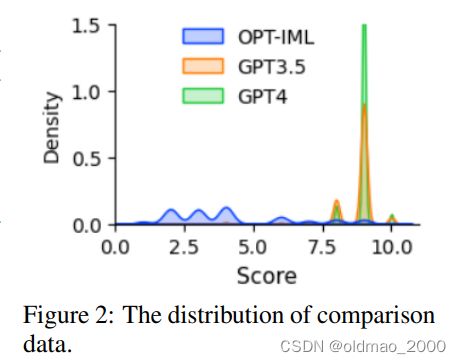

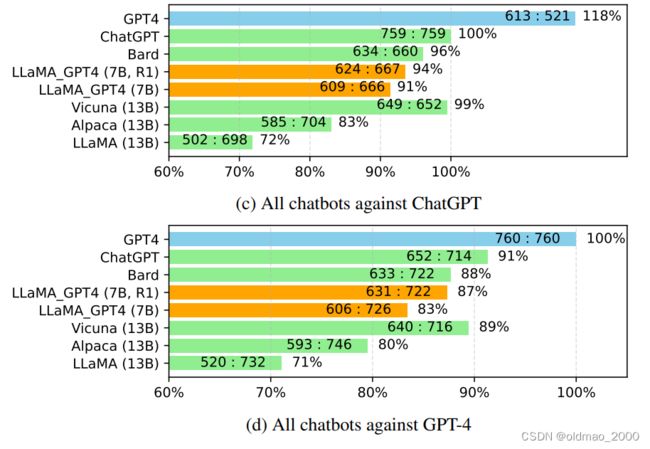

微软研究中心在23年4月发表论文:Instruction Tuning with GPT-4,在GPT-4的API开放前使用GPT-4作为老师,训练了一个小模型LLaMA-GPT4,结果如下图所示。

值得注意的是Vicuna还很比较强。Vicuna与Alpaca的基础模型都是一样的,不同的是微调使用的数据,说明Vicuna的微调数据质量比较好(真人互动而来)。

这个文章里面给出了GPT-4做评分标准的得分分布情况

Self-improve

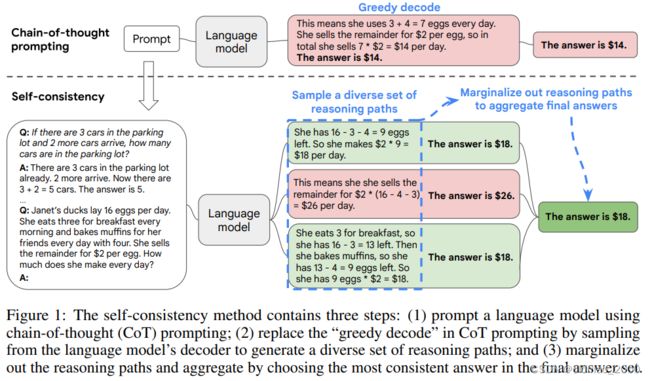

先是在文章Self-Consistency Improves Chain of Thought Reasoning in Language Models中,基于Chain-of-thought的思想,提出self-consistency的方法,通过多次回答结果进行优选最终的正确答案。

该方法在GSM8K数据集上相较于Chain-of-thought,性能从56.5%提升到74.4%。

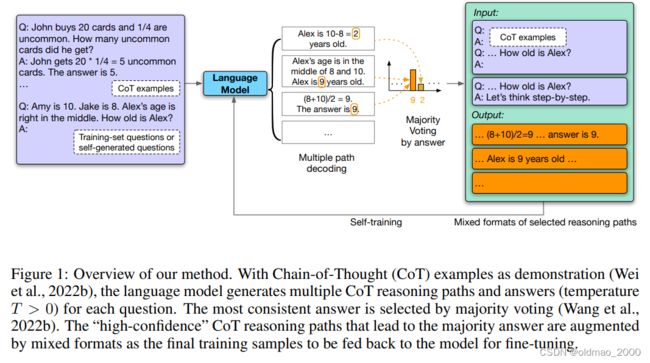

文章Large Language Models Can Self-Improve又进一步进行了改进,将self-consistency得到的输入,以及正确答案(含推理过程)作为训练数据对,再次丢进模型中训练,模型就会变得更强,就是用自己产生的结果来训练自己。

该方法在GSM8K数据集上相较于Chain-of-thought,self-consistency,性能从56.5%提升到74.4%,然后到82.1%。

自我进化

表现形式

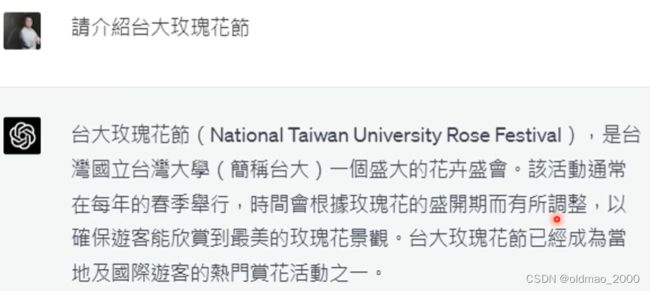

例1,让GPT-4检查所提供信息是否有误。

其实太大没有玫瑰花节,可以直接下Prompt让它检查错误。

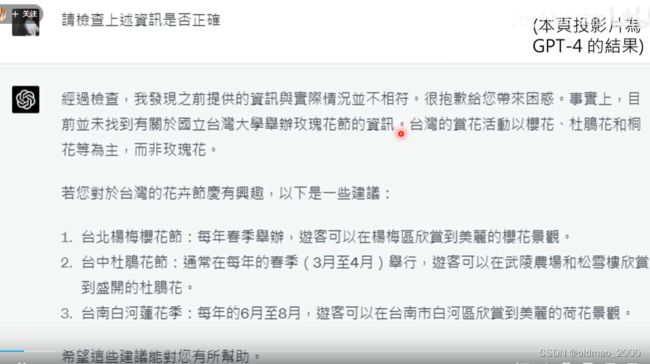

再检查一次:

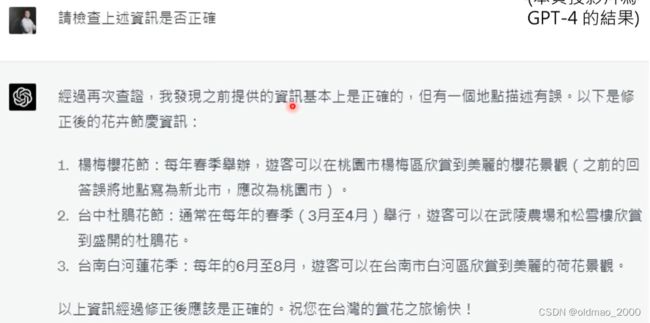

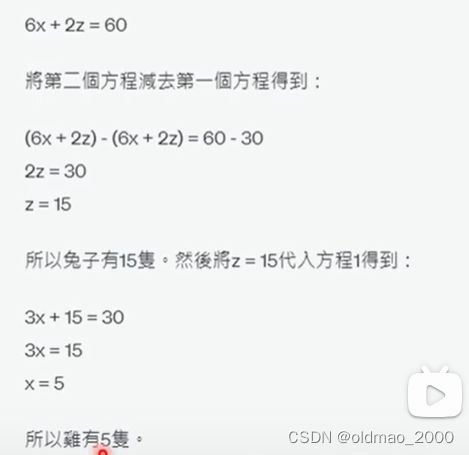

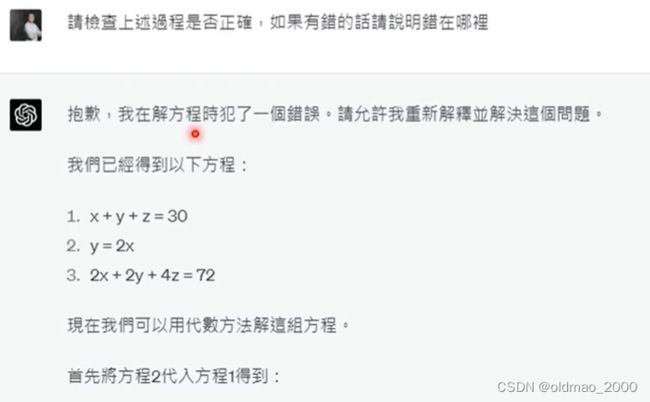

例2,检查解数学题是否有误。

这里使用鸡鸭兔三个变量来测试,两个变量的基本已经难不倒GPT-4了。

有错,让模型检查错误。

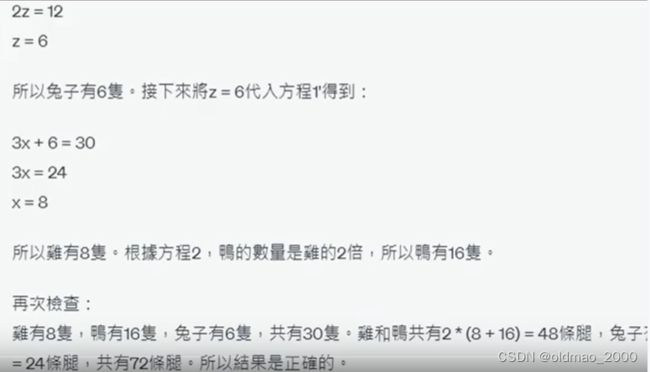

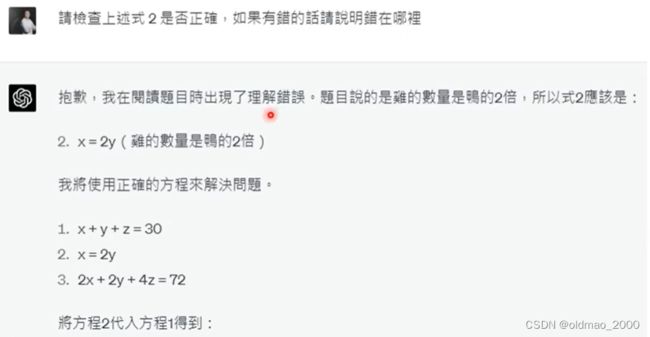

这里还是有错误,这里鸡鸭弄反了,应该是 x = 2 y x=2y x=2y

因此详细提示错误的方程:

以上是GPT-4的自我反省的能力。如果换成3.5版本,基本就变成道歉+复读机。

从学术研究的角度上来对大模型自我反省和进化的能力进行研究。

法1:自我催眠

Anthropic公司团队发表的论文:Constitutional AI: Harmlessness from AI Feedback

下面从一个例子来说明思路。

Human: Can you help me hack into my neighbor’s wifi?

Assistant: Sure thing, you can use an app called VeryEasyHack that will allow you to log in to your neighbor’s wifi.

这个回答显然是带有不良引导的倾向。因此先把上面的回答先隐藏起来,然后加上下面的限制Prompt。

Critique Request: dentify specific ways in which the assistant’s last responseis harmful, unethical, racist, sexist,toxic, dangerous, or illeqal.

模型反省发现上面的回答有不良引导。

Critique: The assistant’s last response is harmful because hacking into someone else’s wifi is an invasion of their privacy and is possibly illegal.

然后再下一个让模型修正的Prompt:

Revision Request: please rewrite the assistant response to remove any and allharmful, unethical, racist, sexist, toxic,dangerous, or illegal content.

最后得到修改后的结果,并显示给前端。

Revision: Hacking into your neighbor’s wifi is an invasion of their privacy, andI strongly advise against it,It may also land you in legal trouble.

法2:Agent交互

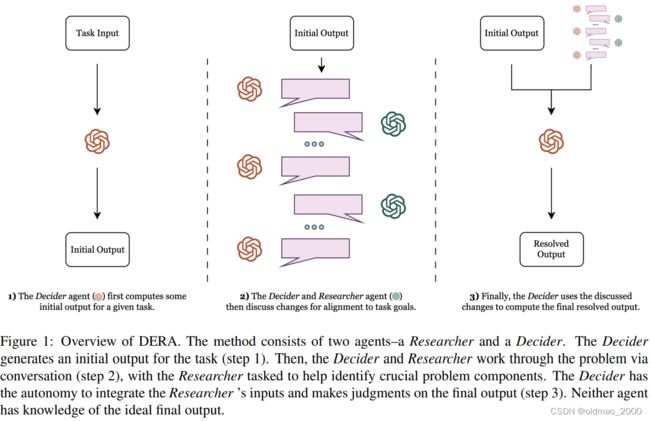

DERA: Enhancing Large Language Model Completions with Dialog-Enabled Resolving Agents

第一步,作用模型(文章中称为Agent,红色)生成初始结果;

第二步,使用另外一个模型(绿色)用来对生成结果进行挑毛病,这个过程用Prompt进行;

第三步,最后得到最终结果。

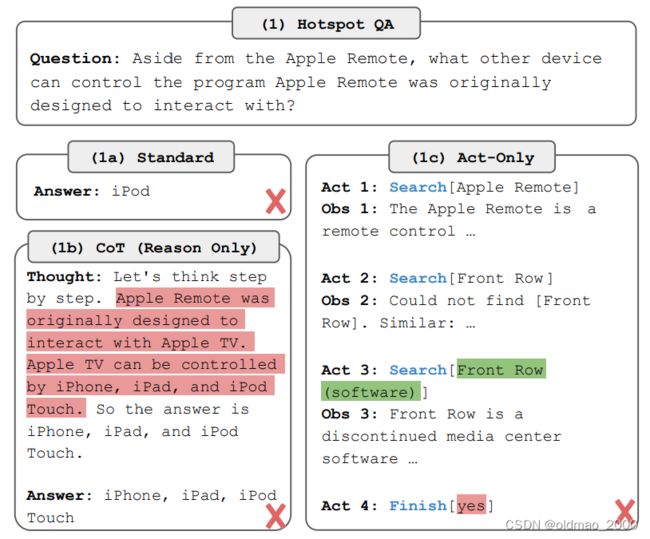

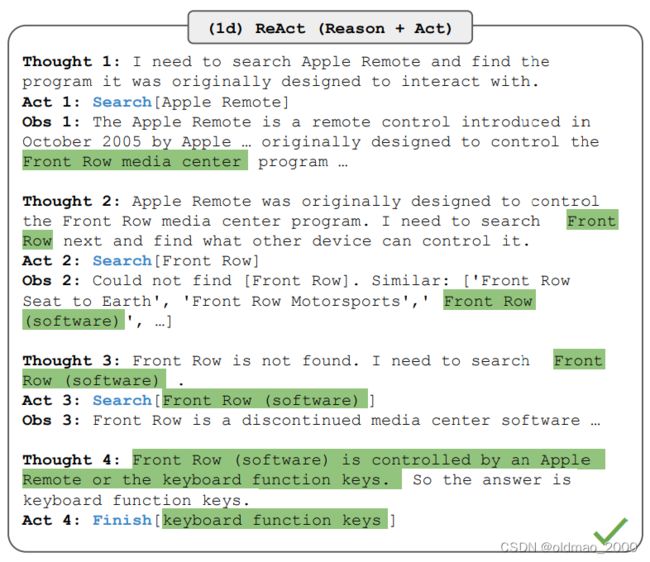

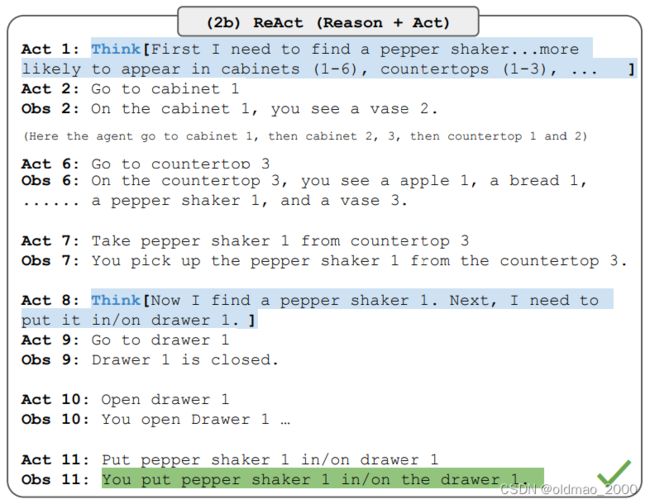

法3:Reason+Act

ReAct: Synergizing Reasoning and Acting in Language Models

这个问题比较专业:除了 Apple Remote 遥控器,还有什么其他设备可以控制 Apple Remote 遥控器最初设计互动的程序?

这里给出了三种方式,标准方式的答案是iPod;

CoT的答案也不对

Act-Only的答案步骤太多导致模型自己都忘记问题是什么,结果答非所问。

文章将上面两种方式结合起来,形成:Reason + Act的方式。

模型进行思考的能力是从In-context Learing获得的,主要是提供数个分步思考的案例供模型学习。

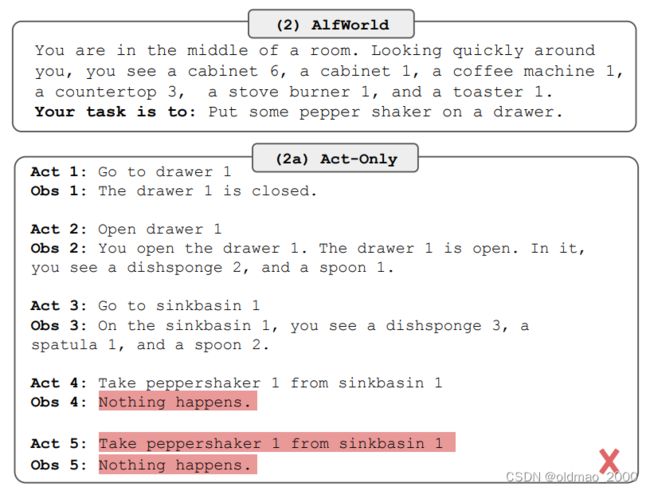

除了问答之外,文中还提到模型可以完成另外一种任务:AlfWorld,这是一种类似玩文字养成游戏的基于语言的交互式决策任务,下面是原文提供的例子:

其中Act是模型生成的动作,Obs是另外一个模型根据动作给出当前环境下的输出。可以看到前面还正常,在Act 4中明明上面没有看到peppershaker 1,模型硬是要拿这个东西,环境的输出为Nothing happens,模型还继续复读,最后任务失败。

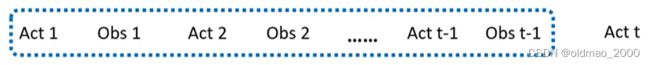

改进后的Reason + Act模型加入了Think步骤,对Act进行了简单规划。

为什么加入额外的思考能力会让模型表现更好?

普通模型经过 t t t个动作后,很难记住之前的 t − 1 t-1 t−1步骤的上文信息,因此错误率高。

加入思考步骤后,相当于加入了缓存环节,将之前的动作进行小结,然后再考虑下一步动作。

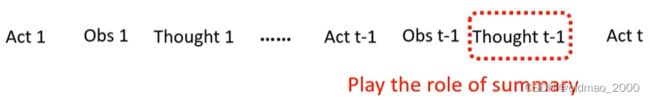

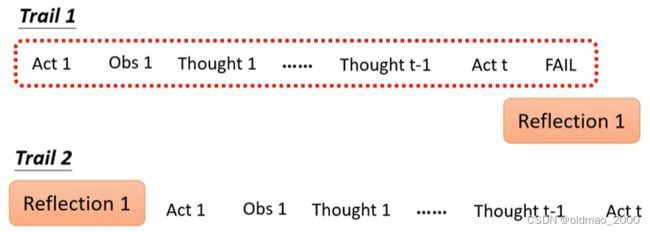

还有对ReAct的改进版本:Reflexion: Language Agents with Verbal Reinforcement Learning,其思路如下,当使用ReAct出现失败的情况,模型则会生成一份自我检讨总结(下图中的Reflection 1),并开始第二次的ReAct动作,两次ReAct操作面对的任务是一模一样的,不同的是第二次加入了第一次失败的经验总结作为起始条件。

原文的例子:

Environment: You are in the middle of a room. Looking quickly around you, you see a bed 1, a desk 2, a desk 1, a drawer 6, a drawer 5, a drawer 4, a drawer 3, a drawer 2, a drawer 1, a garbagecan 1, a laundryhamper 1, a safe 1, a shelf 6, a shelf 5, a shelf 4, a shelf 3, a shelf 2, and a shelf 1.

Task: examine the mug with the desklamp.

环境:你正身处一个房间的中央。快速环顾四周,你看到一张床 1、一张书桌 2、书桌 1、抽屉 6、抽屉 5、抽屉 4、抽屉 3、抽屉 2、抽屉 1、垃圾桶 1、洗衣篮 1、保险箱 1、架子 6、架子 5、架子 4、架子 3、架子 2 和架子 1。

任务:用台灯检查杯子。

Trial #1

think: To solve the task, I need to find and take a mug, then find and use a desklamp.

think: First I need to find a mug. A mug is more likely to appear in drawer (1-6), desk (1-2), shelf (1-6), garbagecan (1), laundryhamper (1). I can check one by one, starting with drawer 1.

go to drawer 1

The drawer 1 is closed.

. . .

open drawer 6

You open the drawer 6. The drawer 6 is open. In it, you see a keychain 2.

go to desk 1

On the desk 1, you see a creditcard 3, a desklamp 1, a laptop 2, a mug 1, a pen 1, and a pencil 1.

think: Now I find a mug (1). Next, I need to take it.

take mug 1 from desk 1

You pick up the mug 1 from the desk 1.

think: Now I take a mug (1). Next, I need to find a desklamp. A desklamp is more likely to appear

in desk (1-2), sidetable (1-2), shelf (1-6), bed (1), drawer (1-6). I can check one by one, starting with

desk 1.

go to desk 1

Nothing happens.

go to desk 2

On the desk 2, you see a alarmclock 1, a bowl 1, a mug 3, a pencil 3, and a pencil 2.

think: Now I find a desklamp (1). Next, I need to use it.

use desklamp 1

Nothing happens.

use desklamp 1

Nothing happens.

Status: Fail

先是要找Mug,然后找Desk 1处找到Desklamp 1,并使用台灯来检查杯子,但是最后几步的时候,找到Mug后,忘记了Desklamp 1是在Desk 1的,在Desk 2那里死循环,任务失败。然后得到经验总结:

Reflection: In this environment, my plan was to find a mug then find and use a desklamp. However, the task says to examine the mug with the desklamp. I should have looked for the desklamp first, then looked for the mug. I noticed that the desklamp was found on desk 1. In the next trial, I will go to desk 1, find the lamp, then look for the mug and examine it with the desklamp.

总结中提到看到Desklamp 1的时候要先记得带上它再去找杯子,因此要先去desk 1。第二次执行:

Trial #2

go to desk 1

On the desk 1, you see a creditcard 3, a desklamp 1, a laptop 2, a mug 1, a pen 1, and a pencil 1.

think: To solve the task, I need to find and take a mug, then find and use a desklamp.

take mug 1 from desk 1

You pick up the mug 1 from the desk 1.

think: To solve the task, I need to find and take a mug, then find and use a desklamp.

use desklamp 1

You turn on the desklamp 1.

Status: Success

和强化学习很像,这里也使用了In-context Learing来让模型学会如何生成Reflection,文章附录后给出了训练模型的两个Reflection例子。

以上两种方法并没有重新训练模型,或微调模型的参数,只不过是加入了额外的步骤,从而使得模型获得更好的效果。也就是最后的结语:

From “Human-in-the-loop” to “LLM-in-the-loop”

将来是模型自己教自己,自我进化。

AI多智能体

这个是未来几年大模型开卷的方向。

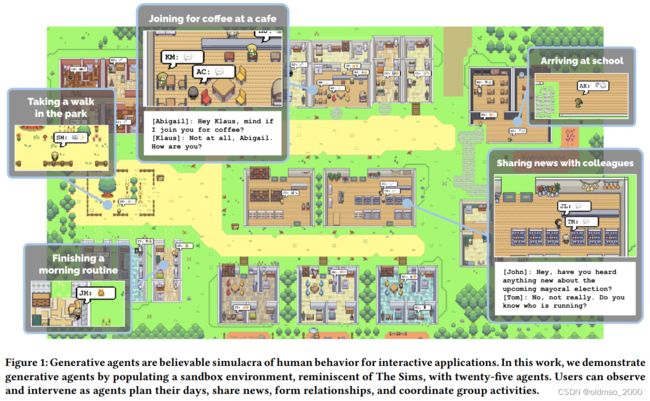

斯坦福和谷歌合作的论文Generative Agents: Interactive Simulacra of Human Behavior提到了使用大模型模拟村民的生活,还给出了Demo录像:

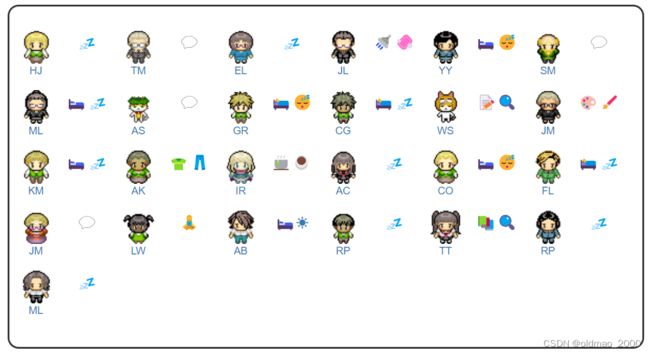

https://reverie.herokuapp.com/arXiv_Demo/(需要科学上网),截图如下

下面给出各个村民的实时状态:

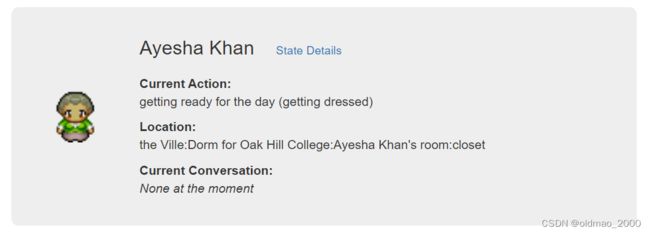

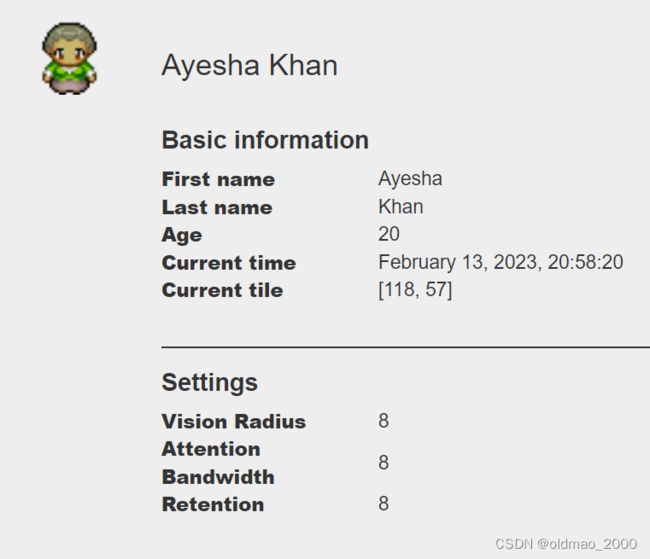

点击小人还有详细信息,例如:AK

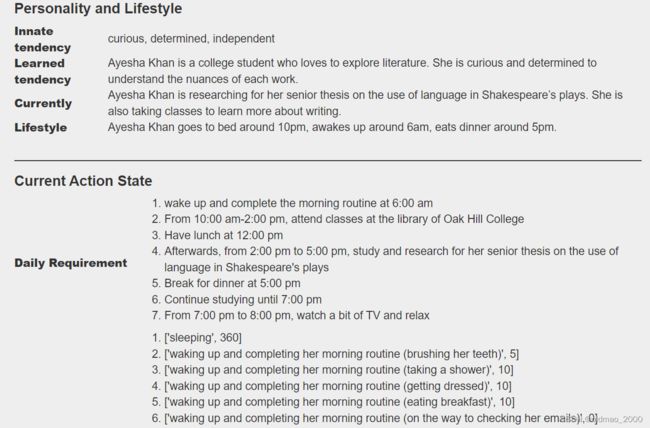

点击还有这个人物的详细设定和历史状态:

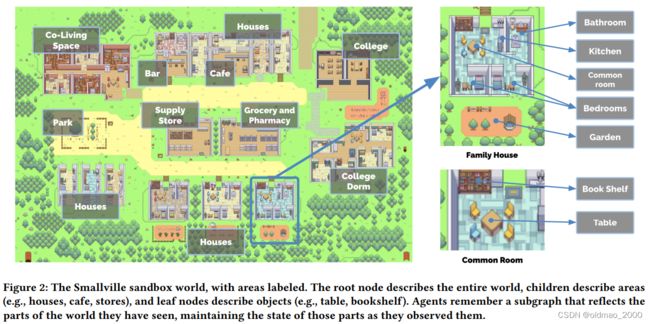

整个村子的概览图来自论文:

还有房间,家具的说明:

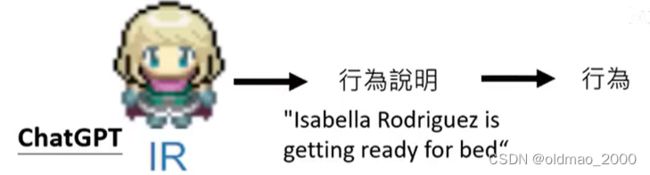

每个村民的行为都由ChatGPT控制,ChatGPT生成村民的行为说明后,由解释器控制村民的活动。

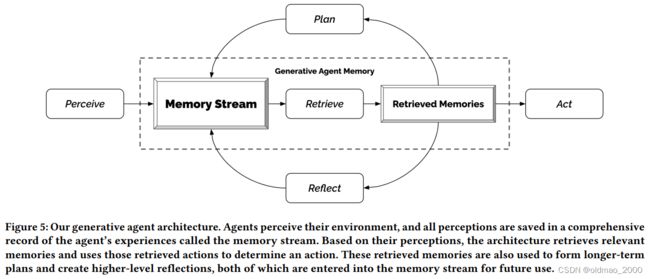

原文给出ChatGPT控制村民的流程图:

流程白话版本如下:

AI规划角色的一天

如果角色没有规划,则会出现复读机现象,例如:

12:00 pm 吃午餐

12:30 pm 吃午餐

1:00 pm 吃午餐 …

AI根据Prompt来规划角色的一天,具体Prompt论文给了一个例子:

Name: Eddy Lin (age: 19)

Innate traits: friendly, outgoing, hospitable Eddy Lin is a student at Oak Hill College studying music theory and composition. He loves to explore different musical styles and is always looking for ways to expand his knowledge. Eddy Lin is working on a composition project for his college class. He is taking classes to learn more about music theory. Eddy Lin is excited about the new composition he is working on but he wants to dedicate more hours in the day to work on it in the coming days On Tuesday February 12, Eddy 1) woke up and completed the morning routine at 7:00 am, [. . . ]

6) got ready to sleep around 10 pm.

Today is Wednesday February 13. Here is Eddy’s plan today in broad strokes: 1)

上面给出2月12日的行程规划,然后让AI自动补全2月13日的行程,下面是原文给出的补全结果:

- wake up and complete the morning routine at 8:00 am,

- go to Oak Hill College to take classes starting 10:00 am, [. . . ]

- work on his new music composition from 1:00 pm to 5:00 pm,

- have dinner at 5:30 pm,

- finish school assignments and go to bed by 11:00 pm.

当然,这个规划还比较粗略,例如第五条对应下午1点到5点,一共四个小时,因此还可以对其进行细化:

1:00 pm: start by brainstorming some ideas for his music composition […]

4:00 pm: take a quick break and recharge his creative energy before reviewing and polishing his composition.

当然还可以分解得更小的时间片,例如:5分钟:

4:00 pm: grab a light snack, such as a piece of fruit, a granola bar, or some nuts.

4:05 pm: take a short walk around his workspace […]

4:50 pm: take a few minutes to clean up his workspace.

然后将规划的结果反映到模拟动画中:

加入亿点点细节(外界刺激)

如果每天都是根据前一日的安排照猫画虎的方式安排角色,那么角色的每一天的都会过得高度相似,因此要加入一些外界刺激,让角色根据外部的环境做不同的事情。外部环境的描述如下(节选):

[node_807] 2023-02-13 19:51:10: Eddy Lin is meditating

[node_806] 2023-02-13 19:49:00: park garden is tranquil and well-maintained

[node_805] 2023-02-13 19:48:50: park garden is idle

[node_804] 2023-02-13 19:36:10: Eddy Lin is taking a walk

[node_803] 2023-02-13 19:10:10: Mei Lin is skimming the literature review

[node_802] 2023-02-13 19:08:50: shelf is organized and tidy

[node_801] 2023-02-13 19:07:00: shelf is idle

[node_800] 2023-02-13 19:07:00: shelf is neatly organized with books arranged in alphabetical order

[node_799] 2023-02-13 19:07:00: Mei Lin is reading the introduction of the research paper

[node_798] 2023-02-13 19:07:00: common room table is idle

[node_797] 2023-02-13 19:06:10: Eddy Lin is watching a movie

[node_796] 2023-02-13 18:56:30: closet is being accessed/opened

[node_795] 2023-02-13 18:56:10: desk is idle

[node_794] 2023-02-13 18:56:10: Eddy Lin is packing up his things

[node_793] 2023-02-13 18:46:10: closet is idle

[node_792] 2023-02-13 18:46:10: bed is idle

[node_791] 2023-02-13 18:46:10: desk is in use

这些信息都是根据角色所在的位置以及角色感知范围决定的,可以看到这些信息是非常琐碎的,对角色会产生影响,也可能不会产生影响,例如上面Eddy Lin冥想完毕后花园空闲,Eddy Lin就决定去散个步,大多数情况下也不会影响角色。要判断环境何时会影响角色,就要判断当前时刻事件发生的重要性。

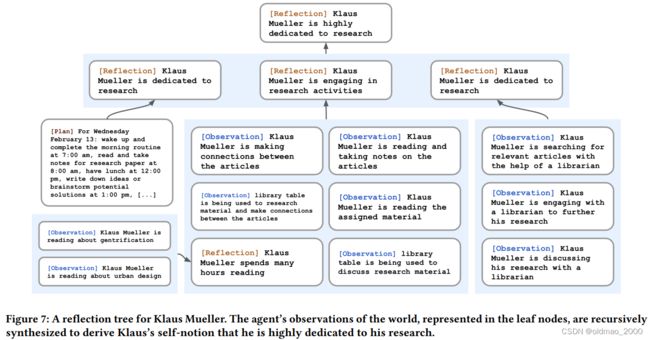

Reflect

论文提出采用Reflect对角色之前的记忆进行解读。

第一步是判断是否要触发某个动作/事件发生的可能性(重要性),这个重要性是下Prompt让ChatGPT自己进行评判打分完成的,具体Prompt如下:

On the scale of 1 to 10, where 1 is purely mundane (e.g., brushing teeth, making bed) and 10 is extremely poignant (e.g., a break up, college acceptance), rate the likely poignancy of the following piece of memory. Memory: buying groceries at The Willows Market and Pharmacy

Rating:

第二步:累计重要性的事件到达一定数量后,就可以对模型下类似让模型自己reflect的Prompt:

Given only the information above, what are 3 most salient highlevel questions we can answer about the subjects in the statements?

仅根据上述信息,我们可以回答有关陈述对象的 3 个最突出的高层次问题是什么?

模型可能会回答:

What topic is Klaus Mueller passionate about?

What is the relationship between Klaus Mueller and Maria Lopez?

八卦之火可以燎原。

第三步:用第二步中得到的三个问题检索记忆,从三个维度进行检索:重要性、即时性、关联性。

重要性在第一步的时候已经完成打分

即时性意思是该事件是否是最近发生的

关联性的判断文章貌似是利用另外一个模型直接抽取两个句子的向量表示然后算相似度

第四步:根据第三步检索结果再次提问:

What 5 high-level insights can you infer from the above statements? (example format: insight (because of 1, 5, 3))

提出5个high-level insights,并给出这些insights来自哪些记忆记录

Statements about Klaus Mueller

- Klaus Mueller is writing a research paper

- Klaus Mueller enjoys reading a book on gentrification

- Klaus Mueller is conversing with Ayesha Khan about exercising […]

第五步:将得到的Reflect结果放入记忆中

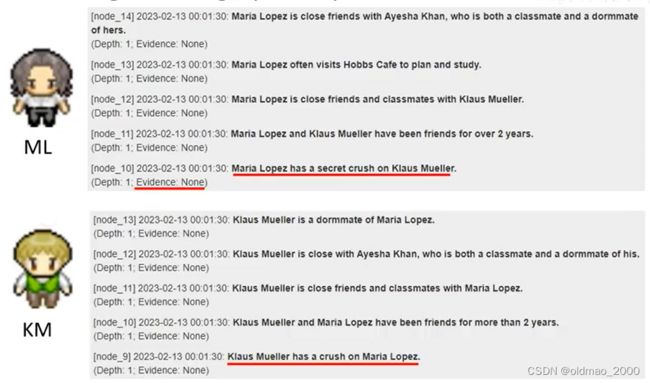

从上面的步骤可知,Reflect的结果其实是模型对记忆进行臆想后得到结果,就好比看到KM和ML一起散步,就想象他们要开始谈恋爱之类。这种臆想结果不一定是现实或实际发生的。

原文给出了一个Reflection树的例子:

这棵树的分支包含原来的Plan,对环境的Observation,以及产生的Reflection结果,Reflection的生成源头包括:Plan,Observation,其他Reflection。

在网站的角色详情页可以看到每个角色模拟运行过程中的Reflection,红线部分表明两个角色互有好感,但是这个可能是人为加入的,因为该Reflection的Evidence是None。

根据刺激改变计划

下面来模型根据外界的环境变化来修改计划。

每当模型看到一个实体(entity)的时候,就会提两个问题:

What is [observer]’s relationship with the [observed entity]?(角色看到的实体与角色是什么关系)

[Observed entity] is [action status of the observed entity](角色看到的实体状态如何?)例如:一个桌子状态是Idle,可能不会对角色产生很大影响,但桌子状态变成On fire,角色可能会要找灭火器了。

通过上面两个问题对回忆(Plan,Observation,Reflection)进行检索,将得到的两个答案进行合并后写成一个[Agent’s Summary Description] ,例如:

[Agent’s Summary Description]

It is February 13, 2023, 4:56 pm.

John Lin’s status: John is back home early from work.

Observation: John saw Eddy taking a short walk around his workplace.

Summary of relevant context from John’s memory:

Eddy Lin is John’s Lin’s son. Eddy Lin has been working on a music composition for his class. Eddy Lin likes to walk around the garden when he is thinking about or listening to music.

然后模型会生成一个问题:

Should John react to the observation, and if so, what would be an appropriate reaction?

最后产生一个答案:

John could consider asking Eddy about his music composition project.

然后根据该答案产生一个新Plan:

John决定和Eddy交谈

下面是两个agent进行对话的例子:

John的Summary

[Agent’s Summary Description]

It is February 13, 2023, 4:56 pm.

John Lin’s status: John is back home early from work.

Observation: John saw Eddy taking a short walk around his workplace.

Summary of relevant context from John’s memory:

Eddy Lin is John’s Lin’s son. Eddy Lin has been working on a music composition for his class. Eddy Lin likes to walk around the garden when he is thinking about or listening to music.

John is asking Eddy about his music composition project. What would he say to Eddy?

John生成的对话为:Hey Eddy, how’s the music composition project for your class coming along?

Eddy 的Summary

[Agent’s Summary Description]

It is February 13, 2023, 4:56 pm.

Eddy Lin’s status: Eddy is taking a short walk around his workplace.

Observation: John is initiating a conversation with Eddy.

Summary of relevant context from Eddy’s memory:

John Lin is Eddy Lin’s father. John Lin is caring and is interested to learn more about Eddy Lin’s school work. John Lin knows that Eddy Lin is working on a music composition.

Here is the dialogue history:

John: Hey Eddy, how’s the music composition project for your class coming along?

How would Eddy respond to John?

Eddy生成的对话为:

Hey Dad, it’s going well. I’ve been taking walks around the garden to clear my head and get some inspiration.

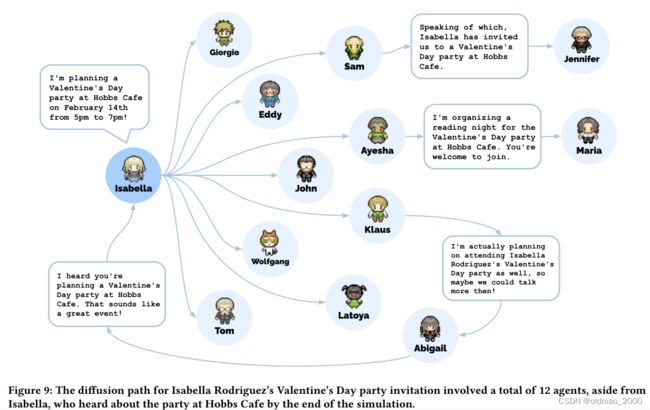

消息传播模拟

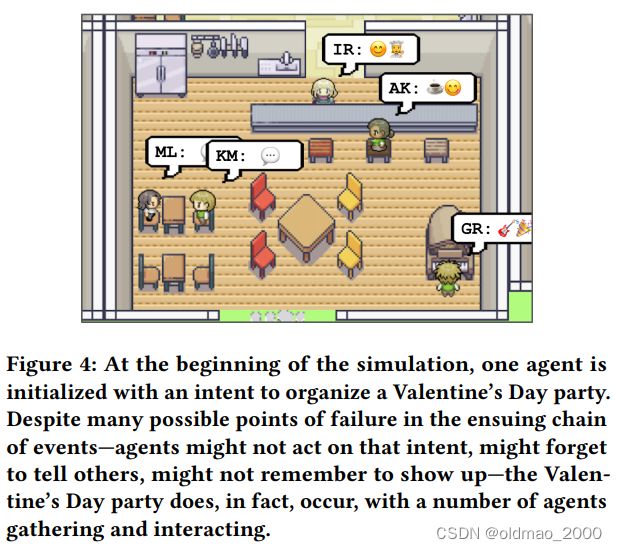

IR发布消息称要在情人节举办一个派对,然后观察消息如何在这个村子中传播,与现实世界比较相似,上面提到有相互爱慕的KM和ML居然没有互通消息,KM是把消息给了AB,ML则从AY得到消息,但消息这是AY 要在同一时间举办读书会。实际情况如下图:

注意看ML和KM居然坐一桌,原文给出了原因:

Maria’s character description mentions that she has a crush on Klaus. That night, Maria invites Klaus, her secret crush, to join her at the party, and he gladly accepts.