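Deep Learning Day 6: T6 Hollywood Star Recognition

- This post is a study log from the 365-Day Deep Learning Training Camp

- Original author: K同学啊 | Tutoring and custom projects available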

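Table of Contents

- Preface
- I. My Environment
- II. Code Implementation and Results
  - 1. Import libraries
  - 2. Set up the GPU (skip this step if you are using a CPU)
  - 3. Import the data
  - 4. Inspect the data
  - 5. Load the data
  - 6. Visualize the data
  - 7. Check the data again
  - 8. Configure the dataset
  - 9. Build the CNN model
  - 10. Compile the model
  - 11. Train the model
  - 12. Evaluate the model
  - 13. Predict on a specified image
- III. Key Concepts Explained
  - 1. Loss functions explained
    - 1.1. binary_crossentropy (log loss)
    - 1.2. categorical_crossentropy (multi-class log loss)
    - 1.3. sparse_categorical_crossentropy (sparse multi-class log loss)
  - 2. VGG network architecture explained
  - 1. Analysis of the results
  - 2. Trying model changes
    - 2.1 Adding L2 regularization to the loss
    - 2.2 Official VGG16 model
    - 2.2 Self-built VGG16 model
    - 2.3 Self-built VGG16 model + dropout on conv and dense layers + L2 regularization
    - 2.4 Self-built VGG16 model + dropout on dense layers + L2 regularization
- Summary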

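Preface

This post uses a CNN to recognize Hollywood stars. Unlike the previous post, this is a multi-class classification problem. It briefly presents the implementation code and its results, then discusses the concepts involved.

Keywords: loss functions explained, VGG network architecture explained.

I. My Environment

- OS: Windows 11

- Language: Python 3.8.6

- IDE: PyCharm

- Deep learning framework: TensorFlow 2.10.1

II. Code Implementation and Results

1. Import libraries

from PIL import Image
import numpy as np
from pathlib import Path
import tensorflow as tf
from tensorflow.keras import datasets, layers, models, regularizers
from tensorflow.keras.callbacks import ModelCheckpoint, EarlyStopping
import matplotlib.pyplot as plt
import warnings

warnings.filterwarnings('ignore')  # suppress warning messages that do not need to be printed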

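2. Set up the GPU (skip this step if you are using a CPU)

'''Preparation: set up the GPU (skip this step if you are using a CPU)'''
gpus = tf.config.list_physical_devices("GPU")

if gpus:
    gpu0 = gpus[0]  # if there are several GPUs, use only GPU 0
    tf.config.experimental.set_memory_growth(gpu0, True)  # allocate GPU memory on demand
    tf.config.set_visible_devices([gpu0], "GPU")

My computer has no discrete GPU, so this step was commented out and not run.

3. Import the data

'''Preparation: import the data'''
data_dir = r"D:\DeepLearning\data\HollywoodStars"  # local dataset path; change it to where your data is stored
data_dir = Path(data_dir)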

4. Inspect the data

'''Preparation: inspect the data'''
image_count = len(list(data_dir.glob('*/*.jpg')))
print("Total number of images:", image_count)

jennifer_images = list(data_dir.glob('Jennifer Lawrence/*.jpg'))
image = Image.open(str(jennifer_images[1]))
# Inspect the attributes of the image instance
print(image.format, image.size, image.mode)
plt.imshow(image)
plt.show()

Output:

Total number of images: 1800

JPEG (474, 569) RGB

5. Load the data

'''Preprocessing: load the data'''
batch_size = 32
img_height = 224
img_width = 224

"""
For a detailed introduction to image_dataset_from_directory(), see: https://mtyjkh.blog.csdn.net/article/details/117018789
"""
# label_mode="categorical" yields one-hot labels, matching the CategoricalCrossentropy loss used later
train_ds = tf.keras.preprocessing.image_dataset_from_directory(
    data_dir,
    validation_split=0.1,
    subset="training",
    label_mode="categorical",
    seed=123,  # use the same seed in both calls so the two subsets do not overlap
    image_size=(img_height, img_width),
    batch_size=batch_size)

val_ds = tf.keras.preprocessing.image_dataset_from_directory(
    data_dir,
    validation_split=0.1,
    subset="validation",
    label_mode="categorical",
    seed=123,
    image_size=(img_height, img_width),
    batch_size=batch_size)

class_names = train_ds.class_names
print(class_names)

Output:

Found 1800 files belonging to 17 classes.

Using 1620 files for training.

Found 1800 files belonging to 17 classes.

Using 180 files for validation.

['Angelina Jolie', 'Brad Pitt', 'Denzel Washington', 'Hugh Jackman', 'Jennifer Lawrence', 'Johnny Depp', 'Kate Winslet', 'Leonardo DiCaprio', 'Megan Fox', 'Natalie Portman', 'Nicole Kidman', 'Robert Downey Jr', 'Sandra Bullock', 'Scarlett Johansson', 'Tom Cruise', 'Tom Hanks', 'Will Smith']
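The file counts above, and the `51/51` steps per epoch in the training log further down, follow from simple arithmetic. The sketch below assumes the split holds out `int(validation_split * total)` files for validation and keeps the rest for training, which matches the printed numbers. The helper name `split_sizes` is hypothetical.

```python
import math

def split_sizes(total_files, validation_split, batch_size):
    """Return (train_files, val_files, train_steps_per_epoch)."""
    val_files = int(validation_split * total_files)  # files held out for validation
    train_files = total_files - val_files            # remaining files are used for training
    steps = math.ceil(train_files / batch_size)      # the last batch may be partial
    return train_files, val_files, steps

train_files, val_files, steps = split_sizes(1800, 0.1, 32)
print(train_files, val_files, steps)  # 1620 180 51
```

With 51 steps per epoch, the learning-rate schedule's `decay_steps=60` used later corresponds to a little more than one epoch.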

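6. Visualize the data

'''Preprocessing: visualize the data'''
plt.figure(figsize=(25, 20))

for images, labels in train_ds.take(1):  # one batch of 32 images is enough for 20 subplots
    for i in range(20):
        ax = plt.subplot(5, 4, i + 1)
        plt.imshow(images[i].numpy().astype("uint8"))
        plt.title(class_names[np.argmax(labels[i])], fontsize=40)  # labels are one-hot, so argmax gives the class index
        plt.axis("off")

# Show the images
plt.show()

7. Check the data again

'''Preprocessing: check the data again'''
# image_batch is a tensor of shape (32, 224, 224, 3): a batch of 32 images of size 224x224x3 (the last dimension is the RGB color channels).
# labels_batch is a tensor of shape (32, 17): the one-hot labels of these 32 images.
for image_batch, labels_batch in train_ds:
    print(image_batch.shape)
    print(labels_batch.shape)
    break

Output: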

(32, 224, 224, 3)

(32, 17)

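8. Configure the dataset

'''Preprocessing: configure the dataset'''
AUTOTUNE = tf.data.AUTOTUNE

# shuffle(): shuffle the data; for details see https://zhuanlan.zhihu.com/p/42417456
# prefetch(): prepare the next batches while the current one is being processed, to speed things up
# cache(): cache the dataset in memory after the first epoch, to speed things up
train_ds = train_ds.cache().shuffle(1000).prefetch(buffer_size=AUTOTUNE)
val_ds = val_ds.cache().prefetch(buffer_size=AUTOTUNE)

My computer has no GPU, and on this CPU-only machine these settings gave no noticeable speedup, probably because data loading and training compete for the same CPU.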

9. Build the CNN model

'''Build the CNN'''
"""
If the convolution output-size calculation is unclear, see: https://blog.csdn.net/qq_38251616/article/details/114278995
layers.Dropout(0.5) helps prevent overfitting and improves the model's ability to generalize.
For more on Dropout layers, see: https://mtyjkh.blog.csdn.net/article/details/115826689
"""
model = models.Sequential([
    layers.experimental.preprocessing.Rescaling(1./255, input_shape=(img_height, img_width, 3)),  # scale pixels to [0, 1]
    layers.Conv2D(16, (3, 3), activation='relu'),   # conv layer 1, 3x3 kernel
    layers.AveragePooling2D((2, 2)),                # pooling layer 1, 2x2 downsampling
    layers.Conv2D(32, (3, 3), activation='relu'),   # conv layer 2, 3x3 kernel
    layers.AveragePooling2D((2, 2)),                # pooling layer 2, 2x2 downsampling
    layers.Dropout(0.5),
    layers.Conv2D(64, (3, 3), activation='relu'),   # conv layer 3, 3x3 kernel
    layers.AveragePooling2D((2, 2)),                # pooling layer 3, 2x2 downsampling
    layers.Dropout(0.5),
    layers.Conv2D(128, (3, 3), activation='relu'),  # conv layer 4, 3x3 kernel
    layers.Dropout(0.5),
    layers.Flatten(),                               # Flatten layer, connects the conv layers to the dense layers
    layers.Dense(128, activation='relu'),           # dense layer for further feature extraction
    layers.Dense(len(class_names))                  # output layer: raw logits, hence from_logits=True when compiling
])

model.summary()  # print the network structure

The network structure is as follows:

_________________________________________________________________
 Layer (type)                          Output Shape            Param #
=================================================================
 rescaling (Rescaling)                 (None, 224, 224, 3)     0
 conv2d (Conv2D)                       (None, 222, 222, 16)    448
 average_pooling2d (AveragePooling2D)  (None, 111, 111, 16)    0
 conv2d_1 (Conv2D)                     (None, 109, 109, 32)    4640
 average_pooling2d_1 (AveragePooling2D) (None, 54, 54, 32)     0
 dropout (Dropout)                     (None, 54, 54, 32)      0
 conv2d_2 (Conv2D)                     (None, 52, 52, 64)      18496
 average_pooling2d_2 (AveragePooling2D) (None, 26, 26, 64)     0
 dropout_1 (Dropout)                   (None, 26, 26, 64)      0
 conv2d_3 (Conv2D)                     (None, 24, 24, 128)     73856
 dropout_2 (Dropout)                   (None, 24, 24, 128)     0
 flatten (Flatten)                     (None, 73728)           0
 dense (Dense)                         (None, 128)             9437312
 dense_1 (Dense)                       (None, 17)              2193
=================================================================
Total params: 9,536,945
Trainable params: 9,536,945
Non-trainable params: 0
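The shapes and parameter counts in this summary can be checked by hand: a 3x3 convolution without padding shrinks each spatial side by 2, a 2x2 pooling halves it (rounding down), a Conv2D layer has (k·k·C_in + 1)·C_out parameters and a Dense layer (n_in + 1)·n_out. The helper functions below are a hypothetical sketch that reproduces the table; Rescaling, pooling and Dropout layers contribute no parameters.

```python
def conv_params(k, c_in, c_out):
    # k*k*c_in weights per filter plus one bias, for each of the c_out filters
    return (k * k * c_in + 1) * c_out

def dense_params(n_in, n_out):
    # one weight per input plus one bias, for each output unit
    return (n_in + 1) * n_out

size, channels, total = 224, 3, 0
# (filters, followed_by_pooling) for the four Conv2D layers of the model above
for filters, pooled in [(16, True), (32, True), (64, True), (128, False)]:
    total += conv_params(3, channels, filters)
    size -= 2              # 3x3 kernel, 'valid' padding
    if pooled:
        size //= 2         # 2x2 average pooling, stride 2
    channels = filters

flat = size * size * channels  # length of the Flatten output
total += dense_params(flat, 128) + dense_params(128, 17)
print(size, flat, total)  # 24 73728 9536945
```

Almost all of the 9.5M parameters sit in the first Dense layer (73728 × 128 weights), which is one reason this small network overfits easily.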

10. Compile the model

# Initial learning rate
initial_learning_rate = 1e-4

lr_schedule = tf.keras.optimizers.schedules.ExponentialDecay(
    initial_learning_rate,
    decay_steps=60,    # Note: this counts steps (batches), not epochs
    decay_rate=0.96,   # after each decay, lr becomes decay_rate * lr
    staircase=True)    # decay in discrete jumps every decay_steps steps

# Feed the exponentially decaying learning rate into the optimizer
optimizer = tf.keras.optimizers.Adam(learning_rate=lr_schedule)

model.compile(optimizer=optimizer,
              loss=tf.keras.losses.CategoricalCrossentropy(from_logits=True),  # the model outputs logits
              metrics=['accuracy'])
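With `staircase=True`, `ExponentialDecay` computes `initial_learning_rate * decay_rate ** floor(step / decay_steps)`. The pure-Python function below mirrors that formula for illustration only (it is not the TensorFlow implementation), assuming 51 optimizer steps per epoch as in this post's training log.

```python
import math

def staircase_lr(step, initial_lr=1e-4, decay_steps=60, decay_rate=0.96):
    # floor(step / decay_steps) counts how many full decay periods have elapsed
    return initial_lr * decay_rate ** math.floor(step / decay_steps)

steps_per_epoch = 51
for epoch in (1, 2, 10, 62):
    step = epoch * steps_per_epoch  # optimizer steps completed by the end of this epoch
    print(epoch, step, f"{staircase_lr(step):.3e}")
```

Because `decay_steps` is counted in batches, the same value decays faster in wall-clock epochs when the batch size is smaller (more steps per epoch).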

11. Train the model

'''Train the model'''
epochs = 100

# Save the best model weights (judged by val_accuracy)
checkpointer = ModelCheckpoint('best_model.h5',
                               monitor='val_accuracy',
                               verbose=1,
                               save_best_only=True,
                               save_weights_only=True)

# Early stopping: stop if val_accuracy has not improved by more than min_delta for 20 epochs
earlystopper = EarlyStopping(monitor='val_accuracy',
                             min_delta=0.001,
                             patience=20,
                             verbose=1)

history = model.fit(train_ds,
                    validation_data=val_ds,
                    epochs=epochs,
                    callbacks=[checkpointer, earlystopper])
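The two callbacks work together: ModelCheckpoint keeps the weights from the best val_accuracy epoch, while EarlyStopping ends training once val_accuracy has not improved by more than `min_delta` for `patience` consecutive epochs. The simplified, hypothetical re-implementation below (mode "max", no baseline, not the Keras source) replays the val_accuracy values from the training log below, rounded to four decimals, and predicts the stop at epoch 62 with the best epoch at 42.

```python
def early_stopping(values, patience=20, min_delta=0.001):
    """Simplified mode='max' early stopping; returns (stop_epoch, best_epoch, best)."""
    best, best_epoch, wait = float("-inf"), None, 0
    for epoch, value in enumerate(values, start=1):
        wait += 1
        if value - min_delta > best:  # an improvement must beat the best by more than min_delta
            best, best_epoch, wait = value, epoch, 0
        if wait >= patience:
            return epoch, best_epoch, best
    return None, best_epoch, best  # patience never ran out

# val_accuracy for epochs 1-62, copied (rounded) from the training log
val_acc = [
    0.1389, 0.1444, 0.1278, 0.1278, 0.1833, 0.1889, 0.1944, 0.2111, 0.2722, 0.2389,
    0.2722, 0.2556, 0.2556, 0.2722, 0.2556, 0.2833, 0.2833, 0.2556, 0.2556, 0.2944,
    0.3167, 0.2944, 0.3167, 0.3167, 0.2944, 0.3222, 0.2778, 0.3389, 0.3222, 0.3500,
    0.3167, 0.3278, 0.3333, 0.3444, 0.3444, 0.3444, 0.3389, 0.3222, 0.3500, 0.3611,
    0.3556, 0.3833, 0.3444, 0.3444, 0.3444, 0.3722, 0.3556, 0.3556, 0.3556, 0.3444,
    0.3500, 0.3500, 0.3556, 0.3444, 0.3667, 0.3611, 0.3500, 0.3444, 0.3444, 0.3500,
    0.3667, 0.3667,
]
print(early_stopping(val_acc))  # (62, 42, 0.3833)
```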

Training log:

Epoch 1/100

51/51 [==============================] - ETA: 0s - loss: 2.8055 - accuracy: 0.1049

Epoch 1: val_accuracy improved from -inf to 0.13889, saving model to best_model.h5

51/51 [==============================] - 15s 278ms/step - loss: 2.8055 - accuracy: 0.1049 - val_loss: 2.7571 - val_accuracy: 0.1389

Epoch 2/100

51/51 [==============================] - ETA: 0s - loss: 2.7434 - accuracy: 0.1130

Epoch 2: val_accuracy improved from 0.13889 to 0.14444, saving model to best_model.h5

51/51 [==============================] - 15s 292ms/step - loss: 2.7434 - accuracy: 0.1130 - val_loss: 2.6944 - val_accuracy: 0.1444

Epoch 3/100

51/51 [==============================] - ETA: 0s - loss: 2.6159 - accuracy: 0.1549

Epoch 3: val_accuracy did not improve from 0.14444

51/51 [==============================] - 15s 285ms/step - loss: 2.6159 - accuracy: 0.1549 - val_loss: 2.6247 - val_accuracy: 0.1278

Epoch 4/100

51/51 [==============================] - ETA: 0s - loss: 2.4967 - accuracy: 0.1932

Epoch 4: val_accuracy did not improve from 0.14444

51/51 [==============================] - 15s 300ms/step - loss: 2.4967 - accuracy: 0.1932 - val_loss: 2.6000 - val_accuracy: 0.1278

Epoch 5/100

51/51 [==============================] - ETA: 0s - loss: 2.4010 - accuracy: 0.2105

Epoch 5: val_accuracy improved from 0.14444 to 0.18333, saving model to best_model.h5

51/51 [==============================] - 17s 328ms/step - loss: 2.4010 - accuracy: 0.2105 - val_loss: 2.5465 - val_accuracy: 0.1833

Epoch 6/100

51/51 [==============================] - ETA: 0s - loss: 2.2831 - accuracy: 0.2500

Epoch 6: val_accuracy improved from 0.18333 to 0.18889, saving model to best_model.h5

51/51 [==============================] - 18s 356ms/step - loss: 2.2831 - accuracy: 0.2500 - val_loss: 2.4655 - val_accuracy: 0.1889

Epoch 7/100

51/51 [==============================] - ETA: 0s - loss: 2.2410 - accuracy: 0.2685

Epoch 7: val_accuracy improved from 0.18889 to 0.19444, saving model to best_model.h5

51/51 [==============================] - 18s 360ms/step - loss: 2.2410 - accuracy: 0.2685 - val_loss: 2.5303 - val_accuracy: 0.1944

Epoch 8/100

51/51 [==============================] - ETA: 0s - loss: 2.1549 - accuracy: 0.2920

Epoch 8: val_accuracy improved from 0.19444 to 0.21111, saving model to best_model.h5

51/51 [==============================] - 19s 381ms/step - loss: 2.1549 - accuracy: 0.2920 - val_loss: 2.5058 - val_accuracy: 0.2111

Epoch 9/100

51/51 [==============================] - ETA: 0s - loss: 2.0604 - accuracy: 0.3204

Epoch 9: val_accuracy improved from 0.21111 to 0.27222, saving model to best_model.h5

51/51 [==============================] - 19s 379ms/step - loss: 2.0604 - accuracy: 0.3204 - val_loss: 2.4747 - val_accuracy: 0.2722

Epoch 10/100

51/51 [==============================] - ETA: 0s - loss: 1.9779 - accuracy: 0.3500

Epoch 10: val_accuracy did not improve from 0.27222

51/51 [==============================] - 20s 385ms/step - loss: 1.9779 - accuracy: 0.3500 - val_loss: 2.4349 - val_accuracy: 0.2389

Epoch 11/100

51/51 [==============================] - ETA: 0s - loss: 1.8998 - accuracy: 0.3778

Epoch 11: val_accuracy did not improve from 0.27222

51/51 [==============================] - 20s 387ms/step - loss: 1.8998 - accuracy: 0.3778 - val_loss: 2.4262 - val_accuracy: 0.2722

Epoch 12/100

51/51 [==============================] - ETA: 0s - loss: 1.8296 - accuracy: 0.4012

Epoch 12: val_accuracy did not improve from 0.27222

51/51 [==============================] - 20s 396ms/step - loss: 1.8296 - accuracy: 0.4012 - val_loss: 2.3290 - val_accuracy: 0.2556

Epoch 13/100

51/51 [==============================] - ETA: 0s - loss: 1.7464 - accuracy: 0.4395

Epoch 13: val_accuracy did not improve from 0.27222

51/51 [==============================] - 21s 402ms/step - loss: 1.7464 - accuracy: 0.4395 - val_loss: 2.3813 - val_accuracy: 0.2556

Epoch 14/100

51/51 [==============================] - ETA: 0s - loss: 1.6562 - accuracy: 0.4741

Epoch 14: val_accuracy did not improve from 0.27222

51/51 [==============================] - 21s 413ms/step - loss: 1.6562 - accuracy: 0.4741 - val_loss: 2.3683 - val_accuracy: 0.2722

Epoch 15/100

51/51 [==============================] - ETA: 0s - loss: 1.5793 - accuracy: 0.5074

Epoch 15: val_accuracy did not improve from 0.27222

51/51 [==============================] - 23s 445ms/step - loss: 1.5793 - accuracy: 0.5074 - val_loss: 2.4050 - val_accuracy: 0.2556

Epoch 16/100

51/51 [==============================] - ETA: 0s - loss: 1.4940 - accuracy: 0.5228

Epoch 16: val_accuracy improved from 0.27222 to 0.28333, saving model to best_model.h5

51/51 [==============================] - 23s 441ms/step - loss: 1.4940 - accuracy: 0.5228 - val_loss: 2.4447 - val_accuracy: 0.2833

Epoch 17/100

51/51 [==============================] - ETA: 0s - loss: 1.4428 - accuracy: 0.5235

Epoch 17: val_accuracy did not improve from 0.28333

51/51 [==============================] - 22s 427ms/step - loss: 1.4428 - accuracy: 0.5235 - val_loss: 2.4107 - val_accuracy: 0.2833

Epoch 18/100

51/51 [==============================] - ETA: 0s - loss: 1.3510 - accuracy: 0.5698

Epoch 18: val_accuracy did not improve from 0.28333

51/51 [==============================] - 22s 441ms/step - loss: 1.3510 - accuracy: 0.5698 - val_loss: 2.4776 - val_accuracy: 0.2556

Epoch 19/100

51/51 [==============================] - ETA: 0s - loss: 1.2422 - accuracy: 0.6167

Epoch 19: val_accuracy did not improve from 0.28333

51/51 [==============================] - 22s 437ms/step - loss: 1.2422 - accuracy: 0.6167 - val_loss: 2.4072 - val_accuracy: 0.2556

Epoch 20/100

51/51 [==============================] - ETA: 0s - loss: 1.1828 - accuracy: 0.6265

Epoch 20: val_accuracy improved from 0.28333 to 0.29444, saving model to best_model.h5

51/51 [==============================] - 22s 432ms/step - loss: 1.1828 - accuracy: 0.6265 - val_loss: 2.6686 - val_accuracy: 0.2944

Epoch 21/100

51/51 [==============================] - ETA: 0s - loss: 1.1126 - accuracy: 0.6444

Epoch 21: val_accuracy improved from 0.29444 to 0.31667, saving model to best_model.h5

51/51 [==============================] - 22s 423ms/step - loss: 1.1126 - accuracy: 0.6444 - val_loss: 2.5157 - val_accuracy: 0.3167

Epoch 22/100

51/51 [==============================] - ETA: 0s - loss: 1.0235 - accuracy: 0.6617

Epoch 22: val_accuracy did not improve from 0.31667

51/51 [==============================] - 22s 433ms/step - loss: 1.0235 - accuracy: 0.6617 - val_loss: 2.6514 - val_accuracy: 0.2944

Epoch 23/100

51/51 [==============================] - ETA: 0s - loss: 0.9598 - accuracy: 0.6963

Epoch 23: val_accuracy did not improve from 0.31667

51/51 [==============================] - 21s 421ms/step - loss: 0.9598 - accuracy: 0.6963 - val_loss: 2.5537 - val_accuracy: 0.3167

Epoch 24/100

51/51 [==============================] - ETA: 0s - loss: 0.8817 - accuracy: 0.7228

Epoch 24: val_accuracy did not improve from 0.31667

51/51 [==============================] - 21s 417ms/step - loss: 0.8817 - accuracy: 0.7228 - val_loss: 2.5943 - val_accuracy: 0.3167

Epoch 25/100

51/51 [==============================] - ETA: 0s - loss: 0.8433 - accuracy: 0.7426

Epoch 25: val_accuracy did not improve from 0.31667

51/51 [==============================] - 21s 421ms/step - loss: 0.8433 - accuracy: 0.7426 - val_loss: 2.7184 - val_accuracy: 0.2944

Epoch 26/100

51/51 [==============================] - ETA: 0s - loss: 0.7776 - accuracy: 0.7469

Epoch 26: val_accuracy improved from 0.31667 to 0.32222, saving model to best_model.h5

51/51 [==============================] - 21s 419ms/step - loss: 0.7776 - accuracy: 0.7469 - val_loss: 2.6321 - val_accuracy: 0.3222

Epoch 27/100

51/51 [==============================] - ETA: 0s - loss: 0.7214 - accuracy: 0.7815

Epoch 27: val_accuracy did not improve from 0.32222

51/51 [==============================] - 22s 428ms/step - loss: 0.7214 - accuracy: 0.7815 - val_loss: 2.6677 - val_accuracy: 0.2778

Epoch 28/100

51/51 [==============================] - ETA: 0s - loss: 0.6630 - accuracy: 0.7833

Epoch 28: val_accuracy improved from 0.32222 to 0.33889, saving model to best_model.h5

51/51 [==============================] - 21s 416ms/step - loss: 0.6630 - accuracy: 0.7833 - val_loss: 2.6997 - val_accuracy: 0.3389

Epoch 29/100

51/51 [==============================] - ETA: 0s - loss: 0.6287 - accuracy: 0.7938

Epoch 29: val_accuracy did not improve from 0.33889

51/51 [==============================] - 21s 413ms/step - loss: 0.6287 - accuracy: 0.7938 - val_loss: 2.7157 - val_accuracy: 0.3222

Epoch 30/100

51/51 [==============================] - ETA: 0s - loss: 0.5783 - accuracy: 0.8198

Epoch 30: val_accuracy improved from 0.33889 to 0.35000, saving model to best_model.h5

51/51 [==============================] - 21s 414ms/step - loss: 0.5783 - accuracy: 0.8198 - val_loss: 2.7749 - val_accuracy: 0.3500

Epoch 31/100

51/51 [==============================] - ETA: 0s - loss: 0.5210 - accuracy: 0.8414

Epoch 31: val_accuracy did not improve from 0.35000

51/51 [==============================] - 22s 423ms/step - loss: 0.5210 - accuracy: 0.8414 - val_loss: 2.8593 - val_accuracy: 0.3167

Epoch 32/100

51/51 [==============================] - ETA: 0s - loss: 0.4940 - accuracy: 0.8580

Epoch 32: val_accuracy did not improve from 0.35000

51/51 [==============================] - 21s 411ms/step - loss: 0.4940 - accuracy: 0.8580 - val_loss: 2.8407 - val_accuracy: 0.3278

Epoch 33/100

51/51 [==============================] - ETA: 0s - loss: 0.4592 - accuracy: 0.8593

Epoch 33: val_accuracy did not improve from 0.35000

51/51 [==============================] - 21s 410ms/step - loss: 0.4592 - accuracy: 0.8593 - val_loss: 2.7847 - val_accuracy: 0.3333

Epoch 34/100

51/51 [==============================] - ETA: 0s - loss: 0.4403 - accuracy: 0.8642

Epoch 34: val_accuracy did not improve from 0.35000

51/51 [==============================] - 21s 412ms/step - loss: 0.4403 - accuracy: 0.8642 - val_loss: 2.9447 - val_accuracy: 0.3444

Epoch 35/100

51/51 [==============================] - ETA: 0s - loss: 0.4224 - accuracy: 0.8710

Epoch 35: val_accuracy did not improve from 0.35000

51/51 [==============================] - 21s 408ms/step - loss: 0.4224 - accuracy: 0.8710 - val_loss: 2.9034 - val_accuracy: 0.3444

Epoch 36/100

51/51 [==============================] - ETA: 0s - loss: 0.3879 - accuracy: 0.8827

Epoch 36: val_accuracy did not improve from 0.35000

51/51 [==============================] - 22s 424ms/step - loss: 0.3879 - accuracy: 0.8827 - val_loss: 3.0820 - val_accuracy: 0.3444

Epoch 37/100

51/51 [==============================] - ETA: 0s - loss: 0.3686 - accuracy: 0.8914

Epoch 37: val_accuracy did not improve from 0.35000

51/51 [==============================] - 21s 409ms/step - loss: 0.3686 - accuracy: 0.8914 - val_loss: 3.0240 - val_accuracy: 0.3389

Epoch 38/100

51/51 [==============================] - ETA: 0s - loss: 0.3370 - accuracy: 0.8957

Epoch 38: val_accuracy did not improve from 0.35000

51/51 [==============================] - 21s 416ms/step - loss: 0.3370 - accuracy: 0.8957 - val_loss: 2.9707 - val_accuracy: 0.3222

Epoch 39/100

51/51 [==============================] - ETA: 0s - loss: 0.3051 - accuracy: 0.9198

Epoch 39: val_accuracy did not improve from 0.35000

51/51 [==============================] - 21s 411ms/step - loss: 0.3051 - accuracy: 0.9198 - val_loss: 3.0453 - val_accuracy: 0.3500

Epoch 40/100

51/51 [==============================] - ETA: 0s - loss: 0.2952 - accuracy: 0.9148

Epoch 40: val_accuracy improved from 0.35000 to 0.36111, saving model to best_model.h5

51/51 [==============================] - 21s 412ms/step - loss: 0.2952 - accuracy: 0.9148 - val_loss: 3.1188 - val_accuracy: 0.3611

Epoch 41/100

51/51 [==============================] - ETA: 0s - loss: 0.2839 - accuracy: 0.9204

Epoch 41: val_accuracy did not improve from 0.36111

51/51 [==============================] - 21s 409ms/step - loss: 0.2839 - accuracy: 0.9204 - val_loss: 3.0978 - val_accuracy: 0.3556

Epoch 42/100

51/51 [==============================] - ETA: 0s - loss: 0.2774 - accuracy: 0.9167

Epoch 42: val_accuracy improved from 0.36111 to 0.38333, saving model to best_model.h5

51/51 [==============================] - 21s 419ms/step - loss: 0.2774 - accuracy: 0.9167 - val_loss: 3.1818 - val_accuracy: 0.3833

Epoch 43/100

51/51 [==============================] - ETA: 0s - loss: 0.2527 - accuracy: 0.9284

Epoch 43: val_accuracy did not improve from 0.38333

51/51 [==============================] - 21s 412ms/step - loss: 0.2527 - accuracy: 0.9284 - val_loss: 3.2479 - val_accuracy: 0.3444

Epoch 44/100

51/51 [==============================] - ETA: 0s - loss: 0.2352 - accuracy: 0.9377

Epoch 44: val_accuracy did not improve from 0.38333

51/51 [==============================] - 21s 408ms/step - loss: 0.2352 - accuracy: 0.9377 - val_loss: 3.2737 - val_accuracy: 0.3444

Epoch 45/100

51/51 [==============================] - ETA: 0s - loss: 0.2081 - accuracy: 0.9457

Epoch 45: val_accuracy did not improve from 0.38333

51/51 [==============================] - 21s 410ms/step - loss: 0.2081 - accuracy: 0.9457 - val_loss: 3.2509 - val_accuracy: 0.3444

Epoch 46/100

51/51 [==============================] - ETA: 0s - loss: 0.2135 - accuracy: 0.9420

Epoch 46: val_accuracy did not improve from 0.38333

51/51 [==============================] - 21s 412ms/step - loss: 0.2135 - accuracy: 0.9420 - val_loss: 3.1913 - val_accuracy: 0.3722

Epoch 47/100

51/51 [==============================] - ETA: 0s - loss: 0.2210 - accuracy: 0.9395

Epoch 47: val_accuracy did not improve from 0.38333

51/51 [==============================] - 21s 409ms/step - loss: 0.2210 - accuracy: 0.9395 - val_loss: 3.3672 - val_accuracy: 0.3556

Epoch 48/100

51/51 [==============================] - ETA: 0s - loss: 0.1904 - accuracy: 0.9481

Epoch 48: val_accuracy did not improve from 0.38333

51/51 [==============================] - 21s 410ms/step - loss: 0.1904 - accuracy: 0.9481 - val_loss: 3.3153 - val_accuracy: 0.3556

Epoch 49/100

51/51 [==============================] - ETA: 0s - loss: 0.1923 - accuracy: 0.9469

Epoch 49: val_accuracy did not improve from 0.38333

51/51 [==============================] - 21s 409ms/step - loss: 0.1923 - accuracy: 0.9469 - val_loss: 3.3524 - val_accuracy: 0.3556

Epoch 50/100

51/51 [==============================] - ETA: 0s - loss: 0.1917 - accuracy: 0.9414

Epoch 50: val_accuracy did not improve from 0.38333

51/51 [==============================] - 22s 427ms/step - loss: 0.1917 - accuracy: 0.9414 - val_loss: 3.4341 - val_accuracy: 0.3444

Epoch 51/100

51/51 [==============================] - ETA: 0s - loss: 0.1630 - accuracy: 0.9580

Epoch 51: val_accuracy did not improve from 0.38333

51/51 [==============================] - 22s 439ms/step - loss: 0.1630 - accuracy: 0.9580 - val_loss: 3.5033 - val_accuracy: 0.3500

Epoch 52/100

51/51 [==============================] - ETA: 0s - loss: 0.1771 - accuracy: 0.9444

Epoch 52: val_accuracy did not improve from 0.38333

51/51 [==============================] - 22s 427ms/step - loss: 0.1771 - accuracy: 0.9444 - val_loss: 3.4804 - val_accuracy: 0.3500

Epoch 53/100

51/51 [==============================] - ETA: 0s - loss: 0.1714 - accuracy: 0.9494

Epoch 53: val_accuracy did not improve from 0.38333

51/51 [==============================] - 22s 423ms/step - loss: 0.1714 - accuracy: 0.9494 - val_loss: 3.5310 - val_accuracy: 0.3556

Epoch 54/100

51/51 [==============================] - ETA: 0s - loss: 0.1618 - accuracy: 0.9543

Epoch 54: val_accuracy did not improve from 0.38333

51/51 [==============================] - 21s 416ms/step - loss: 0.1618 - accuracy: 0.9543 - val_loss: 3.6081 - val_accuracy: 0.3444

Epoch 55/100

51/51 [==============================] - ETA: 0s - loss: 0.1656 - accuracy: 0.9580

Epoch 55: val_accuracy did not improve from 0.38333

51/51 [==============================] - 21s 416ms/step - loss: 0.1656 - accuracy: 0.9580 - val_loss: 3.5088 - val_accuracy: 0.3667

Epoch 56/100

51/51 [==============================] - ETA: 0s - loss: 0.1432 - accuracy: 0.9673

Epoch 56: val_accuracy did not improve from 0.38333

51/51 [==============================] - 22s 422ms/step - loss: 0.1432 - accuracy: 0.9673 - val_loss: 3.6391 - val_accuracy: 0.3611

Epoch 57/100

51/51 [==============================] - ETA: 0s - loss: 0.1355 - accuracy: 0.9660

Epoch 57: val_accuracy did not improve from 0.38333

51/51 [==============================] - 26s 513ms/step - loss: 0.1355 - accuracy: 0.9660 - val_loss: 3.5614 - val_accuracy: 0.3500

Epoch 58/100

51/51 [==============================] - ETA: 0s - loss: 0.1526 - accuracy: 0.9605

Epoch 58: val_accuracy did not improve from 0.38333

51/51 [==============================] - 20s 390ms/step - loss: 0.1526 - accuracy: 0.9605 - val_loss: 3.6141 - val_accuracy: 0.3444

Epoch 59/100

51/51 [==============================] - ETA: 0s - loss: 0.1412 - accuracy: 0.9593

Epoch 59: val_accuracy did not improve from 0.38333

51/51 [==============================] - 16s 322ms/step - loss: 0.1412 - accuracy: 0.9593 - val_loss: 3.7125 - val_accuracy: 0.3444

Epoch 60/100

51/51 [==============================] - ETA: 0s - loss: 0.1274 - accuracy: 0.9679

Epoch 60: val_accuracy did not improve from 0.38333

51/51 [==============================] - 17s 333ms/step - loss: 0.1274 - accuracy: 0.9679 - val_loss: 3.6794 - val_accuracy: 0.3500

Epoch 61/100

51/51 [==============================] - ETA: 0s - loss: 0.1354 - accuracy: 0.9611

Epoch 61: val_accuracy did not improve from 0.38333

51/51 [==============================] - 17s 338ms/step - loss: 0.1354 - accuracy: 0.9611 - val_loss: 3.6591 - val_accuracy: 0.3667

Epoch 62/100

51/51 [==============================] - ETA: 0s - loss: 0.1257 - accuracy: 0.9691

Epoch 62: val_accuracy did not improve from 0.38333

51/51 [==============================] - 18s 346ms/step - loss: 0.1257 - accuracy: 0.9691 - val_loss: 3.6611 - val_accuracy: 0.3667

Epoch 62: early stopping

12.模型评估

'''模型评估'''

acc = history.history['accuracy']

val_acc = history.history['val_accuracy']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs_range = range(epochs)

plt.figure(figsize=(12, 4))

plt.subplot(1, 2, 1)

plt.plot(epochs_range, acc, label='Training Accuracy')

plt.plot(epochs_range, val_acc, label='Validation Accuracy')

plt.legend(loc='lower right')

plt.title('Training and Validation Accuracy')

plt.subplot(1, 2, 2)

plt.plot(epochs_range, loss, label='Training Loss')

plt.plot(epochs_range, val_loss, label='Validation Loss')

plt.legend(loc='upper right')

plt.title('Training and Validation Loss')

plt.show()

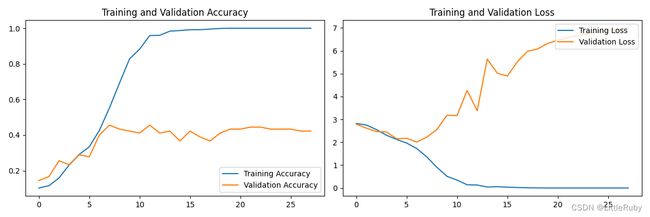

执行效果最好的参数为loss: 0.2774 - accuracy: 0.9167 - val_loss: 3.1818 - val_accuracy: 0.3833

acc 与 loss图

13. Predict on a specified image

'''Predict on a specified image'''
# Load the best model weights
model.load_weights('best_model.h5')

img = Image.open(r"D:\DeepLearning\data\HollywoodStars\Angelina Jolie\008_d1f87068.jpg")  # choose the image you want to predict
print("type(img):", type(img), "img.size:", img.size)

# Preprocess the data
img_array0 = np.asarray(img)  # tf.image.resize needs a 3-D or 4-D array, so convert the PIL image to a NumPy array of shape (H, W, 3)
print("img_array0.shape:", img_array0.shape)

image = tf.image.resize(img_array0, [img_height, img_width])  # resize to the model's input size
image1 = tf.keras.utils.array_to_img(image)
print("image:", type(image), image.shape)
print("image1:", type(image1), image1.size)
plt.imshow(image1)
plt.show()

img_array = tf.expand_dims(image, 0)  # add a batch dimension; no /255 needed because the model contains a Rescaling layer
print("img_array:", type(img_array), img_array.shape)

predictions = model.predict(img_array)  # use the model you have already trained
print("Prediction:", class_names[np.argmax(predictions)])

Output:

type(img): img.size: (474, 474)

img_array0.shape: (474, 474, 3)

image: (224, 224, 3)

image1: (224, 224)

img_array: (1, 224, 224, 3)

1/1 [==============================] - 0s 90ms/step

Prediction: Angelina Jolie
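Because the last Dense layer has no activation, `model.predict` returns raw logits rather than probabilities. `np.argmax` picks the same class either way, since softmax preserves the ranking; to report a confidence, apply softmax first (in TensorFlow, `tf.nn.softmax(predictions)` does this). A NumPy sketch with made-up logits for three hypothetical classes:

```python
import numpy as np

def softmax(logits):
    # subtract the row maximum for numerical stability; this does not change the result
    z = logits - np.max(logits, axis=-1, keepdims=True)
    e = np.exp(z)
    return e / np.sum(e, axis=-1, keepdims=True)

logits = np.array([[2.0, 0.5, -1.0]])  # hypothetical model output for one image
probs = softmax(logits)
print(probs.round(3), probs.sum())
print(np.argmax(logits) == np.argmax(probs))  # True: softmax keeps the ranking
```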

III. Key Concepts Explained

1. Loss functions explained

1.1. binary_crossentropy (log loss)

The loss function paired with a sigmoid output, used for binary classification.
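For a label y in {0, 1} and a predicted probability p = sigmoid(z), binary cross-entropy is -[y·log(p) + (1 - y)·log(1 - p)], averaged over the samples. A minimal NumPy sketch; the clipping constant `eps` is an assumption used only to keep `log()` finite.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def binary_crossentropy(y_true, p, eps=1e-7):
    p = np.clip(p, eps, 1 - eps)  # keep log() finite
    return np.mean(-(y_true * np.log(p) + (1 - y_true) * np.log(1 - p)))

y = np.array([1.0, 0.0, 1.0])
z = np.array([0.0, 0.0, 0.0])              # logits of 0 mean p = 0.5 for every sample
print(binary_crossentropy(y, sigmoid(z)))  # log(2), about 0.6931
```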

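1.2. categorical_crossentropy (multi-class log loss)

The loss function paired with a softmax output; use categorical_crossentropy when the labels are one-hot encoded.

1) What is one-hot encoding

One-hot encoding (also called 1-of-N encoding) uses an N-bit state register to encode N states: each state has its own register bit, and at any moment exactly one bit is active. (Baidu Baike)

That sounds complicated, but an example makes it easy to understand. Suppose a color feature has three values: red, green and yellow. Their one-hot encodings (here N = 3) are 001, 010 and 100 respectively. (100, 010, 001 would work just as well, as long as the mapping is fixed and one-to-one.) Could we simply encode red, green and yellow as 1, 2 and 3 instead? This is generally not done, and it would no longer be one-hot encoding, merely converting text to numbers; the reason is explained next.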
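The color example can be reproduced in a few lines of NumPy. The category list and the helper name `one_hot` are assumptions for illustration; this sketch assigns index 0 to red, giving the alternative 100, 010, 001 ordering mentioned above. Passing `label_mode="categorical"` to `image_dataset_from_directory` produces labels of this form, which is why `labels_batch` had shape (32, 17).

```python
import numpy as np

colors = ["red", "green", "yellow"]                 # category list (order is arbitrary but fixed)
index = {name: i for i, name in enumerate(colors)}  # red -> 0, green -> 1, yellow -> 2

def one_hot(names, num_classes=len(colors)):
    # each row of the identity matrix is the one-hot vector for that index
    return np.eye(num_classes, dtype=int)[[index[n] for n in names]]

print(one_hot(["red", "green", "yellow"]))
# [[1 0 0]
#  [0 1 0]
#  [0 0 1]]
```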

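2) Why use one-hot encoding

Many machine learning algorithms perform classification or regression by computing distances or similarities between feature vectors, for example the Euclidean distance or the cosine similarity.

For the discrete color feature above, the 1, 2, 3 encoding is unsuitable because it imposes an order and unequal distances on the colors (is red < green < yellow?), although the colors actually have no such relation.

One-hot encoding solves this: any two different colors are equally far apart (their Euclidean distance is always √2), so computations between features become more reasonable.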
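The argument can be checked numerically with the encodings from the text (red 001, green 010, yellow 100): with integer codes 1, 2, 3, red and yellow end up twice as far apart as red and green, while with one-hot vectors every pair of distinct colors is exactly √2 apart. A small NumPy sketch:

```python
import itertools
import numpy as np

integer_codes = {"red": 1.0, "green": 2.0, "yellow": 3.0}
one_hot_codes = {"red": np.array([0, 0, 1]),
                 "green": np.array([0, 1, 0]),
                 "yellow": np.array([1, 0, 0])}

results = {}
for a, b in itertools.combinations(integer_codes, 2):
    d_int = abs(integer_codes[a] - integer_codes[b])             # distance on the number line
    d_hot = np.linalg.norm(one_hot_codes[a] - one_hot_codes[b])  # Euclidean distance
    results[(a, b)] = (d_int, d_hot)
    print(f"{a}-{b}: integer {d_int:.0f}, one-hot {d_hot:.4f}")
```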

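3) Pros and cons of one-hot encoding

- Pros: it provides a way to handle discrete features and, to some extent, expands the set of features.

- Cons: when a feature has many categories, the feature space becomes very large; in that case PCA is often used to reduce the dimensionality.

4) When one-hot encoding is and is not needed

- Use one-hot encoding when the values of a discrete feature have no inherent order or magnitude.

- When the values do have an order, such as clothing sizes [X, XL, XXL], one-hot encoding is generally not used; map them to numbers instead, e.g. {X: 1, XL: 2, XXL: 3}.

- If a feature is discrete and distances can be computed sensibly without one-hot encoding, there is no need to use it.

- Some algorithms are not based on vector-space distances; for them the values are merely category symbols with no partial order, so one-hot encoding is unnecessary.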

Usage 1:

model.compile(optimizer="adam",
              loss='categorical_crossentropy',
              metrics=['accuracy'])

Usage 2:

model.compile(optimizer="adam",
              loss=tf.keras.losses.CategoricalCrossentropy(),
              metrics=['accuracy'])
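For one-hot labels y and predicted probabilities p over C classes, categorical cross-entropy is -Σ_c y_c·log(p_c), so only the probability assigned to the true class contributes. A NumPy sketch with made-up numbers for three classes; the clipping constant is an assumption to avoid log(0).

```python
import numpy as np

def categorical_crossentropy(y_onehot, probs, eps=1e-7):
    probs = np.clip(probs, eps, 1.0)  # avoid log(0)
    return np.mean(-np.sum(y_onehot * np.log(probs), axis=-1))

y_true = np.array([[0, 1, 0],
                   [1, 0, 0]], dtype=float)  # one-hot labels
y_pred = np.array([[0.1, 0.8, 0.1],
                   [0.5, 0.3, 0.2]])         # softmax outputs (each row sums to 1)
loss = categorical_crossentropy(y_true, y_pred)
print(loss)  # mean of -log(0.8) and -log(0.5)
```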

1.3. sparse_categorical_crossentropy (sparse multi-class log loss)

The loss function paired with a softmax output; use sparse_categorical_crossentropy when the labels are integer-encoded.

Usage 1:

model.compile(optimizer="adam",
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

Usage 2:

model.compile(optimizer="adam",
              loss=tf.keras.losses.SparseCategoricalCrossentropy(),
              metrics=['accuracy'])
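The sparse variant computes the same quantity as categorical_crossentropy; the only difference is that each label is stored as an integer class index instead of a one-hot vector, so the loss simply picks -log(p[label]). In this post `label_mode="categorical"` produces one-hot labels, hence CategoricalCrossentropy; with the default `label_mode="int"` the sparse loss would be the matching choice. The NumPy sketch below (made-up numbers) checks that both label encodings give the same loss.

```python
import numpy as np

def sparse_categorical_crossentropy(labels, probs, eps=1e-7):
    probs = np.clip(probs, eps, 1.0)
    picked = probs[np.arange(len(labels)), labels]  # probability assigned to the true class
    return np.mean(-np.log(picked))

y_pred = np.array([[0.1, 0.8, 0.1],
                   [0.5, 0.3, 0.2]])
int_labels = np.array([1, 0])          # integer encoding
onehot_labels = np.eye(3)[int_labels]  # equivalent one-hot encoding

sparse_loss = sparse_categorical_crossentropy(int_labels, y_pred)
dense_loss = np.mean(-np.sum(onehot_labels * np.log(y_pred), axis=-1))
print(sparse_loss, dense_loss)  # the two values are equal
```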

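Function signature

tf.keras.losses.SparseCategoricalCrossentropy(
    from_logits=False,
    reduction=losses_utils.ReductionV2.AUTO,
    name='sparse_categorical_crossentropy'
)

Parameters:

- from_logits: whether y_pred contains raw logits. If True, the loss converts y_pred into probabilities (with softmax) internally; if False, y_pred is assumed to already be a probability distribution and is used as-is. Using True together with a model whose last layer has no activation is generally more numerically stable;

- reduction: of type tf.keras.losses.Reduction; how the per-sample losses are reduced; the default is AUTO;

- name: an optional name for the loss instance.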
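Why `from_logits=True` tends to be more stable: applying softmax first and then taking the log can underflow to log(0) for large logits, whereas starting from logits lets the loss use the log-sum-exp form log p_c = z_c - log Σ_j exp(z_j). The NumPy illustration below uses deliberately extreme, made-up logits. (Keras mitigates the naive path by clipping probabilities with a small epsilon, but precision is still lost.)

```python
import numpy as np

def ce_from_probs(z, label):
    # naive path: softmax first, then log; overflow/underflow warnings are silenced on purpose
    with np.errstate(over="ignore", invalid="ignore", divide="ignore"):
        p = np.exp(z) / np.sum(np.exp(z))
        return -np.log(p[label])  # becomes inf if p[label] underflowed to 0

def ce_from_logits(z, label):
    m = np.max(z)
    log_sum_exp = m + np.log(np.sum(np.exp(z - m)))  # stable log(sum(exp(z)))
    return log_sum_exp - z[label]

z = np.array([0.0, 1000.0])  # extreme logits
print(ce_from_probs(z, 0), ce_from_logits(z, 0))  # inf vs 1000.0
```

For ordinary logits both functions agree; the difference only shows up when the logits are far apart.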

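2. VGG network architecture explained

A deep convolutional network generally consists of a convolutional part and a fully connected part. The convolutional part typically contains convolutions (possibly several cascaded kernels of different sizes), pooling, Dropout and so on, with Dropout usually placed after pooling. The fully connected part usually has at most 2 to 3 dense layers, and a final softmax produces the classification. Because dense layers have so many parameters, the current trend is to use as few of them as possible, or none at all. Other trends in network design are smaller filters such as 1×1 and 3×3; greater depth (AlexNet in 2012 had 8 layers, VGG in 2014 had 19 and GoogLeNet 22, ResNet in 2015 had 152); fewer dense layers; and increasingly complex structures, such as the Inception module introduced by GoogLeNet.

VGGNet was the runner-up in ImageNet 2014. VGG is named after the research group that created it, the Visual Geometry Group at the University of Oxford.

VGG is very well known in deep learning: when fine-tuning, many people download pretrained VGG weights and do transfer learning on top of them. VGG won first place in the localization task of ImageNet 2014 and second place in the classification task; first place in classification went to the famous GoogLeNet. So why do people prefer the runner-up? Because VGG is simple! Its defining feature is that, compared with earlier networks, it builds the network almost entirely by stacking 3×3 convolutions, which makes a much deeper network possible.

LeNet is widely known as one of the earliest convolutional networks, but it was AlexNet, which Krizhevsky used to win ILSVRC 2012, that made CNNs famous and greatly encouraged research on neural networks. Afterwards people kept improving on the AlexNet architecture, with steadily better results. VGG is one such improvement: the whole network uses the same 3×3 convolution kernels and 2×2 max pooling, giving it a simple, uniform structure.

The VGG paper reached an encouraging conclusion: increasing the depth of a convolutional network and using small convolution kernels both greatly improve the final classification accuracy. The AlexNet paper had also pointed out, at its end, that network depth matters a lot for the final results.

There were two main ways of improving on AlexNet:

- using a smaller receptive field and a smaller stride in the first convolutional layer;

- increasing the number of convolutional layers on top of AlexNet.

VGG16 has 16 weight layers and VGG19 has 19. All VGG variants share the same three fully connected layers at the end, and all contain 5 groups of convolutional layers, each group followed by a max-pooling layer; they differ in how many convolutional layers are cascaded within the groups, with more in the deeper variants.

In AlexNet each convolutional stage contains a single convolution, with large kernels in the early layers (11×11 in the first layer). In VGGNet each stage contains 2 to 4 convolutions with 3×3 kernels and stride 1, and pooling uses a 2×2 window with stride 2. The most obvious change in VGGNet is smaller convolution kernels and more convolutional layers.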
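The VGG16 configuration described above (13 convolutional layers in 5 blocks of 2, 2, 3, 3 and 3 layers, each block ending in 2x2 max pooling, then 3 dense layers) can be tallied in plain Python. For 224x224 inputs and 1000 ImageNet classes this gives the commonly cited 138,357,544 parameters; with the 17-class head used in this post it gives 134,330,193, about 14 times the 9.5M of the small CNN above. The block table and function name are a hypothetical sketch, not the Keras source.

```python
# VGG16: (number of 3x3 conv layers, output channels) per block; each block ends with 2x2 max pooling
VGG16_BLOCKS = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]

def vgg16_params(num_classes, input_size=224, in_channels=3):
    total, c_in, size = 0, in_channels, input_size
    for n_convs, c_out in VGG16_BLOCKS:
        for _ in range(n_convs):
            total += (3 * 3 * c_in + 1) * c_out  # 'same' padding keeps the spatial size
            c_in = c_out
        size //= 2                               # max pooling halves height and width
    flat = size * size * c_in                    # 7 * 7 * 512 = 25088 for 224x224 inputs
    for n_in, n_out in [(flat, 4096), (4096, 4096), (4096, num_classes)]:
        total += (n_in + 1) * n_out              # weights + biases of each dense layer
    return total

print(vgg16_params(1000))  # 138357544
print(vgg16_params(17))    # 134330193
```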

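VGG uses 2×2 pooling windows; smaller pooling windows capture finer detail. Average pooling was also in use at the time, but max pooling worked better on image tasks: it picks up changes in the image more easily, preserves larger local differences, and describes edges and textures better.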
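The difference between the two pooling types is easy to see on a toy 4x4 feature map containing a sharp vertical edge (the values are arbitrary). Note that the small CNN in this post actually uses AveragePooling2D. A NumPy sketch of 2x2, stride-2 pooling:

```python
import numpy as np

def pool2x2(x, mode="max"):
    h, w = x.shape
    # group the map into non-overlapping 2x2 windows: shape (h/2, 2, w/2, 2)
    windows = x.reshape(h // 2, 2, w // 2, 2)
    if mode == "max":
        return windows.max(axis=(1, 3))
    return windows.mean(axis=(1, 3))

feature_map = np.array([[0, 9, 0, 0],
                        [0, 9, 0, 0],
                        [0, 0, 0, 9],
                        [0, 0, 0, 9]], dtype=float)
print(pool2x2(feature_map, "max"))   # edge responses survive at full strength (9)
print(pool2x2(feature_map, "mean"))  # edge responses are diluted to 4.5
```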

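Replacing one convolutional layer that has a large kernel with several stacked layers that have small kernels reduces the number of parameters and, at the same time, applies more nonlinear mappings, which increases the network's expressive power.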
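The claim can be made concrete. With stride 1, stacking n 3x3 convolutions gives a (2n+1)x(2n+1) receptive field; with C input and C output channels (biases ignored) that costs n·9·C² weights, against (2n+1)²·C² for a single large kernel, while inserting n ReLUs instead of one. A quick check, where C = 64 is an arbitrary example:

```python
def receptive_field(n_layers, k=3):
    # each extra stride-1 k x k layer widens the receptive field by k - 1
    return 1 + n_layers * (k - 1)

def weights(kernel, channels, n_layers=1):
    return n_layers * kernel * kernel * channels * channels  # biases ignored

C = 64
for n in (2, 3):
    rf = receptive_field(n)
    print(f"{n} x 3x3: field {rf}x{rf}, {weights(3, C, n)} weights "
          f"vs one {rf}x{rf}: {weights(rf, C)} weights")
```

Two stacked 3x3 layers see a 5x5 region with 18C² weights instead of 25C²; three see 7x7 with 27C² instead of 49C².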

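1. Analysis of the results

In the loss plot from the model evaluation, the training loss keeps decreasing while the validation loss keeps rising. This is the classic sign of overfitting.

Overfitting is a very basic concept: when the training set is small and the model has many parameters, continued training makes the model learn the noise in the training set along with its real features. The training loss keeps going down, but the noise the model has fitted is not a general pattern, so on data it has not seen the loss rises instead.

Ways to address overfitting:

- Increase the amount of training data

- Adjust the model architecture

- Regularize the loss function, i.e. penalize large weights (weight decay), so that the model does not chase zero training loss at all costs

- Dropout: randomly deactivate some neurons during training to add randomness

- Stop training early instead of training for too many epochs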
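A sketch of what a Dropout layer does during training, following the "inverted dropout" scheme Keras uses: each unit is zeroed with probability `rate` and the surviving units are scaled by 1/(1 - rate), so the expected activation is unchanged; at inference the layer passes its input through untouched. NumPy, with an arbitrarily chosen seed:

```python
import numpy as np

def dropout(x, rate, training, rng):
    if not training or rate == 0.0:
        return x                                  # inference: identity
    keep = rng.random(x.shape) >= rate            # keep each unit with probability 1 - rate
    return np.where(keep, x / (1.0 - rate), 0.0)  # rescale the kept units

rng = np.random.default_rng(0)
x = np.ones(100_000)
y = dropout(x, rate=0.5, training=True, rng=rng)
print((y == 0).mean(), y.mean())  # fraction dropped near 0.5, mean near 1.0
```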

2. Trying model changes

2.1 Adding L2 regularization to the loss

Modify the code that builds the CNN model as follows:

# Add L2 regularization
from tensorflow.keras import layers, models, regularizers

model = models.Sequential([
    layers.experimental.preprocessing.Rescaling(1./255, input_shape=(img_height, img_width, 3)),
    layers.Conv2D(16, (3, 3), activation='relu',
                  kernel_regularizer=regularizers.l2(0.0001)),  # conv layer 1, 3x3 kernel
    layers.AveragePooling2D((2, 2)),                            # pooling layer 1, 2x2 downsampling
    layers.Conv2D(32, (3, 3), activation='relu',
                  kernel_regularizer=regularizers.l2(0.0001)),  # conv layer 2, 3x3 kernel
    layers.AveragePooling2D((2, 2)),                            # pooling layer 2, 2x2 downsampling
    layers.Dropout(0.5),
    layers.Conv2D(64, (3, 3), activation='relu',
                  kernel_regularizer=regularizers.l2(0.0001)),  # conv layer 3, 3x3 kernel
    layers.AveragePooling2D((2, 2)),
    layers.Dropout(0.5),
    layers.Conv2D(128, (3, 3), activation='relu',
                  kernel_regularizer=regularizers.l2(0.0001)),  # conv layer 4, 3x3 kernel
    layers.Dropout(0.5),
    layers.Flatten(),                                           # Flatten layer, connects the conv and dense layers
    layers.Dense(128, activation='relu',
                 kernel_regularizer=regularizers.l2(0.0001)),   # dense layer for further feature extraction
    layers.Dense(len(class_names))                              # output layer (logits)
])

model.summary()  # print the network structure
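`regularizers.l2(0.0001)` adds 0.0001 × Σw² over that layer's kernel to the training loss, so large weights are penalized; the loss Keras reports during training therefore includes this extra term on top of the cross-entropy. A NumPy sketch using random weights with the shape of the first Conv2D kernel (3, 3, 3, 16); the weight values and the `data_loss` number are made up for illustration.

```python
import numpy as np

def l2_penalty(kernel, l2=1e-4):
    # same formula as tf.keras.regularizers.l2: l2 * sum of squared weights
    return l2 * np.sum(np.square(kernel))

rng = np.random.default_rng(42)
kernel = rng.normal(scale=0.1, size=(3, 3, 3, 16))  # hypothetical weights with the first Conv2D's kernel shape
data_loss = 2.5                                      # made-up cross-entropy value
total_loss = data_loss + l2_penalty(kernel)
print(l2_penalty(kernel), total_loss)
# The penalty's gradient is 2 * l2 * w, so every update also pulls each weight toward 0 (weight decay).
```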

Training results:

Epoch 1/100

54/54 [==============================] - ETA: 0s - loss: 2.8482 - accuracy: 0.1006

Epoch 1: val_accuracy improved from -inf to 0.14444, saving model to best_model.h5

54/54 [==============================] - 16s 271ms/step - loss: 2.8482 - accuracy: 0.1006 - val_loss: 2.8117 - val_accuracy: 0.1444

Epoch 2/100

54/54 [==============================] - ETA: 0s - loss: 2.7738 - accuracy: 0.1240

Epoch 2: val_accuracy improved from 0.14444 to 0.18889, saving model to best_model.h5

54/54 [==============================] - 15s 275ms/step - loss: 2.7738 - accuracy: 0.1240 - val_loss: 2.6974 - val_accuracy: 0.1889

Epoch 3/100

54/54 [==============================] - ETA: 0s - loss: 2.6706 - accuracy: 0.1696

Epoch 3: val_accuracy improved from 0.18889 to 0.20000, saving model to best_model.h5

54/54 [==============================] - 15s 278ms/step - loss: 2.6706 - accuracy: 0.1696 - val_loss: 2.6341 - val_accuracy: 0.2000

......

54/54 [==============================] - 19s 346ms/step - loss: 0.1888 - accuracy: 0.9579 - val_loss: 3.7291 - val_accuracy: 0.4111

Epoch 64/100

54/54 [==============================] - ETA: 0s - loss: 0.1934 - accuracy: 0.9550

Epoch 64: val_accuracy did not improve from 0.42222

54/54 [==============================] - 20s 373ms/step - loss: 0.1934 - accuracy: 0.9550 - val_loss: 3.6920 - val_accuracy: 0.3889

Epoch 64: early stopping

Metrics at the epoch with the best val_accuracy: loss: 0.3227 - accuracy: 0.9135 - val_loss: 3.2502 - val_accuracy: 0.4222

Accuracy and loss curves:

2.2 The official VGG16 model

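# Build VGG16 through the official Keras applications API
import tensorflow as tf

def keras_vgg16(class_names, img_height, img_width):
    model = tf.keras.applications.VGG16(
        include_top=True,                 # keep the three fully connected layers
        weights=None,                     # random initialization, train from scratch
        input_tensor=None,
        input_shape=(img_height, img_width, 3),
        pooling=None,                     # 'avg' / 'max' only take effect when include_top=False
        classes=len(class_names),
        classifier_activation="softmax",
    )
    return model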

Training results:

Epoch 1/100

54/54 [==============================] - ETA: 0s - loss: 2.9707 - accuracy: 0.0977

Epoch 1: val_accuracy improved from -inf to 0.14444, saving model to best_model.h5

54/54 [==============================] - 486s 9s/step - loss: 2.9707 - accuracy: 0.0977 - val_loss: 2.7888 - val_accuracy: 0.1444

Epoch 2/100

54/54 [==============================] - ETA: 0s - loss: 2.7283 - accuracy: 0.1181

Epoch 2: val_accuracy did not improve from 0.14444

54/54 [==============================] - 567s 11s/step - loss: 2.7283 - accuracy: 0.1181 - val_loss: 2.6279 - val_accuracy: 0.1333

......

Epoch 27/100

54/54 [==============================] - ETA: 0s - loss: 1.6735e-05 - accuracy: 1.0000

Epoch 27: val_accuracy did not improve from 0.51111

54/54 [==============================] - 498s 9s/step - loss: 1.6735e-05 - accuracy: 1.0000 - val_loss: 4.6294 - val_accuracy: 0.5000

Epoch 27: early stopping

Metrics at the epoch with the best val_accuracy: loss: 1.1272 - accuracy: 0.6316 - val_loss: 2.0616 - val_accuracy: 0.5111

Accuracy and loss curves:

2.2 A hand-built VGG16 model

def VGG16(class_names, img_height, img_width):
    model = models.Sequential([
        layers.experimental.preprocessing.Rescaling(1. / 255, input_shape=(img_height, img_width, 3)),
        # block 1
        layers.Conv2D(filters=64, kernel_size=(3, 3), activation='relu', padding='same',
                      input_shape=(img_height, img_width, 3)),
        layers.Conv2D(filters=64, kernel_size=(3, 3), activation='relu', padding='same'),
        layers.MaxPool2D(pool_size=(2, 2), strides=2),
        # block 2
        layers.Conv2D(filters=128, kernel_size=(3, 3), activation='relu', padding='same'),
        layers.Conv2D(filters=128, kernel_size=(3, 3), activation='relu', padding='same'),
        layers.MaxPool2D(pool_size=(2, 2), strides=2),
        # block 3
        layers.Conv2D(filters=256, kernel_size=(3, 3), activation='relu', padding='same'),
        layers.Conv2D(filters=256, kernel_size=(3, 3), activation='relu', padding='same'),
        layers.Conv2D(filters=256, kernel_size=(3, 3), activation='relu', padding='same'),
        layers.MaxPool2D(pool_size=(2, 2), strides=2),
        # block 4
        layers.Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same'),
        layers.Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same'),
        layers.Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same'),
        layers.MaxPool2D(pool_size=(2, 2), strides=2),
        # block 5
        layers.Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same'),
        layers.Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same'),
        layers.Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same'),
        layers.MaxPool2D(pool_size=(2, 2), strides=2),
        # fully connected (FC) layers
        layers.Flatten(),
        layers.Dense(4096, activation='relu'),
        layers.Dense(4096, activation='relu'),
        layers.Dense(len(class_names), activation='softmax')
    ])
    return model

model = VGG16(class_names, img_height, img_width)
model.summary()  # print the network structure
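The parameter counts that `model.summary()` prints for this network are dominated by the fully connected layers. The sketch below recomputes them by hand. A 3×3 conv layer has 9·C_in·C_out + C_out parameters and a dense layer has in·out + out. The input is assumed to be 224×224×3 as in this post, so the five stride-2 max-pools leave a 7×7×512 map. Rescaling, MaxPool2D and Flatten have no parameters. The function name is hypothetical, and the class count is left as a parameter.

```python
def vgg16_param_counts(num_classes, img_size=224):
    """Return (conv_params, dense_params) for the hand-built VGG16 above."""
    # (in_channels, out_channels) of the 13 conv layers: 3x3 kernels, 'same' padding
    channels = [(3, 64), (64, 64),
                (64, 128), (128, 128),
                (128, 256), (256, 256), (256, 256),
                (256, 512), (512, 512), (512, 512),
                (512, 512), (512, 512), (512, 512)]
    conv = sum(3 * 3 * cin * cout + cout for cin, cout in channels)

    side = img_size // 2 ** 5   # five 2x2 max-pools with stride 2: 224 -> 7
    flat = side * side * 512    # size of the Flatten output
    dense = 0
    for fan_in, fan_out in [(flat, 4096), (4096, 4096), (4096, num_classes)]:
        dense += fan_in * fan_out + fan_out
    return conv, dense


conv, dense = vgg16_param_counts(num_classes=1000)
print(conv, dense, conv + dense)  # 14714688 123642856 138357544
```

With 1000 classes this reproduces the well-known VGG16 total of 138,357,544 parameters. The first Dense(4096) alone holds 25088 × 4096 + 4096 = 102,764,544 of them. A model this large against a dataset of about 1800 images helps explain why training accuracy reaches 1.0 while val_accuracy stays low.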

Training results:

Epoch 1/100

54/54 [==============================] - ETA: 0s - loss: 2.8211 - accuracy: 0.1023

Epoch 1: val_accuracy improved from -inf to 0.14444, saving model to best_model.h5

54/54 [==============================] - 543s 10s/step - loss: 2.8211 - accuracy: 0.1023 - val_loss: 2.7904 - val_accuracy: 0.1444

Epoch 2/100

54/54 [==============================] - ETA: 0s - loss: 2.7529 - accuracy: 0.1152

Epoch 2: val_accuracy improved from 0.14444 to 0.16667, saving model to best_model.h5

......

Epoch 28/100

54/54 [==============================] - ETA: 0s - loss: 1.9864e-05 - accuracy: 1.0000

Epoch 28: val_accuracy did not improve from 0.45556

54/54 [==============================] - 443s 8s/step - loss: 1.9864e-05 - accuracy: 1.0000 - val_loss: 6.9847 - val_accuracy: 0.4222

Epoch 28: early stopping

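Metrics at the epoch with the best val_accuracy: loss: 1.3580 - accuracy: 0.5520 - val_loss: 2.2237 - val_accuracy: 0.4556

Accuracy and loss curves:

2.3 Hand-built VGG16 + dropout in both the conv and fully connected layers + L2 regularization (conv layers)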

from tensorflow.keras import layers, models, regularizers

def VGG16_dropout_l2(class_names, img_height, img_width):
    model = models.Sequential([
        layers.experimental.preprocessing.Rescaling(1. / 255, input_shape=(img_height, img_width, 3)),
        # block 1
        layers.Conv2D(filters=64, kernel_size=(3, 3), activation='relu', padding='same',
                      kernel_regularizer=regularizers.l2(0.0001), input_shape=(img_height, img_width, 3)),
        layers.Conv2D(filters=64, kernel_size=(3, 3), activation='relu', padding='same',
                      kernel_regularizer=regularizers.l2(0.0001)),
        layers.MaxPool2D(pool_size=(2, 2), strides=2),
        layers.Dropout(0.5),
        # block 2
        layers.Conv2D(filters=128, kernel_size=(3, 3), activation='relu', padding='same',
                      kernel_regularizer=regularizers.l2(0.0001)),
        layers.Conv2D(filters=128, kernel_size=(3, 3), activation='relu', padding='same',
                      kernel_regularizer=regularizers.l2(0.0001)),
        layers.MaxPool2D(pool_size=(2, 2), strides=2),
        layers.Dropout(0.5),
        # block 3
        layers.Conv2D(filters=256, kernel_size=(3, 3), activation='relu', padding='same',
                      kernel_regularizer=regularizers.l2(0.0001)),
        layers.Conv2D(filters=256, kernel_size=(3, 3), activation='relu', padding='same',
                      kernel_regularizer=regularizers.l2(0.0001)),
        layers.Conv2D(filters=256, kernel_size=(3, 3), activation='relu', padding='same',
                      kernel_regularizer=regularizers.l2(0.0001)),
        layers.MaxPool2D(pool_size=(2, 2), strides=2),
        layers.Dropout(0.5),
        # block 4
        layers.Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same',
                      kernel_regularizer=regularizers.l2(0.0001)),
        layers.Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same',
                      kernel_regularizer=regularizers.l2(0.0001)),
        layers.Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same',
                      kernel_regularizer=regularizers.l2(0.0001)),
        layers.MaxPool2D(pool_size=(2, 2), strides=2),
        layers.Dropout(0.5),
        # block 5
        layers.Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same',
                      kernel_regularizer=regularizers.l2(0.0001)),
        layers.Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same',
                      kernel_regularizer=regularizers.l2(0.0001)),
        layers.Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same',
                      kernel_regularizer=regularizers.l2(0.0001)),
        layers.MaxPool2D(pool_size=(2, 2), strides=2),
        layers.Dropout(0.5),
        # fully connected (FC) layers
        layers.Flatten(),
        layers.Dense(4096, activation='relu'),
        layers.Dropout(0.5),
        layers.Dense(4096, activation='relu'),
        layers.Dropout(0.5),
        layers.Dense(len(class_names), activation='softmax')
    ])
    return model

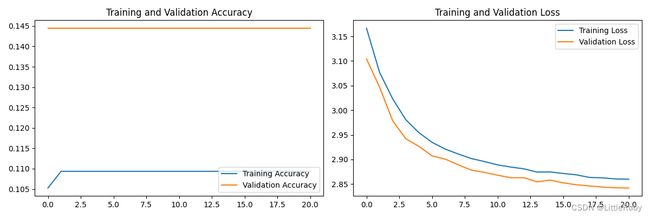

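Metrics at the epoch with the best val_accuracy: loss: 3.2022 - accuracy: 0.1099 - val_loss: 3.1968 - val_accuracy: 0.1444

Accuracy and loss curves:

The results are very poor: accuracy barely changes during training.

2.4 Hand-built VGG16 + dropout in the fully connected layers only + L2 regularization (conv layers)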
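Keras `Dropout(rate)` sets each input to 0 with probability `rate` during training and scales the surviving inputs by `1 / (1 - rate)`. At inference it leaves inputs unchanged. The model above uses `rate=0.5` after every conv block and every dense layer, so half of the activations at each of those points are dropped on every step. One plausible reason the network barely learns here is the combined noise and reduced effective capacity, though that is a hypothesis the logs alone do not prove. A minimal pure-Python sketch of the mechanism (the function name is hypothetical):

```python
import random


def dropout(inputs, rate, training, rng=random):
    """Inverted dropout: during training, zero each value with probability
    `rate` and scale kept values by 1 / (1 - rate) so the expected value
    is unchanged; at inference, return the inputs as-is."""
    if not training or rate == 0:
        return list(inputs)
    keep_scale = 1.0 / (1.0 - rate)
    return [0.0 if rng.random() < rate else x * keep_scale for x in inputs]


rng = random.Random(0)
out = dropout([1.0] * 10, rate=0.5, training=True, rng=rng)
print(out)  # every entry is either 0.0 or 2.0
print(dropout([1.0, 2.0, 3.0], rate=0.5, training=False))  # [1.0, 2.0, 3.0]
```

The 1 / (1 - rate) scaling keeps the expected activation the same in training and inference, so no rescaling is needed when the model is used for prediction.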

from tensorflow.keras import layers, models, regularizers

def VGG16_dropout_l2_1(class_names, img_height, img_width):
    model = models.Sequential([
        layers.experimental.preprocessing.Rescaling(1. / 255, input_shape=(img_height, img_width, 3)),
        # block 1
        layers.Conv2D(filters=64, kernel_size=(3, 3), activation='relu', padding='same',
                      kernel_regularizer=regularizers.l2(0.0001), input_shape=(img_height, img_width, 3)),
        layers.Conv2D(filters=64, kernel_size=(3, 3), activation='relu', padding='same',
                      kernel_regularizer=regularizers.l2(0.0001)),
        layers.MaxPool2D(pool_size=(2, 2), strides=2),
        # block 2
        layers.Conv2D(filters=128, kernel_size=(3, 3), activation='relu', padding='same',
                      kernel_regularizer=regularizers.l2(0.0001)),
        layers.Conv2D(filters=128, kernel_size=(3, 3), activation='relu', padding='same',
                      kernel_regularizer=regularizers.l2(0.0001)),
        layers.MaxPool2D(pool_size=(2, 2), strides=2),
        # block 3
        layers.Conv2D(filters=256, kernel_size=(3, 3), activation='relu', padding='same',
                      kernel_regularizer=regularizers.l2(0.0001)),
        layers.Conv2D(filters=256, kernel_size=(3, 3), activation='relu', padding='same',
                      kernel_regularizer=regularizers.l2(0.0001)),
        layers.Conv2D(filters=256, kernel_size=(3, 3), activation='relu', padding='same',
                      kernel_regularizer=regularizers.l2(0.0001)),
        layers.MaxPool2D(pool_size=(2, 2), strides=2),
        # block 4
        layers.Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same',
                      kernel_regularizer=regularizers.l2(0.0001)),
        layers.Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same',
                      kernel_regularizer=regularizers.l2(0.0001)),
        layers.Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same',
                      kernel_regularizer=regularizers.l2(0.0001)),
        layers.MaxPool2D(pool_size=(2, 2), strides=2),
        # block 5
        layers.Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same',
                      kernel_regularizer=regularizers.l2(0.0001)),
        layers.Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same',
                      kernel_regularizer=regularizers.l2(0.0001)),
        layers.Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same',
                      kernel_regularizer=regularizers.l2(0.0001)),
        layers.MaxPool2D(pool_size=(2, 2), strides=2),
        # fully connected (FC) layers, dropout only here
        layers.Flatten(),
        layers.Dense(4096, activation='relu'),
        layers.Dropout(0.5),
        layers.Dense(4096, activation='relu'),
        layers.Dropout(0.5),
        layers.Dense(len(class_names), activation='softmax')
    ])
    return model

Metrics at the epoch with the best val_accuracy: loss: 3.1666 - accuracy: 0.1053 - val_loss: 3.1042 - val_accuracy: 0.1444

Accuracy and loss curves:

The results are still poor.
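A quick sanity check for runs like 2.3 and 2.4 is to compare the logged numbers with chance-level baselines. A classifier that outputs a uniform distribution over C classes has cross-entropy ln C, and random guessing reaches accuracy 1/C. If the logged values stay near these baselines, the model has learned little. The number of classes is not stated in this section, so C is a parameter, and the value 17 below is only an example. With L2 on every conv layer, the reported loss also includes the penalty terms, so it can sit somewhat above ln C even at chance level.

```python
import math


def chance_baselines(num_classes):
    """Cross-entropy and accuracy of a model that predicts a uniform
    distribution over `num_classes` classes."""
    return math.log(num_classes), 1.0 / num_classes


loss0, acc0 = chance_baselines(17)  # 17 is an example value, not taken from this section
print(round(loss0, 4), round(acc0, 4))  # 2.8332 0.0588
```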

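Summary

In this exercise I used the TensorFlow framework to build a CNN for Hollywood star recognition, and tried modifying the model to improve recognition accuracy:

Adding L2 regularization to the original network improved accuracy (best val_accuracy 0.4222);

Using either the official VGG16 model or the hand-built VGG16 model improved accuracy (best val_accuracy 0.5111 and 0.4556 respectively);

VGG16 + dropout in both the conv and fully connected layers + L2: accuracy dropped and the results were very poor (best val_accuracy 0.1444);

VGG16 + dropout in the fully connected layers only + L2: accuracy also dropped and the results were very poor (best val_accuracy 0.1444).

My computer has no discrete GPU, so training is extremely slow, and I will not try further tuning for now.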