多机多卡分布式训练

1. 环境搭建

- 分布式训练框架:accelerate+deepspeed+pdsh(可有可无)

- 基础环境:cuda、显卡驱动、pytorch

1.1 安装相关包

- cuda安装:参考官网安装步骤

wget https://developer.download.nvidia.com/compute/cuda/11.8.0/local_installers/cuda-repo-rhel7-11-8-local-11.8.0_520.61.05-1.x86_64.rpm

sudo rpm -i cuda-repo-rhel7-11-8-local-11.8.0_520.61.05-1.x86_64.rpm

sudo yum clean all

sudo yum -y install nvidia-driver-latest-dkms

sudo yum -y install cuda- 显卡驱动安装:下载官网驱动包并安装

- pytorch安装:参考官网安装指令

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118- accelerate安装:参考huggingface官网

pip install accelerate- deepspeed安装:参考deepspeed github

pip install deepspeed- pdsh安装:官网说明,用于分布执行shell命令

可以参考教程:并行分布式运维工具pdsh-阿里云开发者社区

tar jxvf pdsh-2.29.tar.bz2

cd pdsh-2.29

./configure --with-ssh --with-rsh --with-mrsh --with-dshgroups --with-machines=/etc/pdsh/machines

make

make install

pdsh -V注意:所有机器均需要安装一模一样的环境:版本需要一致;conda安装路径一致;同时cuda和pytorch版本相对应,如下图所示。

2. 启动分布式训练

2.1 使用accelerate

# 1、生成accelerate配置文件,使用命令行生成

accelerate config2.2 启动分布式训练脚本

方式一:使用pdsh,仅需要在主节点启动

# accelerate语法

accelerate launch --config_file python_script.py

# 示例如下

config_path=/data0/sdmt/mxm/kohya_ss/my_util/config/deepspeed_pdsh_config.yaml

accelerate launch --config_file $config_path \

train_text_to_image_sdxl.py --mixed_precision fp16 --enable_xformers_memory_efficient_attention --gradient_checkpointing --noise_offset 0.05 --cache_dir "/data0/sdmt/mxm/datasets/" --num_train_epochs 20 --resolution 1024 --proportion_empty_prompts 0.2 --learning_rate 1e-06 --lr_scheduler "constant" --lr_warmup_steps 0 --validation_prompt "a pair of casual leather shoes" --validation_epochs 5 --pretrained_model_name_or_path "stabilityai/stable-diffusion-xl-base-1.0" --pretrained_vae_model_name_or_path "madebyollin/sdxl-vae-fp16-fix" --train_data_dir "/data0/sdmt/train_img/000/10_train_1024_hug"

如下所示,启动2台服务器,服务器每台一张显卡。

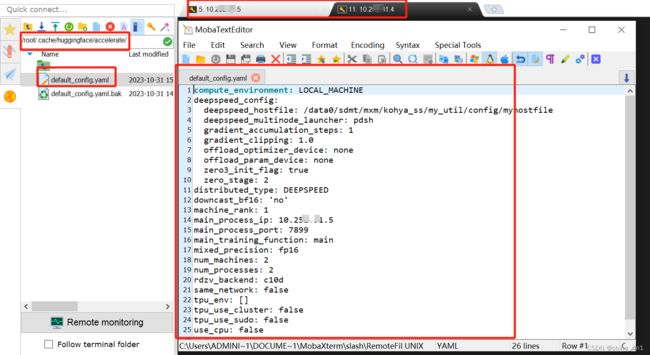

2.3 accelerate 和 deepspeed环境配置文件

- accelerate

使用accelerate config生成的配置文件default_config.yaml,默认在如下目录下:

/root/.cache/huggingface/accelerate/default_config.yaml

- deepspeed 命令运行,默认在当前目录下如下文件,环境变量是追加形式:

.deepspeed_env.yaml

注意:nccl问题,可以查看.deepspeed_env.yaml配置问题。可以直接删除

3. 问题记录

- 问题1:RuntimeError:

127.0.0.1: Some tensors share memory, this will lead to duplicate memory on disk and potential differences when loading them again:

127.0.0.1: Configuration saved in sdxl-model-finetuned/vae/config.jsonit/s]

127.0.0.1: Model weights saved in sdxl-model-finetuned/vae/diffusion_pytorch_model.safetensors

127.0.0.1: [2023-11-02 11:16:49,463] [INFO] [launch.py:347:main] Process 43174 exits successfully.

127.0.0.1: Configuration saved in sdxl-model-finetuned/unet/config.json

127.0.0.1: Traceback (most recent call last):

127.0.0.1: File "/data0/sdmt/mxm/kohya_ss/my_util/train_text_to_image_sdxl.py", line 1206, in

127.0.0.1: main(args)

127.0.0.1: File "/data0/sdmt/mxm/kohya_ss/my_util/train_text_to_image_sdxl.py", line 1157, in main

127.0.0.1: pipeline.save_pretrained(args.output_dir)

127.0.0.1: File "/root/.conda/envs/sdxl/lib/python3.10/site-packages/diffusers/pipelines/pipeline_utils.py", line 661, in save_pretrained

127.0.0.1: save_method(os.path.join(save_directory, pipeline_component_name), **save_kwargs)

127.0.0.1: File "/root/.conda/envs/sdxl/lib/python3.10/site-packages/diffusers/models/modeling_utils.py", line 361, in save_pretrained

127.0.0.1: safetensors.torch.save_file(

127.0.0.1: File "/root/.conda/envs/sdxl/lib/python3.10/site-packages/safetensors/torch.py", line 232, in save_file

127.0.0.1: serialize_file(_flatten(tensors), filename, metadata=metadata)

127.0.0.1: File "/root/.conda/envs/sdxl/lib/python3.10/site-packages/safetensors/torch.py", line 394, in _flatten

127.0.0.1: raise RuntimeError(

127.0.0.1: RuntimeError:

127.0.0.1: Some tensors share memory, this will lead to duplicate memory on disk and potential differences when loading them again: [{'down_blocks.2.attentions.0.transformer_blocks.4.attn2.to_v.weight', 'up_blocks.0.attentions.2.transformer_blocks. 解决方法:报错信息里,提到save_file() --serialize_file()。仅需要更改代码,添加设置参数 safe_serialization=False,保存为pickle格式。

pipeline.save_pretrained(args.output_dir, safe_serialization=False)- 问题2:RuntimeError:

Timed out initializing process group in store based barrier on

10.252.31.4: rank: 0, for key: store_based_barrier_key:2 (world_size=2, worker_count=1,

10.252.31.4: timeout=0:30:00)

raise RuntimeError(

127.0.0.1: RuntimeError: Timed out initializing process group in store based barrier on rank: 0, for key: store_based_barrier_key:2 (world_size=2, worker_count=1, timeout=0:30:00)

.....................

127.0.0.1: │ /root/.conda/envs/sdxl/lib/python3.10/site-packages/torch/distributed/distri │

127.0.0.1: │ buted_c10d.py:469 in _store_based_barrier │

127.0.0.1: │ │

127.0.0.1: │ 466 │ │ │ log_time = time.time() │

127.0.0.1: │ 467 │ │ │

127.0.0.1: │ 468 │ │ if timedelta(seconds=(time.time() - start)) > timeout: │

127.0.0.1: │ ❱ 469 │ │ │ raise RuntimeError( │

127.0.0.1: │ 470 │ │ │ │ "Timed out initializing process group in store based │

127.0.0.1: │ 471 │ │ │ │ "rank: {}, for key: {} (world_size={}, worker_count={ │

127.0.0.1: │ 472 │ │ │ │ │ rank, store_key, world_size, worker_count, timeou │

127.0.0.1: ╰──────────────────────────────────────────────────────────────────────────────╯

127.0.0.1: RuntimeError: Timed out initializing process group in store based barrier on

127.0.0.1: rank: 0, for key: store_based_barrier_key:2 (world_size=2, worker_count=1,

127.0.0.1: timeout=0:30:00)解决办法:原因:由于数据量大,导致数据预处理时间长,超出默认时间30分通信连接。

修改超时间,如下如所示,超时修改为120分钟,修改源代码constans.py文件在客路径下/root/.conda/envs/sdxl/lib/python3.10/site-packages/torch/distributed/constants.py,注意修改/root/.conda/envs/sdxl/lib/python3.10/site-packages/deepspeed/constants.py可能会未生效。

from torch._C._distributed_c10d import _DEFAULT_PG_TIMEOUT

# Default process group wide timeout, if applicable.

# This only applies to the gloo and nccl backends

# (only if NCCL_BLOCKING_WAIT or NCCL_ASYNC_ERROR_HANDLING is set to 1).

# To make an attempt at backwards compatibility with THD, we use an

# extraordinarily high default timeout, given that THD did not have timeouts.

default_pg_timeout = _DEFAULT_PG_TIMEOUT

from datetime import timedelta

default_pg_timeout = timedelta(minutes=120)- 训练速度影响因素:网络带宽(千兆/万兆)区别。如下所示训练时长差异,两台A800机器: