YOLOv8改进 | 检测头篇 | ASFF改进YOLOv8检测头(全网首发)

一、本文介绍

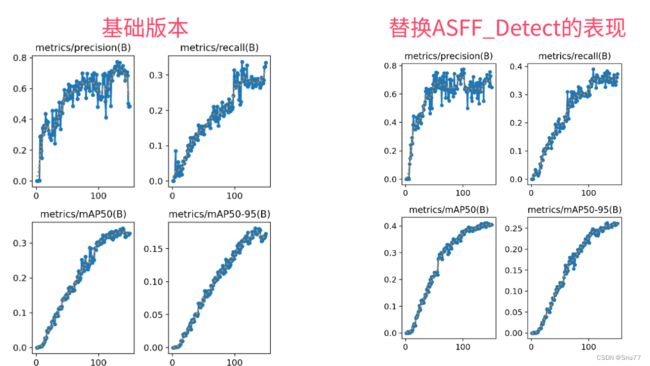

本文给大家带来的改进机制是利用ASFF改进YOLOv8的检测头形成新的检测头Detect_ASFF,其主要创新是引入了一种自适应的空间特征融合方式,有效地过滤掉冲突信息,从而增强了尺度不变性。经过我的实验验证,修改后的检测头在所有的检测目标上均有大幅度的涨点效果,此版本为三头版本,后期我会在该检测头的基础上进行二次创新形成四头版本的Detect_ASFF助力小目标检测,本文的检测头非常推荐大家使用。

推荐指数:⭐⭐⭐⭐⭐

涨点效果:⭐⭐⭐⭐⭐

专栏回顾:YOLOv8改进系列专栏——本专栏持续复习各种顶会内容——科研必备

训练结果对比图->

目录

一、本文介绍

二、ASFF的基本框架原理

三、ASFF_Detect的核心代码

四、手把手教你添加ASFF_Detect检测头

4.1 修改一

4.2 修改二

4.3 修改三

4.4 修改四

4.5 修改五

4.6 修改六

4.7 修改七

4.8 修改八

4.9 修改九

五、Detect_AFPN检测头的yaml文件

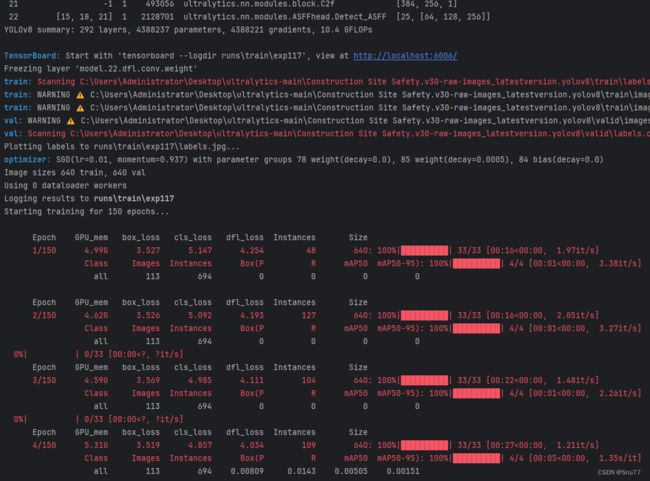

六、完美运行记录

七、本文总结

二、ASFF的基本框架原理

官方论文地址: 官方论文地址点击即可跳转

官方代码地址: 官方代码地址点击即可跳转

ASFF(自适应空间特征融合)方法针对单次对象检测任务提出,解决了不同特征尺度间的一致性问题。其主要创新是引入了一种自适应的空间特征融合方式,有效地过滤掉冲突信息,从而增强了尺度不变性。研究表明,将ASFF应用于YOLOv3可以显著提高在MS COCO数据集上的检测性能,实现了速度与准确性的平衡。ASFF方法可以通过反向传播进行训练,与模型无关,并且引入的计算开销很小,使其成为现有对象检测框架的一种实用增强。

ASFF的创新点主要包括:

1. 自适应空间特征融合:提出了一种新的金字塔特征融合策略,能够空间过滤冲突信息,压制不同尺度特征间的不一致性。

2. 改善尺度不变性:通过ASFF策略,显著提升了特征的尺度不变性,有助于提高对象检测的准确性。

3. 低推理开销:在提升检测性能的同时,几乎不增加额外的推理开销。

这些创新使ASFF成为单次对象检测领域的一个重要进展,特别是对处理不同尺度对象的能力的提升,所以将其对于一些单一尺度检测的Neck适合是不适用的大家需要注意这一点。

这张图片展示了自适应空间特征融合(ASFF)机制的工作原理,它是用于单次对象检测的。在这种结构中,不同层级的特征(表示为不同颜色的层)首先通过各自的步幅(stride)进行下采样或上采样,以便所有特征具有相同的空间维度。

- Level 1、Level 2和Level 3指的是特征金字塔中不同层级的特征,每个层级都有不同的空间分辨率。

- ASFF-1、ASFF-2和ASFF-3表示应用了ASFF机制的不同层级的特征融合。

- 在ASFF-3的放大部分,我们可以看到来自其他层级的特征(x1→3、x2→3)被调整到与第三层(x3→3)相同的尺寸,然后它们通过学习到的权重图进行加权融合,生成最终用于预测的融合特征(![]() )。

)。

通过这种方式,ASFF能够在每个空间位置自适应地选择最有用的特征,以提高检测的准确性。这种方法允许模型根据每个特定位置和尺度的上下文,灵活地决定哪些特征层级对最终预测最为重要。

三、ASFF_Detect的核心代码

现在是三头的检测版本,后期我会出四头的增加小目标检测层的版本给大家,其使用方式看章节四。

import torch

import torch.nn as nn

from ultralytics.utils.tal import dist2bbox, make_anchors

import math

import torch.nn.functional as F

def autopad(k, p=None, d=1): # kernel, padding, dilation

"""Pad to 'same' shape outputs."""

if d > 1:

k = d * (k - 1) + 1 if isinstance(k, int) else [d * (x - 1) + 1 for x in k] # actual kernel-size

if p is None:

p = k // 2 if isinstance(k, int) else [x // 2 for x in k] # auto-pad

return p

class Conv(nn.Module):

"""Standard convolution with args(ch_in, ch_out, kernel, stride, padding, groups, dilation, activation)."""

default_act = nn.SiLU() # default activation

def __init__(self, c1, c2, k=1, s=1, p=None, g=1, d=1, act=True):

"""Initialize Conv layer with given arguments including activation."""

super().__init__()

self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p, d), groups=g, dilation=d, bias=False)

self.bn = nn.BatchNorm2d(c2)

self.act = self.default_act if act is True else act if isinstance(act, nn.Module) else nn.Identity()

def forward(self, x):

"""Apply convolution, batch normalization and activation to input tensor."""

return self.act(self.bn(self.conv(x)))

def forward_fuse(self, x):

"""Perform transposed convolution of 2D data."""

return self.act(self.conv(x))

class DFL(nn.Module):

"""

Integral module of Distribution Focal Loss (DFL).

Proposed in Generalized Focal Loss https://ieeexplore.ieee.org/document/9792391

"""

def __init__(self, c1=16):

"""Initialize a convolutional layer with a given number of input channels."""

super().__init__()

self.conv = nn.Conv2d(c1, 1, 1, bias=False).requires_grad_(False)

x = torch.arange(c1, dtype=torch.float)

self.conv.weight.data[:] = nn.Parameter(x.view(1, c1, 1, 1))

self.c1 = c1

def forward(self, x):

"""Applies a transformer layer on input tensor 'x' and returns a tensor."""

b, c, a = x.shape # batch, channels, anchors

return self.conv(x.view(b, 4, self.c1, a).transpose(2, 1).softmax(1)).view(b, 4, a)

# return self.conv(x.view(b, self.c1, 4, a).softmax(1)).view(b, 4, a)

class ASFFV5(nn.Module):

def __init__(self, level, multiplier=1, rfb=False, vis=False, act_cfg=True):

"""

ASFF version for YoloV5 .

different than YoloV3

multiplier should be 1, 0.5 which means, the channel of ASFF can be

512, 256, 128 -> multiplier=1

256, 128, 64 -> multiplier=0.5

For even smaller, you need change code manually.

"""

super(ASFFV5, self).__init__()

self.level = level

self.dim = [int(1024 * multiplier), int(512 * multiplier),

int(256 * multiplier)]

# print(self.dim)

self.inter_dim = self.dim[self.level]

if level == 0:

self.stride_level_1 = Conv(int(512 * multiplier), self.inter_dim, 3, 2)

self.stride_level_2 = Conv(int(256 * multiplier), self.inter_dim, 3, 2)

self.expand = Conv(self.inter_dim, int(

1024 * multiplier), 3, 1)

elif level == 1:

self.compress_level_0 = Conv(

int(1024 * multiplier), self.inter_dim, 1, 1)

self.stride_level_2 = Conv(

int(256 * multiplier), self.inter_dim, 3, 2)

self.expand = Conv(self.inter_dim, int(512 * multiplier), 3, 1)

elif level == 2:

self.compress_level_0 = Conv(

int(1024 * multiplier), self.inter_dim, 1, 1)

self.compress_level_1 = Conv(

int(512 * multiplier), self.inter_dim, 1, 1)

self.expand = Conv(self.inter_dim, int(

256 * multiplier), 3, 1)

# when adding rfb, we use half number of channels to save memory

compress_c = 8 if rfb else 16

self.weight_level_0 = Conv(

self.inter_dim, compress_c, 1, 1)

self.weight_level_1 = Conv(

self.inter_dim, compress_c, 1, 1)

self.weight_level_2 = Conv(

self.inter_dim, compress_c, 1, 1)

self.weight_levels = Conv(

compress_c * 3, 3, 1, 1)

self.vis = vis

def forward(self, x): # l,m,s

"""

# 128, 256, 512

512, 256, 128

from small -> large

"""

x_level_0 = x[2] # l

x_level_1 = x[1] # m

x_level_2 = x[0] # s

# print('x_level_0: ', x_level_0.shape)

# print('x_level_1: ', x_level_1.shape)

# print('x_level_2: ', x_level_2.shape)

if self.level == 0:

level_0_resized = x_level_0

level_1_resized = self.stride_level_1(x_level_1)

level_2_downsampled_inter = F.max_pool2d(

x_level_2, 3, stride=2, padding=1)

level_2_resized = self.stride_level_2(level_2_downsampled_inter)

elif self.level == 1:

level_0_compressed = self.compress_level_0(x_level_0)

level_0_resized = F.interpolate(

level_0_compressed, scale_factor=2, mode='nearest')

level_1_resized = x_level_1

level_2_resized = self.stride_level_2(x_level_2)

elif self.level == 2:

level_0_compressed = self.compress_level_0(x_level_0)

level_0_resized = F.interpolate(

level_0_compressed, scale_factor=4, mode='nearest')

x_level_1_compressed = self.compress_level_1(x_level_1)

level_1_resized = F.interpolate(

x_level_1_compressed, scale_factor=2, mode='nearest')

level_2_resized = x_level_2

# print('level: {}, l1_resized: {}, l2_resized: {}'.format(self.level,

# level_1_resized.shape, level_2_resized.shape))

level_0_weight_v = self.weight_level_0(level_0_resized)

level_1_weight_v = self.weight_level_1(level_1_resized)

level_2_weight_v = self.weight_level_2(level_2_resized)

# print('level_0_weight_v: ', level_0_weight_v.shape)

# print('level_1_weight_v: ', level_1_weight_v.shape)

# print('level_2_weight_v: ', level_2_weight_v.shape)

levels_weight_v = torch.cat(

(level_0_weight_v, level_1_weight_v, level_2_weight_v), 1)

levels_weight = self.weight_levels(levels_weight_v)

levels_weight = F.softmax(levels_weight, dim=1)

fused_out_reduced = level_0_resized * levels_weight[:, 0:1, :, :] + \

level_1_resized * levels_weight[:, 1:2, :, :] + \

level_2_resized * levels_weight[:, 2:, :, :]

out = self.expand(fused_out_reduced)

if self.vis:

return out, levels_weight, fused_out_reduced.sum(dim=1)

else:

return out

class Detect_ASFF(nn.Module):

"""YOLOv8 Detect head for detection models."""

dynamic = False # force grid reconstruction

export = False # export mode

shape = None

anchors = torch.empty(0) # init

strides = torch.empty(0) # init

def __init__(self, nc=80, ch=(), multiplier=0.25, rfb=False):

"""Initializes the YOLOv8 detection layer with specified number of classes and channels."""

super().__init__()

self.nc = nc # number of classes

self.nl = len(ch) # number of detection layers

self.reg_max = 16 # DFL channels (ch[0] // 16 to scale 4/8/12/16/20 for n/s/m/l/x)

self.no = nc + self.reg_max * 4 # number of outputs per anchor

self.stride = torch.zeros(self.nl) # strides computed during build

c2, c3 = max((16, ch[0] // 4, self.reg_max * 4)), max(ch[0], min(self.nc, 100)) # channels

self.cv2 = nn.ModuleList(

nn.Sequential(Conv(x, c2, 3), Conv(c2, c2, 3), nn.Conv2d(c2, 4 * self.reg_max, 1)) for x in ch)

self.cv3 = nn.ModuleList(nn.Sequential(Conv(x, c3, 3), Conv(c3, c3, 3), nn.Conv2d(c3, self.nc, 1)) for x in ch)

self.dfl = DFL(self.reg_max) if self.reg_max > 1 else nn.Identity()

self.l0_fusion = ASFFV5(level=0, multiplier=multiplier, rfb=rfb)

self.l1_fusion = ASFFV5(level=1, multiplier=multiplier, rfb=rfb)

self.l2_fusion = ASFFV5(level=2, multiplier=multiplier, rfb=rfb)

def forward(self, x):

"""Concatenates and returns predicted bounding boxes and class probabilities."""

x1 = self.l0_fusion(x)

x2 = self.l1_fusion(x)

x3 = self.l2_fusion(x)

x = [x3, x2, x1]

shape = x[0].shape # BCHW

for i in range(self.nl):

x[i] = torch.cat((self.cv2[i](x[i]), self.cv3[i](x[i])), 1)

if self.training:

return x

elif self.dynamic or self.shape != shape:

self.anchors, self.strides = (x.transpose(0, 1) for x in make_anchors(x, self.stride, 0.5))

self.shape = shape

x_cat = torch.cat([xi.view(shape[0], self.no, -1) for xi in x], 2)

if self.export and self.format in ('saved_model', 'pb', 'tflite', 'edgetpu', 'tfjs'): # avoid TF FlexSplitV ops

box = x_cat[:, :self.reg_max * 4]

cls = x_cat[:, self.reg_max * 4:]

else:

box, cls = x_cat.split((self.reg_max * 4, self.nc), 1)

dbox = dist2bbox(self.dfl(box), self.anchors.unsqueeze(0), xywh=True, dim=1) * self.strides

if self.export and self.format in ('tflite', 'edgetpu'):

# Normalize xywh with image size to mitigate quantization error of TFLite integer models as done in YOLOv5:

# https://github.com/ultralytics/yolov5/blob/0c8de3fca4a702f8ff5c435e67f378d1fce70243/models/tf.py#L307-L309

# See this PR for details: https://github.com/ultralytics/ultralytics/pull/1695

img_h = shape[2] * self.stride[0]

img_w = shape[3] * self.stride[0]

img_size = torch.tensor([img_w, img_h, img_w, img_h], device=dbox.device).reshape(1, 4, 1)

dbox /= img_size

y = torch.cat((dbox, cls.sigmoid()), 1)

return y if self.export else (y, x)

def bias_init(self):

"""Initialize Detect() biases, WARNING: requires stride availability."""

m = self # self.model[-1] # Detect() module

# cf = torch.bincount(torch.tensor(np.concatenate(dataset.labels, 0)[:, 0]).long(), minlength=nc) + 1

# ncf = math.log(0.6 / (m.nc - 0.999999)) if cf is None else torch.log(cf / cf.sum()) # nominal class frequency

for a, b, s in zip(m.cv2, m.cv3, m.stride): # from

a[-1].bias.data[:] = 1.0 # box

b[-1].bias.data[:m.nc] = math.log(5 / m.nc / (640 / s) ** 2) # cls (.01 objects, 80 classes, 640 img)

if __name__ == "__main__":

# Generating Sample image

image1 = (1, 64, 32, 32)

image2 = (1, 128, 16, 16)

image3 = (1, 256, 8, 8)

image1 = torch.rand(image1)

image2 = torch.rand(image2)

image3 = torch.rand(image3)

image = [image1, image2, image3]

channel = (64, 128, 256)

# Model

mobilenet_v1 = Detect_ASFF(nc=80, ch=channel)

out = mobilenet_v1(image)

print(out)四、手把手教你添加ASFF_Detect检测头

这里教大家添加检测头,检测头的添加相对于其它机制来说比较复杂一点,修改的地方比较多。

具体更多细节可以看我的添加教程博客,下面的教程也是完美运行的,看那个都行具体大家选择。

添加教程->YOLOv8改进 | 如何在网络结构中添加注意力机制、C2f、卷积、Neck、检测头

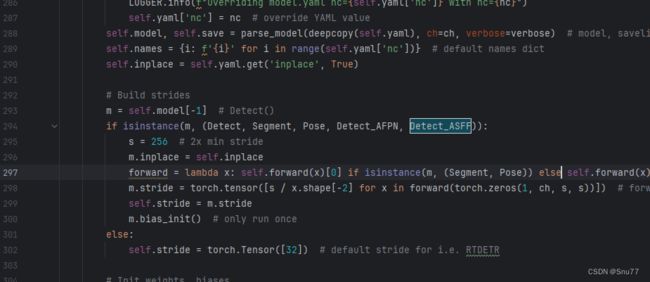

4.1 修改一

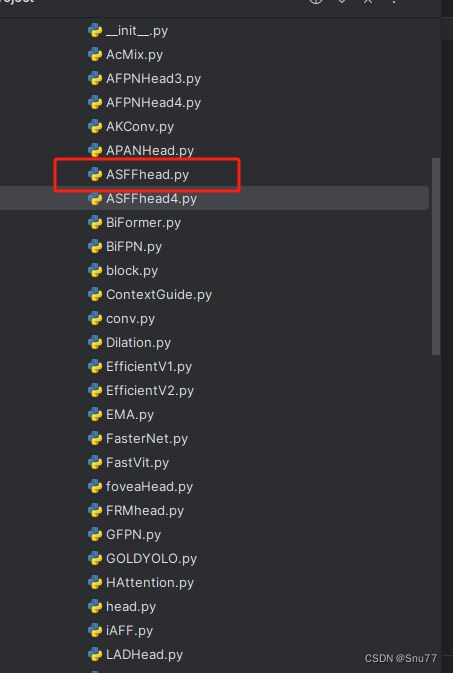

首先我们将上面的代码复制粘贴到'ultralytics/nn/modules' 目录下新建一个py文件复制粘贴进去,具体名字自己来定,我这里起名为ASFFHead.py。

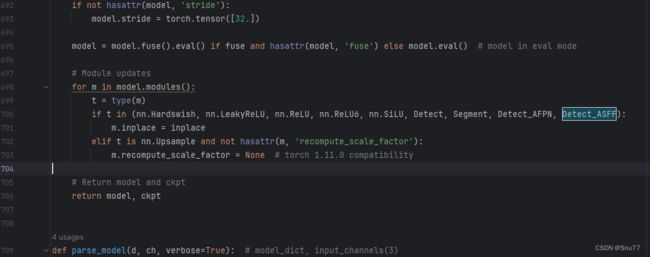

4.2 修改二

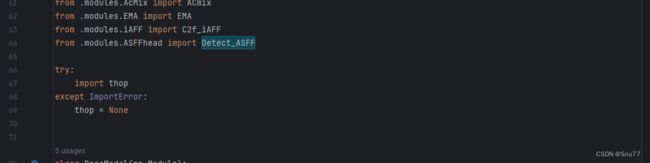

我们新建完上面的文件之后,找到如下的文件'ultralytics/nn/tasks.py'。这里需要修改的地方有点多,总共有7处,但都很简单。首先我们在该文件的头部导入我们ASFFHead文件中的检测头。

4.3 修改三

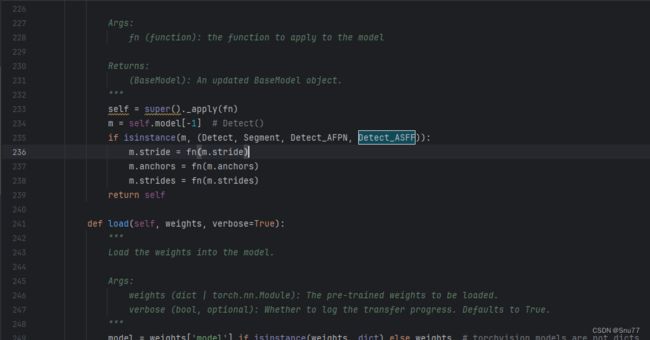

找到如下的代码进行将检测头添加进去,这里给大家推荐个快速搜索的方法用ctrl+f然后搜索Detect然后就能快速查找了。

4.4 修改四

同理将我们的检测头添加到如下的代码里。

4.5 修改五

同理

4.6 修改六

同理

4.7 修改七

同理

4.8 修改八

这里有一些不一样,我们需要加一行代码

else:

return 'detect'为啥呢不一样,因为这里的m在代码执行过程中会将你的代码自动转换为小写,所以直接else方便一点,以后出现一些其它分割或者其它的教程的时候在提供其它的修改教程。

4.9 修改九

这里也有一些不一样,需要自己手动添加一个括号,提醒一下大家不要直接添加,和我下面保持一致。

五、Detect_AFPN检测头的yaml文件

这个代码的yaml文件和正常的对比也需要修改一下,如下->

# Ultralytics YOLO , AGPL-3.0 license

# YOLOv8 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

# Parameters

nc: 80 # number of classes

scales: # model compound scaling constants, i.e. 'model=yolov8n.yaml' will call yolov8.yaml with scale 'n'

# [depth, width, max_channels]

n: [0.33, 0.25, 1024] # YOLOv8n summary: 225 layers, 3157200 parameters, 3157184 gradients, 8.9 GFLOPs

s: [0.33, 0.50, 1024] # YOLOv8s summary: 225 layers, 11166560 parameters, 11166544 gradients, 28.8 GFLOPs

m: [0.67, 0.75, 768] # YOLOv8m summary: 295 layers, 25902640 parameters, 25902624 gradients, 79.3 GFLOPs

l: [1.00, 1.00, 512] # YOLOv8l summary: 365 layers, 43691520 parameters, 43691504 gradients, 165.7 GFLOPs

x: [1.00, 1.25, 512] # YOLOv8x summary: 365 layers, 68229648 parameters, 68229632 gradients, 258.5 GFLOP

# YOLOv8.0n backbone

backbone:

# [from, repeats, module, args]

- [-1, 1, Conv, [64, 3, 2]] # 0-P1/2

- [-1, 1, Conv, [128, 3, 2]] # 1-P2/4

- [-1, 3, C2f, [128, True]]

- [-1, 1, Conv, [256, 3, 2]] # 3-P3/8

- [-1, 6, C2f, [256, True]]

- [-1, 1, Conv, [512, 3, 2]] # 5-P4/16

- [-1, 6, C2f, [512, True]]

- [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32

- [-1, 3, C2f, [1024, True]]

- [-1, 1, SPPF, [1024, 5]] # 9

# YOLOv8.0n head

head:

- [-1, 1, nn.Upsample, [None, 2, 'nearest']]

- [[-1, 6], 1, Concat, [1]] # cat backbone P4

- [-1, 3, C2f, [512]] # 12

- [-1, 1, nn.Upsample, [None, 2, 'nearest']]

- [[-1, 4], 1, Concat, [1]] # cat backbone P3

- [-1, 3, C2f, [256]] # 15 (P3/8-small)

- [-1, 1, Conv, [256, 3, 2]]

- [[-1, 12], 1, Concat, [1]] # cat head P4

- [-1, 3, C2f, [512]] # 18 (P4/16-medium)

- [-1, 1, Conv, [512, 3, 2]]

- [[-1, 9], 1, Concat, [1]] # cat head P5

- [-1, 3, C2f, [1024]] # 21 (P5/32-large)

- [[15, 18, 21], 1, Detect_ASFF, [nc]] # Detect(P3, P4, P5)

六、完美运行记录

最后提供一下完美运行的图片。

七、本文总结

到此本文的正式分享内容就结束了,在这里给大家推荐我的YOLOv8改进有效涨点专栏,本专栏目前为新开的平均质量分98分,后期我会根据各种最新的前沿顶会进行论文复现,也会对一些老的改进机制进行补充,目前本专栏免费阅读(暂时,大家尽早关注不迷路~),如果大家觉得本文帮助到你了,订阅本专栏,关注后续更多的更新~

专栏回顾:YOLOv8改进系列专栏——本专栏持续复习各种顶会内容——科研必备

![]()