flink1.17—关于TopN的测试

关于TopN的测试

flink:1.17.1

官网地址:https://nightlies.apache.org/flink/flink-docs-release-1.17/zh/docs/dev/table/sql/queries/topn/

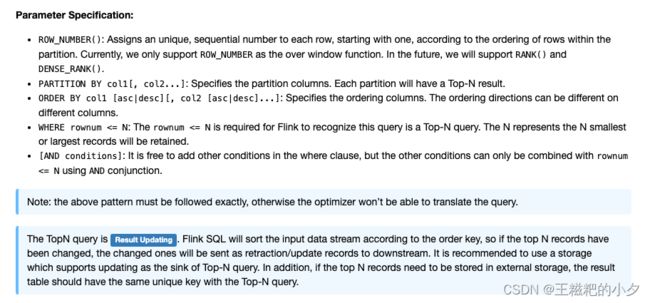

TopN语法:

SELECT [column_list]

FROM (

SELECT [column_list],

ROW_NUMBER() OVER ([PARTITION BY col1[, col2...]]

ORDER BY col1 [asc|desc][, col2 [asc|desc]...]) AS rownum

FROM table_name)

WHERE rownum <= N [AND conditions]

官网中说到:外部存储的唯一键应该与TopN中的唯一键相同。接下来主要测试一下唯一键相同和不同所产生的效果

唯一键相同

1. 首先准备数据

- 在kafak中创建topic,命名为sensor,唯一键为:user_id+room_id+event+row_num。数据格式如下:

{"event":"heartbeat","user_id":"12345","room_id":"456","duration":10,"event_time":"2023-07-23 08:38:05"} event:事件名称, user_id:用户id, room_id:直播间id, duration:时长, event_time:事件时间 - 创建一个mysql的结果表:live_user_rownumber。唯一键键同样为user_id+room_id+event+row_num

CREATE TABLE `live_user_rownumber` (

`user_id` int NOT NULL,

`room_id` int NOT NULL,

`duration` int DEFAULT NULL,

`event_time` varchar(255) DEFAULT NULL,

`event` varchar(100) NOT NULL,

`row_num` int NOT NULL,

`id` int NOT NULL AUTO_INCREMENT,

PRIMARY KEY (`id`),

UNIQUE KEY `live_user_rownumber_UN` (`user_id`,`room_id`,`event`,`row_num`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_0900_ai_ci;

- 在flinksql中创建表:

CREATE TABLE bi_live_user_rownumber (

user_id bigint,

room_id bigint,

duration bigint,

event_time STRING,

event STRING,

row_num bigint,

PRIMARY KEY (user_id,room_id,row_num,event) NOT ENFORCED

) WITH (

'connector' = 'jdbc',

'url' = 'jdbc:mysql://localhost:3306/bi',

'table-name' = 'live_user_rownumber',

'username' = 'root',

'password' = 'xxxxxx'

);

CREATE TABLE sensor_with_watermark (

`event` String,

`user_id` BIGINT,

`room_id` BIGINT,

`duration` BIGINT,

`event_time` String,

event_time_watermark as to_TIMESTAMP (event_time,'yyyy-MM-dd HH:mm:ss'),

WATERMARK FOR event_time_watermark AS event_time_watermark - INTERVAL '5' SECOND,

`partition` BIGINT METADATA VIRTUAL,

`offset` BIGINT METADATA VIRTUAL,

`ts` TIMESTAMP(3) METADATA FROM 'timestamp' )

WITH (

'properties.bootstrap.servers' = 'localhost:9092',

'connector' = 'kafka',

'format' = 'json',

'topic' = 'sensor',

'properties.group.id' =

'testGroup',

'scan.startup.mode' = 'earliest-offset' )

2. 来一段TopN的sql。这个逻辑很简单,就是计算每个直播间每个用户最后一次心跳的时间。

insert into bi_live_user_rownumber

select * from (

select user_id,

room_id,

duration,

event_time,

event,

ROW_NUMBER() over(partition by user_id,room_id order by event_time_watermark desc) as row_num

from sensor_with_watermark

where event = 'heartbeat'

) where row_num<=1

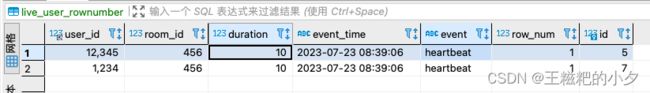

3. 来几条数据看看

第一条

{"event":"heartbeat",

"user_id":"12345",

"room_id":"456",

"duration":10,

"event_time":"2023-07-23 08:38:06"}

{"event":"heartbeat",

"user_id":"12345",

"room_id":"456",

"duration":10,

"event_time":"2023-07-23 08:38:06"}

{"event":"heartbeat",

"user_id":"1234",

"room_id":"456",

"duration":10,

"event_time":"2023-07-23 08:38:06"}

测试到这里一切都正常,这也符合我们预期的结果。

唯一键不相同

1. 将唯一约束去掉user_id,并清空数据,命令如下:

ALTER TABLE bi.live_user_rownumber DROP KEY live_user_rownumber_UN;

ALTER TABLE bi.live_user_rownumber ADD CONSTRAINT live_user_rownumber_UN UNIQUE KEY (room_id,event,row_num);

2. 修改flinksql中定义的mysql表,注意主键的变化

CREATE TABLE bi_live_user_rownumber (

user_id bigint,

room_id bigint,

duration bigint,

event_time STRING,

event STRING,

row_num bigint,

PRIMARY KEY (room_id,row_num,event) NOT ENFORCED

) WITH (

'connector' = 'jdbc',

'url' = 'jdbc:mysql://localhost:3306/bi',

'table-name' = 'live_user_rownumber',

'username' = 'root',

'password' = 'chen1993'

);

3. 执行sql

insert into bi_live_user_rownumber

select * from (

select user_id,

room_id,

duration,

event_time,

event,

ROW_NUMBER() over(partition by user_id,room_id order by event_time_watermark desc) as row_num

from sensor_with_watermark

where event = 'heartbeat'

) where row_num<=1

来几条数据看看

第一条

{"event":"heartbeat",

"user_id":"1234",

"room_id":"456",

"duration":10,

"event_time":"2023-07-23 08:39:06"}

第二条 改了user_id

{"event":"heartbeat",

"user_id":"12345",

"room_id":"456",

"duration":10,

"event_time":"2023-07-23 08:39:06"}

从结果可以看到,原来的user_id=1234的那条数据被更新成了12345。可见结果数据并不是我们想要的。因为在mysql侧,他的唯一约束是room_id+row_num+event,当表bi_live_user_rownumber中指定了PRIMARY KEY时,flink在写入mysql时会使用INSERT … ON DUPLICATE KEY UPDATE … 语法。详见flink官网:https://nightlies.apache.org/flink/flink-docs-release-1.17/zh/docs/connectors/table/jdbc/