3.hadoop HA-QJM 安装

目录

- 概述

- 实践

-

- 一主两从

-

- 解压

- 配置文件

- hadoop-env.sh

- core-site.xml

- hdfs-site.xml

- yarn-site.xml

- mapred-site.xml

- workers

- 分发

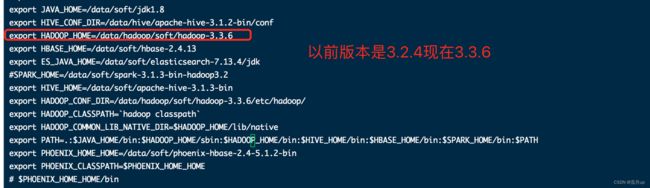

- 环境变量

- 格式化

- 启动 hdfs

- 启动 yarn

-

- 验证

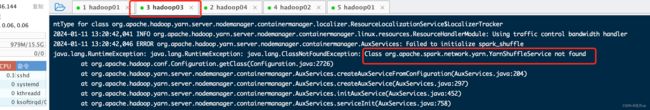

- bug

- zookeeper

- HA

-

- core-site.xml

- hdfs-site.xml

-

- 改为配置分发

- 执行

- 验证 HA

- 结束

概述

环境:hadoop 3.3.6

jdk : 1.8

linux 环境:

[root@hadoop01 soft]# uname -a

Linux hadoop01 3.10.0-693.el7.x86_64 #1 SMP Tue Aug 22 21:09:27 UTC 2017 x86_64 x86_64 x86_64 GNU/Linux

相关文章链接如下:

| 文章名称 | 链接 |

|---|---|

| hadoop安装基础环境安装一 | 地址 |

| hadoop一主三从安装 | 地址 |

官网文档(单节点安装)

官网文档速递

安装需要的包:hadoop-3.3.6.tar.gz zookeeper-3.4.14.tar.gz

HA 各组件机器分配如下:

| hostname | ip | 组件 |

|---|---|---|

| hadoop01 | 10.xx.3x.142 | NN RM |

| hadoop02 | 10.xx.3x.143 | NN |

| hadoop03 | 10.xx.3x.144 | NM DN |

| hadoop04 | 10.xx.3x.145 | NM DN |

注意:原来的这些机器 hadoop01 与其它机器做了免密,jdk 还是原有的配置, 需要做 hadoop02 与其它机器做免密。

免密请参考 hadoop安装基础环境安装一

实践

一主两从

解压

# 解压

[root@hadoop01 soft]# tar -zxvf hadoop-3.3.6.tar.gz

[root@hadoop01 soft]# ls

hadoop-3.3.6 hadoop-3.3.6.tar.gz zookeeper-3.4.14.tar.gz

配置文件

hadoop-env.sh

[root@hadoop01 hadoop]# pwd

/data/hadoop/soft/hadoop-3.3.6/etc/hadoop

[root@hadoop01 hadoop]# vi hadoop-env.sh

export JAVA_HOME=/data/soft/jdk1.8

core-site.xml

# 创建存储 hadoop_repo

mkdir -p /data/hadoop/hadoop_repo

[root@hadoop01 hadoop]# vi core-site.xml

加入下面的配置内容

<property>

<name>fs.defaultFSname>

<value>hdfs://hadoop01:9000value>

property>

<property>

<name>hadoop.tmp.dirname>

<value>/data/hadoop/hadoop_repovalue>

property>

<property>

<name>fs.trash.intervalname>

<value>1440value>

property>

<property>

<name>hadoop.proxyuser.root.hostsname>

<value>*value>

property>

<property>

<name>hadoop.proxyuser.root.groupsname>

<value>*value>

property>

hdfs-site.xml

因为只有两个 DN 所以分片设置为 2

[root@hadoop01 hadoop]# vi hdfs-site.xml

<property>

<name>dfs.replicationname>

<value>2value>

property>

yarn-site.xml

[root@hadoop01 hadoop]# vi yarn-site.xml

yarn-resourcemanager.webapp.address hadoop01:37856 可以定制对外访问地址 8088易容易被挖矿

<property>

<name>yarn.nodemanager.aux-servicesname>

<value>spark_shuffle,mapreduce_shufflevalue>

property>

<property>

<name>yarn.nodemanager.aux-services.spark_shuffle.classname>

<value>org.apache.spark.network.yarn.YarnShuffleServicevalue>

property>

<property>

<name>yarn.nodemanager.env-whitelistname>

<value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOMEvalue>

property>

<property>

<name>yarn.resourcemanager.hostnamename>

<value>hadoop01value>

property>

<property>

<name>yarn.log-aggregation-enablename>

<value>truevalue>

property>

<property>

<name>yarn.log.server.urlname>

<value>http://hadoop01:19888/jobhistory/logs/value>

property>

<property>

<name>yarn.nodemanager.resource.memory-mbname>

<value>10240value>

<description>该节点上YARN可使用的物理内存总量description>

property>

<property>

<name>yarn.nodemanager.resource.cpu-vcoresname>

<value>8value>

<description>表示集群中每个节点可被分配的虚拟CPU个数description>

property>

<property>

<name>yarn.scheduler.minimum-allocation-mbname>

<value>1024value>

<description>每个容器container请求被分配的最小内存description>

property>

<property>

<name>yarn.scheduler.maximum-allocation-mbname>

<value>8096value>

<description>每个容器container请求被分配的最大内存,不能给太大description>

property>

<property>

<name>yarn.scheduler.minimum-allocation-vcoresname>

<value>1value>

<description>每个容器container请求被分配的最少虚拟CPU个数description>

property>

<property>

<name>yarn.scheduler.maximum-allocation-vcoresname>

<value>4value>

<description>每个容器container请求被分配的最多虚拟CPU个数description>

property>

mapred-site.xml

[root@hadoop01 hadoop]# vi mapred-site.xml

<property>

<name>mapreduce.framework.namename>

<value>yarnvalue>

property>

workers

[root@hadoop01 hadoop]# vi workers

[root@hadoop01 hadoop]# cat workers

hadoop03

hadoop04

分发

# 每个节点都要创建

mkdir -p /data/hadoop/soft

scp -rq /data/hadoop/soft/hadoop-3.3.6 hadoop02:/data/hadoop/soft/hadoop-3.3.6

scp -rq /data/hadoop/soft/hadoop-3.3.6 hadoop03:/data/hadoop/soft/hadoop-3.3.6

scp -rq /data/hadoop/soft/hadoop-3.3.6 hadoop04:/data/hadoop/soft/hadoop-3.3.6

分发时,注意检查一下配置是否生效

环境变量

根据自己本机环境变量配置

格式化

[root@hadoop01 bin]# pwd

/data/hadoop/soft/hadoop-3.3.6/bin

./hdfs namenode -format

2024-01-11 11:32:53,304 INFO common.Storage: Storage directory /data/hadoop/hadoop_repo/dfs/name has been successfully formatted.

启动 hdfs

[root@hadoop01 sbin]# pwd

/data/hadoop/soft/hadoop-3.3.6/sbin

# 报错,忘记配置 用户了

[root@hadoop01 sbin]# ./start-dfs.sh

Starting namenodes on [hadoop01]

ERROR: Attempting to operate on hdfs namenode as root

ERROR: but there is no HDFS_NAMENODE_USER defined. Aborting operation.

Starting datanodes

ERROR: Attempting to operate on hdfs datanode as root

ERROR: but there is no HDFS_DATANODE_USER defined. Aborting operation.

Starting secondary namenodes [hadoop01]

ERROR: Attempting to operate on hdfs secondarynamenode as root

ERROR: but there is no HDFS_SECONDARYNAMENODE_USER defined. Aborting operation.

2024-01-11 11:35:01,599 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@hadoop01 sbin]# vi start-dfs.sh

[root@hadoop01 sbin]# vi stop-dfs.sh

[root@hadoop01 sbin]# vi start-yarn.sh

[root@hadoop01 sbin]# vi stop-yarn.sh

# 重新分发

// start-dfs.sh,stop-dfs.sh,start-yarn.sh,stop-yarn.sh 写在最前面

HDFS_ZKFC_USER=root

HDFS_JOURNALNODE_USER=root

export HDFS_NAMENODE_USER=root

export HDFS_DATANODE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root

启动后的结果

[root@hadoop01 sbin]# ./start-dfs.sh

Starting namenodes on [hadoop01]

上一次登录:四 1月 11 13:08:21 CST 2024从 10.35.232.75pts/2 上

/etc/profile: line 62: hadoop: command not found

Starting datanodes

上一次登录:四 1月 11 13:08:54 CST 2024pts/0 上

/etc/profile: line 62: hadoop: command not found

hadoop04: WARNING: /data/hadoop/soft/hadoop-3.3.6/logs does not exist. Creating.

hadoop03: WARNING: /data/hadoop/soft/hadoop-3.3.6/logs does not exist. Creating.

Starting secondary namenodes [hadoop01]

上一次登录:四 1月 11 13:08:56 CST 2024pts/0 上

/etc/profile: line 62: hadoop: command not found

2024-01-11 13:09:03,358 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@hadoop01 sbin]# jps

12433 NameNode

2422 nacos-server.jar

12823 SecondaryNameNode

19911 Jps

[root@hadoop02 ~]# jps

21780 Jps

[root@hadoop02 ~]#

[root@hadoop03 ~]# jps

12853 DataNode

13033 Jps

[root@hadoop04 ~]# jps

5771 DataNode

5951 Jps

启动 yarn

[root@hadoop01 sbin]# ./start-dfs.sh

Starting namenodes on [hadoop01]

上一次登录:四 1月 11 13:39:35 CST 2024从 10.35.232.75pts/2 上

/etc/profile: line 62: hadoop: command not found

Starting datanodes

上一次登录:四 1月 11 13:49:32 CST 2024pts/0 上

/etc/profile: line 62: hadoop: command not found

Starting secondary namenodes [hadoop01]

上一次登录:四 1月 11 13:49:34 CST 2024pts/0 上

/etc/profile: line 62: hadoop: command not found

2024-01-11 13:49:41,008 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@hadoop01 sbin]# ./start-yarn.sh

Starting resourcemanager

上一次登录:四 1月 11 13:49:37 CST 2024pts/0 上

/etc/profile: line 62: hadoop: command not found

Starting nodemanagers

上一次登录:四 1月 11 13:50:01 CST 2024pts/0 上

/etc/profile: line 62: hadoop: command not found

[root@hadoop01 sbin]#

[root@hadoop03 lib]# jps

16288 Jps

16007 NodeManager

15372 DataNode

[root@hadoop04 lib]# jps

9348 DataNode

10343 Jps

9995 NodeManager

http://10.xx.3x.142:9870

http://10.xx.3x.142:8088

验证

[root@hadoop02 ~]# hdfs dfs -mkdir /test

[root@hadoop02 ~]#

bug

hadoop一主三从安装 中就有这个问题

注意:这问题是因为生产上用的是 spark

按步就搬解决。记住重新分发一下。

zookeeper

在此只部署单机,生产上部署集群

[root@hadoop01 soft]# tar -zxvf zookeeper-3.4.14.tar.gz

[root@hadoop01 soft]# ls

hadoop-3.3.6 hadoop-3.3.6.tar.gz zookeeper-3.4.14 zookeeper-3.4.14.tar.gz

[root@hadoop01 zookeeper-3.4.14]# cd conf/

[root@hadoop01 conf]# ls

configuration.xsl log4j.properties zoo_sample.cfg

[root@hadoop01 conf]# mv zoo_sample.cfg zoo.cfg

[root@hadoop01 conf]# vi zoo.cfg

[root@hadoop01 conf]#

dataDir=/data/hadoop/zk_repo

[root@hadoop01 bin]# pwd

/data/hadoop/soft/zookeeper-3.4.14/bin

[root@hadoop01 bin]# ./zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /data/hadoop/soft/zookeeper-3.4.14/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

HA

core-site.xml

<property>

<name>fs.defaultFSname>

<value>hdfs://myclustervalue>

property>

<property>

<name>hadoop.tmp.dirname>

<value>/data/hadoop/hadoop_repovalue>

property>

<property>

<name>fs.trash.intervalname>

<value>1440value>

property>

<property>

<name>hadoop.proxyuser.root.hostsname>

<value>*value>

property>

<property>

<name>hadoop.proxyuser.root.groupsname>

<value>*value>

property>

<property>

<name>ha.zookeeper.quorumname>

<value>hadoop01:2181value>

property>

hdfs-site.xml

注意 : 三个journal节点都需要创建. (mkdir -p 执行启动命令)

<property>

<name>dfs.replicationname>

<value>2value>

property>

<! -- 上面已有 -->

<property>

<name>dfs.nameservicesname>

<value>myclustervalue>

property>

<property>

<name>dfs.ha.namenodes.myclustername>

<value>nn1,nn2value>

property>

<property>

<name>dfs.namenode.rpc-address.mycluster.nn1name>

<value>hadoop01:8020value>

property>

<property>

<name>dfs.namenode.rpc-address.mycluster.nn2name>

<value>hadoop02:8020value>

property>

<property>

<name>dfs.namenode.http-address.mycluster.nn1name>

<value>hadoop01:9870value>

property>

<property>

<name>dfs.namenode.http-address.mycluster.nn2name>

<value>hadoop02:9870value>

property>

<property>

<name>dfs.namenode.shared.edits.dirname>

<value>qjournal://hadoop01:8485;hadoop02:8485;hadoop03:8485/myclustervalue>

property>

<property>

<name>dfs.journalnode.edits.dirname>

<value>/data/hadoop/journalvalue>

property>

<property>

<name>dfs.ha.automatic-failover.enabledname>

<value>truevalue>

property>

<property>

<name>dfs.client.failover.proxy.provider.myclustername>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvidervalue>

property>

<property>

<name>dfs.ha.nn.not-become-active-in-safemodename>

<value>truevalue>

property>

<property>

<name>dfs.ha.fencing.methodsname>

<value>sshfencevalue>

property>

<property>

<name>dfs.ha.fencing.ssh.private-key-filesname>

<value>/root/.ssh/id_rsavalue>

property>

<property>

<name>dfs.ha.fencing.ssh.connect-timeoutname>

<value>30000value>

property>

[root@hadoop01 hadoop]# mkdir journal

[root@hadoop01 hadoop]# cd journal/

[root@hadoop01 journal]# ls

[root@hadoop01 journal]# pwd

/data/hadoop/journal

mkdir -p /data/hadoop/journal

改为配置分发

# 除了修改了的机器,其它的都删除,重新分发

cd /data/hadoop/soft/ && rm -rf hadoop-3.3.6/

scp -rq /data/hadoop/soft/hadoop-3.3.6 hadoop02:/data/hadoop/soft/hadoop-3.3.6

scp -rq /data/hadoop/soft/hadoop-3.3.6 hadoop03:/data/hadoop/soft/hadoop-3.3.6

scp -rq /data/hadoop/soft/hadoop-3.3.6 hadoop04:/data/hadoop/soft/hadoop-3.3.6

cd /data/hadoop/soft/hadoop-3.3.6/etc/hadoop && cat hdfs-site.xml

执行

# 三个 journal 节点依次执行并检查

[root@hadoop01 bin]# pwd

/data/hadoop/soft/hadoop-3.3.6/bin

[root@hadoop01 bin]# ./hdfs --daemon start journalnode

cd /data/hadoop/soft/hadoop-3.3.6/bin && ./hdfs --daemon start journalnode

[root@hadoop03 bin]# jps

10393 Jps

10175 JournalNode

# hadoop01上重新格式化

./hdfs namenode -format

# namenode 启动

cd /data/hadoop/soft/hadoop-3.3.6/bin && ./hdfs --daemon start namenode

[root@hadoop01 bin]# ./hdfs --daemon start namenode

[root@hadoop01 bin]# jps

24352 NameNode

20529 QuorumPeerMain

24515 Jps

2422 nacos-server.jar

32683 JournalNode

# namenode 备用节点上执行

[root@hadoop02 bin]# ./hdfs namenode -bootstrapStandby

2024-01-11 15:08:25,601 INFO common.Storage: Storage directory /data/hadoop/hadoop_repo/dfs/name has been successfully formatted.

# 回到 hadoop01节点上 zkfc -formatZK

[root@hadoop01 bin]# ./hdfs zkfc -formatZK

2024-01-11 15:10:29,614 INFO ha.ActiveStandbyElector: Session connected.

2024-01-11 15:10:29,629 INFO ha.ActiveStandbyElector: Successfully created /hadoop-ha/mycluster in ZK.

## 启动 hdfs

[root@hadoop01 sbin]# ./start-dfs.sh

Starting namenodes on [hadoop01 hadoop02]

上一次登录:四 1月 11 15:38:41 CST 2024pts/0 上

/etc/profile: line 62: hadoop: command not found

hadoop02: namenode is running as process 1949. Stop it first and ensure /tmp/hadoop-root-namenode.pid file is empty before retry.

hadoop01: namenode is running as process 10787. Stop it first and ensure /tmp/hadoop-root-namenode.pid file is empty before retry.

Starting datanodes

上一次登录:四 1月 11 15:45:44 CST 2024pts/0 上

/etc/profile: line 62: hadoop: command not found

Starting journal nodes [hadoop03 hadoop02 hadoop01]

上一次登录:四 1月 11 15:45:44 CST 2024pts/0 上

/etc/profile: line 62: hadoop: command not found

hadoop03: journalnode is running as process 2861. Stop it first and ensure /tmp/hadoop-root-journalnode.pid file is empty before retry.

hadoop01: journalnode is running as process 11453. Stop it first and ensure /tmp/hadoop-root-journalnode.pid file is empty before retry.

hadoop02: journalnode is running as process 2263. Stop it first and ensure /tmp/hadoop-root-journalnode.pid file is empty before retry.

2024-01-11 15:45:48,859 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Starting ZK Failover Controllers on NN hosts [hadoop01 hadoop02]

上一次登录:四 1月 11 15:45:48 CST 2024pts/0 上

/etc/profile: line 62: hadoop: command not found

hadoop02: zkfc is running as process 2518. Stop it first and ensure /tmp/hadoop-root-zkfc.pid file is empty before retry.

hadoop01: zkfc is running as process 11880. Stop it first and ensure /tmp/hadoop-root-zkfc.pid file is empty before retry.

[root@hadoop01 sbin]# jps

20529 QuorumPeerMain

3681 Jps

10787 NameNode

11880 DFSZKFailoverController

11453 JournalNode

[root@hadoop01 sbin]#

[root@hadoop02 hadoop_repo]# jps

2518 DFSZKFailoverController

2263 JournalNode

1949 NameNode

18126 Jps

[root@hadoop03 hadoop]# jps

10263 DataNode

12170 Jps

2861 JournalNode

[root@hadoop04 hadoop]# jps

30245 DataNode

32366 Jps

验证 HA

验证:直接 kill 掉 active 所在节点的 NameNode ,观察

备用节点是否自动转正。

[root@hadoop02 sbin]# jps

11411 DFSZKFailoverController

6342 JournalNode

11896 Jps

10649 NameNode

[root@hadoop02 sbin]# kill 10649

[root@hadoop02 sbin]# jps

11411 DFSZKFailoverController

6342 JournalNode

19782 Jps

结束

hadoop HA-QJM 至此就结束了,如有疑问,欢迎评论区留言。