train_test_split参数解释

创建时间:2021.10.20

在训练时我们时常需要划分数据集,这时常常使用sklearn.model_selection.train_test_split函数

常用方法:

X_train,X_test, y_train, y_test =sklearn.model_selection.train_test_split(train_data,train_target,test_size=0.4, random_state=0,stratify=y_train)

# train_data:所要划分的样本特征集

官网对该函数的说明是:

sklearn.model_selection.train_test_split(*arrays, test_size=None, train_size=None, random_state=None, shuffle=True, stratify=None)

用途

Split arrays or matrices into random train and test subsets

Quick utility that wraps input validation and next(ShuffleSplit().split(X, y)) and application to input data into a single call for splitting (and optionally subsampling) data in a oneliner.

(翻译)将数组或矩阵拆分为随机训练和测试子集 包含输入验证和 next(ShuffleSplit().split(X, y)) 的快速实用程序以及将数据输入到单个调用中的应用程序,以便在单行中拆分(和可选的子采样)数据 .

理解:

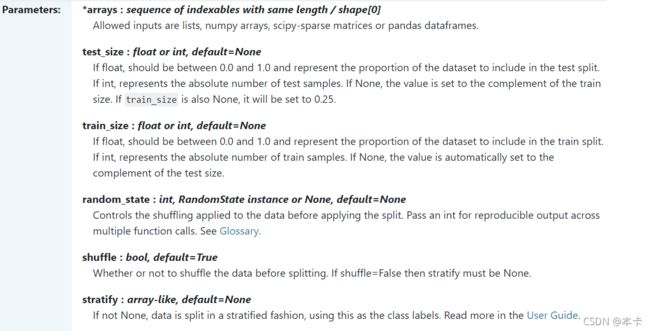

arrays:可以是列表、numpy数组、scipy稀疏矩阵或pandas的数据框

test_size:可以为浮点、整数或None,默认为None

①若为浮点时,表示测试集占总样本的百分比

②若为整数时,表示测试样本样本数

③若为None时,test size自动设置成0.25

train_size:可以为浮点、整数或None,默认为None

①若为浮点时,表示训练集占总样本的百分比

②若为整数时,表示训练样本的样本数

③若为None时,train_size自动被设置成0.75

random_state:可以为整数、RandomState实例或None,默认为None

①若为None时,每次生成的数据都是随机,可能不一样

②若为整数时,每次生成的数据都相同

stratify:可以为类似数组或None

①若为None时,划分出来的测试集或训练集中,其类标签的比例也是随机的

②若不为None时,划分出来的测试集或训练集中,其类标签的比例同输入的数组中类标签的比例相同,可以用于处理不均衡的数据集

官网案例:

>>> import numpy as np

>>> from sklearn.model_selection import train_test_split

>>> X, y = np.arange(10).reshape((5, 2)), range(5)

>>> X

array([[0, 1],

[2, 3],

[4, 5],

[6, 7],

[8, 9]])

>>> list(y)

[0, 1, 2, 3, 4]

>>> X_train, X_test, y_train, y_test = train_test_split(

... X, y, test_size=0.33, random_state=42)

...

>>> X_train

array([[4, 5],

[0, 1],

[6, 7]])

>>> y_train

[2, 0, 3]

>>> X_test

array([[2, 3],

[8, 9]])

>>> y_test

[1, 4]

>>> train_test_split(y, shuffle=False)

[[0, 1, 2], [3, 4]]