Keepalived + LVS Cluster

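Introduction

Keepalived runs on top of LVS and provides hot-standby high availability (HA). Its main job is to isolate failed real servers and to fail over between load balancers, which improves the availability of the system. An LVS service typically runs Keepalived on two servers, one as the primary (MASTER) and one as the standby (BACKUP), but the pair appears to the outside as a single virtual IP. The MASTER periodically sends advertisement messages to the BACKUP. When the BACKUP stops receiving them, which it treats as the MASTER being down, it takes over the virtual IP and keeps serving traffic, and this is how high availability is maintained.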

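Purpose

Keepalived provides a solid high-availability safeguard. It checks the state of the servers; if one fails, Keepalived removes it from the pool and lets a standby server take over its work. Once the failed server is healthy again, Keepalived adds it back to the pool. Keepalived does all of this automatically, with no manual intervention; we only need to repair the failed server.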

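How It Works

Understanding It Through the VRRP Protocol

Keepalived is built on VRRP, the Virtual Router Redundancy Protocol.

VRRP can be seen as a protocol for making routers highly available. N routers that provide the same function form a router group with one master and several backups. The master holds a VIP (Virtual IP Address) that serves the outside, and the other machines on the router's LAN use this VIP as their default route. The master sends VRRP advertisements by multicast; when the backups stop receiving them, they consider the master down, and one backup is elected master according to VRRP priority. This keeps the router highly available.

keepalived has three main modules: core, check and vrrp. The core module is the heart of keepalived; it starts and maintains the main process and loads and parses the global configuration file. The check module performs health checks and supports the common check methods. The vrrp module implements the VRRP protocol.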

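Understanding It Through the TCP/IP Stack

Taking a web server as an example, Keepalived checks server health at three layers.

Layer 3, Layer 4 and Layer 7 correspond to the IP layer, the TCP layer and the application layer of the TCP/IP stack. They work as follows: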

Layer3:

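In Layer 3 mode, Keepalived periodically sends an ICMP packet (the same thing the everyday ping program does) to each server in the pool. If a server's IP address does not respond, Keepalived reports that server as failed and removes it from the pool. A typical case is a server that was shut down unexpectedly. Layer 3 mode uses the reachability of a server's IP address as the criterion for whether it is working.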

Layer4:

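Once Layer 3 is clear, Layer 4 is easy. Layer 4 mode mainly uses the state of a TCP port to decide whether a server is working. A web server usually listens on port 80; if Keepalived finds that port 80 is not open, it removes that server from the pool.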

Layer7:

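Layer 7 works at the application layer. It is somewhat more complex than Layer 3 and Layer 4 and uses a little more network bandwidth. Keepalived checks whether the server application is running correctly according to the user's settings; if the result does not match them, Keepalived removes the server from the pool.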
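The three check levels can be imitated by hand with ordinary tools. The sketch below is only an illustration: the function names and timeouts are made up here, and keepalived performs these checks internally rather than calling anything like this.

```shell
#!/bin/bash
# Hypothetical helpers, not part of keepalived; they only mimic the three check levels.

# Layer 3: does the host answer one ICMP echo request within 1 second?
l3_check() { ping -c 1 -W 1 "$1" >/dev/null 2>&1; }

# Layer 4: does a TCP connect to HOST PORT succeed within 2 seconds?
# Uses bash's built-in /dev/tcp redirection.
l4_check() { timeout 2 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null; }

# Layer 7: does the application answer HTTP with a status below 400?
l7_check() { curl -fsS -o /dev/null --max-time 3 "http://$1:$2/"; }

# Example (not executed here):
#   l3_check 192.168.169.208 && l4_check 192.168.169.208 80 && l7_check 192.168.169.208 80
```

Each function returns 0 when the check passes, so the calls can be chained with `&&` from the cheapest check to the most expensive one.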

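keepalived elects one of the hot-standby servers as the MASTER according to the priority configured on each server. The MASTER is assigned a designated virtual IP, through which outside programs reach it. If this server fails (network loss, reboot, a crash of keepalived on the machine, and so on), keepalived elects a new MASTER from the remaining backups, again by configured priority, and assigns it the same virtual IP so that it takes over the previous MASTER's role.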

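Election Policy

Election follows the VRRP protocol and is decided purely by priority: the node with the highest priority (range 0~255) becomes the MASTER. An election is triggered when:

- keepalived starts

- the master server fails (network loss, reboot, a crash of keepalived on the machine, and so on; crashes of other applications on the machine do not count)

- a new backup server joins and has the highest priority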

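The weight rules are as follows:

When weight is positive, weight is added to priority while the check script succeeds, and nothing is added while it fails.

- Master check fails: a switchover happens when master priority < backup priority + weight.

- Master check succeeds: when master priority + weight > backup priority + weight, the master stays master and no switchover happens.

When weight is negative, a successful check leaves priority unchanged, and a failed check lowers the node's effective priority to priority - abs(weight).

- Master check fails: a switchover happens when master priority - abs(weight) < backup priority.

- Master check succeeds: no switchover while master priority > backup priority.

When two nodes have the same priority, the IP addresses from which they send VRRP advertisements are compared, and the node with the larger IP becomes MASTER.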
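The rules above reduce to a small piece of arithmetic. The following is a minimal sketch of it; `effective_priority` is a hypothetical helper written for this note, not a keepalived command.

```shell
#!/bin/bash
# effective_priority BASE WEIGHT RESULT
# RESULT is "ok" when the tracked script succeeded, anything else when it failed.
effective_priority() {
    local base=$1 weight=$2 result=$3
    if [ "$weight" -gt 0 ] && [ "$result" = "ok" ]; then
        echo $((base + weight))      # positive weight: bonus while the check succeeds
    elif [ "$weight" -lt 0 ] && [ "$result" != "ok" ]; then
        echo $((base + weight))      # negative weight: penalty while the check fails
    else
        echo "$base"                 # otherwise the configured priority is used as is
    fi
}

effective_priority 100 10 ok     # prints 110
effective_priority 100 10 fail   # prints 100
effective_priority 100 -20 fail  # prints 80
```

Comparing the values printed for the master and the backup shows which node would win an election under a given script result.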

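Setting priority and weight

The initial priority of each node and the adjustment value weight are critical; a wrong setting makes failover impossible. For example, if the MASTER's initial priority is set too high, it stays above the BACKUP's priority + weight even when the check script fails, and the VIP can never move.

So priority and weight should satisfy: abs(MASTER priority - BACKUP priority) < abs(weight). In general, the MASTER's initial priority should be higher than the BACKUP's, but the difference must stay below the absolute value of weight.

In addition, when the network does not support multicast (as in some cloud environments) or a network partition occurs, the BACKUP nodes cannot receive the MASTER's VRRP advertisements and split brain occurs: the cluster then has several MASTER nodes at once.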
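The inequality is easy to check before deploying a pair of configurations. A minimal sketch follows; the helper name `priority_gap_ok` is an assumption of this note.

```shell
#!/bin/bash
# priority_gap_ok MASTER_PRIORITY BACKUP_PRIORITY WEIGHT
# Returns 0 when abs(MASTER - BACKUP) < abs(WEIGHT), non-zero otherwise.
priority_gap_ok() {
    local gap=$(( $1 - $2 )) w=$3
    [ "$gap" -lt 0 ] && gap=$(( -gap ))   # absolute value of the priority gap
    [ "$w" -lt 0 ] && w=$(( -w ))         # absolute value of the weight
    [ "$gap" -lt "$w" ]
}

priority_gap_ok 100 90 20 && echo "weight 20: gap is small enough"
priority_gap_ok 100 90 10 || echo "weight 10: gap equals the weight, rule not met"
```

With priorities 100 and 90, a weight of 10 only produces a tie when the master's check fails, so the outcome then depends on the IP comparison rather than on the weight.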

Base Environment

[root@master ~]# systemctl disable firewalld --now && setenforce 0

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@master ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

[root@master ~]# mv /etc/yum.repos.d/CentOS-* /tmp/

[root@master ~]# curl -o /etc/yum.repos.d/centos.repo http://mirrors.aliyun.com/repo/Centos-7.repo

[root@master ~]# curl -o /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

Install keepalived

[root@master ~]# yum install -y keepalived

[root@master ~]# yum install -y nginx

Modify the master configuration

[root@master ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

# Globally unique host identifier

router_id master

}

vrrp_script check_nginx {

script "/usr/local/src/check_nginx.sh" # script that checks the nginx state

interval 3 # run the shell script every 3 seconds

weight 10 # add 10 to the priority while the script succeeds

}

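vrrp_instance VI_1 { # VRRP instance; VI_1 is the instance name

# Whether this node is the primary or the standby: MASTER or BACKUP

state MASTER

# Network interface to bind; VRRP advertisements are sent over it (multicast by default)

interface ens33

# Virtual router ID; must be the same on master and backup

virtual_router_id 51

# Priority; the master's must be higher than the backup's

priority 100

# Advertisement interval between master and backup, in seconds (default 1)

advert_int 1

# Authentication; must be identical on all master and backup nodes

authentication {

# Authentication type

auth_type PASS

# Password string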

auth_pass 1111

}

# track_script lists the vrrp_script checks to monitor; keepalived runs them periodically and adjusts the priority according to the result

track_script {

check_nginx

}

# Virtual IP (VIP), also called the floating IP

virtual_ipaddress {

192.168.169.100

}

}

Create the check script

[root@master ~]# cat /usr/local/src/check_nginx.sh

#!/bin/bash

# Current timestamp

d=$(date +%Y%m%d%H:%M:%S)

# Count the nginx processes

n1=$(ps -C nginx --no-headers | wc -l)

# If no nginx process is running, start nginx and check again

if [ "$n1" -eq 0 ]; then

systemctl start nginx

sleep 3 # wait for nginx to start

n2=$(ps -C nginx --no-headers | wc -l)

# Still no nginx process: nginx cannot be started, so stop keepalived to release the VIP

if [ "$n2" -eq 0 ]; then

echo "$d Nginx is down, keepalived will stop." >> /var/log/check_nginx.log

systemctl stop keepalived

fi

fi
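The script above forces failover by stopping keepalived. keepalived also reads the exit status of a vrrp_script (0 means success), so a different approach is to let the script only report health and leave the failover to weight. The sketch below shows that alternative, not the method used in this walkthrough; the function names are hypothetical, and it relies on priority and weight satisfying the rule given earlier.

```shell
#!/bin/bash
# Hypothetical alternative check: report nginx health through the exit status only.

# Split out so the decision logic can be exercised without a real nginx.
count_nginx() { ps -C nginx --no-headers | wc -l; }

# Returns 0 (success) when at least one nginx process exists, 1 otherwise.
nginx_alive() {
    [ "$(count_nginx)" -gt 0 ]
}

# When saved as a vrrp_script, end the file with a bare call so that
# the script's exit status is the result of the check:
#   nginx_alive
```

With this style keepalived keeps running on the failed node, so the node can reclaim the VIP by itself once nginx is healthy again.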

### Set permissions

[root@master ~]# chmod a+x /usr/local/src/check_nginx.sh

[root@master ~]# touch /var/log/check_nginx.log

[root@master ~]# systemctl restart keepalived

Start the services

[root@master ~]# systemctl enable keepalived --now

Created symlink from /etc/systemd/system/multi-user.target.wants/keepalived.service to /usr/lib/systemd/system/keepalived.service.

[root@master ~]# systemctl status keepalived

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2023-12-20 03:42:27 CST; 9s ago

Process: 9405 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 9406 (keepalived)

CGroup: /system.slice/keepalived.service

├─9406 /usr/sbin/keepalived -D

├─9407 /usr/sbin/keepalived -D

└─9408 /usr/sbin/keepalived -D

Dec 20 03:42:27 master Keepalived[9406]: Starting Healthcheck child process, pid=9407

Dec 20 03:42:27 master systemd[1]: Started LVS and VRRP High Availability Monitor.

Dec 20 03:42:27 master Keepalived[9406]: Starting VRRP child process, pid=9408

Dec 20 03:42:27 master Keepalived_healthcheckers[9407]: Initializing ipvs

Dec 20 03:42:27 master Keepalived_vrrp[9408]: Registering Kernel netlink reflector

Dec 20 03:42:27 master Keepalived_vrrp[9408]: Registering Kernel netlink command channel

Dec 20 03:42:27 master Keepalived_vrrp[9408]: Registering gratuitous ARP shared channel

Dec 20 03:42:27 master Keepalived_vrrp[9408]: Opening file '/etc/keepalived/keepalived.conf'.

Dec 20 03:42:27 master Keepalived_vrrp[9408]: Using LinkWatch kernel netlink reflector...

Dec 20 03:42:27 master Keepalived_healthcheckers[9407]: Opening file '/etc/keepalived/keepalived.conf'.

[root@master ~]# systemctl enable nginx --now

Created symlink from /etc/systemd/system/multi-user.target.wants/nginx.service to /usr/lib/systemd/system/nginx.service.

[root@master ~]# systemctl status nginx

● nginx.service - The nginx HTTP and reverse proxy server

Loaded: loaded (/usr/lib/systemd/system/nginx.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2023-12-20 03:43:05 CST; 7s ago

Process: 9443 ExecStart=/usr/sbin/nginx (code=exited, status=0/SUCCESS)

Process: 9439 ExecStartPre=/usr/sbin/nginx -t (code=exited, status=0/SUCCESS)

Process: 9437 ExecStartPre=/usr/bin/rm -f /run/nginx.pid (code=exited, status=0/SUCCESS)

Main PID: 9445 (nginx)

CGroup: /system.slice/nginx.service

├─9445 nginx: master process /usr/sbin/nginx

├─9446 nginx: worker process

└─9447 nginx: worker process

Dec 20 03:43:05 master systemd[1]: Starting The nginx HTTP and reverse proxy server...

Dec 20 03:43:05 master nginx[9439]: nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

Dec 20 03:43:05 master nginx[9439]: nginx: configuration file /etc/nginx/nginx.conf test is successful

Dec 20 03:43:05 master systemd[1]: Started The nginx HTTP and reverse proxy server.

Check the processes

[root@master ~]# ps -aux | grep keepalived

root 9406 0.0 0.0 123012 1404 ? Ss 03:42 0:00 /usr/sbin/keepalived -D

root 9407 0.0 0.0 133980 3316 ? S 03:42 0:00 /usr/sbin/keepalived -D

root 9408 0.0 0.0 133852 2616 ? S 03:42 0:00 /usr/sbin/keepalived -D

root 9452 0.0 0.0 112808 964 pts/0 R+ 03:43 0:00 grep --color=auto keepalived

[root@master ~]# ps -aux | grep nginx

root 9445 0.0 0.0 39308 936 ? Ss 03:43 0:00 nginx: master process /usr/sbin/nginx

nginx 9446 0.0 0.0 39696 1820 ? S 03:43 0:00 nginx: worker process

nginx 9447 0.0 0.0 39696 1560 ? S 03:43 0:00 nginx: worker process

root 9450 0.0 0.0 112808 968 pts/0 S+ 03:43 0:00 grep --color=auto nginx

Test

[root@master ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:64:6a:65 brd ff:ff:ff:ff:ff:ff

inet 192.168.169.208/24 brd 192.168.169.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.169.100/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::3dc3:3652:c74b:c16e/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::969a:362b:d697:3ad6/64 scope link noprefixroute

valid_lft forever preferred_lft forever

Test the log

[root@master ~]# cat /usr/local/src/check_nginx.sh

#!/bin/bash

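# Current timestamp

d=$(date +%Y%m%d%H:%M:%S)

# Count the nginx processes

n=$(ps -C nginx --no-headers | wc -l)

# If no nginx process is running, start nginx

if [ "$n" -eq 0 ]; then

systemctl start nginx

sleep 3 # wait for nginx to start

fi

# Kill all nginx processes to simulate an nginx that cannot be started

pkill -9 nginx

sleep 1 # give the killed processes time to disappear

# Count again after the kill

n=$(ps -C nginx --no-headers | wc -l)

# Still no nginx process: log the failure and stop keepalived

if [ "$n" -eq 0 ]; then

echo "$d Nginx is down, keepalived will stop." >> /var/log/check_nginx.log

systemctl stop keepalived

fi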

### View the log

[root@master ~]# cat /var/log/check_nginx.log

2023122100:35:53 Nginx is down, keepalived will stop.

Modify the backup configuration

[root@slave ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

# Globally unique host identifier

#router_id slave

}

vrrp_script check_nginx {

script "/usr/local/src/check_nginx.sh"

interval 3 # run the shell script every 3 seconds

weight 10 # add 10 to the priority while the script succeeds

}

vrrp_instance VI_1 {

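# Whether this node is the primary or the standby: MASTER or BACKUP

state BACKUP

# Network interface to bind

interface ens33

# Virtual router ID; must be the same on master and backup

virtual_router_id 51

# Priority

priority 90

# Advertisement interval, in seconds (default 1)

advert_int 1

# Authentication; must be identical on all master and backup nodes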

authentication {

auth_type PASS

auth_pass 1111

}

track_script {

check_nginx

}

# Virtual IP

virtual_ipaddress {

192.168.169.100

}

}

Start the services

[root@slave ~]# systemctl enable keepalived --now

Created symlink from /etc/systemd/system/multi-user.target.wants/keepalived.service to /usr/lib/systemd/system/keepalived.service.

[root@slave ~]# systemctl enable nginx --now

Created symlink from /etc/systemd/system/multi-user.target.wants/nginx.service to /usr/lib/systemd/system/nginx.service.

[root@backup ~]# systemctl restart keepalived

Test high availability

Compare the nginx responses

[root@master ~]# curl 192.168.169.208 -I

HTTP/1.1 200 OK

Server: nginx/1.20.1

Date: Tue, 19 Dec 2023 21:40:20 GMT

Content-Type: text/html

Content-Length: 4833

Last-Modified: Fri, 16 May 2014 15:12:48 GMT

Connection: keep-alive

ETag: "53762af0-12e1"

Accept-Ranges: bytes

[root@master ~]# curl 192.168.169.209 -I

HTTP/1.1 200 OK

Server: nginx/1.20.1

Date: Tue, 19 Dec 2023 21:40:23 GMT

Content-Type: text/html

Content-Length: 4833

Last-Modified: Fri, 16 May 2014 15:12:48 GMT

Connection: keep-alive

ETag: "53762af0-12e1"

Accept-Ranges: bytes

[root@master ~]# curl 192.168.169.100 -I

HTTP/1.1 200 OK

Server: nginx/1.20.1

Date: Tue, 19 Dec 2023 21:40:26 GMT

Content-Type: text/html

Content-Length: 4833

Last-Modified: Fri, 16 May 2014 15:12:48 GMT

Connection: keep-alive

ETag: "53762af0-12e1"

Accept-Ranges: bytes

Test that the script restarts nginx automatically

[root@master ~]# systemctl restart keepalived

[root@master ~]# systemctl stop nginx

[root@master ~]# systemctl status nginx

● nginx.service - The nginx HTTP and reverse proxy server

Loaded: loaded (/usr/lib/systemd/system/nginx.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2023-12-20 05:03:14 CST; 7s ago

Process: 18223 ExecStart=/usr/sbin/nginx (code=exited, status=0/SUCCESS)

Process: 18222 ExecStartPre=/usr/sbin/nginx -t (code=exited, status=0/SUCCESS)

Process: 18219 ExecStartPre=/usr/bin/rm -f /run/nginx.pid (code=exited, status=0/SUCCESS)

Main PID: 18225 (nginx)

CGroup: /system.slice/nginx.service

├─18225 nginx: master process /usr/sbin/nginx

├─18226 nginx: worker process

└─18227 nginx: worker process

Dec 20 05:03:14 master systemd[1]: Starting The nginx HTTP and reverse proxy server...

Dec 20 05:03:14 master nginx[18222]: nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

Dec 20 05:03:14 master nginx[18222]: nginx: configuration file /etc/nginx/nginx.conf test is successful

Dec 20 05:03:14 master systemd[1]: Started The nginx HTTP and reverse proxy server.

[root@slave ~]# systemctl restart keepalived

[root@slave ~]# systemctl stop nginx

[root@slave ~]# systemctl status nginx

● nginx.service - The nginx HTTP and reverse proxy server

Loaded: loaded (/usr/lib/systemd/system/nginx.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2023-12-20 05:39:21 CST; 784ms ago

Process: 13833 ExecStart=/usr/sbin/nginx (code=exited, status=0/SUCCESS)

Process: 13830 ExecStartPre=/usr/sbin/nginx -t (code=exited, status=0/SUCCESS)

Process: 13828 ExecStartPre=/usr/bin/rm -f /run/nginx.pid (code=exited, status=0/SUCCESS)

Main PID: 13835 (nginx)

CGroup: /system.slice/nginx.service

├─13835 nginx: master process /usr/sbin/nginx

├─13836 nginx: worker process

└─13837 nginx: worker process

Dec 20 05:39:21 slave systemd[1]: Starting The nginx HTTP and reverse proxy server...

Dec 20 05:39:21 slave nginx[13830]: nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

Dec 20 05:39:21 slave nginx[13830]: nginx: configuration file /etc/nginx/nginx.conf test is successful

Dec 20 05:39:21 slave systemd[1]: Started The nginx HTTP and reverse proxy server.

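Add an iptables rule on master

- The backup brings up the virtual IP. The master has VRRP blocked but does not consider itself down, so it does not release the virtual IP.

- With the virtual IP bound on both master and backup, traffic to the service becomes inconsistent. This is known as split brain and must never be allowed to happen.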

[root@master ~]# iptables -I OUTPUT -p vrrp -j DROP

[root@master ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:64:6a:65 brd ff:ff:ff:ff:ff:ff

inet 192.168.169.208/24 brd 192.168.169.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.169.100/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::3dc3:3652:c74b:c16e/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::969a:362b:d697:3ad6/64 scope link noprefixroute

valid_lft forever preferred_lft forever

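The parameters of this iptables command are:

- iptables is the Linux tool for configuring firewall rules.

- -I OUTPUT inserts a new rule at the head of the OUTPUT chain. The OUTPUT chain handles packets that originate from the local machine, so the rule filters outgoing packets.

- -p vrrp applies the rule to VRRP packets. The -p option takes a protocol, such as tcp, udp or icmp.

- -j DROP drops every packet that matches the preceding conditions, so it is never sent.

Taken together, the command means: drop every VRRP packet that this machine tries to send. The master's advertisements therefore never reach the backup, which is how this test simulates split brain.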

[root@slave ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:01:64:33 brd ff:ff:ff:ff:ff:ff

inet 192.168.169.209/24 brd 192.168.169.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.169.100/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::3dc3:3652:c74b:c16e/64 scope link noprefixroute

valid_lft forever preferred_lft forever
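With the rule in place, 192.168.169.100 shows up in the `ip a` output of both nodes. Checking that by eye is error-prone, so a small filter can help. This is a sketch; `has_vip` is a hypothetical helper that reads `ip` output on standard input.

```shell
#!/bin/bash
# has_vip VIP
# Reads `ip a` or `ip -o -4 addr show` output on stdin and returns 0
# when an "inet VIP/prefix" entry is present, non-zero otherwise.
has_vip() {
    local vip_re=${1//./\\.}            # escape the dots for use in a regex
    grep -Eq "inet ${vip_re}/[0-9]+ "
}

# Example (run on each node):
#   ip -o -4 addr show dev ens33 | has_vip 192.168.169.100 && echo "VIP is bound here"
```

If the example prints its message on both master and backup at the same time, the pair is in split brain.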

Flush the rules

[root@master ~]# iptables -F

### master

[root@master ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:64:6a:65 brd ff:ff:ff:ff:ff:ff

inet 192.168.169.208/24 brd 192.168.169.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.169.100/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::3dc3:3652:c74b:c16e/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::969a:362b:d697:3ad6/64 scope link noprefixroute

valid_lft forever preferred_lft forever

### backup

[root@slave ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:01:64:33 brd ff:ff:ff:ff:ff:ff

inet 192.168.169.209/24 brd 192.168.169.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::3dc3:3652:c74b:c16e/64 scope link noprefixroute

valid_lft forever preferred_lft forever

Stop keepalived on master

[root@master ~]# systemctl stop keepalived

### The virtual IP disappears from master

[root@master ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:64:6a:65 brd ff:ff:ff:ff:ff:ff

inet 192.168.169.208/24 brd 192.168.169.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::3dc3:3652:c74b:c16e/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::969a:362b:d697:3ad6/64 scope link noprefixroute

valid_lft forever preferred_lft forever

### The virtual IP moves to backup

[root@slave ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:01:64:33 brd ff:ff:ff:ff:ff:ff

inet 192.168.169.209/24 brd 192.168.169.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.169.100/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::3dc3:3652:c74b:c16e/64 scope link noprefixroute

valid_lft forever preferred_lft forever

Start keepalived on master

[root@master ~]# systemctl start keepalived

### The virtual IP returns to master

[root@master ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:64:6a:65 brd ff:ff:ff:ff:ff:ff

inet 192.168.169.208/24 brd 192.168.169.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.169.100/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::3dc3:3652:c74b:c16e/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::969a:362b:d697:3ad6/64 scope link noprefixroute

valid_lft forever preferred_lft forever

### The virtual IP disappears from backup

[root@slave ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:01:64:33 brd ff:ff:ff:ff:ff:ff

inet 192.168.169.209/24 brd 192.168.169.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::3dc3:3652:c74b:c16e/64 scope link noprefixroute

valid_lft forever preferred_lft forever

Time synchronization:

Install the ntp service on the master host

yum -y install ntp

systemctl start ntpd # master

systemctl enable ntpd # master

ntpdate 192.168.1.5 # backup

Install the software:

yum install -y nginx keepalived # master and backup

echo 'this is master' > /usr/share/nginx/html/index.html # master node

echo 'this is slave' > /usr/share/nginx/html/index.html # backup node

On the master node:

cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak

vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

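global_defs { # global configuration block

script_user root # run scripts as root

enable_script_security # do not run scripts as root if their path is writable by a non-root user (a build from source does not need the script user defined)

router_id LVS_ZX # machine identifier, used in email notifications

vrrp_skip_check_adv_addr

# vrrp_strict ## Strict VRRP compliance. It forbids: 1. no VIP address 2. unicast peers 3. IPv6 addresses in VRRP version 2. Enabling it also adds iptables firewall rules automatically, so it is best left disabled

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_script chk_nginx { # VRRP script declaration

script "/etc/keepalived/nginx_pid.sh" # script to run periodically

interval 2 # interval between script runs, in seconds

weight 2 # weight; priority minus this value must be lower than the backup's priority

fall 3 # number of failed checks before the script counts as failed (integer)

rise 2 # number of successful checks before the script counts as healthy (integer)

}

vrrp_instance VI_1 { # VRRP instance definition; VI_1 is a user-chosen name

state MASTER # keepalived role, must be upper case; one of MASTER|BACKUP

interface eno16777736 # network interface

virtual_router_id 51 # virtual router ID, a number; unique per VRRP instance, and MASTER and BACKUP must use the same value

priority 100 # priority; the larger the number, the higher the priority

advert_int 1 # interval in seconds between the MASTER's advertisements to the BACKUP; must be the same on both nodes

authentication { # authentication type and password; must match on both nodes

auth_type PASS

auth_pass 1111

}

virtual_ipaddress { # virtual IP addresses; several may be listed, one per line

192.168.1.10

}

track_script { # scripts to monitor

chk_nginx

}

}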

vim /etc/keepalived/nginx_pid.sh

#!/bin/bash

#version 1

A=`ps -C nginx --no-header |wc -l`

if [ $A -eq 0 ];then

systemctl start nginx

sleep 3

if [ `ps -C nginx --no-header |wc -l` -eq 0 ];then

systemctl stop keepalived

fi

fi

chmod 755 /etc/keepalived/nginx_pid.sh

Start keepalived: systemctl start keepalived

Check its status: systemctl status keepalived

Check that the virtual IP is present: run ip add

Run curl 192.168.1.10 and check the result

On the backup node:

cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak

vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

script_user root

router_id LVS_ZX

vrrp_skip_check_adv_addr

# vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_script chk_nginx {

script "/etc/keepalived/nginx_pid.sh"

interval 2

weight 2

fall 3

rise 2

}

vrrp_instance VI_1 {

state BACKUP

interface eno16777736

virtual_router_id 51

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

track_script {

chk_nginx

}

virtual_ipaddress {

192.168.1.10

}

}

vim /etc/keepalived/nginx_pid.sh

#!/bin/bash

#version 1

A=`ps -C nginx --no-header |wc -l`

if [ $A -eq 0 ];then

systemctl start nginx

sleep 3

if [ `ps -C nginx --no-header |wc -l` -eq 0 ];then

systemctl stop keepalived

fi

fi

chmod 755 /etc/keepalived/nginx_pid.sh

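Start keepalived: systemctl start keepalived

Check its status: systemctl status keepalived

Verification:

Kill keepalived on the master node and check the floating IP; restore the master node and check the floating IP again

Food for thought: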
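Because the two test pages differ ('this is master' and 'this is slave'), the failover can also be watched from a client by polling the VIP. A minimal sketch; both function names are made up for this note, and the one-second interval is arbitrary.

```shell
#!/bin/bash
# which_node BODY: map the test pages created earlier to a node name.
which_node() {
    case "$1" in
        *"this is master"*) echo master ;;
        *"this is slave"*)  echo backup ;;
        *)                  echo unknown ;;
    esac
}

# watch_vip VIP [COUNT]: poll the VIP once per second and print which node answers.
watch_vip() {
    local vip=$1 count=${2:-10} i body
    for ((i = 0; i < count; i++)); do
        body=$(curl -s --max-time 1 "http://$vip/" || true)
        echo "$(date +%T) $(which_node "$body")"
        sleep 1
    done
}

# Example (not executed here): watch_vip 192.168.1.10 30
```

Run the example in one terminal while stopping and restarting keepalived on the master in another; the output should switch from master to backup and back.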

upstream test {

server 192.168.1.5:8081;

server 192.168.1.5:8080;

}

server {

listen 8080;

server_name test;

location / {

proxy_pass http://test;

}

}

Keepalived master/backup + LVS

High-availability configuration

Install the service

[root@master ~]# yum install -y keepalived

Modify the master configuration

[root@master ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

# Globally unique host identifier

router_id master

}

vrrp_script check_nginx {

script "/usr/local/src/check_nginx.sh" # script that checks the nginx state

interval 3 # run the shell script every 3 seconds

weight 10 # add 10 to the priority while the script succeeds

}

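vrrp_instance VI_1 { # VRRP instance; VI_1 is the instance name

# Whether this node is the primary or the standby: MASTER or BACKUP

state MASTER

# Network interface to bind; VRRP advertisements are sent over it (multicast by default)

interface ens33

# Virtual router ID; must be the same on master and backup

virtual_router_id 51

# Priority; the master's must be higher than the backup's

priority 100

# Advertisement interval between master and backup, in seconds (default 1)

advert_int 1

# Authentication; must be identical on all master and backup nodes

authentication {

# Authentication type

auth_type PASS

# Password string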

auth_pass 1111

}

# track_script lists the vrrp_script checks to monitor; keepalived runs them periodically and adjusts the priority according to the result

track_script {

check_nginx

}

# Virtual IP (VIP), also called the floating IP

virtual_ipaddress {

192.168.169.100

}

}

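### Load-balancing configuration follows

virtual_server 192.168.169.100 80 { # virtual IP

delay_loop 10 # health-check interval, in seconds

lb_algo wlc # scheduling algorithm; wlc is weighted least-connection

lb_kind DR # Direct Routing mode is used for this test

persistence_timeout 60 # persistence timeout; connections from the same IP go to the same real server for 60 seconds

protocol TCP

real_server 192.168.169.210 80 { # real server IP

weight 100 # weight

TCP_CHECK {

connect_timeout 10 # time out after 10 seconds without a response

nb_get_retry 3 # number of retries after a failure; newer versions use retry

delay_before_retry 3 # delay between retries, in seconds

connect_port 80 # backend port to connect to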

}

}

real_server 192.168.169.211 80 {

weight 100

TCP_CHECK {

connect_timeout 10

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

}

Modify the backup configuration

[root@backup ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

# Globally unique host identifier

#router_id slave

}

vrrp_script check_nginx {

script "/usr/local/src/check_nginx.sh"

interval 3 # run the shell script every 3 seconds

weight 10 # add 10 to the priority while the script succeeds

}

vrrp_instance VI_1 {

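# Whether this node is the primary or the standby: MASTER or BACKUP

state BACKUP

# Network interface to bind

interface ens33

# Virtual router ID; must be the same on master and backup

virtual_router_id 51

# Priority

priority 90

# Advertisement interval, in seconds (default 1)

advert_int 1

# Authentication; must be identical on all master and backup nodes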

authentication {

auth_type PASS

auth_pass 1111

}

track_script {

check_nginx

}

# Virtual IP

virtual_ipaddress {

192.168.169.100

}

}

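### Load-balancing configuration follows

virtual_server 192.168.169.100 80 { # virtual IP

delay_loop 10 # health-check interval, in seconds

lb_algo wlc # scheduling algorithm; wlc is weighted least-connection

lb_kind DR # Direct Routing mode is used for this test

persistence_timeout 60 # persistence timeout; connections from the same IP go to the same real server for 60 seconds

protocol TCP

real_server 192.168.169.210 80 { # real server IP

weight 100 # weight

TCP_CHECK {

connect_timeout 10 # time out after 10 seconds without a response

nb_get_retry 3 # number of retries after a failure; newer versions use retry

delay_before_retry 3 # delay between retries, in seconds

connect_port 80 # backend port to connect to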

}

}

real_server 192.168.169.211 80 {

weight 100

TCP_CHECK {

connect_timeout 10

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

}

Create the check script

[root@master ~]# cat /usr/local/src/check_nginx.sh

#!/bin/bash

# Current timestamp

d=$(date +%Y%m%d%H:%M:%S)

# Count the nginx processes

n=$(ps -C nginx --no-headers | wc -l)

# If no nginx process is running, start nginx and check again

if [ "$n" -eq 0 ]; then

systemctl start nginx

sleep 3 # wait for nginx to start

n=$(ps -C nginx --no-headers | wc -l)

# Still no nginx process: nginx cannot be started, so stop keepalived to release the VIP

if [ "$n" -eq 0 ]; then

echo "$d Nginx is down, keepalived will stop." >> /var/log/check_nginx.log

systemctl stop keepalived

fi

fi

Start the service

[root@master ~]# systemctl enable keepalived --now

Created symlink from /etc/systemd/system/multi-user.target.wants/keepalived.service to

Restart the service to apply the configuration

[root@master ~]# systemctl restart keepalived

Set permissions

[root@master ~]# chmod 755 /usr/local/src/lvs_dr.sh

- Test: stop the nginx service and check whether it is started again automatically

Load-balancing configuration

Install the service

[root@master ~]# yum install -y ipvsadm

[root@master ~]# systemctl status ipvsadm

[root@master ~]# systemctl enable ipvsadm --now

Fixing the error

/bin/bash: /etc/sysconfig/ipvsadm: No such file or directory

[root@master ~]# ipvsadm --save > /etc/sysconfig/ipvsadm

[root@master ~]# systemctl restart ipvsadm

[root@master ~]# systemctl status ipvsadm

● ipvsadm.service - Initialise the Linux Virtual Server

Loaded: loaded (/usr/lib/systemd/system/ipvsadm.service; enabled; vendor preset: disabled)

Active: active (exited) since Fri 2023-12-22 18:18:53 CST; 3s ago

Process: 2694 ExecStart=/bin/bash -c exec /sbin/ipvsadm-restore < /etc/sysconfig/ipvsadm (code=exited, status=0/SUCCESS)

Main PID: 2694 (code=exited, status=0/SUCCESS)

Dec 22 18:18:53 master systemd[1]: Starting Initialise the Linux Virtual Server...

Dec 22 18:18:53 master systemd[1]: Started Initialise the Linux Virtual Server.

Write the director script

#!/bin/bash

echo 1 > /proc/sys/net/ipv4/ip_forward

ipvsadm=/usr/sbin/ipvsadm

vip=192.168.169.110

rs1=192.168.169.209

rs2=192.168.169.210

# Mind the interface name here

ifconfig ens33:2 $vip broadcast $vip netmask 255.255.255.255 up

route add -host $vip dev ens33:2

$ipvsadm -C

$ipvsadm -A -t $vip:80 -s wrr

$ipvsadm -a -t $vip:80 -r $rs1:80 -g -w 1

$ipvsadm -a -t $vip:80 -r $rs2:80 -g -w 1

Write the real-server script

#!/bin/bash

vip=192.168.169.110

# Mind the interface name here

ifconfig lo:0 $vip broadcast $vip netmask 255.255.255.255 up

route add -host $vip lo:0

# The following changes the ARP kernel parameters so that the real server can send its MAC address to the client correctly

# Reference: https://www.cnblogs.com/lgfeng/archive/2012/10/16/2726308.html

echo "1" > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo "2" > /proc/sys/net/ipv4/conf/lo/arp_announce

echo "1" > /proc/sys/net/ipv4/conf/all/arp_ignore

echo "2" > /proc/sys/net/ipv4/conf/all/arp_announce
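Values written under /proc/sys are lost on reboot. One way to keep them is a sysctl configuration file. The sketch below only generates the four settings in sysctl.conf syntax; the function name and the file name in the usage comment are assumptions of this note.

```shell
#!/bin/bash
# arp_sysctl_lines: print the four ARP settings from the script above
# in the "key = value" syntax read by sysctl.
arp_sysctl_lines() {
    local iface
    for iface in lo all; do
        echo "net.ipv4.conf.${iface}.arp_ignore = 1"
        echo "net.ipv4.conf.${iface}.arp_announce = 2"
    done
}

# Example (as root, hypothetical file name):
#   arp_sysctl_lines > /etc/sysctl.d/90-lvs-dr.conf && sysctl --system
```

The generated keys map one to one to the /proc/sys paths written by the script, with the slashes replaced by dots.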

Install nginx on the real servers for testing

yum install -y nginx && systemctl enable nginx --now

View the connections

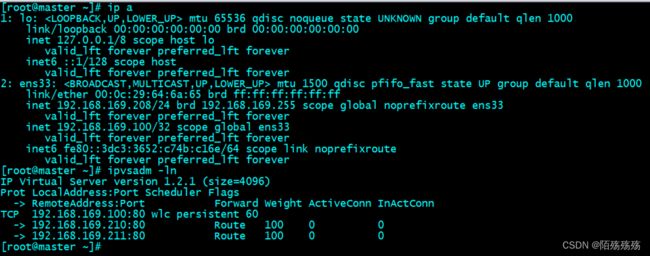

[root@master ~]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.169.110:80 wlc persistent 60

-> 192.168.169.209:80 Route 100 0 0

-> 192.168.169.210:80 Route 100 0 1
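For scripted checks, the same table can be reduced to a single number, the count of real servers currently registered. A sketch using awk; `count_real_servers` is a hypothetical helper that reads `ipvsadm -ln` output on standard input.

```shell
#!/bin/bash
# count_real_servers: count the "->" rows whose second field starts with a digit,
# which skips the "-> RemoteAddress:Port" header row.
count_real_servers() {
    awk '$1 == "->" && $2 ~ /^[0-9]/ { n++ } END { print n + 0 }'
}

# Example (as root): ipvsadm -ln | count_real_servers
```

For the output shown above the example would print 2; a lower number during a test suggests that a health check has removed a real server from the table.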

Test

- Test with the VIP on master

- Stop keepalived on master; the VIP moves to the backup node