lstm的tensorflow代码实现几个函数的源码及解释

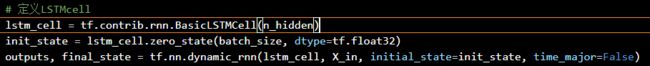

对这三行代码:

1.tf.contrib.rnn.BasicLSTMCell

总的来说,这个函数就是用来计算cell里面的运算的,比如加入三个门的计算,对应于所有列出的lstm的公式,如果说对应于普通的神经元,就相当于w*x+b这一步运算。

源码:

@tf_export("nn.rnn_cell.BasicLSTMCell") class BasicLSTMCell(LayerRNNCell): """Basic LSTM recurrent network cell. The implementation is based on: http://arxiv.org/abs/1409.2329. We add forget_bias (default: 1) to the biases of the forget gate in order to reduce the scale of forgetting in the beginning of the training. It does not allow cell clipping, a projection layer, and does not use peep-hole connections: it is the basic baseline. For advanced models, please use the full @{tf.nn.rnn_cell.LSTMCell} that follows. """ def __init__(self, num_units, forget_bias=1.0, state_is_tuple=True, activation=None, reuse=None, name=None, dtype=None): """Initialize the basic LSTM cell. Args: num_units: int, The number of units in the LSTM cell. forget_bias: float, The bias added to forget gates (see above). Must set to `0.0` manually when restoring from CudnnLSTM-trained checkpoints. state_is_tuple: If True, accepted and returned states are 2-tuples of the `c_state` and `m_state`. If False, they are concatenated along the column axis. The latter behavior will soon be deprecated. activation: Activation function of the inner states. Default: `tanh`. reuse: (optional) Python boolean describing whether to reuse variables in an existing scope. If not `True`, and the existing scope already has the given variables, an error is raised. name: String, the name of the layer. Layers with the same name will share weights, but to avoid mistakes we require reuse=True in such cases. dtype: Default dtype of the layer (default of `None` means use the type of the first input). Required when `build` is called before `call`. When restoring from CudnnLSTM-trained checkpoints, must use `CudnnCompatibleLSTMCell` instead. """ super(BasicLSTMCell, self).__init__(_reuse=reuse, name=name, dtype=dtype) if not state_is_tuple: logging.warn("%s: Using a concatenated state is slower and will soon be " "deprecated. Use state_is_tuple=True.", self) # Inputs must be 2-dimensional. self.input_spec = base_layer.InputSpec(ndim=2) self._num_units = num_units self._forget_bias = forget_bias self._state_is_tuple = state_is_tuple self._activation = activation or math_ops.tanh @property def state_size(self): return (LSTMStateTuple(self._num_units, self._num_units) if self._state_is_tuple else 2 * self._num_units) @property def output_size(self): return self._num_units def build(self, inputs_shape): if inputs_shape[1].value is None: raise ValueError("Expected inputs.shape[-1] to be known, saw shape: %s" % inputs_shape) input_depth = inputs_shape[1].value h_depth = self._num_units self._kernel = self.add_variable( _WEIGHTS_VARIABLE_NAME, shape=[input_depth + h_depth, 4 * self._num_units]) self._bias = self.add_variable( _BIAS_VARIABLE_NAME, shape=[4 * self._num_units], initializer=init_ops.zeros_initializer(dtype=self.dtype)) self.built = True def call(self, inputs, state): """Long short-term memory cell (LSTM). Args: inputs: `2-D` tensor with shape `[batch_size, input_size]`. state: An `LSTMStateTuple` of state tensors, each shaped `[batch_size, num_units]`, if `state_is_tuple` has been set to `True`. Otherwise, a `Tensor` shaped `[batch_size, 2 * num_units]`. Returns: A pair containing the new hidden state, and the new state (either a `LSTMStateTuple` or a concatenated state, depending on `state_is_tuple`). """ sigmoid = math_ops.sigmoid one = constant_op.constant(1, dtype=dtypes.int32) # Parameters of gates are concatenated into one multiply for efficiency. if self._state_is_tuple: c, h = state else: c, h = array_ops.split(value=state, num_or_size_splits=2, axis=one) gate_inputs = math_ops.matmul( array_ops.concat([inputs, h], 1), self._kernel) gate_inputs = nn_ops.bias_add(gate_inputs, self._bias) # i = input_gate, j = new_input, f = forget_gate, o = output_gate i, j, f, o = array_ops.split( value=gate_inputs, num_or_size_splits=4, axis=one) forget_bias_tensor = constant_op.constant(self._forget_bias, dtype=f.dtype) # Note that using `add` and `multiply` instead of `+` and `*` gives a # performance improvement. So using those at the cost of readability. add = math_ops.add multiply = math_ops.multiply new_c = add(multiply(c, sigmoid(add(f, forget_bias_tensor))), multiply(sigmoid(i), self._activation(j))) new_h = multiply(self._activation(new_c), sigmoid(o)) if self._state_is_tuple: new_state = LSTMStateTuple(new_c, new_h) else: new_state = array_ops.concat([new_c, new_h], 1) return new_h, new_state

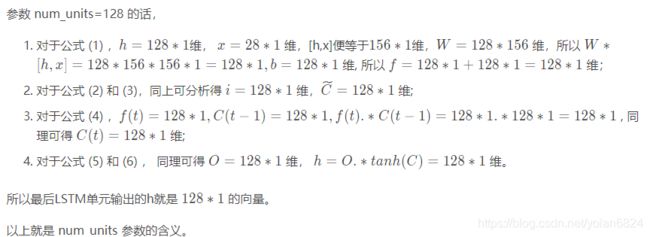

这里num_units的解释可以看这里:num_units解释

下面这张图是上面链接的截图:

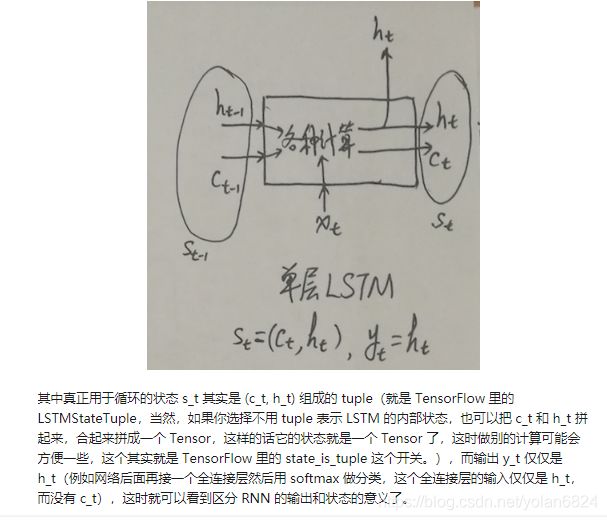

区分好lstm cell的state和output

学会区分 RNN 的 output 和 state

总结一下就是:(下面这张图片是上面这篇文章中的截图)

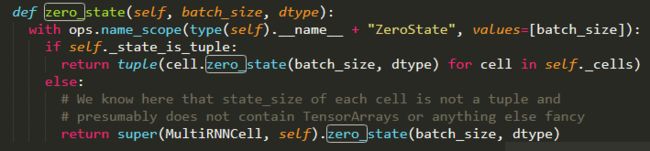

2.lstm_cell.zero_state(batch_size, dtype=tf.float32)

这个函数是用来设置初始状态的。

3.tf.nn.dynamic_rnn(lstm_cell, X_in, initial_state=init_state, time_major=False)

这个函数是用来运行rnn循环网络的。可以设置rnn cell循环的次数,也可以用于设置rnn的层数。

@tf_export("nn.dynamic_rnn")

def dynamic_rnn(cell, inputs, sequence_length=None, initial_state=None,

dtype=None, parallel_iterations=None, swap_memory=False,

time_major=False, scope=None):

"""Creates a recurrent neural network specified by RNNCell `cell`.

Performs fully dynamic unrolling of `inputs`.

Example:

```python

# create a BasicRNNCell

rnn_cell = tf.nn.rnn_cell.BasicRNNCell(hidden_size)

# 'outputs' is a tensor of shape [batch_size, max_time, cell_state_size]

# defining initial state

initial_state = rnn_cell.zero_state(batch_size, dtype=tf.float32)

# 'state' is a tensor of shape [batch_size, cell_state_size]

outputs, state = tf.nn.dynamic_rnn(rnn_cell, input_data,

initial_state=initial_state,

dtype=tf.float32)

```

```python

# create 2 LSTMCells

rnn_layers = [tf.nn.rnn_cell.LSTMCell(size) for size in [128, 256]]

# create a RNN cell composed sequentially of a number of RNNCells

multi_rnn_cell = tf.nn.rnn_cell.MultiRNNCell(rnn_layers)

# 'outputs' is a tensor of shape [batch_size, max_time, 256]

# 'state' is a N-tuple where N is the number of LSTMCells containing a

# tf.contrib.rnn.LSTMStateTuple for each cell

outputs, state = tf.nn.dynamic_rnn(cell=multi_rnn_cell,

inputs=data,

dtype=tf.float32)

```

Args:

cell: An instance of RNNCell.

inputs: The RNN inputs.

If `time_major == False` (default), this must be a `Tensor` of shape:

`[batch_size, max_time, ...]`, or a nested tuple of such

elements.

If `time_major == True`, this must be a `Tensor` of shape:

`[max_time, batch_size, ...]`, or a nested tuple of such

elements.

This may also be a (possibly nested) tuple of Tensors satisfying

this property. The first two dimensions must match across all the inputs,

but otherwise the ranks and other shape components may differ.

In this case, input to `cell` at each time-step will replicate the

structure of these tuples, except for the time dimension (from which the

time is taken).

The input to `cell` at each time step will be a `Tensor` or (possibly

nested) tuple of Tensors each with dimensions `[batch_size, ...]`.

sequence_length: (optional) An int32/int64 vector sized `[batch_size]`.

Used to copy-through state and zero-out outputs when past a batch

element's sequence length. So it's more for performance than correctness.

initial_state: (optional) An initial state for the RNN.

If `cell.state_size` is an integer, this must be

a `Tensor` of appropriate type and shape `[batch_size, cell.state_size]`.

If `cell.state_size` is a tuple, this should be a tuple of

tensors having shapes `[batch_size, s] for s in cell.state_size`.

dtype: (optional) The data type for the initial state and expected output.

Required if initial_state is not provided or RNN state has a heterogeneous

dtype.

parallel_iterations: (Default: 32). The number of iterations to run in

parallel. Those operations which do not have any temporal dependency

and can be run in parallel, will be. This parameter trades off

time for space. Values >> 1 use more memory but take less time,

while smaller values use less memory but computations take longer.

swap_memory: Transparently swap the tensors produced in forward inference

but needed for back prop from GPU to CPU. This allows training RNNs

which would typically not fit on a single GPU, with very minimal (or no)

performance penalty.

time_major: The shape format of the `inputs` and `outputs` Tensors.

If true, these `Tensors` must be shaped `[max_time, batch_size, depth]`.

If false, these `Tensors` must be shaped `[batch_size, max_time, depth]`.

Using `time_major = True` is a bit more efficient because it avoids

transposes at the beginning and end of the RNN calculation. However,

most TensorFlow data is batch-major, so by default this function

accepts input and emits output in batch-major form.

scope: VariableScope for the created subgraph; defaults to "rnn".

Returns:

A pair (outputs, state) where:

outputs: The RNN output `Tensor`.

If time_major == False (default), this will be a `Tensor` shaped:

`[batch_size, max_time, cell.output_size]`.

If time_major == True, this will be a `Tensor` shaped:

`[max_time, batch_size, cell.output_size]`.

Note, if `cell.output_size` is a (possibly nested) tuple of integers

or `TensorShape` objects, then `outputs` will be a tuple having the

same structure as `cell.output_size`, containing Tensors having shapes

corresponding to the shape data in `cell.output_size`.

state: The final state. If `cell.state_size` is an int, this

will be shaped `[batch_size, cell.state_size]`. If it is a

`TensorShape`, this will be shaped `[batch_size] + cell.state_size`.

If it is a (possibly nested) tuple of ints or `TensorShape`, this will

be a tuple having the corresponding shapes. If cells are `LSTMCells`

`state` will be a tuple containing a `LSTMStateTuple` for each cell.

Raises:

TypeError: If `cell` is not an instance of RNNCell.

ValueError: If inputs is None or an empty list.

"""

rnn_cell_impl.assert_like_rnncell("cell", cell)

with vs.variable_scope(scope or "rnn") as varscope:

# Create a new scope in which the caching device is either

# determined by the parent scope, or is set to place the cached

# Variable using the same placement as for the rest of the RNN.

if not context.executing_eagerly():

if varscope.caching_device is None:

varscope.set_caching_device(lambda op: op.device)

# By default, time_major==False and inputs are batch-major: shaped

# [batch, time, depth]

# For internal calculations, we transpose to [time, batch, depth]

flat_input = nest.flatten(inputs)

if not time_major:

# (B,T,D) => (T,B,D)

flat_input = [ops.convert_to_tensor(input_) for input_ in flat_input]

flat_input = tuple(_transpose_batch_time(input_) for input_ in flat_input)

parallel_iterations = parallel_iterations or 32

if sequence_length is not None:

sequence_length = math_ops.to_int32(sequence_length)

if sequence_length.get_shape().ndims not in (None, 1):

raise ValueError(

"sequence_length must be a vector of length batch_size, "

"but saw shape: %s" % sequence_length.get_shape())

sequence_length = array_ops.identity( # Just to find it in the graph.

sequence_length, name="sequence_length")

batch_size = _best_effort_input_batch_size(flat_input)

if initial_state is not None:

state = initial_state

else:

if not dtype:

raise ValueError("If there is no initial_state, you must give a dtype.")

state = cell.zero_state(batch_size, dtype)

def _assert_has_shape(x, shape):

x_shape = array_ops.shape(x)

packed_shape = array_ops.stack(shape)

return control_flow_ops.Assert(

math_ops.reduce_all(math_ops.equal(x_shape, packed_shape)),

["Expected shape for Tensor %s is " % x.name,

packed_shape, " but saw shape: ", x_shape])

if not context.executing_eagerly() and sequence_length is not None:

# Perform some shape validation

with ops.control_dependencies(

[_assert_has_shape(sequence_length, [batch_size])]):

sequence_length = array_ops.identity(

sequence_length, name="CheckSeqLen")

inputs = nest.pack_sequence_as(structure=inputs, flat_sequence=flat_input)

(outputs, final_state) = _dynamic_rnn_loop(

cell,

inputs,

state,

parallel_iterations=parallel_iterations,

swap_memory=swap_memory,

sequence_length=sequence_length,

dtype=dtype)

# Outputs of _dynamic_rnn_loop are always shaped [time, batch, depth].

# If we are performing batch-major calculations, transpose output back

# to shape [batch, time, depth]

if not time_major:

# (T,B,D) => (B,T,D)

outputs = nest.map_structure(_transpose_batch_time, outputs)

return (outputs, final_state)

下面这部分函数是dynamic_rnn具体每一步的实现,我就只放注释了,用来理解以下上面这个函数的output。

def _dynamic_rnn_loop(cell,

inputs,

initial_state,

parallel_iterations,

swap_memory,

sequence_length=None,

dtype=None):

"""Internal implementation of Dynamic RNN.

Args:

cell: An instance of RNNCell.

inputs: A `Tensor` of shape [time, batch_size, input_size], or a nested

tuple of such elements.

initial_state: A `Tensor` of shape `[batch_size, state_size]`, or if

`cell.state_size` is a tuple, then this should be a tuple of

tensors having shapes `[batch_size, s] for s in cell.state_size`.

parallel_iterations: Positive Python int.

swap_memory: A Python boolean

sequence_length: (optional) An `int32` `Tensor` of shape [batch_size].

dtype: (optional) Expected dtype of output. If not specified, inferred from

initial_state.

Returns:

Tuple `(final_outputs, final_state)`.

final_outputs:

A `Tensor` of shape `[time, batch_size, cell.output_size]`. If

`cell.output_size` is a (possibly nested) tuple of ints or `TensorShape`

objects, then this returns a (possibly nested) tuple of Tensors matching

the corresponding shapes.

final_state:

A `Tensor`, or possibly nested tuple of Tensors, matching in length

and shapes to `initial_state`.

Raises:

ValueError: If the input depth cannot be inferred via shape inference

from the inputs.

"""