mahout源码分析之Decision Forest 三部曲之二BuildForest(1)

Mahout版本:0.7,hadoop版本:1.0.4,jdk:1.7.0_25 64bit。

BuildForest是在mahout-examples-0.7-job.jar包的org\apache\mahout\classifier\df\mapreduce 路径下。直接运行该类,可以看到该类的使用方式:

Usage:

[--data <path> --dataset <dataset> --selection <m> --no-complete --minsplit

<minsplit> --minprop <minprop> --seed <seed> --partial --nbtrees <nbtrees>

--output <path> --help]

Options

--data (-d) path Data path

--dataset (-ds) dataset Dataset path

--selection (-sl) m Optional, Number of variables to select randomly

at each tree-node.

For classification problem, the default is

square root of the number of explanatory

variables.

For regression problem, the default is 1/3 of

the number of explanatory variables.

--no-complete (-nc) Optional, The tree is not complemented

--minsplit (-ms) minsplit Optional, The tree-node is not divided, if the

branching data size is smaller than this value.

The default is 2.

--minprop (-mp) minprop Optional, The tree-node is not divided, if the

proportion of the variance of branching data is

smaller than this value.

In the case of a regression problem, this value

is used. The default is 1/1000(0.001).

--seed (-sd) seed Optional, seed value used to initialise the

Random number generator

--partial (-p) Optional, use the Partial Data implementation

--nbtrees (-t) nbtrees Number of trees to grow

--output (-o) path Output path, will contain the Decision Forest

--help (-h) Print out help

这个类刚开始也是设置参数,然后直接进入到buildForest()方法中。这个方法主要包含下面的四个步骤:

DecisionTreeBuilder treeBuilder = new DecisionTreeBuilder();

Builder forestBuilder;

if (isPartial) {

forestBuilder = new PartialBuilder(treeBuilder, dataPath, datasetPath, seed, getConf());

}

DecisionForest forest = forestBuilder.build(nbTrees);

DFUtils.storeWritable(getConf(), forestPath, forest);

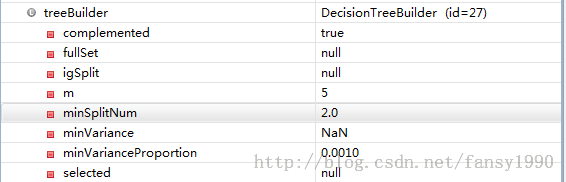

1. 新建treeBuilder,设置每次随机选择属性的样本个数,默认是所有属性的1/3,设置complemented,默认是true的,其他的属性参数基本也是默认的,设置断点,可以看到该变量的值如下:

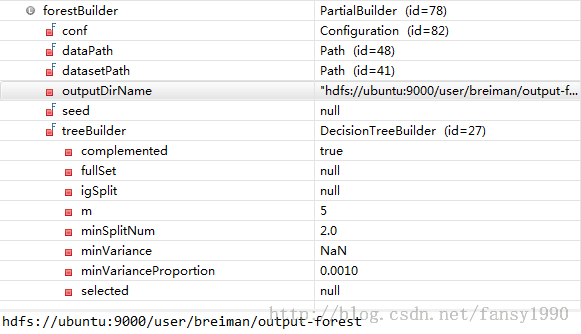

2. 新建PartialBuilder,设置相关的参数,得到下面的forestBuilder的值如下:

3.build方法,这个是重点了。

进入到Builder中的build方法中,看到是一些设置相关变量的代码:setRandomSeed、setNbTrees、setTreeBuilder。然后把dataset的路径加入到了distributedCache中,这样在Mapper中就可以直接读出这个路径了(相当于放在了内存中)。然后就是新建Job了,名字为decision forest builder,初始化这个Job,运行:

Job job = new Job(conf, "decision forest builder");

configureJob(job);

if (!runJob(job)) {

log.error("Job failed!");

return null;

}

初始化:configureJob,看到Builder的子类PartialBuilder中的configureJob方法。

Configuration conf = job.getConfiguration();

job.setJarByClass(PartialBuilder.class);

FileInputFormat.setInputPaths(job, getDataPath());

FileOutputFormat.setOutputPath(job, getOutputPath(conf));

job.setOutputKeyClass(TreeID.class);

job.setOutputValueClass(MapredOutput.class);

job.setMapperClass(Step1Mapper.class);

job.setNumReduceTasks(0); // no reducers

job.setInputFormatClass(TextInputFormat.class);

job.setOutputFormatClass(SequenceFileOutputFormat.class);

可以看到都是一些基本的设置,设置输出的<key,value>的格式,设置Mapper为Step1Mapper,设置Reducer为空,设置输入、输出的路径格式(序列、字符串)。

那下面其实只用分析Step1Mapper就可以了。

分析Step1Mapper需要分析它的数据流,打开该类文件,看到该Mapper有setup、map、cleanup三个函数,且在cleanup函数中进行输出。首先看setup函数,这个函数如下:

protected void setup(Context context) throws IOException, InterruptedException {

super.setup(context);

Configuration conf = context.getConfiguration();

configure(Builder.getRandomSeed(conf), conf.getInt("mapred.task.partition", -1),

Builder.getNumMaps(conf), Builder.getNbTrees(conf));

}

进入到configure中查看该函数源码:

protected void configure(Long seed, int partition, int numMapTasks, int numTrees) {

converter = new DataConverter(getDataset());

// prepare random-numders generator

log.debug("seed : {}", seed);

if (seed == null) {

rng = RandomUtils.getRandom();

} else {

rng = RandomUtils.getRandom(seed);

}

log.info("partition : {}",partition);

System.out.println(new Date()+"partition : "+partition);

// mapper's partition

Preconditions.checkArgument(partition >= 0, "Wrong partition ID");

this.partition = partition;

// compute number of trees to build

nbTrees = nbTrees(numMapTasks, numTrees, partition);

// compute first tree id

firstTreeId = 0;

for (int p = 0; p < partition; p++) {

firstTreeId += nbTrees(numMapTasks, numTrees, p);

}

System.out.println(new Date()+"partition : "+partition);

log.info("partition : {}", partition);

log.info("nbTrees : {}", nbTrees);

log.info("firstTreeId : {}", firstTreeId);

}

因seed没有设置,所以传入的是null,那么这里的代码会自动进行赋值,然后到了partition这个变量,这个变量是由conf.getInt("mapred.task.partition", -1)这样得到的,但是在conf里面应该没有设置mapred.task.partition这个变量,所以这样得到的partition应该是-1,然后就到了Preconditions.checkArgument(partition>=0,"Wrong partition ID")这一行代码了,但是这里如果partition是-1的话,肯定会报错的吧,但是程序没有报错,所以可以认定这里的partition不是-1?

编写了BuildForest的仿制代码如下:

package mahout.fansy.partial;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.util.ToolRunner;

import org.apache.mahout.classifier.df.DecisionForest;

import org.apache.mahout.classifier.df.builder.DecisionTreeBuilder;

import org.apache.mahout.classifier.df.mapreduce.Builder;

import org.apache.mahout.classifier.df.mapreduce.partial.PartialBuilder;

import org.apache.mahout.common.AbstractJob;

public class BuildForestFollow extends AbstractJob {

private int m;

private int nbTrees;

private Path datasetPath;

private Path dataPath;

private Path outPath;

private boolean complemented=true;

private Configuration conf;

@Override

public int run(String[] args) throws Exception {

addInputOption();

addOutputOption();

addOption("selection","sl", " Optional, Number of variables to select randomly at each tree-node."+

"For classification problem, the default is square root of the number of explanatory"+

"variables. For regression problem, the default is 1/3 of"+

"the number of explanatory variables");

addOption("nbtrees","t","Number of trees to grow ");

addOption("dataset","ds","Dataset path ");

if (parseArguments(args) == null) {

return -1;

}

dataPath = getInputPath();

outPath = getOutputPath();

datasetPath=new Path(getOption("dataset"));

m=Integer.parseInt(getOption("selection"));

nbTrees=Integer.parseInt(getOption("nbtrees"));

conf=getConf();

init();

return 0;

}

private void init() throws IOException, ClassNotFoundException, InterruptedException{

FileSystem ofs = outPath.getFileSystem(getConf());

if (ofs.exists(outputPath)) {

ofs.deleteOnExit(outPath);

}

DecisionTreeBuilder treeBuilder = new DecisionTreeBuilder();

treeBuilder.setM(m);

treeBuilder.setComplemented(complemented);

Builder forestBuilder=new PartialBuilder(treeBuilder, dataPath, datasetPath, null, conf);;

forestBuilder.setOutputDirName(outputPath.toString()); // 此处一定要设置为这样的方式,而非outputPath.getName(),

//否则后面会出现权限问题

DecisionForest forest = forestBuilder.build(nbTrees);

System.out.println(forest);

}

public static void main(String[] args) throws Exception{

ToolRunner.run(new Configuration(),new BuildForestFollow(), args);

}

}

编写测试代码如下:

package mahout.fansy.partial.test;

import com.google.common.base.Preconditions;

import mahout.fansy.partial.BuildForestFollow;

public class TestBuildForestFollow {

/**

* @param args

* @throws Exception

*/

public static void main(String[] args) throws Exception {

String[] arg=new String[]{"-jt","ubuntu:9001","-fs","ubuntu:9000",

"-i","hdfs://ubuntu:9000/user/breiman/input/glass.data",

"-ds","hdfs://ubuntu:9000/user/breiman/glass.info",

"-sl","5",

"-t","10",

"-o","hdfs://ubuntu:9000/user/breiman/output-forest"

};

BuildForestFollow.main(arg);

// int a=1;

// Preconditions.checkArgument(a >= 0, "Wrong partition ID");

}

}

上面的测试代码同样没有对conf设置mapred.task.partition变量,但是程序依然可以跑,没有报错。所以我就想在Step1Mapper中进行设置信息打印出来,

由于debug模式不会用,所以我就把log.debug全部改为了log.info,并替换了mahout-examples-0.7-job.jar文件中的相应class文件,但是依然没有打印出来相关的信息,郁闷。。。

然后我就修改了Builder类中的相应信息(在290行左右,修改完编译后同样替换mahout-examples-0.7-job.jar中对应的文件):

Job job = new Job(conf, "decision forest builder");

log.info("partition : {}",conf.getInt("mapred.task.partition", -1));

log.info("Configuring the job...");

这样就可以在job提交之前,conf不会变的情况下进行partition的查看。运行前面的测试,得到:

13/09/21 23:53:10 INFO common.AbstractJob: Command line arguments: {--dataset=[hdfs://ubuntu:9000/user/breiman/glass.info], --endPhase=[2147483647], --input=[hdfs://ubuntu:9000/user/breiman/input/glass.data], --nbtrees=[10], --output=[hdfs://ubuntu:9000/user/breiman/output-forest], --selection=[5], --startPhase=[0], --tempDir=[temp]}

13/09/21 23:53:11 INFO mapreduce.Builder: partition : -1

13/09/21 23:53:11 INFO mapreduce.Builder: Configuring the job...

13/09/21 23:53:11 INFO mapreduce.Builder: Running the job...

这里可以看到partition的确是-1,那么在setup函数中在执行conf.getInt("mapred.task.partition", -1)这一句之前哪里对conf进行了修改么?然后对mapred.task.parition进行了赋值?可能的解决方法还是应该去看Setp1Mapper 中的信息,在conf.getInt("mapred.task.partition", -1)之后,Preconditions.checkArgument(partition >= 0, "Wrong partition ID");之前查看partition的值,但是如何做呢?

分享,成长,快乐

转载请注明blog地址:http://blog.csdn.net/fansy1990