Mahout source analysis of KMeansDriver, part 5: a first look at CIMapper

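Picking up from the previous post, let's keep analyzing the code. Next comes the MapReduce loop. The MR part itself is fairly easy to follow. The key point is the loop's exit condition: how do we decide that the cluster centers have moved less than a given threshold between two consecutive iterations?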
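The exit condition can be made concrete with a minimal plain-Java sketch of the loop's logic, with Hadoop stripped out. It assumes a run has converged once every center has moved by at most a threshold `delta` (Euclidean distance here) between two consecutive iterations. The hypothetical `step` function stands in for one MR pass. All class and method names are illustrative and are not Mahout's.

```java
import java.util.function.UnaryOperator;

public class ConvergenceLoopSketch {

    // Euclidean distance between two points of equal dimension.
    static double distance(double[] a, double[] b) {
        double sum = 0.0;
        for (int i = 0; i < a.length; i++) {
            double d = a[i] - b[i];
            sum += d * d;
        }
        return Math.sqrt(sum);
    }

    // Converged when every center moved by at most delta since the previous iteration.
    static boolean isConverged(double[][] prior, double[][] current, double delta) {
        for (int k = 0; k < prior.length; k++) {
            if (distance(prior[k], current[k]) > delta) {
                return false;
            }
        }
        return true;
    }

    // Same shape as the driver loop: at most numIterations passes, stop early on convergence.
    // 'step' is a hypothetical stand-in for one MapReduce pass (old centers -> new centers).
    // Returns the number of passes actually run.
    static int iterate(double[][] centers, UnaryOperator<double[][]> step,
                       int numIterations, double delta) {
        int iteration = 1;
        double[][] prior = centers;
        while (iteration <= numIterations) {
            double[][] current = step.apply(prior);
            iteration++;
            if (isConverged(prior, current, delta)) {
                break;
            }
            prior = current;
        }
        return iteration - 1;
    }

    public static void main(String[] args) {
        // Toy step: each pass moves the single center halfway toward (10, 10).
        UnaryOperator<double[][]> halfway = c -> new double[][] {
            {(c[0][0] + 10) / 2, (c[0][1] + 10) / 2}
        };
        int passes = iterate(new double[][] {{0, 0}}, halfway, 20, 0.5);
        System.out.println("passes run: " + passes); // prints "passes run: 5"
    }
}
```

In the toy run, the center's move shrinks by half on each pass. The fifth move (about 0.44) is the first one at or below 0.5, so the loop stops well before the 20-iteration cap.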

First, the while loop:

    while (iteration <= numIterations) {
        // Tell the mappers where the previous iteration's clusters are stored
        conf.set(PRIOR_PATH_KEY, priorPath.toString());
        String jobName = "Cluster Iterator running iteration " + iteration + " over priorPath: " + priorPath;
        System.out.println(jobName);
        Job job = new Job(conf, jobName);
        job.setMapOutputKeyClass(IntWritable.class);
        job.setMapOutputValueClass(ClusterWritable.class);
        job.setOutputKeyClass(IntWritable.class);
        job.setOutputValueClass(ClusterWritable.class);
        job.setInputFormatClass(SequenceFileInputFormat.class);
        job.setOutputFormatClass(SequenceFileOutputFormat.class);
        job.setMapperClass(CIMapper.class);
        job.setReducerClass(CIReducer.class);
        // The input path is the same in every iteration
        FileInputFormat.addInputPath(job, inPath);
        // Output goes to outPath/clusters-<iteration>, which becomes the prior path of the next iteration
        clustersOut = new Path(outPath, Cluster.CLUSTERS_DIR + iteration);
        priorPath = clustersOut;
        FileOutputFormat.setOutputPath(job, clustersOut);
        job.setJarByClass(ClusterIterator.class);
        if (!job.waitForCompletion(true)) {
            throw new InterruptedException("Cluster Iteration " + iteration + " failed processing " + priorPath);
        }
        // Write the same clustering policy next to the new clusters
        ClusterClassifier.writePolicy(policy, clustersOut);
        FileSystem fs = FileSystem.get(outPath.toUri(), conf);
        iteration++;
        // Leave the loop once the clusters have converged
        if (isConverged(clustersOut, conf, fs)) {
            break;
        }
    }

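This loop shows that every MR job reads the same input, and its output goes to outPath + "/clusters-" + iteration. After each MR job, the same policy is written into that output and the iteration counter is incremented. Then isConverged(clustersOut, conf, fs) decides whether to leave the loop. Now let's look at my imitation of the MR job, starting with the Mapper: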

    package mahout.fansy.kmeans;

    import java.io.IOException;
    import java.util.Iterator;
    import java.util.List;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.mahout.clustering.Cluster;
    import org.apache.mahout.clustering.classify.ClusterClassifier;
    import org.apache.mahout.clustering.iterator.ClusterWritable;
    import org.apache.mahout.clustering.iterator.ClusteringPolicy;
    import org.apache.mahout.common.iterator.sequencefile.PathFilters;
    import org.apache.mahout.common.iterator.sequencefile.PathType;
    import org.apache.mahout.common.iterator.sequencefile.SequenceFileDirValueIterable;
    import org.apache.mahout.math.Vector;
    import org.apache.mahout.math.Vector.Element;
    import org.apache.mahout.math.VectorWritable;

    import com.google.common.collect.Lists;

    /**
     * Runs the logic of CIMapper (setup, map, cleanup) locally, without a MapReduce job.
     */
    public class TestCIMapper {

        private static ClusterClassifier classifier;
        private static ClusteringPolicy policy;

        public static void main(String[] args) throws IOException {
            setup();
            map();
            cleanup();
        }

        /**
         * Imitates the setup function: reads the prior clusters and their policy.
         * @throws IOException
         */
        public static void setup() throws IOException {
            Configuration conf = new Configuration();
            conf.set("mapred.job.tracker", "hadoop:9001"); // Can this line be removed?
            String priorClustersPath = "hdfs://hadoop:9000/user/hadoop/out/kmeans-output/clusters-0";
            classifier = new ClusterClassifier();
            classifier.readFromSeqFiles(conf, new Path(priorClustersPath));
            policy = classifier.getPolicy();
            policy.update(classifier);
        }

        /**
         * Imitates the map function, looping over all input vectors.
         */
        public static void map() {
            List<VectorWritable> vList = getInputData();
            for (VectorWritable value : vList) {
                Vector probabilities = classifier.classify(value.get());
                Vector selections = policy.select(probabilities);
                for (Iterator<Element> it = selections.iterateNonZero(); it.hasNext();) {
                    Element el = it.next();
                    classifier.train(el.index(), value.get(), el.get());
                }
            }
        }

        /**
         * Imitates the cleanup function; here each cluster's center is printed instead of being emitted.
         */
        public static void cleanup() {
            List<Cluster> clusters = classifier.getModels();
            ClusterWritable cw = new ClusterWritable();
            for (int index = 0; index < clusters.size(); index++) {
                cw.setValue(clusters.get(index));
                System.out.println("index:" + index + ",cw :" + cw.getValue().getCenter());
            }
        }

        /**
         * Reads the input data.
         * @return all input vectors
         */
        public static List<VectorWritable> getInputData() {
            String input = "hdfs://hadoop:9000/user/hadoop/out/kmeans-in-transform/part-r-00000";
            Path path = new Path(input);
            Configuration conf = new Configuration();
            List<VectorWritable> vList = Lists.newArrayList();
            for (VectorWritable vw : new SequenceFileDirValueIterable<VectorWritable>(path, PathType.LIST,
                    PathFilters.logsCRCFilter(), conf)) {
                vList.add(vw);
            }
            return vList;
        }
    }

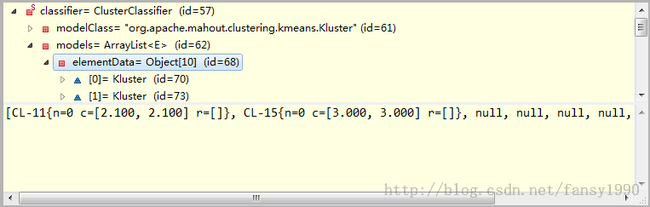

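The setup function in the code above does nothing more than read the cluster centers and the threshold into variables. For example, the classifier variable looks like this: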

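Honestly, it seems unnecessary for the earlier code to store each cluster center in its own file. All those files are read back into a single variable here anyway, so why have so many files? Perhaps this is some guru's masterstroke whose subtlety I haven't spotted yet. Likewise, the policy variable probably didn't need to be written to a file either, since the classifier variable here already contains a policy.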

Next is the map function. Before it runs, the getInputData method reads the input data and stores it in a list, which map then walks through with a for-each loop.

In the map function, only these three statements really matter:

    Vector probabilities = classifier.classify(value.get());
    Vector selections = policy.select(probabilities);
    classifier.train(el.index(), value.get(), el.get());
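For k-means, the first two statements conceptually boil down to "score every cluster, then keep only the best one." The plain-Java sketch below illustrates that idea under assumptions of my own. The score is taken to be 1 / (1 + distance), normalized to sum to 1; this is a guess at how a distance-based cluster turns a distance into a probability. `select` returns a one-hot vector marking the highest score, so the third statement would add the point, with weight 1, to that single cluster. The class and method names are hypothetical and are not Mahout's API.

```java
public class ClassifySelectSketch {

    // Euclidean distance between two points of equal dimension.
    static double distance(double[] a, double[] b) {
        double sum = 0.0;
        for (int i = 0; i < a.length; i++) {
            double d = a[i] - b[i];
            sum += d * d;
        }
        return Math.sqrt(sum);
    }

    // Hypothetical classify: one score per center, 1 / (1 + distance), normalized to sum to 1.
    static double[] classify(double[] point, double[][] centers) {
        double[] probs = new double[centers.length];
        double total = 0.0;
        for (int k = 0; k < centers.length; k++) {
            probs[k] = 1.0 / (1.0 + distance(point, centers[k]));
            total += probs[k];
        }
        for (int k = 0; k < probs.length; k++) {
            probs[k] /= total;
        }
        return probs;
    }

    // Hypothetical hard-assignment select: 1.0 at the index of the largest probability, 0 elsewhere.
    static double[] select(double[] probs) {
        int best = 0;
        for (int k = 1; k < probs.length; k++) {
            if (probs[k] > probs[best]) {
                best = k;
            }
        }
        double[] selections = new double[probs.length];
        selections[best] = 1.0;
        return selections;
    }

    public static void main(String[] args) {
        double[][] centers = {{0, 0}, {10, 10}};
        double[] selections = select(classify(new double[] {9, 9}, centers));
        for (int k = 0; k < selections.length; k++) {
            if (selections[k] != 0.0) {
                // This (index, weight) pair is what a train(index, point, weight) call would receive.
                System.out.println("train cluster " + k + " with weight " + selections[k]);
            }
        }
    }
}
```

Here the point (9, 9) is much closer to (10, 10), so only cluster 1 is selected. Its non-zero entry is the only one the `iterateNonZero()` loop in map would visit.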

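Of these three statements, the first does not modify anything in classifier, and neither does the second. The third does modify the models field of classifier. However, it leaves the center field of the clusters stored in that list (in its elementData array) untouched and updates S0, S1 and the like instead. If the centers really stay unchanged, and cleanup then prints this classifier's clusters directly, the center vectors would never be updated. So how do they get updated? Well, I'm too sleepy; to be continued next time...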
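One plausible answer, still to be checked against the Mahout source, is that train only accumulates running sums. S0 would be the total weight of the observed points and S1 their weighted sum, and a separate, later step would turn them into the new center S1 / S0. The plain-Java sketch below shows that pattern. The `RunningCluster` class is hypothetical. Naming its final step `computeParameters()` reflects my recollection of Mahout's cluster classes and should be treated as an assumption, not verified behavior.

```java
import java.util.Arrays;

public class RunningClusterSketch {

    // Hypothetical cluster that accumulates sums instead of moving its center for every point.
    static class RunningCluster {
        double[] center;
        double s0 = 0.0; // total weight of the observed points
        double[] s1;     // weighted sum of the observed points

        RunningCluster(double[] center) {
            this.center = center.clone();
            this.s1 = new double[center.length];
        }

        // What a train(index, point, weight) call might do to the selected cluster: update sums only.
        void observe(double[] point, double weight) {
            s0 += weight;
            for (int i = 0; i < point.length; i++) {
                s1[i] += weight * point[i];
            }
        }

        // Deferred update (assumed name): only here does the center move, to the weighted mean S1 / S0.
        void computeParameters() {
            if (s0 == 0.0) {
                return; // no points were assigned, so keep the old center
            }
            for (int i = 0; i < center.length; i++) {
                center[i] = s1[i] / s0;
            }
            s0 = 0.0;
            s1 = new double[center.length];
        }
    }

    public static void main(String[] args) {
        RunningCluster c = new RunningCluster(new double[] {0, 0});
        c.observe(new double[] {1, 1}, 1.0);
        c.observe(new double[] {3, 5}, 1.0);
        System.out.println("before: " + Arrays.toString(c.center)); // [0.0, 0.0]
        c.computeParameters();
        System.out.println("after:  " + Arrays.toString(c.center)); // [2.0, 3.0]
    }
}
```

This would explain why the centers printed in cleanup look unchanged: the sums move, but the center does not until the final step runs. Plain sums from several mappers can also be added together before the division, which makes this design a natural fit for a reduce step.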

Share, enjoy, grow.

When reposting, please credit the source: http://blog.csdn.net/fansy1990