Running MapReduce Programs Remotely from Eclipse (Hadoop-1.2.1)

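1. Environment

Windows 7, 64-bit

Hadoop-1.2.1 on three VirtualBox hosts: bd11, bd12, bd13

Eclipse Indigo. A 3.x release is required: placed in Eclipse 4.x, the plugin reports errors because of a classpath problem in its META-INF/MANIFEST.MF, and classes cannot be found.

A self-compiled Eclipse plugin for hadoop-1.2.1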
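The classpath problem mentioned above lives in the plugin jar's META-INF/MANIFEST.MF, presumably in the OSGi `Bundle-ClassPath` header, which lists the plugin's own classes and its bundled `lib/*.jar` files. A minimal JDK-only sketch for inspecting that header, assuming you pass the path of your self-built plugin jar as the only argument (the class name is hypothetical): it prints each entry and flags any entry that does not exist inside the jar.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;
import java.util.jar.JarFile;
import java.util.jar.Manifest;

public class BundleClassPathCheck {
    /** Splits a Bundle-ClassPath header into entries, dropping any ";param" suffix. */
    public static List<String> entries(String header) {
        List<String> out = new ArrayList<>();
        if (header == null) {
            return out;
        }
        for (String part : header.split(",")) {
            int semi = part.indexOf(';');
            String entry = (semi >= 0 ? part.substring(0, semi) : part).trim();
            if (!entry.isEmpty()) {
                out.add(entry);
            }
        }
        return out;
    }

    /** "." is the jar root; a trailing "/" marks a directory inside the jar. */
    static boolean present(JarFile jar, String entry) {
        if (entry.equals(".")) {
            return true;
        }
        if (entry.endsWith("/")) {
            return jar.stream().anyMatch(e -> e.getName().startsWith(entry));
        }
        return jar.getEntry(entry) != null;
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 1) {
            System.err.println("usage: java BundleClassPathCheck <plugin.jar>");
            return;
        }
        try (JarFile jar = new JarFile(args[0])) {
            Manifest mf = jar.getManifest();
            String header = (mf == null) ? null : mf.getMainAttributes().getValue("Bundle-ClassPath");
            if (header == null) {
                System.out.println("No Bundle-ClassPath header in the manifest");
                return;
            }
            for (String entry : entries(header)) {
                System.out.println(entry + (present(jar, entry) ? "" : "   <-- missing from the jar"));
            }
        }
    }
}
```

An entry reported as missing is a likely candidate for the "class not found" errors.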

2、插件参数配置

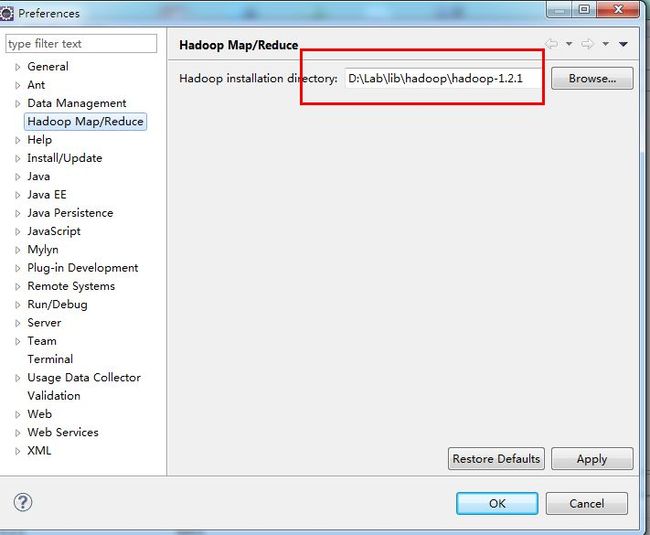

2.1、Eclipse配置

配置本地hadoop路径

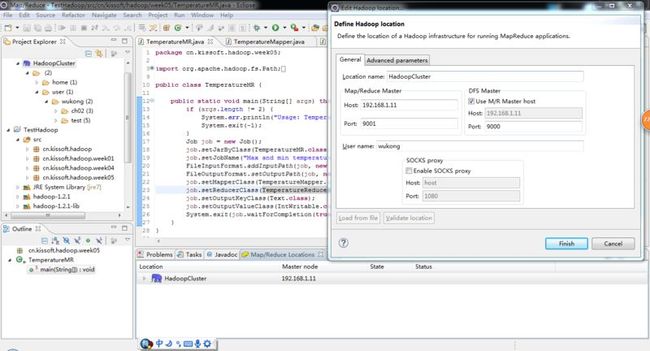

2.2、插件配置-General

2.3、Advanced parameters

| Parameter | Value | Reference |
| --- | --- | --- |
| fs.default.name | hdfs://bd11:9000 | see core-site.xml |
| hadoop.tmp.dir | /home/wukong/usr/hadoop-tmp | see core-site.xml |
| mapred.job.tracker | bd11:9001 | see mapred-site.xml |
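The plugin values must match the cluster's own *-site.xml files. A small JDK-only sketch (class name hypothetical) that dumps the name/value pairs of a copy of core-site.xml or mapred-site.xml taken from bd11, so they can be compared against the table above; without an argument it parses a built-in sample.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

public class SiteXmlReader {
    /** Parses <configuration><property><name/><value/></property>...</configuration>. */
    public static Map<String, String> parse(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
            Map<String, String> props = new LinkedHashMap<>();
            NodeList nodes = doc.getElementsByTagName("property");
            for (int i = 0; i < nodes.getLength(); i++) {
                Element p = (Element) nodes.item(i);
                String name = childText(p, "name");
                if (name != null) {
                    props.put(name, childText(p, "value"));
                }
            }
            return props;
        } catch (Exception e) {
            throw new IllegalArgumentException("not a valid Hadoop site XML", e);
        }
    }

    private static String childText(Element parent, String tag) {
        NodeList list = parent.getElementsByTagName(tag);
        return list.getLength() == 0 ? null : list.item(0).getTextContent().trim();
    }

    public static void main(String[] args) throws IOException {
        // args[0]: path to a local copy of core-site.xml or mapred-site.xml.
        String xml = args.length > 0
            ? new String(Files.readAllBytes(Paths.get(args[0])), StandardCharsets.UTF_8)
            : "<configuration><property><name>fs.default.name</name>"
              + "<value>hdfs://bd11:9000</value></property></configuration>";
        parse(xml).forEach((k, v) -> System.out.println(k + " = " + v));
    }
}
```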

2.4. Possible errors

If the settings above are not configured correctly, the following errors may be reported.

3. Running

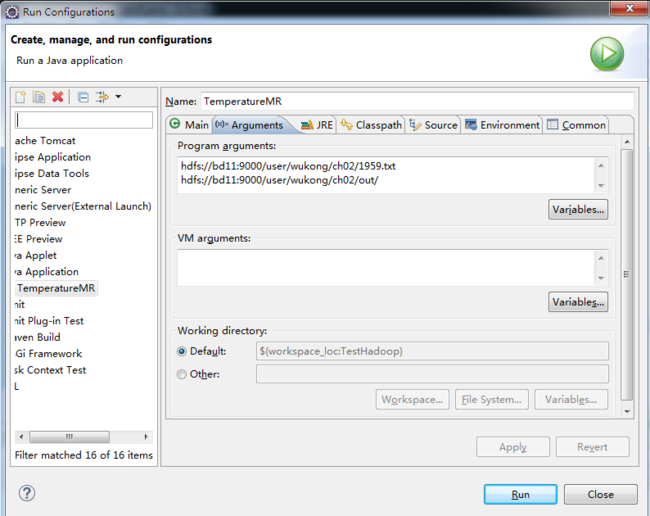

3.1. Run arguments

Yes, you can also hard-code the arguments instead.
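A minimal sketch of hard-coding with a fallback, assuming the driver takes an input and an output path: arguments from the Eclipse run configuration win when present, otherwise the built-in defaults are used. The class name and both HDFS paths are hypothetical placeholders, not taken from the original setup.

```java
public class JobArgs {
    // Hypothetical defaults on the bd11 NameNode; replace with your own HDFS paths.
    static final String DEFAULT_INPUT = "hdfs://bd11:9000/user/wukong/input";
    static final String DEFAULT_OUTPUT = "hdfs://bd11:9000/user/wukong/output";

    /** Returns {input, output}: from args when two are given, otherwise the defaults. */
    public static String[] resolve(String[] args) {
        if (args != null && args.length >= 2) {
            return new String[] {args[0], args[1]};
        }
        return new String[] {DEFAULT_INPUT, DEFAULT_OUTPUT};
    }

    public static void main(String[] args) {
        String[] paths = resolve(args);
        // In a real driver these would go to FileInputFormat.addInputPath
        // and FileOutputFormat.setOutputPath.
        System.out.println("input  = " + paths[0]);
        System.out.println("output = " + paths[1]);
    }
}
```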

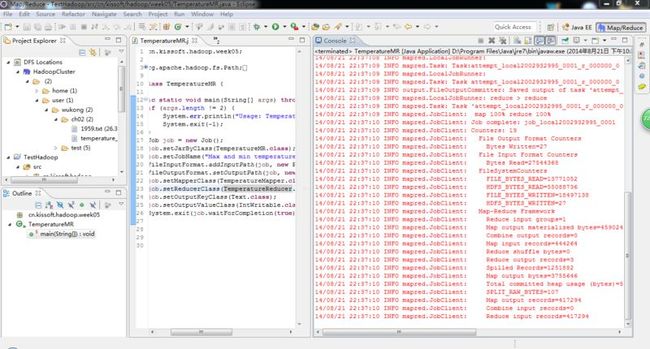

3.2. Run process

3.3. Output files

4. Possible errors

4.1. Opening, refreshing or creating a directory does nothing and reports no error

This is probably caused by wrong jar references or by wrong values for the parameters configured above.
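When the DFS tree silently does nothing, one quick thing to rule out is basic reachability of the NameNode and JobTracker addresses from section 2.3. The JDK-only sketch below (class name hypothetical, not part of the plugin) tries a plain TCP connection to bd11:9000 and bd11:9001; it assumes the Windows machine can resolve bd11, for example through its hosts file.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

public class PortCheck {
    /** Returns true if a TCP connection to host:port succeeds within timeoutMillis. */
    public static boolean reachable(String host, int port, int timeoutMillis) {
        try (Socket s = new Socket()) {
            s.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Covers unknown hosts, refused connections and timeouts.
            return false;
        }
    }

    public static void main(String[] args) {
        // fs.default.name and mapred.job.tracker from the Advanced parameters table.
        String[][] targets = {{"bd11", "9000"}, {"bd11", "9001"}};
        for (String[] t : targets) {
            boolean ok = reachable(t[0], Integer.parseInt(t[1]), 3000);
            System.out.println(t[0] + ":" + t[1] + (ok ? " reachable" : " NOT reachable"));
        }
    }
}
```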

4.2. Running a MapReduce program throws an exception because the server's IPC version differs from the client's

Server IPC version 9 cannot communicate with client version 4

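This happens when the version of the Hadoop jars referenced by the project does not match the version running on the server. IPC version 9 is spoken by Hadoop 2.x servers and version 4 by Hadoop 1.x clients, so here 1.x client jars are talking to a 2.x cluster.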
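To see which Hadoop jars actually end up on the classpath of the program Eclipse launches, a JDK-only sketch can scan `java.class.path` for file names such as hadoop-core-1.2.1.jar. The class name is hypothetical, and the file-name pattern is an assumption: renamed jars will not be recognized.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class HadoopJarVersions {
    // File names such as hadoop-core-1.2.1.jar or hadoop-common-2.4.1.jar.
    private static final Pattern HADOOP_JAR =
        Pattern.compile("(hadoop-[a-z-]+?)-(\\d+(?:\\.\\d+)*)\\.jar");

    /** Returns "artifact version" for every hadoop-*.jar entry in a classpath string. */
    public static List<String> find(String classpath) {
        List<String> found = new ArrayList<>();
        for (String entry : classpath.split(Pattern.quote(File.pathSeparator))) {
            Matcher m = HADOOP_JAR.matcher(new File(entry).getName());
            if (m.matches()) {
                found.add(m.group(1) + " " + m.group(2));
            }
        }
        return found;
    }

    public static void main(String[] args) {
        List<String> jars = find(System.getProperty("java.class.path"));
        if (jars.isEmpty()) {
            System.out.println("No hadoop-*.jar found on the classpath");
        }
        jars.forEach(System.out::println);
    }
}
```

Run it from the same Eclipse project; the printed versions should match the cluster's Hadoop version.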

4.3. Permission problem 1

The problem:

    14/08/21 21:39:29 ERROR security.UserGroupInformation: PriviledgedActionException as:zhaiph cause:java.io.IOException: Failed to set permissions of path: tmphadoop-xxx.staging to 0700
    Exception in thread "main" java.io.IOException: Failed to set permissions of path: tmphadoop-xxx.staging to 0700
        at org.apache.hadoop.fs.FileUtil.checkReturnValue(FileUtil.java:691)
        at org.apache.hadoop.fs.FileUtil.setPermission(FileUtil.java:664)
        at org.apache.hadoop.fs.RawLocalFileSystem.setPermission(RawLocalFileSystem.java:514)
        at org.apache.hadoop.fs.RawLocalFileSystem.mkdirs(RawLocalFileSystem.java:349)
        at org.apache.hadoop.fs.FilterFileSystem.mkdirs(FilterFileSystem.java:193)
        at org.apache.hadoop.mapreduce.JobSubmissionFiles.getStagingDir(JobSubmissionFiles.java:126)
        at org.apache.hadoop.mapred.JobClient$2.run(JobClient.java:942)
        at org.apache.hadoop.mapred.JobClient$2.run(JobClient.java:936)
        at java.security.AccessController.doPrivileged(Native Method)
        at javax.security.auth.Subject.doAs(Subject.java:415)
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1190)
        at org.apache.hadoop.mapred.JobClient.submitJobInternal(JobClient.java:936)
        at org.apache.hadoop.mapreduce.Job.submit(Job.java:550)
        at org.apache.hadoop.mapreduce.Job.waitForCompletion(Job.java:580)
        at MaxTemperature.main(MaxTemperature.java:36)

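Solution:

The problem lies in hadoop-core-1.2.1.jar. There are two ways around it: rebuild the jar, or modify the source file org.apache.hadoop.fs.FileUtil.java (for example, by adding a patched copy of that class, in the same package, to your own project so that it shadows the class in the jar). The modified method: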

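    private static void checkReturnValue(boolean rv, File p, FsPermission permission)
            throws IOException {
        // Check disabled: on Windows the pure-Java change to 0700 reports failure,
        // which aborted job submission with "Failed to set permissions of path".
        // if (!rv) {
        //     throw new IOException("Failed to set permissions of path: " + p +
        //             " to " +
        //             String.format("%04o", permission.toShort()));
        // }
    }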
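As far as I can tell from the Hadoop 1.x source, FileUtil.setPermission applies a mode such as 0700 through java.io.File's setReadable/setWritable/setExecutable and hands each boolean result to checkReturnValue, which matches the stack trace above. The File javadoc says setReadable(false, ...) fails when the file system does not implement a read permission, which is presumably what happens on Windows. The JDK-only sketch below (class and method names are hypothetical) replays roughly that call sequence on a throwaway directory so you can see which call returns false on your machine.

```java
import java.io.File;
import java.util.LinkedHashMap;
import java.util.Map;

public class PermissionProbe {
    /**
     * Approximates a pure-Java chmod 0700: for read, write and execute in turn,
     * clear the bit for everybody, then grant it back to the owner only.
     * Records whether each java.io.File call reported success.
     */
    public static Map<String, Boolean> probe0700(File f) {
        Map<String, Boolean> results = new LinkedHashMap<>();
        results.put("setReadable(false, false)", f.setReadable(false, false));
        results.put("setReadable(true, true)", f.setReadable(true, true));
        results.put("setWritable(false, false)", f.setWritable(false, false));
        results.put("setWritable(true, true)", f.setWritable(true, true));
        results.put("setExecutable(false, false)", f.setExecutable(false, false));
        results.put("setExecutable(true, true)", f.setExecutable(true, true));
        return results;
    }

    public static void main(String[] args) {
        // A throwaway directory standing in for the job staging directory.
        File dir = new File(System.getProperty("java.io.tmpdir"), "staging-probe-" + System.nanoTime());
        if (!dir.mkdir()) {
            System.err.println("Could not create " + dir);
            return;
        }
        try {
            probe0700(dir).forEach((call, ok) ->
                System.out.println(call + " -> " + (ok ? "ok" : "returned false")));
        } finally {
            dir.delete();
        }
    }
}
```

If the explanation holds, setReadable(false, false) should report false on Windows, while on Linux the owner normally sees every call succeed.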

4.4. Permission problem 2

Error description:

    14/08/21 21:43:12 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    14/08/21 21:43:12 WARN mapred.JobClient: Use GenericOptionsParser for parsing the arguments. Applications should implement Tool for the same.
    14/08/21 21:43:12 WARN mapred.JobClient: No job jar file set. User classes may not be found. See JobConf(Class) or JobConf#setJar(String).
    14/08/21 21:43:12 INFO input.FileInputFormat: Total input paths to process : 1
    14/08/21 21:43:12 WARN snappy.LoadSnappy: Snappy native library not loaded
    14/08/21 21:43:13 INFO mapred.JobClient: Running job: job_local1395395251_0001
    14/08/21 21:43:13 WARN mapred.LocalJobRunner: job_local1395395251_0001
    org.apache.hadoop.security.AccessControlException: org.apache.hadoop.security.AccessControlException: Permission denied: user=Johnson, access=WRITE, inode="ch02":wukong:supergroup:rwxr-xr-x
        at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
        at sun.reflect.NativeConstructorAccessorImpl.newInstance(Unknown Source)
        at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(Unknown Source)
        at java.lang.reflect.Constructor.newInstance(Unknown Source)
        at org.apache.hadoop.ipc.RemoteException.instantiateException(RemoteException.java:95)
        at org.apache.hadoop.ipc.RemoteException.unwrapRemoteException(RemoteException.java:57)
        at org.apache.hadoop.hdfs.DFSClient.mkdirs(DFSClient.java:1459)
        at org.apache.hadoop.hdfs.DistributedFileSystem.mkdirs(DistributedFileSystem.java:362)
        at org.apache.hadoop.fs.FileSystem.mkdirs(FileSystem.java:1161)
        at org.apache.hadoop.mapred.FileOutputCommitter.setupJob(FileOutputCommitter.java:52)
        at org.apache.hadoop.mapred.LocalJobRunner$Job.run(LocalJobRunner.java:319)
    Caused by: org.apache.hadoop.ipc.RemoteException: org.apache.hadoop.security.AccessControlException: Permission denied: user=Johnson, access=WRITE, inode="ch02":wukong:supergroup:rwxr-xr-x
        at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.check(FSPermissionChecker.java:217)
        at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.check(FSPermissionChecker.java:197)
        at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:141)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkPermission(FSNamesystem.java:5758)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkAncestorAccess(FSNamesystem.java:5731)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirsInternal(FSNamesystem.java:2502)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirs(FSNamesystem.java:2469)
        at org.apache.hadoop.hdfs.server.namenode.NameNode.mkdirs(NameNode.java:911)
        at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
        at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:606)
        at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:587)
        at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:1432)
        at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:1428)
        at java.security.AccessController.doPrivileged(Native Method)
        at javax.security.auth.Subject.doAs(Subject.java:415)
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1190)
        at org.apache.hadoop.ipc.Server$Handler.run(Server.java:1426)
        at org.apache.hadoop.ipc.Client.call(Client.java:1113)
        at org.apache.hadoop.ipc.RPC$Invoker.invoke(RPC.java:229)
        at com.sun.proxy.$Proxy1.mkdirs(Unknown Source)
        at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
        at sun.reflect.NativeMethodAccessorImpl.invoke(Unknown Source)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(Unknown Source)
        at java.lang.reflect.Method.invoke(Unknown Source)
        at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:85)
        at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:62)
        at com.sun.proxy.$Proxy1.mkdirs(Unknown Source)
        at org.apache.hadoop.hdfs.DFSClient.mkdirs(DFSClient.java:1457)
        ... 4 more
    14/08/21 21:43:14 INFO mapred.JobClient: map 0% reduce 0%
    14/08/21 21:43:14 INFO mapred.JobClient: Job complete: job_local1395395251_0001
    14/08/21 21:43:14 INFO mapred.JobClient: Counters: 0

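Alternatively, a node in the DFS directory tree in Eclipse may directly report a permission error.

Solution: add the following property to hdfs-site.xml so that HDFS does not check permissions. The NameNode reads this setting, so restart it afterwards.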

    <property>
      <name>dfs.permissions</name>
      <value>false</value>
    </property>
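The error text already shows why the NameNode refused the write: user=Johnson asked for WRITE on ch02, which is owned by wukong:supergroup with mode rwxr-xr-x. The JDK-only sketch below is a simplified model of the owner/group/other check (it ignores the HDFS superuser and the sticky bit), with hypothetical class and method names. Setting dfs.permissions to false makes the NameNode skip this check altogether, so that change is best kept to a test setup.

```java
import java.util.Collections;
import java.util.Set;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class HdfsWriteCheck {
    // Matches the inode="path":owner:group:mode part of an HDFS "Permission denied" message.
    private static final Pattern INODE =
        Pattern.compile("inode=\"([^\"]*)\":([^:]+):([^:]+):([rwx-]{9})");

    /** Returns {path, owner, group, mode}, or null if the message has no inode part. */
    public static String[] parseInode(String message) {
        Matcher m = INODE.matcher(message);
        return m.find() ? new String[] {m.group(1), m.group(2), m.group(3), m.group(4)} : null;
    }

    /** Picks the rwx triple that applies to the user and tests its 'w' position. */
    public static boolean canWrite(String user, Set<String> userGroups,
                                   String owner, String group, String mode) {
        int offset;
        if (user.equals(owner)) {
            offset = 0;                 // owner bits
        } else if (userGroups.contains(group)) {
            offset = 3;                 // group bits
        } else {
            offset = 6;                 // bits for everybody else
        }
        return mode.charAt(offset + 1) == 'w';
    }

    public static void main(String[] args) {
        String msg = "Permission denied: user=Johnson, access=WRITE, "
            + "inode=\"ch02\":wukong:supergroup:rwxr-xr-x";
        String[] inode = parseInode(msg);
        // Assumes Johnson is not a member of supergroup on the NameNode.
        boolean ok = canWrite("Johnson", Collections.<String>emptySet(), inode[1], inode[2], inode[3]);
        System.out.println("Johnson may write under " + inode[0] + ": " + ok);
    }
}
```

Run as-is, it prints `Johnson may write under ch02: false`: Johnson falls into the "other" class, whose bits r-x contain no w.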

4.5. On Windows 7, execution fails because winutils.exe cannot be found

The error:

    2015-02-05 15:19:43,080 WARN [main] util.NativeCodeLoader (NativeCodeLoader.java:<clinit>(62)) - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    2015-02-05 15:19:44,257 ERROR [main] util.Shell (Shell.java:getWinUtilsPath(336)) - Failed to locate the winutils binary in the hadoop binary path
    java.io.IOException: Could not locate executable D:\Lab\lib\hadoop\hadoop-2.4.1\bin\winutils.exe in the Hadoop binaries.
        at org.apache.hadoop.util.Shell.getQualifiedBinPath(Shell.java:318)
        at org.apache.hadoop.util.Shell.getWinUtilsPath(Shell.java:333)
        at org.apache.hadoop.util.Shell.<clinit>(Shell.java:326)
        at org.apache.hadoop.util.StringUtils.<clinit>(StringUtils.java:76)
        at org.apache.hadoop.security.Groups.parseStaticMapping(Groups.java:93)
        at org.apache.hadoop.security.Groups.<init>(Groups.java:77)
        at org.apache.hadoop.security.Groups.getUserToGroupsMappingService(Groups.java:240)
        at org.apache.hadoop.security.UserGroupInformation.initialize(UserGroupInformation.java:255)
        at org.apache.hadoop.security.UserGroupInformation.ensureInitialized(UserGroupInformation.java:232)
        at org.apache.hadoop.security.UserGroupInformation.loginUserFromSubject(UserGroupInformation.java:718)
        at org.apache.hadoop.security.UserGroupInformation.getLoginUser(UserGroupInformation.java:703)
        at org.apache.hadoop.security.UserGroupInformation.getCurrentUser(UserGroupInformation.java:605)
        at org.apache.hadoop.fs.FileSystem$Cache$Key.<init>(FileSystem.java:2554)
        at org.apache.hadoop.fs.FileSystem$Cache$Key.<init>(FileSystem.java:2546)
        at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:2412)
        at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:368)
        at wukong.hadoop.tools.HdfsTool.rm(HdfsTool.java:26)
        at sample.FlowMR.main(FlowMR.java:21)
    2015-02-05 15:19:51,141 INFO [main] Configuration.deprecation (Configuration.java:warnOnceIfDeprecated(1009)) - session.id is deprecated. Instead, use dfs.metrics.session-id
    2015-02-05 15:19:51,154 INFO [main] jvm.JvmMetrics (JvmMetrics.java:init(76)) - Initializing JVM Metrics with processName=JobTracker, sessionId=

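Cause: the file simply isn't there. A copy can be downloaded from https://github.com/srccodes/hadoop-common-2.2.0-bin; place winutils.exe in the bin directory named in the error message.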
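To my understanding, the Hadoop 2.x Shell class looks for winutils.exe in the bin directory of the Hadoop home, taken from the `hadoop.home.dir` system property or, failing that, the `HADOOP_HOME` environment variable. The JDK-only sketch below (class name hypothetical) resolves the path the same way so you can check it before launching the job. A commonly used alternative is calling `System.setProperty("hadoop.home.dir", ...)` at the top of `main`, before any Hadoop class is loaded, since the stack trace shows the lookup happening in `Shell.<clinit>`.

```java
import java.io.File;

public class WinutilsCheck {
    /** The hadoop.home.dir system property first, then the HADOOP_HOME environment variable. */
    public static String hadoopHome() {
        String home = System.getProperty("hadoop.home.dir");
        if (home == null) {
            home = System.getenv("HADOOP_HOME");
        }
        return home;
    }

    /** <home>\bin\winutils.exe, the location named in the error message. */
    public static File winutilsPath(String home) {
        return new File(new File(home, "bin"), "winutils.exe");
    }

    public static void main(String[] args) {
        String home = hadoopHome();
        if (home == null) {
            System.out.println("Neither hadoop.home.dir nor HADOOP_HOME is set");
            return;
        }
        File exe = winutilsPath(home);
        System.out.println(exe + (exe.isFile() ? " found" : " is missing"));
    }
}
```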

5. Further references

http://www.cnblogs.com/huligong1234/p/4137133.html

http://www.micmiu.com/bigdata/hadoop/hadoop2-x-eclipse-plugin-build-install/

http://blog.csdn.net/poisonchry/article/details/27535333

http://www.cnblogs.com/zxub/p/3884030.html

Note: none of the articles on building the plugin have been verified.