一、基础配置1、配置主机名[root@ecs-cd34~]hostnamectlset-hostnamenode1[root@ecs-cd34~]execbash#主机名生效2、配置hosts解析(根据自己的服务器id修改)cat>>/etc/hosts/etc/docker/daemon.json<

存储集群消除pg数量过多的告警

大 大金

ceph

[root@xxxxxxxxxxxxxx~]#ceph-scluster334cfe7e-9ccc-483d-8d2c-218fde3a5fdehealthHEALTH_WARNtoomanyPGsperOSD(307>max300)nodeep-scrubflag(s)setmonmape1:3monsat{node1=100.88.28.11:6789/0,node2=100.88.28.12

linux搭建ceph集群

浓黑的daidai

linuxceph服务器

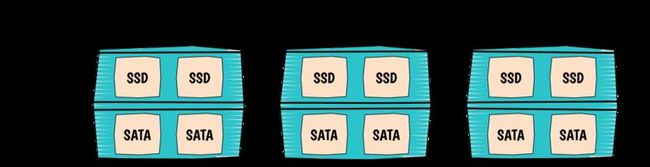

linux三节点搭建ceph集群主机IP主机名称172.26.50.75node1172.26.50.112node2172.26.50.228node3ceph-mon,ceph-mgr,ceph-mds都搭建在node1上,node2和node3上搭建ceph-osd,每个机器1个osdCeph是一个分布式的存储系统,可以在统一的系统中提供唯一的对象、块和文件存储,Ceph的大致组件如下:1.

3. ceph-mimic版本部署

Martin_wjc

7存储cephchrome前端

ceph-mimic版本部署一、ceph-mimic版本部署1、环境规划2、系统基础环境准备2.1关闭防火墙、SELinux2.2确保所有主机时间同步2.3所有主机ssh免密2.4添加所有主机解析3、配置ceph软件仓库4、安装ceph-deploy工具5、ceph集群初始化6、所有ceph集群节点安装相关软件7、客户端安装ceph-common软件8、在ceph集群中创建cephmonitor组

upmap的存储池osd坏盘处理问题

奋斗的松鼠

ceph

写在前面喜欢ceph的话欢迎关注奋斗的cepher微信公众号阅读更多好文!在《坏盘处理时osd为什么不要rm》文章中,松鼠哥对比了多组各种osd处理与数据的情况,有一个细节,那就是如果osd在重建前后要保持pg映射的一致性,那么存储池做均衡使用的是crush-compat模式,同时有读者老铁留言,说当存储池使用了upmap模式做存储池均衡的话,osd重建前后将不能保持相同的pg映射。因为松鼠哥对存

【从问题中去学习k8s】k8s中的常见面试题(夯实理论基础)(二十四)

向往风的男子

k8s学习kubernetes容器

本站以分享各种运维经验和运维所需要的技能为主《python零基础入门》:python零基础入门学习《python运维脚本》:python运维脚本实践《shell》:shell学习《terraform》持续更新中:terraform_Aws学习零基础入门到最佳实战《k8》从问题中去学习k8s《docker学习》暂未更新《ceph学习》ceph日常问题解决分享《日志收集》ELK+各种中间件《运维日常》

【从问题中去学习k8s】k8s中的常见面试题(夯实理论基础)(十九)

向往风的男子

k8s学习kubernetes容器

本站以分享各种运维经验和运维所需要的技能为主《python零基础入门》:python零基础入门学习《python运维脚本》:python运维脚本实践《shell》:shell学习《terraform》持续更新中:terraform_Aws学习零基础入门到最佳实战《k8》从问题中去学习k8s《docker学习》暂未更新《ceph学习》ceph日常问题解决分享《日志收集》ELK+各种中间件《运维日常》

【从问题中去学习k8s】k8s中的常见面试题(夯实理论基础)(二)

向往风的男子

k8s学习kubernetes容器

本站以分享各种运维经验和运维所需要的技能为主《python零基础入门》:python零基础入门学习《python运维脚本》:python运维脚本实践《shell》:shell学习《terraform》持续更新中:terraform_Aws学习零基础入门到最佳实战《k8》从问题中去学习k8s《docker学习》暂未更新《ceph学习》ceph日常问题解决分享《日志收集》ELK+各种中间件《运维日常》

【从问题中去学习k8s】k8s中的常见面试题(夯实理论基础)(二十三)

向往风的男子

k8s学习kubernetes容器

本站以分享各种运维经验和运维所需要的技能为主《python零基础入门》:python零基础入门学习《python运维脚本》:python运维脚本实践《shell》:shell学习《terraform》持续更新中:terraform_Aws学习零基础入门到最佳实战《k8》从问题中去学习k8s《docker学习》暂未更新《ceph学习》ceph日常问题解决分享《日志收集》ELK+各种中间件《运维日常》

ceph rgw:bucket policy实现

牛牛Blog

Cephcephrgwbucketpolicy实现

cephrgw:bucketpolicy实现相比于aws,rgw的bucketpolicy实现的还不是很完善,有很多细节都不支持,并且已支持的特性也在很多细节方面与s3不同,尤其是因为rgw不支持类似s3的accountuser结构,而使用tenant作为替代而导致的一些不同。并且在文档中还提及,为了修正这种不同,以及支持更多特性,在不久后会重写rgw的Authentication/Authori

ceph rgw java_ceph rgw multisite基本用法

weixin_39587113

cephrgwjava

Realm:Zonegroup:理解为数据中心,由一个或多个Zone组成,每个Realm有且仅有一个MasterZonegroup,用于处理系统变更,其他的称为SlaveZonegroup,元数据与MasterZonegroup保持一致;Zone:Zone是一个逻辑概念,包含一个或者多个RGW实例。每个Zonegroup有且仅有一个MasterZone,用于处理bucket和user等元数据变更。

一文读懂CEPH RGW基本原理

shichungang

ceph分布式大数据云计算

一文读懂CEPHRGW基本原理一、RGW简介二、RGW的组成结构三、Rgw用户信息四、BUCKET与对象索引信息五、RGW对象与RADOS对象的关系六、上传对象的处理流程七、RGW的双活机制八、RGW版本管理机制与CLS机制九、结语本文从RGW的基本原理出发,从整体上描述RGW的框架结构,突出关键结构之间的关联关系,从基础代码分析关键环节的实现细节,以达到清晰说明RGW模块“骨架”的效果。一、RG

【mysql】mysql之存储引擎学习

向往风的男子

DBAmysql学习数据库

本站以分享各种运维经验和运维所需要的技能为主《python零基础入门》:python零基础入门学习《python运维脚本》:python运维脚本实践《shell》:shell学习《terraform》持续更新中:terraform_Aws学习零基础入门到最佳实战《k8》从问题中去学习k8s《docker学习》暂未更新《ceph学习》ceph日常问题解决分享《日志收集》ELK+各种中间件《运维日常》

【ceph学习】ceph如何进行数据的读写(2)

陶二先生

cephosd

本章摘要上文说到,librados/IoctxImpl.cc中调用objecter_op和objecter的op_submit函数,进行op请求的封装、加参和提交。本文详细介绍相关函数的调用。osdc中的操作初始化Op对象,提交请求设置Op对象的时间,oid,操作类型等信息。//osdc/Objector.h//mid-levelhelpersOp*prepare_mutate_op(consto

【ceph学习】ceph如何进行数据的读写(3)

陶二先生

cephosdmessenger

本章摘要上文说到,osdc中封装请求,使用message中的相关机制将请求发送出去。本文详细介绍osd服务端如何进行请求的接收。osd初始化osd启动时,定义了message变量ms_public,该变量绑定public网络,负责接收客户端的请求。ms_public会启动对应的线程进行接收,并指定接收函数。//ceph_osd.ccMessenger*ms_public=Messenger::cr

云原生应用(7)之Docker容器数据持久化存储机制

技术路上的苦行僧

云原生应用与架构设计云原生docker容器docker容器数据持久化

一、Docker容器数据持久化存储介绍物理机或虚拟机数据持久化存储由于物理机或虚拟机本身就拥有大容量的磁盘,所以可以直接把数据存储在物理机或虚拟机本地文件系统中,亦或者也可以通过使用额外的存储系统(NFS、GlusterFS、Ceph等)来完成数据持久化存储。Docker容器数据持久化存储由于Docker容器是由容器镜像生成的,所以一般容器镜像中包含什么文件或目录,在容器启动后,我们依旧可以看到相

Openstack 与 Ceph集群搭建(下): Openstack部署

范枝洲

系统运维openstackceph

文章目录文章参考部署节点准备1.修改Host文件与hostname名称2.安装NTP软件3.网卡配置信息4.开启Docker共享挂载5.安装python虚拟环境6.安装kolla-ansible7.加载AnsiblegalaxyrequirementsOpenstack安装前预配置1.配置密码2.配置multinode文件3.修改全局配置文件Openstack正式安装1.启动bootstrap-s

学习笔记六:ceph介绍以及初始化配置

风车带走过往

K8S相关应用学习笔记ceph

k8s对接cephceph是一种开源的分布式的存储系统,包含以下几种存储类型:块存储(rbd)文件系统cephfs对象存储分布式存储的优点:Ceph核心组件介绍安装Ceph集群初始化配置Ceph安装源安装基础软件包安装ceph集群安装ceph-deploy创建monitor节点修改ceph配置文件配置初始monitor、收集所有的密钥部署osd服务创建ceph文件系统ceph是一种开源的分布式的存

云原生存储解决方案

爱技术的小伙子

云原生

云原生存储解决方案使用Rook、Ceph等工具进行云原生存储管理云原生存储简介什么是云原生存储云原生存储是指设计用于云原生环境中的存储解决方案,通常在容器化平台如Kubernetes上运行。它提供了高可用性、弹性、可扩展性和自动化管理等特性,满足现代应用的存储需求。云原生存储的重要性动态环境支持:云原生存储能够适应容器化应用的动态变化,提供灵活的存储资源管理。高可用性和持久性:确保数据在容器重启或

【从问题中去学习k8s】k8s中的常见面试题(夯实理论基础)(十二)

向往风的男子

k8s学习kubernetes容器

本站以分享各种运维经验和运维所需要的技能为主《python零基础入门》:python零基础入门学习《python运维脚本》:python运维脚本实践《shell》:shell学习《terraform》持续更新中:terraform_Aws学习零基础入门到最佳实战《k8》从问题中去学习k8s《docker学习》暂未更新《ceph学习》ceph日常问题解决分享《日志收集》ELK+各种中间件《运维日常》

【mysql】mysql之数据操作语言(insert、delete、update)

向往风的男子

DBAmysql数据库

本站以分享各种运维经验和运维所需要的技能为主《python零基础入门》:python零基础入门学习《python运维脚本》:python运维脚本实践《shell》:shell学习《terraform》持续更新中:terraform_Aws学习零基础入门到最佳实战《k8》从问题中去学习k8s《docker学习》暂未更新《ceph学习》ceph日常问题解决分享《日志收集》ELK+各种中间件《运维日常》

【从问题中去学习k8s】k8s中的常见面试题(夯实理论基础)(十五)

向往风的男子

k8s学习kubernetes容器

本站以分享各种运维经验和运维所需要的技能为主《python零基础入门》:python零基础入门学习《python运维脚本》:python运维脚本实践《shell》:shell学习《terraform》持续更新中:terraform_Aws学习零基础入门到最佳实战《k8》从问题中去学习k8s《docker学习》暂未更新《ceph学习》ceph日常问题解决分享《日志收集》ELK+各种中间件《运维日常》

【从问题中去学习k8s】k8s中的常见面试题(夯实理论基础)(十)

向往风的男子

k8s学习kubernetes容器

本站以分享各种运维经验和运维所需要的技能为主《python零基础入门》:python零基础入门学习《python运维脚本》:python运维脚本实践《shell》:shell学习《terraform》持续更新中:terraform_Aws学习零基础入门到最佳实战《k8》从问题中去学习k8s《docker学习》暂未更新《ceph学习》ceph日常问题解决分享《日志收集》ELK+各种中间件《运维日常》

【从问题中去学习k8s】k8s中的常见面试题(夯实理论基础)(六)

向往风的男子

k8s学习kubernetes容器

本站以分享各种运维经验和运维所需要的技能为主《python零基础入门》:python零基础入门学习《python运维脚本》:python运维脚本实践《shell》:shell学习《terraform》持续更新中:terraform_Aws学习零基础入门到最佳实战《k8》从问题中去学习k8s《docker学习》暂未更新《ceph学习》ceph日常问题解决分享《日志收集》ELK+各种中间件《运维日常》

xml解析

小猪猪08

xml

1、DOM解析的步奏

准备工作:

1.创建DocumentBuilderFactory的对象

2.创建DocumentBuilder对象

3.通过DocumentBuilder对象的parse(String fileName)方法解析xml文件

4.通过Document的getElem

每个开发人员都需要了解的一个SQL技巧

brotherlamp

linuxlinux视频linux教程linux自学linux资料

对于数据过滤而言CHECK约束已经算是相当不错了。然而它仍存在一些缺陷,比如说它们是应用到表上面的,但有的时候你可能希望指定一条约束,而它只在特定条件下才生效。

使用SQL标准的WITH CHECK OPTION子句就能完成这点,至少Oracle和SQL Server都实现了这个功能。下面是实现方式:

CREATE TABLE books (

id &

Quartz——CronTrigger触发器

eksliang

quartzCronTrigger

转载请出自出处:http://eksliang.iteye.com/blog/2208295 一.概述

CronTrigger 能够提供比 SimpleTrigger 更有具体实际意义的调度方案,调度规则基于 Cron 表达式,CronTrigger 支持日历相关的重复时间间隔(比如每月第一个周一执行),而不是简单的周期时间间隔。 二.Cron表达式介绍 1)Cron表达式规则表

Quartz

Informatica基础

18289753290

InformaticaMonitormanagerworkflowDesigner

1.

1)PowerCenter Designer:设计开发环境,定义源及目标数据结构;设计转换规则,生成ETL映射。

2)Workflow Manager:合理地实现复杂的ETL工作流,基于时间,事件的作业调度

3)Workflow Monitor:监控Workflow和Session运行情况,生成日志和报告

4)Repository Manager:

linux下为程序创建启动和关闭的的sh文件,scrapyd为例

酷的飞上天空

scrapy

对于一些未提供service管理的程序 每次启动和关闭都要加上全部路径,想到可以做一个简单的启动和关闭控制的文件

下面以scrapy启动server为例,文件名为run.sh:

#端口号,根据此端口号确定PID

PORT=6800

#启动命令所在目录

HOME='/home/jmscra/scrapy/'

#查询出监听了PORT端口

人--自私与无私

永夜-极光

今天上毛概课,老师提出一个问题--人是自私的还是无私的,根源是什么?

从客观的角度来看,人有自私的行为,也有无私的

Ubuntu安装NS-3 环境脚本

随便小屋

ubuntu

将附件下载下来之后解压,将解压后的文件ns3environment.sh复制到下载目录下(其实放在哪里都可以,就是为了和我下面的命令相统一)。输入命令:

sudo ./ns3environment.sh >>result

这样系统就自动安装ns3的环境,运行的结果在result文件中,如果提示

com

创业的简单感受

aijuans

创业的简单感受

2009年11月9日我进入a公司实习,2012年4月26日,我离开a公司,开始自己的创业之旅。

今天是2012年5月30日,我忽然很想谈谈自己创业一个月的感受。

当初离开边锋时,我就对自己说:“自己选择的路,就是跪着也要把他走完”,我也做好了心理准备,准备迎接一次次的困难。我这次走出来,不管成败

如何经营自己的独立人脉

aoyouzi

如何经营自己的独立人脉

独立人脉不是父母、亲戚的人脉,而是自己主动投入构造的人脉圈。“放长线,钓大鱼”,先行投入才能产生后续产出。 现在几乎做所有的事情都需要人脉。以银行柜员为例,需要拉储户,而其本质就是社会人脉,就是社交!很多人都说,人脉我不行,因为我爸不行、我妈不行、我姨不行、我舅不行……我谁谁谁都不行,怎么能建立人脉?我这里说的人脉,是你的独立人脉。 以一个普通的银行柜员

JSP基础

百合不是茶

jsp注释隐式对象

1,JSP语句的声明

<%! 声明 %> 声明:这个就是提供java代码声明变量、方法等的场所。

表达式 <%= 表达式 %> 这个相当于赋值,可以在页面上显示表达式的结果,

程序代码段/小型指令 <% 程序代码片段 %>

2,JSP的注释

<!-- -->

web.xml之session-config、mime-mapping

bijian1013

javaweb.xmlservletsession-configmime-mapping

session-config

1.定义:

<session-config>

<session-timeout>20</session-timeout>

</session-config>

2.作用:用于定义整个WEB站点session的有效期限,单位是分钟。

mime-mapping

1.定义:

<mime-m

互联网开放平台(1)

Bill_chen

互联网qq新浪微博百度腾讯

现在各互联网公司都推出了自己的开放平台供用户创造自己的应用,互联网的开放技术欣欣向荣,自己总结如下:

1.淘宝开放平台(TOP)

网址:http://open.taobao.com/

依赖淘宝强大的电子商务数据,将淘宝内部业务数据作为API开放出去,同时将外部ISV的应用引入进来。

目前TOP的三条主线:

TOP访问网站:open.taobao.com

ISV后台:my.open.ta

【MongoDB学习笔记九】MongoDB索引

bit1129

mongodb

索引

可以在任意列上建立索引

索引的构造和使用与传统关系型数据库几乎一样,适用于Oracle的索引优化技巧也适用于Mongodb

使用索引可以加快查询,但同时会降低修改,插入等的性能

内嵌文档照样可以建立使用索引

测试数据

var p1 = {

"name":"Jack",

"age&q

JDBC常用API之外的总结

白糖_

jdbc

做JAVA的人玩JDBC肯定已经很熟练了,像DriverManager、Connection、ResultSet、Statement这些基本类大家肯定很常用啦,我不赘述那些诸如注册JDBC驱动、创建连接、获取数据集的API了,在这我介绍一些写框架时常用的API,大家共同学习吧。

ResultSetMetaData获取ResultSet对象的元数据信息

apache VelocityEngine使用记录

bozch

VelocityEngine

VelocityEngine是一个模板引擎,能够基于模板生成指定的文件代码。

使用方法如下:

VelocityEngine engine = new VelocityEngine();// 定义模板引擎

Properties properties = new Properties();// 模板引擎属

编程之美-快速找出故障机器

bylijinnan

编程之美

package beautyOfCoding;

import java.util.Arrays;

public class TheLostID {

/*编程之美

假设一个机器仅存储一个标号为ID的记录,假设机器总量在10亿以下且ID是小于10亿的整数,假设每份数据保存两个备份,这样就有两个机器存储了同样的数据。

1.假设在某个时间得到一个数据文件ID的列表,是

关于Java中redirect与forward的区别

chenbowen00

javaservlet

在Servlet中两种实现:

forward方式:request.getRequestDispatcher(“/somePage.jsp”).forward(request, response);

redirect方式:response.sendRedirect(“/somePage.jsp”);

forward是服务器内部重定向,程序收到请求后重新定向到另一个程序,客户机并不知

[信号与系统]人体最关键的两个信号节点

comsci

系统

如果把人体看做是一个带生物磁场的导体,那么这个导体有两个很重要的节点,第一个在头部,中医的名称叫做 百汇穴, 另外一个节点在腰部,中医的名称叫做 命门

如果要保护自己的脑部磁场不受到外界有害信号的攻击,最简单的

oracle 存储过程执行权限

daizj

oracle存储过程权限执行者调用者

在数据库系统中存储过程是必不可少的利器,存储过程是预先编译好的为实现一个复杂功能的一段Sql语句集合。它的优点我就不多说了,说一下我碰到的问题吧。我在项目开发的过程中需要用存储过程来实现一个功能,其中涉及到判断一张表是否已经建立,没有建立就由存储过程来建立这张表。

CREATE OR REPLACE PROCEDURE TestProc

IS

fla

为mysql数据库建立索引

dengkane

mysql性能索引

前些时候,一位颇高级的程序员居然问我什么叫做索引,令我感到十分的惊奇,我想这绝不会是沧海一粟,因为有成千上万的开发者(可能大部分是使用MySQL的)都没有受过有关数据库的正规培训,尽管他们都为客户做过一些开发,但却对如何为数据库建立适当的索引所知较少,因此我起了写一篇相关文章的念头。 最普通的情况,是为出现在where子句的字段建一个索引。为方便讲述,我们先建立一个如下的表。

学习C语言常见误区 如何看懂一个程序 如何掌握一个程序以及几个小题目示例

dcj3sjt126com

c算法

如果看懂一个程序,分三步

1、流程

2、每个语句的功能

3、试数

如何学习一些小算法的程序

尝试自己去编程解决它,大部分人都自己无法解决

如果解决不了就看答案

关键是把答案看懂,这个是要花很大的精力,也是我们学习的重点

看懂之后尝试自己去修改程序,并且知道修改之后程序的不同输出结果的含义

照着答案去敲

调试错误

centos6.3安装php5.4报错

dcj3sjt126com

centos6

报错内容如下:

Resolving Dependencies

--> Running transaction check

---> Package php54w.x86_64 0:5.4.38-1.w6 will be installed

--> Processing Dependency: php54w-common(x86-64) = 5.4.38-1.w6 for

JSONP请求

flyer0126

jsonp

使用jsonp不能发起POST请求。

It is not possible to make a JSONP POST request.

JSONP works by creating a <script> tag that executes Javascript from a different domain; it is not pos

Spring Security(03)——核心类简介

234390216

Authentication

核心类简介

目录

1.1 Authentication

1.2 SecurityContextHolder

1.3 AuthenticationManager和AuthenticationProvider

1.3.1 &nb

在CentOS上部署JAVA服务

java--hhf

javajdkcentosJava服务

本文将介绍如何在CentOS上运行Java Web服务,其中将包括如何搭建JAVA运行环境、如何开启端口号、如何使得服务在命令执行窗口关闭后依旧运行

第一步:卸载旧Linux自带的JDK

①查看本机JDK版本

java -version

结果如下

java version "1.6.0"

oracle、sqlserver、mysql常用函数对比[to_char、to_number、to_date]

ldzyz007

oraclemysqlSQL Server

oracle &n

记Protocol Oriented Programming in Swift of WWDC 2015

ningandjin

protocolWWDC 2015Swift2.0

其实最先朋友让我就这个题目写篇文章的时候,我是拒绝的,因为觉得苹果就是在炒冷饭, 把已经流行了数十年的OOP中的“面向接口编程”还拿来讲,看完整个Session之后呢,虽然还是觉得在炒冷饭,但是毕竟还是加了蛋的,有些东西还是值得说说的。

通常谈到面向接口编程,其主要作用是把系统��设计和具体实现分离开,让系统的每个部分都可以在不影响别的部分的情况下,改变自身的具体实现。接口的设计就反映了系统

搭建 CentOS 6 服务器(15) - Keepalived、HAProxy、LVS

rensanning

keepalived

(一)Keepalived

(1)安装

# cd /usr/local/src

# wget http://www.keepalived.org/software/keepalived-1.2.15.tar.gz

# tar zxvf keepalived-1.2.15.tar.gz

# cd keepalived-1.2.15

# ./configure

# make &a

ORACLE数据库SCN和时间的互相转换

tomcat_oracle

oraclesql

SCN(System Change Number 简称 SCN)是当Oracle数据库更新后,由DBMS自动维护去累积递增的一个数字,可以理解成ORACLE数据库的时间戳,从ORACLE 10G开始,提供了函数可以实现SCN和时间进行相互转换;

用途:在进行数据库的还原和利用数据库的闪回功能时,进行SCN和时间的转换就变的非常必要了;

操作方法: 1、通过dbms_f

Spring MVC 方法注解拦截器

xp9802

spring mvc

应用场景,在方法级别对本次调用进行鉴权,如api接口中有个用户唯一标示accessToken,对于有accessToken的每次请求可以在方法加一个拦截器,获得本次请求的用户,存放到request或者session域。

python中,之前在python flask中可以使用装饰器来对方法进行预处理,进行权限处理

先看一个实例,使用@access_required拦截:

?