Hadoop: Upgrading CDH4.5 to CDH5, with NameNode and YARN HA in Practice

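CDH5 supports many new features, so I decided to upgrade our current CDH4.5 cluster to CDH5. The software layout is still based on the existing CDH4.5 cluster.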

192.168.1.10 U-1 (Active): hadoop-yarn-resourcemanager hadoop-hdfs-namenode hadoop-mapreduce-historyserver hadoop-yarn-proxyserver hadoop-hdfs-zkfc

192.168.1.20 U-2: hadoop-yarn-nodemanager hadoop-hdfs-datanode hadoop-mapreduce journalnode zookeeper zookeeper-server

192.168.1.30 U-3: hadoop-yarn-nodemanager hadoop-hdfs-datanode hadoop-mapreduce journalnode zookeeper zookeeper-server

192.168.1.40 U-4: hadoop-yarn-nodemanager hadoop-hdfs-datanode hadoop-mapreduce journalnode zookeeper zookeeper-server

192.168.1.50 U-5: hadoop-yarn-nodemanager hadoop-hdfs-datanode hadoop-mapreduce

192.168.1.70 U-7 (Standby): hadoop-yarn-resourcemanager hadoop-hdfs-namenode hadoop-hdfs-zkfc

Note: because this is an upgrade from CDH4.5 to CDH5, the list above does not show every package that has to be present. Some were already installed under CDH4.5, so the list only names the packages you need to reinstall during the upgrade.

The procedure is as follows:

1 Back Up Configuration Data and Stop Services

1 Put the NameNode into safe mode and save the fsimage

su - hdfs

hdfs dfsadmin -safemode enter

hdfs dfsadmin -saveNamespace

2 Stop all Hadoop services in the cluster

for x in `cd /etc/init.d ; ls hadoop-*` ; do sudo service $x stop ; done
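The loop above stops everything that matches `hadoop-*` but stays silent about a service that fails to stop. A minimal sketch of a variant that reports failures follows. The function name `stop_hadoop_services` and the `INIT_DIR` and `SERVICE_CMD` overrides are assumptions added for illustration and dry runs; they are not part of CDH.

```shell
#!/bin/sh
# Sketch only: stop every init script named hadoop-* and report failures.
# INIT_DIR and SERVICE_CMD are hypothetical overrides added for dry runs.
INIT_DIR="${INIT_DIR:-/etc/init.d}"
SERVICE_CMD="${SERVICE_CMD:-sudo service}"

stop_hadoop_services() {
    failed=0
    for path in "$INIT_DIR"/hadoop-*; do
        # Skip the unexpanded pattern when nothing matches.
        [ -e "$path" ] || continue
        name=$(basename "$path")
        if $SERVICE_CMD "$name" stop; then
            echo "stopped: $name"
        else
            echo "FAILED to stop: $name" >&2
            failed=1
        fi
    done
    # Non-zero when at least one service did not stop cleanly.
    return "$failed"
}
```

Setting `SERVICE_CMD=echo` before calling `stop_hadoop_services` only prints what would be stopped, which is a cheap way to check the service list on each host first.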

2 Back up the HDFS Metadata

1 Find dfs.namenode.name.dir

grep -C1 name.dir /etc/hadoop/conf/hdfs-site.xml

2 Back up the directory that dfs.namenode.name.dir points to

tar czvf dfs.namenode.name.dir.tgz /data
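The `/data` path above is simply the value of dfs.namenode.name.dir on this cluster. As a hedged sketch, the two steps can be combined: read the value from hdfs-site.xml, then refuse to archive while a lock file is present, since a lock file in the metadata directory usually means the NameNode is still running. `get_hadoop_prop` and `backup_name_dir` are hypothetical helper names, and the sed-based parsing assumes the simple layout used in this article, with a single local directory as the value.

```shell
#!/bin/sh
# Sketch only: helper names are hypothetical, not part of Hadoop.

# Print the <value> that follows <name>PROP</name> in a *-site.xml file.
# Fragile by design: no XML parser, and no <description> between name and value.
get_hadoop_prop() {
    tr '\n' ' ' < "$2" |
        sed -n "s|.*<name>$1</name>[[:space:]]*<value>\([^<]*\)</value>.*|\1|p"
}

# Archive the NameNode metadata directory unless a lock file is present.
backup_name_dir() {
    conf="$1"; archive="$2"
    dir=$(get_hadoop_prop dfs.namenode.name.dir "$conf")
    [ -n "$dir" ] || { echo "dfs.namenode.name.dir not found" >&2; return 1; }
    if [ -n "$(find "$dir" -name '*lock*' 2>/dev/null)" ]; then
        echo "lock file under $dir: is the NameNode still running?" >&2
        return 1
    fi
    tar czf "$archive" "$dir"
}

# Intended use as root on the NameNode host:
# backup_name_dir /etc/hadoop/conf/hdfs-site.xml /root/dfs.namenode.name.dir.tgz
```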

3 Uninstall the CDH 4 Version of Hadoop

1 Uninstall the Hadoop components

apt-get remove bigtop-utils bigtop-jsvc bigtop-tomcat sqoop2-client hue-common

2 Remove the CDH4 repository file (here it is moved to /root rather than deleted)

mv /etc/apt/sources.list.d/cloudera-cdh4.list /root/

4 Download the Latest Version of CDH 5

1 Download the CDH5 repository package

wget 'http://archive.cloudera.com/cdh5/one-click-install/precise/amd64/cdh5-repository_1.0_all.deb'

2 Install the CDH5 repository and add its signing key

dpkg -i cdh5-repository_1.0_all.deb

curl -s http://archive.cloudera.com/cdh5/ubuntu/precise/amd64/cdh/archive.key | apt-key add -

5 Install CDH 5 with YARN

1 Install ZooKeeper

2 Install the relevant components on each host

1 Resource Manager host

apt-get install hadoop-yarn-resourcemanager

2 NameNode host(s)

apt-get install hadoop-hdfs-namenode

3 All cluster hosts except the Resource Manager

apt-get install hadoop-yarn-nodemanager hadoop-hdfs-datanode hadoop-mapreduce

4 One host in the cluster (the Active NameNode)

apt-get install hadoop-mapreduce-historyserver hadoop-yarn-proxyserver

5 All client hosts

apt-get install hadoop-client
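With six hosts and overlapping roles it is easy to install the wrong set somewhere. The mapping above can be sketched as a small lookup function. The role labels (`resourcemanager`, `namenode`, `worker`, `history`, `client`) and the name `packages_for_role` are assumptions made for this illustration; only the package names come from the steps above.

```shell
#!/bin/sh
# Sketch only: role names are hypothetical labels; package lists come from the steps above.
packages_for_role() {
    case "$1" in
        resourcemanager) echo "hadoop-yarn-resourcemanager" ;;
        namenode)        echo "hadoop-hdfs-namenode" ;;
        worker)          echo "hadoop-yarn-nodemanager hadoop-hdfs-datanode hadoop-mapreduce" ;;
        history)         echo "hadoop-mapreduce-historyserver hadoop-yarn-proxyserver" ;;
        client)          echo "hadoop-client" ;;
        *)               echo "unknown role: $1" >&2; return 1 ;;
    esac
}

# Example for U-1, which holds three roles in this cluster:
# for role in resourcemanager namenode history; do
#     apt-get install -y $(packages_for_role "$role")
# done
```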

6 Install CDH 5 with MRv1

CDH5 now promotes YARN as its main framework, so we no longer use MRv1 and do not install it.

7 In an HA Deployment, Upgrade and Start the Journal Nodes

1 Install the JournalNodes

apt-get install hadoop-hdfs-journalnode

2 Start the JournalNodes

service hadoop-hdfs-journalnode start

8 Upgrade the HDFS Metadata

The upgrade procedure differs between HA and non-HA mode. Our CDH4.5 cluster was already running in HA mode, so we follow the HA procedure.

1 Run on the active NameNode

service hadoop-hdfs-namenode upgrade

2 Restart the standby NameNode

su - hdfs

hdfs namenode -bootstrapStandby

exit

service hadoop-hdfs-namenode start

3 Start the DataNodes

service hadoop-hdfs-datanode start

4 Check the version
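The article does not list a command for this step. One way to check, offered as an assumption rather than what the author ran, is `hadoop version`, whose first line has the form `Hadoop <version>-cdh<release>`. The helper name `is_cdh5_version` below is hypothetical.

```shell
#!/bin/sh
# Sketch only: is_cdh5_version is a hypothetical helper.
# Reads `hadoop version` output on stdin; succeeds if line 1 names a CDH 5 build.
is_cdh5_version() {
    head -n 1 | grep -Eq '^Hadoop [0-9]+(\.[0-9]+)*-cdh5'
}

# Intended use on a cluster host:
# hadoop version | is_cdh5_version && echo "CDH 5 binaries installed"
```

Running this on every host after the upgrade is a quick way to spot a machine that still carries CDH4 packages.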

9 Start YARN

1 Create the required directories

su - hdfs

hadoop fs -mkdir -p /user/history

hadoop fs -chmod -R 1777 /user/history

hadoop fs -chown yarn /user/history

hadoop fs -mkdir -p /var/log/hadoop-yarn

hadoop fs -chown yarn:mapred /var/log/hadoop-yarn

hadoop fs -ls -R /

2 Start the relevant services on each host in the Hadoop cluster

service hadoop-yarn-resourcemanager start

service hadoop-yarn-nodemanager start

service hadoop-mapreduce-historyserver start

10 Configure NameNode HA

1 NameNode HA is deployed the same way as under CDH4.5. The only change is that the value mapreduce.shuffle in yarn-site.xml must be changed to mapreduce_shuffle.
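A leftover `mapreduce.shuffle` value is easy to miss when the file is copied between hosts. A minimal check, assuming the value sits inside a `<value>` element as in the yarn-site.xml shown later in this article; `no_old_shuffle_name` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch only: no_old_shuffle_name is a hypothetical helper.
# Prints any line still using the old aux-service value and fails if one exists.
no_old_shuffle_name() {
    [ -r "$1" ] || { echo "cannot read $1" >&2; return 2; }
    if grep -n '<value>mapreduce\.shuffle</value>' "$1"; then
        return 1
    fi
    return 0
}

# Intended use on each host:
# no_old_shuffle_name /etc/hadoop/conf/yarn-site.xml && echo "yarn-site.xml is up to date"
```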

2 Verify
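The article gives no command for the verification. A hedged sketch of one possible check: `hdfs haadmin -getServiceState <id>` prints `active` or `standby` for a NameNode ID, and a healthy pair should report exactly one `active`. The helper name `exactly_one_active` is an assumption; the IDs U-1 and U-7 are the ones from dfs.ha.namenodes.mycluster in this cluster.

```shell
#!/bin/sh
# Sketch only: exactly_one_active is a hypothetical helper.
# Succeeds when exactly one of the given state strings is "active".
exactly_one_active() {
    active=0
    for state in "$@"; do
        if [ "$state" = "active" ]; then
            active=$((active + 1))
        fi
    done
    [ "$active" -eq 1 ]
}

# Intended use as the hdfs user, with the NameNode IDs from hdfs-site.xml:
# exactly_one_active "$(hdfs haadmin -getServiceState U-1)" \
#                    "$(hdfs haadmin -getServiceState U-7)" && echo "one active NameNode"
```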

11 Configure YARN HA

1 Stop all YARN daemons

service hadoop-yarn-nodemanager stop

service hadoop-yarn-resourcemanager stop

service hadoop-mapreduce-historyserver stop

2 Update the configuration used by the ResourceManagers, NodeManagers and clients

Below is the configuration on U-1. core-site.xml, hdfs-site.xml and mapred-site.xml need no changes; the only file that has to be modified is yarn-site.xml.

core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://mycluster/</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>U-2:2181,U-3:2181,U-4:2181</value>

</property>

</configuration>

hdfs-site.xml

<configuration>

<property>

<name>dfs.permissions.superusergroup</name>

<value>hadoop</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/data</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/data01,/data02</value>

</property>

<property>

<name>dfs.nameservices</name>

<value>mycluster</value>

</property>

<!-- HA Config -->

<property>

<name>dfs.ha.namenodes.mycluster</name>

<value>U-1,U-7</value>

</property>

<property>

<name>dfs.namenode.rpc-address.mycluster.U-1</name>

<value>U-1:8020</value>

</property>

<property>

<name>dfs.namenode.rpc-address.mycluster.U-7</name>

<value>U-7:8020</value>

</property>

<property>

<name>dfs.namenode.http-address.mycluster.U-1</name>

<value>U-1:50070</value>

</property>

<property>

<name>dfs.namenode.http-address.mycluster.U-7</name>

<value>U-7:50070</value>

</property>

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://U-2:8485;U-3:8485;U-4:8485/mycluster</value>

</property>

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/jdata</value>

</property>

<property>

<name>dfs.client.failover.proxy.provider.mycluster</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/var/lib/hadoop-hdfs/.ssh/id_rsa</value>

</property>

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

</configuration>

mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>U-1:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>U-1:19888</value>

</property>

</configuration>

yarn-site.xml

<configuration>

<!-- Resource Manager Configs -->

<property>

<name>yarn.resourcemanager.connect.retry-interval.ms</name>

<value>2000</value>

</property>

<property>

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.ha.automatic-failover.embedded</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.cluster-id</name>

<value>yarn-rm-cluster</value>

</property>

<property>

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>U-1,U-7</value>

</property>

<property>

<name>yarn.resourcemanager.ha.id</name>

<value>U-1</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value>

</property>

<property>

<name>yarn.resourcemanager.recovery.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.store.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>

</property>

<property>

<name>yarn.resourcemanager.zk-address</name>

<value>U-2:2181,U-3:2181,U-4:2181</value>

</property>

<property>

<name>yarn.resourcemanager.zk.state-store.address</name>

<value>U-1:2181</value>

</property>

<property>

<name>yarn.app.mapreduce.am.scheduler.connection.wait.interval-ms</name>

<value>5000</value>

</property>

<!-- RM1 configs -->

<property>

<name>yarn.resourcemanager.address.U-1</name>

<value>U-1:23140</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address.U-1</name>

<value>U-1:23130</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.https.address.U-1</name>

<value>U-1:23189</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.U-1</name>

<value>U-1:23188</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address.U-1</name>

<value>U-1:23125</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address.U-1</name>

<value>U-1:23141</value>

</property>

<!-- RM2 configs -->

<property>

<name>yarn.resourcemanager.address.U-7</name>

<value>U-7:23140</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address.U-7</name>

<value>U-7:23130</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.https.address.U-7</name>

<value>U-7:23189</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.U-7</name>

<value>U-7:23188</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address.U-7</name>

<value>U-7:23125</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address.U-7</name>

<value>U-7:23141</value>

</property>

<!-- Node Manager Configs -->

<property>

<description>Address where the localizer IPC is.</description>

<name>yarn.nodemanager.localizer.address</name>

<value>0.0.0.0:23344</value>

</property>

<property>

<description>NM Webapp address.</description>

<name>yarn.nodemanager.webapp.address</name>

<value>0.0.0.0:23999</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.local-dirs</name>

<value>/yarn/local</value>

</property>

<property>

<name>yarn.nodemanager.log-dirs</name>

<value>/yarn/log</value>

</property>

<property>

<name>mapreduce.shuffle.port</name>

<value>23080</value>

</property>

</configuration>

Note: after copying yarn-site.xml to U-7, change the value of yarn.resourcemanager.ha.id in U-7's copy to U-7; otherwise the ResourceManager will not start.
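The per-host edit described in the note can be scripted. This is a minimal sketch, assuming `<name>`, `<value>` and `</property>` each sit on their own line as in the listing above; `set_rm_ha_id` is a hypothetical helper name. It prints the rewritten file instead of editing in place, so the result can be inspected first.

```shell
#!/bin/sh
# Sketch only: set_rm_ha_id is a hypothetical helper.
# Print FILE with the value of yarn.resourcemanager.ha.id replaced by ID.
# Assumes <name>, <value> and </property> each sit on their own line.
set_rm_ha_id() {
    sed "/<name>yarn\.resourcemanager\.ha\.id<\/name>/,/<\/property>/ s|<value>[^<]*</value>|<value>$2</value>|" "$1"
}

# Intended use on U-7 (write to a new file, inspect it, then move it into place):
# set_rm_ha_id /etc/hadoop/conf/yarn-site.xml U-7 > /tmp/yarn-site.xml
```

The sed range starts at the `yarn.resourcemanager.ha.id` name line and ends at the next `</property>`, so the neighbouring yarn.resourcemanager.ha.rm-ids value is left untouched.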

3 Start all YARN daemons

service hadoop-yarn-resourcemanager start

service hadoop-yarn-nodemanager start

4 Verify

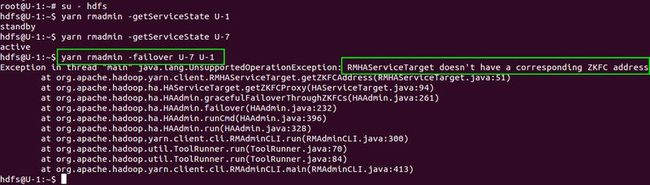

What on earth is this? It cannot find the corresponding ZKFC address?