Muduo Network Library Analysis (Part 1)

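A few words up front that have to be said, even if saying them feels like a waste of keyboard life: muduo is a modern C++ network library based on the Reactor pattern. It uses a non-blocking IO model, is built on event-driven callbacks, natively supports multi-core multithreading, and is well suited to writing multithreaded server applications on Linux. I hope you already have some grasp of the Reactor pattern, non-blocking IO, event-driven programming and callbacks. Familiarity with epoll is even better.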
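As a minimal illustration of what "non-blocking IO" means in practice, here is a sketch in plain POSIX C++. It does not use muduo, and the helper names setNonBlocking and readFromEmptyPipe are hypothetical. Reading from an empty pipe whose read end has O_NONBLOCK set returns immediately with EAGAIN instead of blocking the thread.

```cpp
#include <cassert>
#include <cerrno>
#include <fcntl.h>
#include <unistd.h>

// Put a file descriptor into non-blocking mode with fcntl(2).
// Returns true on success.
bool setNonBlocking(int fd) {
  int flags = ::fcntl(fd, F_GETFL, 0);
  if (flags < 0) return false;
  return ::fcntl(fd, F_SETFL, flags | O_NONBLOCK) == 0;
}

// Try to read from an empty pipe. With a blocking fd this call would hang;
// with O_NONBLOCK it returns -1 at once and sets errno to EAGAIN
// (the same value as EWOULDBLOCK on Linux).
// Returns the errno observed right after the read attempt (0 if none).
int readFromEmptyPipe() {
  int fds[2];
  if (::pipe(fds) != 0) return -1;
  bool ok = setNonBlocking(fds[0]);
  assert(ok);
  (void)ok;
  char buf[16];
  ssize_t n = ::read(fds[0], buf, sizeof buf);
  int err = (n < 0) ? errno : 0;  // save errno before close() can touch it
  ::close(fds[0]);
  ::close(fds[1]);
  return err;
}
```

In a Reactor, that EAGAIN is the signal to return to epoll_wait and let the kernel report when the fd actually becomes readable.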

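There are several ways to learn an open-source library. You can first get a rough picture of its architecture from online introductions or its documentation and then read the source, or you can learn to use the library first and read the source afterwards. A good open-source library ships example programs, and for me those examples are the way into understanding the library in depth. Enough talk; here is a small example provided by muduo's author: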

```cpp
#include <muduo/net/TcpServer.h>
#include <muduo/base/Atomic.h>
#include <muduo/base/Logging.h>
#include <muduo/base/Thread.h>
#include <muduo/net/EventLoop.h>
#include <muduo/net/InetAddress.h>

#include <boost/bind.hpp>

#include <utility>

#include <stdio.h>
#include <unistd.h>

using namespace muduo;
using namespace muduo::net;

int numThreads = 0;

class EchoServer
{
 public:
  EchoServer(EventLoop* loop, const InetAddress& listenAddr)
    : server_(loop, listenAddr, "EchoServer"),
      oldCounter_(0),
      startTime_(Timestamp::now())
  {
    server_.setConnectionCallback(
        boost::bind(&EchoServer::onConnection, this, _1));
    server_.setMessageCallback(
        boost::bind(&EchoServer::onMessage, this, _1, _2, _3));
    server_.setThreadNum(numThreads);
    loop->runEvery(3.0, boost::bind(&EchoServer::printThroughput, this));
  }

  void start()
  {
    LOG_INFO << "starting " << numThreads << " threads.";
    server_.start();
  }

 private:
  void onConnection(const TcpConnectionPtr& conn)
  {
    LOG_TRACE << conn->peerAddress().toIpPort() << " -> "
              << conn->localAddress().toIpPort() << " is "
              << (conn->connected() ? "UP" : "DOWN");
    conn->setTcpNoDelay(true);
  }

  void onMessage(const TcpConnectionPtr& conn, Buffer* buf, Timestamp)
  {
    size_t len = buf->readableBytes();
    transferred_.addAndGet(len);
    receivedMessages_.incrementAndGet();
    conn->send(buf);
  }

  void printThroughput()
  {
    Timestamp endTime = Timestamp::now();
    int64_t newCounter = transferred_.get();
    int64_t bytes = newCounter - oldCounter_;
    int64_t msgs = receivedMessages_.getAndSet(0);
    double time = timeDifference(endTime, startTime_);
    printf("%4.3f MiB/s %4.3f Ki Msgs/s %6.2f bytes per msg\n",
           static_cast<double>(bytes)/time/1024/1024,
           static_cast<double>(msgs)/time/1024,
           static_cast<double>(bytes)/static_cast<double>(msgs));

    oldCounter_ = newCounter;
    startTime_ = endTime;
  }

  TcpServer server_;
  AtomicInt64 transferred_;
  AtomicInt64 receivedMessages_;
  int64_t oldCounter_;
  Timestamp startTime_;
};

int main(int argc, char* argv[])
{
  LOG_INFO << "pid = " << getpid() << ", tid = " << CurrentThread::tid();
  if (argc > 1)
  {
    numThreads = atoi(argv[1]);
  }
  EventLoop loop;
  InetAddress listenAddr(2007);
  EchoServer server(&loop, listenAddr);

  server.start();

  loop.loop();
}
```
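The counters in printThroughput can be mirrored with standard C++ atomics. The sketch below is a stand-in under stated assumptions: std::atomic<int64_t> replaces muduo::AtomicInt64, with fetch_add, load and exchange(0) playing the roles of addAndGet/incrementAndGet, get and getAndSet(0). The struct name ThroughputCounter is hypothetical.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for EchoServer's counters, using std::atomic in
// place of muduo::AtomicInt64.
struct ThroughputCounter {
  std::atomic<int64_t> transferred{0};       // total bytes ever echoed (never reset)
  std::atomic<int64_t> receivedMessages{0};  // messages since the last report
  int64_t oldCounter = 0;                    // touched only by the reporting thread

  // Called from onMessage, possibly from several IO threads at once.
  void onMessage(int64_t len) {
    transferred.fetch_add(len);     // like transferred_.addAndGet(len)
    receivedMessages.fetch_add(1);  // like receivedMessages_.incrementAndGet()
  }

  // Called by the periodic timer; returns MiB/s over `seconds`
  // and stores the message count of the interval in *msgsOut.
  double report(double seconds, int64_t* msgsOut) {
    assert(seconds > 0);
    int64_t newCounter = transferred.load();      // like transferred_.get()
    int64_t bytes = newCounter - oldCounter;
    int64_t msgs = receivedMessages.exchange(0);  // like getAndSet(0)
    oldCounter = newCounter;
    if (msgsOut) *msgsOut = msgs;
    return static_cast<double>(bytes) / seconds / 1024 / 1024;
  }
};
```

The byte total is never reset, which is why the original keeps oldCounter_ and reports the difference, while the message count is swapped back to zero atomically at each report.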

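Clearly, EchoServer's members include muduo's TcpServer and Timestamp classes. Let's strip EchoServer away as far as possible and keep only the muduo parts, so we can trace the program's flow. (The code is not strictly correct; it is only meant to make the flow clear.)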

```cpp
// Flow-tracing pseudocode: EchoServer has been stripped away, so `this`
// below stands for the (now absent) EchoServer object; it will not compile as-is.
int main(int argc, char* argv[])
{
  LOG_INFO << "pid = " << getpid() << ", tid = " << CurrentThread::tid();
  if (argc > 1)
  {
    numThreads = atoi(argv[1]);
  }
  EventLoop loop;
  InetAddress listenAddr(2007);
  TcpServer server_(&loop, listenAddr, "EchoServer");
  server_.setConnectionCallback(
      boost::bind(&EchoServer::onConnection, this, _1));
  server_.setMessageCallback(
      boost::bind(&EchoServer::onMessage, this, _1, _2, _3));
  server_.setThreadNum(numThreads);
  loop.runEvery(3.0, boost::bind(&EchoServer::printThroughput, this));
  server_.start();
  loop.loop();
}
```

Let's start with EventLoop. I have annotated the member variables and member functions below:

```cpp
class EventLoop : boost::noncopyable
{
 public:
  typedef boost::function<void()> Functor;

  EventLoop();
  ~EventLoop();  // force out-line dtor, for scoped_ptr members.

  ///
  /// Loops forever.
  ///
  /// Must be called in the same thread as creation of the object.
  ///
  void loop();  // Each thread processes its events inside this loop.

  /// Quits loop.
  ///
  /// This is not 100% thread safe, if you call through a raw pointer,
  /// better to call through shared_ptr<EventLoop> for 100% safety.
  void quit();  // Stops the loop (and with it the thread); not fully thread-safe through a raw pointer.

  ///
  /// Time when poll returns, usually means data arrival.
  ///
  Timestamp pollReturnTime() const { return pollReturnTime_; }  // When the IO multiplexer last returned from blocking
  int64_t iteration() const { return iteration_; }  // How many times the IO multiplexer has returned

  /// Runs callback immediately in the loop thread.
  /// It wakes up the loop, and run the cb.
  /// If in the same loop thread, cb is run within the function.
  /// Safe to call from other threads.
  void runInLoop(const Functor& cb);  // Execute cb inside this loop's thread

  /// Queues callback in the loop thread.
  /// Runs after finish polling.
  /// Safe to call from other threads.
  void queueInLoop(const Functor& cb);  // Append cb to this loop's pending-functor queue

#ifdef __GXX_EXPERIMENTAL_CXX0X__
  void runInLoop(Functor&& cb);
  void queueInLoop(Functor&& cb);
#endif

  // timers

  ///
  /// Runs callback at 'time'.
  /// Safe to call from other threads.
  ///
  TimerId runAt(const Timestamp& time, const TimerCallback& cb);  // Run cb at time point `time`
  ///
  /// Runs callback after @c delay seconds.
  /// Safe to call from other threads.
  ///
  TimerId runAfter(double delay, const TimerCallback& cb);  // Run cb once, after `delay` seconds
  ///
  /// Runs callback every @c interval seconds.
  /// Safe to call from other threads.
  ///
  TimerId runEvery(double interval, const TimerCallback& cb);  // Run cb every `interval` seconds
  ///
  /// Cancels the timer.
  /// Safe to call from other threads.
  ///
  void cancel(TimerId timerId);

#ifdef __GXX_EXPERIMENTAL_CXX0X__
  TimerId runAt(const Timestamp& time, TimerCallback&& cb);
  TimerId runAfter(double delay, TimerCallback&& cb);
  TimerId runEvery(double interval, TimerCallback&& cb);
#endif

  // internal usage
  void wakeup();  // Actively wake the IO multiplexer out of its blocking wait
  void updateChannel(Channel* channel);  // Push the Channel's interest set to the IO multiplexer
  void removeChannel(Channel* channel);  // Stop monitoring the Channel in the IO multiplexer
  // updateChannel and removeChannel correspond to epoll_ctl operations.

  // pid_t threadId() const { return threadId_; }
  void assertInLoopThread()
  {
    if (!isInLoopThread())
    {
      abortNotInLoopThread();
    }
  }
  bool isInLoopThread() const { return threadId_ == CurrentThread::tid(); }  // Are we in this loop's own thread?
  // bool callingPendingFunctors() const { return callingPendingFunctors_; }
  bool eventHandling() const { return eventHandling_; }  // Whether events are currently being handled

  static EventLoop* getEventLoopOfCurrentThread();  // The EventLoop owned by the calling thread, if any

 private:
  void abortNotInLoopThread();
  void handleRead();  // Runs after the IO multiplexer was woken on purpose (not by a real IO event);
                      // the EventLoop constructor installs it as wakeupChannel_'s read callback
  void doPendingFunctors();  // After an explicit wakeup there is usually queued work;
                             // this runs the pending functors one by one
  void printActiveChannels() const;  // DEBUG

  typedef std::vector<Channel*> ChannelList;

  bool looping_; /* atomic */
  bool quit_; /* atomic and shared between threads, okay on x86, I guess. */
  bool eventHandling_; /* atomic */
  bool callingPendingFunctors_; /* atomic */
  int64_t iteration_;
  const pid_t threadId_;
  Timestamp pollReturnTime_;
  boost::scoped_ptr<Poller> poller_;  // The IO multiplexer (poll or epoll) object
  boost::scoped_ptr<TimerQueue> timerQueue_;  // Queue of timer events
  int wakeupFd_;  // Registered with the IO multiplexer for reading, so writing to it
                  // makes the blocking call return
  // unlike in TimerQueue, which is an internal class,
  // we don't expose Channel to client.
  boost::scoped_ptr<Channel> wakeupChannel_;  // Channel wrapping wakeupFd_
  ChannelList activeChannels_;  // Channels reported active when the IO multiplexer returns
  Channel* currentActiveChannel_;  // The Channel currently being handled
  MutexLock mutex_;
  std::vector<Functor> pendingFunctors_;  // Queue of pending functors (guarded by mutex_)
};
```
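The runInLoop/queueInLoop contract described in the comments above can be sketched with standard C++ alone. This is a simplified, hypothetical MiniLoop, not muduo's implementation: there is no poller and no wakeup, and the owning thread calls doPendingFunctors() by hand. Two choices mirror muduo as far as I can tell: the thread check compares the creating thread's id with the caller's, and pending functors are swapped out under the lock and run after it is released.

```cpp
#include <cassert>
#include <functional>
#include <mutex>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical sketch of EventLoop's cross-thread task queue (no poller).
class MiniLoop {
 public:
  using Functor = std::function<void()>;

  MiniLoop() : threadId_(std::this_thread::get_id()) {}

  bool isInLoopThread() const { return threadId_ == std::this_thread::get_id(); }

  // Run immediately if already on the loop's thread, otherwise queue.
  void runInLoop(Functor cb) {
    if (isInLoopThread()) {
      cb();
    } else {
      queueInLoop(std::move(cb));
    }
  }

  // Always defer; safe to call from any thread.
  void queueInLoop(Functor cb) {
    std::lock_guard<std::mutex> lock(mutex_);
    pending_.push_back(std::move(cb));
    // A real loop would also wake its poller here when needed.
  }

  // Called on the loop thread: swap the queue out under the lock, then run
  // the callbacks without holding it, so a callback may queue more work.
  void doPendingFunctors() {
    assert(isInLoopThread());
    std::vector<Functor> functors;
    {
      std::lock_guard<std::mutex> lock(mutex_);
      functors.swap(pending_);
    }
    for (const Functor& f : functors) f();
  }

 private:
  const std::thread::id threadId_;
  std::mutex mutex_;
  std::vector<Functor> pending_;
};
```

Swapping keeps the critical section short and avoids a deadlock if a queued functor calls queueInLoop again. In muduo, queueInLoop also calls wakeup() when the caller is not the loop thread (or when the loop is already running pending functors), so the queued work is not stuck behind a blocked epoll_wait.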

The simplified code first defines an EventLoop object, which of course runs the EventLoop constructor (see the comments):

```cpp
EventLoop* EventLoop::getEventLoopOfCurrentThread()
{
  return t_loopInThisThread;
}

EventLoop::EventLoop()
  : looping_(false),
    quit_(false),
    eventHandling_(false),
    callingPendingFunctors_(false),
    iteration_(0),
    threadId_(CurrentThread::tid()),  // record the id of the creating thread
    poller_(Poller::newDefaultPoller(this)),  // pick the IO multiplexer, poll or epoll (this article assumes epoll)
    timerQueue_(new TimerQueue(this)),
    wakeupFd_(createEventfd()),
    wakeupChannel_(new Channel(this, wakeupFd_)),  // bind wakeupFd_ to wakeupChannel_
    currentActiveChannel_(NULL)
{
  LOG_DEBUG << "EventLoop created " << this << " in thread " << threadId_;
  if (t_loopInThisThread)
  {
    LOG_FATAL << "Another EventLoop " << t_loopInThisThread
              << " exists in this thread " << threadId_;
  }
  else
  {
    t_loopInThisThread = this;  // t_loopInThisThread is a __thread (thread-local) variable
  }
  wakeupChannel_->setReadCallback(
      boost::bind(&EventLoop::handleRead, this));  // run EventLoop::handleRead when wakeupChannel_ fires
  // we are always reading the wakeupfd
  wakeupChannel_->enableReading();  // register wakeupFd_'s readable event with poller_; from now on any
                                    // write to wakeupFd_ makes the multiplexer return and run the
                                    // corresponding handlers. That is what "wakeup" means.
}
```
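The wakeup mechanism set up in this constructor can be reproduced with raw Linux system calls. The sketch below is hypothetical (wakeupDemo is not a muduo function) and Linux-only: it registers an eventfd for EPOLLIN, lets a second thread write an 8-byte counter to it, and shows that epoll_wait returns. Reading the 8 bytes back is what draining the wakeup fd amounts to.

```cpp
#include <cassert>
#include <cstdint>
#include <sys/epoll.h>
#include <sys/eventfd.h>
#include <thread>
#include <unistd.h>

// Returns the number of ready fds reported by epoll_wait (expected: 1).
int wakeupDemo() {
  int efd = ::eventfd(0, EFD_NONBLOCK | EFD_CLOEXEC);  // in the role of createEventfd()
  int epfd = ::epoll_create1(EPOLL_CLOEXEC);
  assert(efd >= 0 && epfd >= 0);

  epoll_event ev{};
  ev.events = EPOLLIN;  // in the role of wakeupChannel_->enableReading()
  ev.data.fd = efd;
  ::epoll_ctl(epfd, EPOLL_CTL_ADD, efd, &ev);

  // Another thread plays the role of EventLoop::wakeup().
  std::thread waker([efd] {
    uint64_t one = 1;
    ssize_t w = ::write(efd, &one, sizeof one);
    (void)w;
  });

  epoll_event ready[4];
  int n = ::epoll_wait(epfd, ready, 4, 5000);  // blocks until the write (or 5 s)
  if (n == 1 && ready[0].data.fd == efd) {
    // In the role of EventLoop::handleRead(): drain the counter so the fd is
    // no longer readable and the next epoll_wait can block again.
    uint64_t value = 0;
    ssize_t r = ::read(efd, &value, sizeof value);
    (void)r;
  }
  waker.join();
  ::close(epfd);
  ::close(efd);
  return n;
}
```

An eventfd needs only one descriptor (a pipe would need two), and its level-triggered readability is exactly the "something happened" flag the loop needs.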

Back to the simplified code:

```cpp
InetAddress listenAddr(2007);
```

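I won't dwell on this line. It simply wraps an IP address and a port, used either by a server to listen for connections or by a client to connect to a remote peer.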
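For readers who want to see what such a wrapper holds, here is a simplified, hypothetical IPv4-only MiniInetAddress around sockaddr_in. It assumes that a port-only address means "all local interfaces" (INADDR_ANY); the loopbackOnly flag and the toIpPort format are modeled loosely on muduo's InetAddress but are not its code.

```cpp
#include <arpa/inet.h>
#include <cassert>
#include <cstdint>
#include <cstring>
#include <netinet/in.h>
#include <string>

// Hypothetical, simplified address wrapper (IPv4 only).
class MiniInetAddress {
 public:
  explicit MiniInetAddress(uint16_t port, bool loopbackOnly = false) {
    std::memset(&addr_, 0, sizeof addr_);
    addr_.sin_family = AF_INET;
    addr_.sin_addr.s_addr = htonl(loopbackOnly ? INADDR_LOOPBACK : INADDR_ANY);
    addr_.sin_port = htons(port);  // stored in network byte order
  }

  const sockaddr_in& raw() const { return addr_; }

  // "ip:port", in the spirit of InetAddress::toIpPort().
  std::string toIpPort() const {
    char ip[INET_ADDRSTRLEN] = {};
    ::inet_ntop(AF_INET, &addr_.sin_addr, ip, sizeof ip);
    return std::string(ip) + ":" + std::to_string(ntohs(addr_.sin_port));
  }

 private:
  sockaddr_in addr_;
};
```

The byte-order conversions (htons/htonl on the way in, ntohs on the way out) are the main reason to wrap sockaddr_in at all: callers never touch network byte order directly.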

```cpp
TcpServer server_(&loop, listenAddr, "EchoServer");
```

Let's go straight to the TcpServer constructor:

```cpp
TcpServer::TcpServer(EventLoop* loop,
                     const InetAddress& listenAddr,
                     const string& nameArg,
                     Option option)
  : loop_(CHECK_NOTNULL(loop)),
    hostport_(listenAddr.toIpPort()),
    name_(nameArg),
    acceptor_(new Acceptor(loop, listenAddr, option == kReusePort)),
    threadPool_(new EventLoopThreadPool(loop)),
    connectionCallback_(defaultConnectionCallback),
    messageCallback_(defaultMessageCallback),
    nextConnId_(1)
{
  acceptor_->setNewConnectionCallback(
      boost::bind(&TcpServer::newConnection, this, _1, _2));  // callback to run when a new connection arrives
}
```

The constructor initializes the members boost::scoped_ptr<Acceptor> acceptor_ and boost::scoped_ptr<EventLoopThreadPool> threadPool_.

acceptor_ accepts client connections. Here is its constructor:

```cpp
Acceptor::Acceptor(EventLoop* loop, const InetAddress& listenAddr, bool reuseport)
  : loop_(loop),
    acceptSocket_(sockets::createNonblockingOrDie()),  // create the server's listening socket fd
    acceptChannel_(loop, acceptSocket_.fd()),  // bind acceptSocket_'s fd to acceptChannel_ and have
                                               // it watched for connections in loop's thread
    listenning_(false),
    idleFd_(::open("/dev/null", O_RDONLY | O_CLOEXEC))
{
  assert(idleFd_ >= 0);
  acceptSocket_.setReuseAddr(true);
  acceptSocket_.setReusePort(reuseport);
  acceptSocket_.bindAddress(listenAddr);
  acceptChannel_.setReadCallback(
      boost::bind(&Acceptor::handleRead, this));  // run Acceptor::handleRead when acceptChannel_ becomes active
}
```
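At the system-call level, the Acceptor constructor amounts to creating a non-blocking TCP socket, setting SO_REUSEADDR and binding it. The sketch below uses hypothetical helper names (createListeningSocket, boundPort) and also calls listen(), which in muduo happens later, in Acceptor::listen(), once the server is started. It binds to 127.0.0.1 with port 0 so the kernel picks a free port; a real server would bind to listenAddr instead.

```cpp
#include <arpa/inet.h>
#include <cassert>
#include <cerrno>
#include <cstdint>
#include <netinet/in.h>
#include <sys/socket.h>
#include <unistd.h>

// Returns a non-blocking listening fd on 127.0.0.1:port, or -1 on error.
int createListeningSocket(uint16_t port) {
  int fd = ::socket(AF_INET, SOCK_STREAM | SOCK_NONBLOCK | SOCK_CLOEXEC, IPPROTO_TCP);
  if (fd < 0) return -1;

  int on = 1;
  ::setsockopt(fd, SOL_SOCKET, SO_REUSEADDR, &on, sizeof on);  // like setReuseAddr(true)

  sockaddr_in addr{};
  addr.sin_family = AF_INET;
  addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
  addr.sin_port = htons(port);
  if (::bind(fd, reinterpret_cast<sockaddr*>(&addr), sizeof addr) < 0 ||  // like bindAddress()
      ::listen(fd, SOMAXCONN) < 0) {                                       // deferred in muduo
    ::close(fd);
    return -1;
  }
  return fd;
}

// The port the kernel actually assigned to a bound socket.
uint16_t boundPort(int fd) {
  sockaddr_in addr{};
  socklen_t len = sizeof addr;
  ::getsockname(fd, reinterpret_cast<sockaddr*>(&addr), &len);
  return ntohs(addr.sin_port);
}
```

Because the socket is non-blocking, accept() with no pending connection fails with EAGAIN instead of blocking; the Acceptor only calls accept() after epoll reports the listening fd readable.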

```cpp
acceptor_->setNewConnectionCallback(boost::bind(&TcpServer::newConnection, this, _1, _2));
```

This line assigns TcpServer::newConnection (bound to this TcpServer) to acceptor_'s newConnectionCallback_ member, whose type is NewConnectionCallback.

When a connection arrives on the port acceptor_ is listening on, Acceptor::handleRead is called:

```cpp
void Acceptor::handleRead()
{
  loop_->assertInLoopThread();
  InetAddress peerAddr;
  //FIXME loop until no more
  int connfd = acceptSocket_.accept(&peerAddr);
  if (connfd >= 0)
  {
    // string hostport = peerAddr.toIpPort();
    // LOG_TRACE << "Accepts of " << hostport;
    if (newConnectionCallback_)
    {
      newConnectionCallback_(connfd, peerAddr);
    }
    else
    {
      sockets::close(connfd);
    }
  }
  else
  {
    LOG_SYSERR << "in Acceptor::handleRead";
    // Read the section named "The special problem of
    // accept()ing when you can't" in libev's doc.
    // By Marc Lehmann, author of libev.
    if (errno == EMFILE)
    {
      // Out of fds: release the spare fd, accept the pending connection and
      // close it at once (so the listening fd stops being readable and the
      // loop does not spin), then reserve the spare fd again.
      ::close(idleFd_);
      idleFd_ = ::accept(acceptSocket_.fd(), NULL, NULL);
      ::close(idleFd_);
      idleFd_ = ::open("/dev/null", O_RDONLY | O_CLOEXEC);
    }
  }
}
```

Inside Acceptor::handleRead, newConnectionCallback_(connfd, peerAddr) is called. Because the TcpServer constructor ran acceptor_->setNewConnectionCallback(boost::bind(&TcpServer::newConnection, this, _1, _2)), calling newConnectionCallback_(connfd, peerAddr) is the same as calling server_.newConnection(connfd, peerAddr).
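The callback chain can be reproduced in a few lines of standard C++. In this hypothetical miniature, std::bind stands in for boost::bind, a fake fd and a string stand in for the accepted socket and InetAddress, and MiniAcceptor/MiniServer are invented names. Binding `this` in the constructor is also why such a server should not be copied: a copy would still carry a callback pointing at the original object, so the sketch deletes the copy operations (muduo's TcpServer is noncopyable as well).

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <utility>

class MiniAcceptor {
 public:
  using NewConnectionCallback = std::function<void(int, const std::string&)>;
  void setNewConnectionCallback(NewConnectionCallback cb) { cb_ = std::move(cb); }

  // Stand-in for Acceptor::handleRead(): pretend accept() returned connfd.
  void handleRead(int connfd, const std::string& peer) {
    if (cb_) cb_(connfd, peer);
  }

 private:
  NewConnectionCallback cb_;
};

class MiniServer {
 public:
  MiniServer() {
    using namespace std::placeholders;
    // Same shape as boost::bind(&TcpServer::newConnection, this, _1, _2)
    acceptor_.setNewConnectionCallback(
        std::bind(&MiniServer::newConnection, this, _1, _2));
  }
  MiniServer(const MiniServer&) = delete;             // the bound `this` must stay valid
  MiniServer& operator=(const MiniServer&) = delete;

  MiniAcceptor& acceptor() { return acceptor_; }
  int lastFd = -1;
  std::string lastPeer;

 private:
  void newConnection(int sockfd, const std::string& peer) {
    lastFd = sockfd;
    lastPeer = peer;
  }
  MiniAcceptor acceptor_;
};
```

The acceptor only knows the callback's signature; which object and member function sit behind it is decided once, at construction time.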

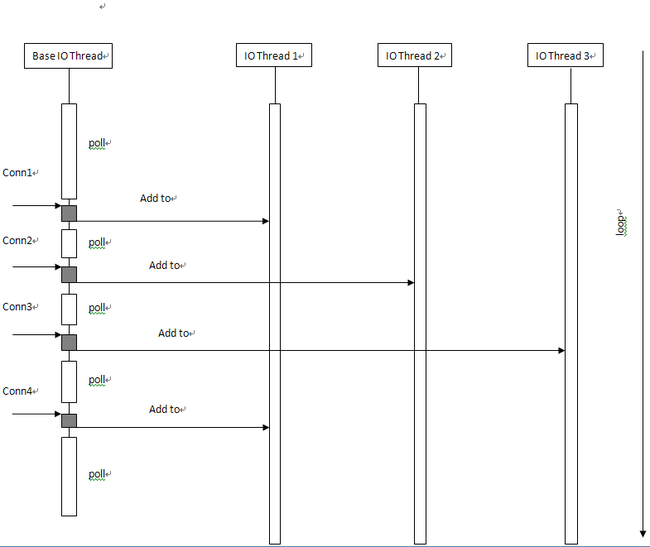

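threadPool_ is a thread-pool manager: it starts threads and manages them. In this program the loop object runs its loop in the main thread, while each of the numThreads threads started by threadPool_ has its own EventLoop object. (Analyzed later.)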
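The "each thread has its own EventLoop" rule is enforced by the thread-local t_loopInThisThread check in the EventLoop constructor. Here is a hypothetical, standard-C++ sketch of the same idea: a thread_local pointer records the loop created on each thread, a second loop on the same thread trips an assertion (muduo uses LOG_FATAL instead), and worker threads each construct their own loop, roughly the way the threads started by threadPool_ do.

```cpp
#include <cassert>
#include <thread>
#include <vector>

class PerThreadLoop;
thread_local PerThreadLoop* t_loopInThisThread = nullptr;  // like muduo's __thread variable

class PerThreadLoop {
 public:
  PerThreadLoop() : owner_(std::this_thread::get_id()) {
    assert(t_loopInThisThread == nullptr && "another loop exists in this thread");
    t_loopInThisThread = this;
  }
  ~PerThreadLoop() { t_loopInThisThread = nullptr; }
  PerThreadLoop(const PerThreadLoop&) = delete;
  PerThreadLoop& operator=(const PerThreadLoop&) = delete;

  std::thread::id owner() const { return owner_; }
  static PerThreadLoop* ofCurrentThread() { return t_loopInThisThread; }

 private:
  const std::thread::id owner_;
};

// Start n worker threads; each creates its own loop on its own stack.
// Returns how many workers saw a loop that belonged to them.
int startWorkers(int n) {
  std::vector<std::thread> threads;
  std::vector<int> ok(n, 0);  // each thread writes only its own slot
  for (int i = 0; i < n; ++i) {
    threads.emplace_back([&ok, i] {
      PerThreadLoop loop;  // the real thing would now call loop.loop()
      ok[i] = (PerThreadLoop::ofCurrentThread() == &loop &&
               loop.owner() == std::this_thread::get_id()) ? 1 : 0;
    });
  }
  for (auto& t : threads) t.join();
  int count = 0;
  for (int v : ok) count += v;
  return count;
}
```

Because the pointer is thread_local, each worker sees only its own loop, and the main thread's loop is unaffected by what the workers create.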

Back to the earlier code: once a new connection has been accepted, the actual work is done by server_.newConnection(connfd, peerAddr):

```cpp
void TcpServer::newConnection(int sockfd, const InetAddress& peerAddr)
{
  loop_->assertInLoopThread();
  EventLoop* ioLoop = threadPool_->getNextLoop();  // the new connection goes to ioLoop, i.e. to the
                                                    // thread running ioLoop; loops are picked in turn
                                                    // so connections are spread evenly over threads
  char buf[32];
  snprintf(buf, sizeof buf, ":%s#%d", hostport_.c_str(), nextConnId_);
  ++nextConnId_;
  string connName = name_ + buf;

  LOG_INFO << "TcpServer::newConnection [" << name_
           << "] - new connection [" << connName
           << "] from " << peerAddr.toIpPort();
  InetAddress localAddr(sockets::getLocalAddr(sockfd));
  // FIXME poll with zero timeout to double confirm the new connection
  // FIXME use make_shared if necessary
  TcpConnectionPtr conn(new TcpConnection(ioLoop,
                                          connName,
                                          sockfd,
                                          localAddr,
                                          peerAddr));
  connections_[connName] = conn;
  conn->setConnectionCallback(connectionCallback_);  // run when the connection comes up (or goes down);
                                                     // server_ set it to EchoServer::onConnection
  conn->setMessageCallback(messageCallback_);  // run whenever data arrives on the connection;
                                               // server_ set it to EchoServer::onMessage
  conn->setWriteCompleteCallback(writeCompleteCallback_);
  conn->setCloseCallback(
      boost::bind(&TcpServer::removeConnection, this, _1));  // run when the connection closes
  ioLoop->runInLoop(boost::bind(&TcpConnection::connectEstablished, conn));
  // run TcpConnection::connectEstablished() inside ioLoop's loop
}
```
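A minimal sketch of the selection step, assuming round-robin as in muduo's EventLoopThreadPool::getNextLoop(): with no worker loops (numThreads == 0, the example's default) every connection stays on the base loop, so the main thread both accepts and does IO; otherwise the worker loops are handed out in turn. FakeLoop and RoundRobinPool are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

struct FakeLoop { int id; };  // hypothetical placeholder for EventLoop

class RoundRobinPool {
 public:
  RoundRobinPool(FakeLoop* baseLoop, std::vector<FakeLoop*> loops)
      : baseLoop_(baseLoop), loops_(std::move(loops)) {}

  // No workers: use the base loop. Otherwise cycle through the workers.
  FakeLoop* getNextLoop() {
    FakeLoop* loop = baseLoop_;
    if (!loops_.empty()) {
      loop = loops_[next_];
      next_ = (next_ + 1) % loops_.size();
    }
    return loop;
  }

 private:
  FakeLoop* baseLoop_;
  std::vector<FakeLoop*> loops_;
  std::size_t next_ = 0;
};
```

Round-robin evens out connection counts, not load: a few busy connections can still end up on the same thread.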

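TcpConnection::connectEstablished() registers the new connection's file descriptor with the EventLoop of the thread it was assigned to, and then calls the TcpConnection's connectionCallback_. Because of conn->setConnectionCallback(connectionCallback_), that callback ultimately runs EchoServer::onConnection().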
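Registering the fd ultimately becomes an epoll_ctl call, and dispatching means invoking the callback stored in the matching Channel. Here is a hypothetical miniature (MiniChannel, addChannel and pollOnce are invented names) that stores the Channel pointer in epoll_event.data.ptr, which, to my knowledge, is also how muduo's EPollPoller maps ready events back to Channels.

```cpp
#include <cassert>
#include <functional>
#include <sys/epoll.h>
#include <unistd.h>

// A channel pairs an fd with the callback to run when it becomes readable.
struct MiniChannel {
  int fd;
  std::function<void()> readCallback;
};

// Register ch for readability: roughly what enableReading() ->
// updateChannel() -> epoll_ctl() ends up doing.
bool addChannel(int epfd, MiniChannel* ch) {
  epoll_event ev{};
  ev.events = EPOLLIN;
  ev.data.ptr = ch;  // lets the loop go straight from event to channel
  return ::epoll_ctl(epfd, EPOLL_CTL_ADD, ch->fd, &ev) == 0;
}

// One poll-and-dispatch pass; returns how many channels were ready.
int pollOnce(int epfd, int timeoutMs) {
  epoll_event events[16];
  int n = ::epoll_wait(epfd, events, 16, timeoutMs);
  for (int i = 0; i < n; ++i) {
    auto* ch = static_cast<MiniChannel*>(events[i].data.ptr);
    if ((events[i].events & EPOLLIN) && ch->readCallback) ch->readCallback();
  }
  return n < 0 ? 0 : n;
}
```

A real loop repeats pollOnce forever; the channel's callback is where a connection's message handling (and eventually onMessage) would start.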

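In fact, no real IO thread has been started yet. server_.start() starts as many threads as requested and, in each thread, sets up its own IO multiplexer (the Poller object) and other state. (Analyzed later.)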

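Finally, the main thread calls loop.loop() and enters its loop, where it handles incoming connections. By then the IO threads have also entered their own loops, each handling events on the connections it owns. (Analyzed later.)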

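From a C programmer's point of view, the key to this code, and to understanding the overall flow, is tracking where each callback (in C terms, each function pointer) gets assigned.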
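To make the C-language view concrete: what boost::bind packages up is essentially a function pointer plus the object it should act on. The hypothetical sketch below spells that out in C style, with a plain function pointer and a void* context; names such as CConnection and echoStatsOnMessage are invented for illustration.

```cpp
#include <cassert>

// C-style callback: function pointer + opaque context ("this").
typedef void (*MessageFn)(void* ctx, int len);

struct CConnection {
  MessageFn onMessage;  // the callback slot that gets assigned
  void* ctx;            // the object the callback should act on
};

struct EchoStats { long bytes; };

// A free function with the C callback signature; it recovers the object
// from ctx and does what a member function would do.
static void echoStatsOnMessage(void* ctx, int len) {
  static_cast<EchoStats*>(ctx)->bytes += len;
}

// The assignment step: this is what "setting a callback" boils down to.
void setMessageCallback(CConnection* conn, MessageFn fn, void* ctx) {
  conn->onMessage = fn;
  conn->ctx = ctx;
}

// Later, when data arrives, the library simply calls through the pointer.
void deliver(CConnection* conn, int len) {
  if (conn->onMessage) conn->onMessage(conn->ctx, len);
}
```

Following the flow therefore means finding, for each slot, the line where it is filled in; the call site alone never tells you which function runs.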

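The simple sequence diagram for what has been analyzed so far covers only how the main thread handles a new connection; the IO threads' sequence diagram will be added later. From there on, each IO thread handles the connections it owns.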