Chapter 5: Advanced Search

5.1 Sorting Search Results

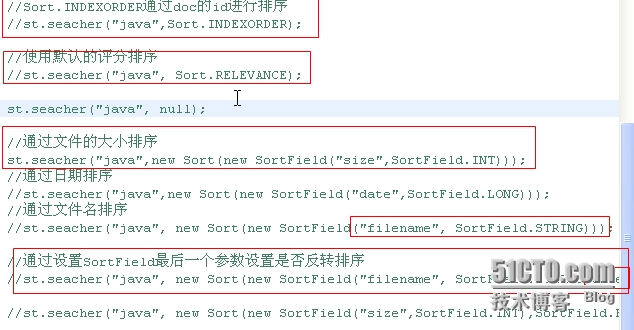

public void seacher(String queryContion, int num, Sort sort) {
    try {
        IndexSearcher searcher = new IndexSearcher(indexReader);
        QueryParser parser = new QueryParser(Version.LUCENE_35, "contents", analyzer);
        Query query = parser.parse(queryContion);
        System.out.println("Query class used: " + query.getClass().getName());
        // Passing a Sort orders the hits by the given fields instead of by relevance.
        TopDocs topDocs = searcher.search(query, num, sort);
        int length = topDocs.totalHits;
        System.out.println("Total hits: " + length);
        ScoreDoc[] scoreDocs = topDocs.scoreDocs;
        for (ScoreDoc scoreDoc : scoreDocs) {
            Document doc = searcher.doc(scoreDoc.doc);
            // Print at most the first 40 characters; shorter contents no longer throw.
            String contents = doc.get("contents");
            String preview = contents == null ? "" : contents.substring(0, Math.min(40, contents.length()));
            System.out.println(doc.get("id") + "---->"
                    + doc.get("filename") + "[" + doc.get("fullpath")
                    + "]-->\n" + preview);
        }
    } catch (CorruptIndexException e) {
        e.printStackTrace();
    } catch (IOException e) {
        e.printStackTrace();
    } catch (ParseException e) {
        e.printStackTrace();
    }
}

5.2 Filtering Search Results

@Test
public void searchByQueryParse() {
    SearchFilterOpera opera = new SearchFilterOpera("D:/luceneIndex/index", analyzer, true);
    // Alternative filters:
    // Filter filter = new TermRangeFilter("id", "3", "6", true, true);
    // Filter filter = new TermRangeFilter("filename", "b", "d", true, true);
    // NumericRangeFilter filter = NumericRangeFilter.newLongRange("size", 200L, 4700L, true, true);
    QueryWrapperFilter filter = new QueryWrapperFilter(new TermQuery(new Term("id", "3")));
    // opera.searchByQueryParse("filename:[a TO z]", null, 10);
    // opera.searchByQueryParse("filename:[a TO g]", termFilter, 10);
    opera.searchByQueryParse("id:{1 TO 9}", filter, 20);
    // opera.searchByQueryParse("filename:{a TO g}", 10);
    // Numeric ranges cannot be matched this way (extend the parser yourself)
    // opera.searchByQueryParse("size:[200 TO 13000]", 10);
    // Exact phrase match
    // opera.searchByQueryParse("contents:\"完全是宠溺\"", 10);
    // Phrase match with a slop (distance) of 1
    // opera.searchByQueryParse("contents:\"完全宠溺\"~1", 10);
    // Wildcard query
    // opera.searchByQueryParse("contents:*", 10);
}

public void searchByQueryParse(String queryContion, Filter filter, int num) {
    try {
        IndexSearcher searcher = new IndexSearcher(indexReader);
        QueryParser parser = new QueryParser(Version.LUCENE_35, "contents", analyzer);
        Query query = parser.parse(queryContion);
        System.out.println("Query type: " + query.getClass().getName() + "====>" + query.toString());
        // Only hits that also pass the filter are returned (a null filter means no filtering).
        TopDocs topDocs = searcher.search(query, filter, num);
        int length = topDocs.totalHits;
        System.out.println("Total hits: " + length);
        ScoreDoc[] scoreDocs = topDocs.scoreDocs;
        for (ScoreDoc scoreDoc : scoreDocs) {
            Document doc = searcher.doc(scoreDoc.doc);
            System.out.println(doc.get("id") + "---->"
                    + doc.get("filename") + "[" + doc.get("fullpath")
                    + "]-->\n");
        }
    } catch (CorruptIndexException e) {
        e.printStackTrace();
    } catch (IOException e) {
        e.printStackTrace();
    } catch (ParseException e) {
        e.printStackTrace();
    }
}
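The note in the test above that numeric ranges cannot be matched through the default parser comes down to how term ranges compare values: as strings, in lexicographic order. The following is a minimal plain-Java sketch of that comparison, not Lucene code; the class `TermRangeDemo` and the method `inTermRange` are hypothetical names introduced only for illustration, and the sketch assumes no collator is involved.

```java
class TermRangeDemo {
    // Hypothetical helper: decides range membership by comparing the raw term
    // text lexicographically, which is how a string-based term range behaves.
    static boolean inTermRange(String term, String lower, String upper,
                               boolean includeLower, boolean includeUpper) {
        int lo = term.compareTo(lower);
        int hi = term.compareTo(upper);
        boolean aboveLower = includeLower ? lo >= 0 : lo > 0;
        boolean belowUpper = includeUpper ? hi <= 0 : hi < 0;
        return aboveLower && belowUpper;
    }

    public static void main(String[] args) {
        // "10" sorts between "1" and "8" as text, although 10 > 8 numerically.
        System.out.println(inTermRange("10", "1", "8", true, true));    // true
        System.out.println(inTermRange("9", "1", "8", true, true));     // false
        // "200" sorts between "13" and "47" as text.
        System.out.println(inTermRange("200", "13", "47", true, true)); // true
    }
}
```

This is why the "size" field is filtered with NumericRangeFilter in the test, and why a range such as id 1 to 8 can also match ids like 10 to 14.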

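5.3 Custom Scoring

Procedure:

(1) Create a class that extends CustomScoreQuery.

(2) Override the getCustomScoreProvider method.

(3) Create a CustomScoreProvider class.

(4) Override the customScore method.

(5) Compute the score from a field value.

Note: the Similarity module is Lucene's module for controlling how search results are scored.

Weight (boost) control: boosts are written into the index at indexing time. At query time they are only read back and applied as a multiplier to raise the score of certain results.

Controller module: Lucene's module for controlling the ranking flow. Its interfaces let you filter and adjust results after they have been scored.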

package com.mzsx.custom.score;

import java.io.IOException;

import java.text.SimpleDateFormat;

import java.util.Date;

import org.apache.lucene.document.Document;

import org.apache.lucene.index.CorruptIndexException;

import org.apache.lucene.index.IndexReader;

import org.apache.lucene.index.Term;

import org.apache.lucene.search.FieldCache;

import org.apache.lucene.search.IndexSearcher;

import org.apache.lucene.search.Query;

import org.apache.lucene.search.ScoreDoc;

import org.apache.lucene.search.TermQuery;

import org.apache.lucene.search.TermRangeQuery;

import org.apache.lucene.search.TopDocs;

import org.apache.lucene.search.function.CustomScoreProvider;
import org.apache.lucene.search.function.CustomScoreQuery;
import org.apache.lucene.search.function.FieldScoreQuery;
import org.apache.lucene.search.function.FieldScoreQuery.Type;
import org.apache.lucene.search.function.ValueSourceQuery;

import org.apache.lucene.store.Directory;

import com.google.gson.Gson;

import com.mzsx.write.DirectoryConext;

public class MyScoreQuery {

    @SuppressWarnings("deprecation")
    public void searchByFileSizeQuery() {
        try {
            IndexSearcher searcher = new IndexSearcher(DirectoryConext.getDirectory("D:\\luceneIndex\\index"));
            Query q = new TermRangeQuery("id", "1", "8", true, true);
            // 1. Wrap the base query in the custom scoring query
            FilenameSizeQuery query = new FilenameSizeQuery(q);
            TopDocs tds = searcher.search(query, 100);
            SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd hh:mm:ss");
            for (ScoreDoc sd : tds.scoreDocs) {
                Document d = searcher.doc(sd.doc);
                System.out.println(sd.doc + ":(" + sd.score + ")" +
                        "[" + d.get("filename") + "【" + d.get("path") + "】--->" +
                        d.get("size") + "-----" + sdf.format(new Date(Long.valueOf(d.get("date")))) + "]");
            }
            searcher.close();
        } catch (CorruptIndexException e) {
            e.printStackTrace();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // -------------- Scoring by file size --------------------
    private class FilenameSizeQuery extends CustomScoreQuery {
        public FilenameSizeQuery(Query subQuery) {
            super(subQuery);
        }

        @Override
        protected CustomScoreProvider getCustomScoreProvider(IndexReader reader)
                throws IOException {
            return new FilenameSizeProvider(reader);
        }
    }

    private class FilenameSizeProvider extends CustomScoreProvider {
        long[] sizes = null;
        private Gson gson = new Gson();

        public FilenameSizeProvider(IndexReader reader) {
            super(reader);
            try {
                // Load the "size" value of every document from the field cache
                sizes = FieldCache.DEFAULT.getLongs(reader, "size");
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        @Override
        public float customScore(int doc, float subQueryScore, float valSrcScore)
                throws IOException {
            // How to get a field value for a given doc:
            /*
             * Until the reader is closed, field data is kept in a field cache,
             * and a lot of useful information can be read from it, for example
             * filenames = FieldCache.DEFAULT.getStrings(reader, "filename");
             * returns the values of the filename field for all documents.
             */
            System.out.println(gson.toJson(sizes));
            System.out.println("doc:" + doc + ",subQueryScore:" + subQueryScore + ",valSrcScore:" + valSrcScore);
            // Boost files larger than 14064 bytes, demote all others
            if (sizes[doc] > 14064) {
                return subQueryScore * 1.5F;
            }
            return subQueryScore / 1.2F;
        }
    }
}

Test

package com.mzsx.test;

import org.junit.Test;

import com.mzsx.custom.score.MyScoreQuery;

public class CustomScoreTest {

    @Test
    public void searchByFileSizeQuery() {
        MyScoreQuery myScoreQuery = new MyScoreQuery();
        myScoreQuery.searchByFileSizeQuery();
    }
}

Output

==================directory实例化=====================
[4919,1377,14064,735,4747,20107,278973,66596,224899,8348,1731,4322,12311,1011713,453783]
doc:1,subQueryScore:1.0,valSrcScore:1.0
[4919,1377,14064,735,4747,20107,278973,66596,224899,8348,1731,4322,12311,1011713,453783]
doc:2,subQueryScore:1.0,valSrcScore:1.0
[4919,1377,14064,735,4747,20107,278973,66596,224899,8348,1731,4322,12311,1011713,453783]
doc:3,subQueryScore:1.0,valSrcScore:1.0
[4919,1377,14064,735,4747,20107,278973,66596,224899,8348,1731,4322,12311,1011713,453783]
doc:4,subQueryScore:1.0,valSrcScore:1.0
[4919,1377,14064,735,4747,20107,278973,66596,224899,8348,1731,4322,12311,1011713,453783]
doc:5,subQueryScore:1.0,valSrcScore:1.0
[4919,1377,14064,735,4747,20107,278973,66596,224899,8348,1731,4322,12311,1011713,453783]
doc:6,subQueryScore:1.0,valSrcScore:1.0
[4919,1377,14064,735,4747,20107,278973,66596,224899,8348,1731,4322,12311,1011713,453783]
doc:7,subQueryScore:1.0,valSrcScore:1.0
[4919,1377,14064,735,4747,20107,278973,66596,224899,8348,1731,4322,12311,1011713,453783]
doc:8,subQueryScore:1.0,valSrcScore:1.0
[4919,1377,14064,735,4747,20107,278973,66596,224899,8348,1731,4322,12311,1011713,453783]
doc:10,subQueryScore:1.0,valSrcScore:1.0
[4919,1377,14064,735,4747,20107,278973,66596,224899,8348,1731,4322,12311,1011713,453783]
doc:11,subQueryScore:1.0,valSrcScore:1.0
[4919,1377,14064,735,4747,20107,278973,66596,224899,8348,1731,4322,12311,1011713,453783]
doc:12,subQueryScore:1.0,valSrcScore:1.0
[4919,1377,14064,735,4747,20107,278973,66596,224899,8348,1731,4322,12311,1011713,453783]
doc:13,subQueryScore:1.0,valSrcScore:1.0
[4919,1377,14064,735,4747,20107,278973,66596,224899,8348,1731,4322,12311,1011713,453783]
doc:14,subQueryScore:1.0,valSrcScore:1.0
5:(1.5)[JOHN.txt【null】--->20107-----2014-05-17 02:57:03]
6:(1.5)[yinwen.txt【null】--->278973-----2014-01-19 09:13:14]
7:(1.5)[【项目管理】项目应用系统开发安全管理规范(等保三级).txt【null】--->66596-----2014-07-05 12:29:38]
8:(1.5)[凤凰台.txt【null】--->224899-----2014-07-03 09:48:19]
13:(1.5)[湖边有棵许愿树.txt【null】--->1011713-----2014-07-05 12:29:15]
14:(1.5)[顾城诗歌全集_雨枫轩Rain8.com.txt【null】--->453783-----2014-07-03 09:48:36]
1:(0.8333333)[B+树.txt【null】--->1377-----2010-05-08 04:13:18]
2:(0.8333333)[hadoop.txt【null】--->14064-----2014-01-17 12:03:30]
3:(0.8333333)[hydra.txt【null】--->735-----2014-05-04 07:08:33]
4:(0.8333333)[ImbaMallLog.txt【null】--->4747-----2014-07-03 09:54:46]
10:(0.8333333)[无标题1.txt【null】--->1731-----2014-06-22 12:08:48]
11:(0.8333333)[树的基本概念.txt【null】--->4322-----2014-07-03 09:49:07]
12:(0.8333333)[汪国真诗集-雨枫轩Rain8.com.txt【null】--->12311-----2014-07-03 09:49:23]
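The ordering in this output follows directly from the size rule in customScore. As a rough illustration, the rule can be replayed in plain Java without Lucene; the class `SizeBoostDemo` and its methods are hypothetical names introduced here, and the sketch assumes every sub-query score is 1.0, as the log lines above show.

```java
import java.util.ArrayList;
import java.util.List;

class SizeBoostDemo {
    static final long SIZE_THRESHOLD = 14064L; // same threshold as in customScore

    // Same rule as FilenameSizeProvider.customScore: boost large files, demote the rest.
    static float customScore(long size, float subQueryScore) {
        return size > SIZE_THRESHOLD ? subQueryScore * 1.5F : subQueryScore / 1.2F;
    }

    // Returns document positions ordered by descending custom score.
    // List.sort is stable, so equal scores keep their original order.
    static List<Integer> rank(long[] sizes, float subQueryScore) {
        List<Integer> order = new ArrayList<>();
        for (int i = 0; i < sizes.length; i++) {
            order.add(i);
        }
        order.sort((a, b) -> Float.compare(
                customScore(sizes[b], subQueryScore),
                customScore(sizes[a], subQueryScore)));
        return order;
    }

    public static void main(String[] args) {
        long[] sizes = {1377L, 14064L, 20107L, 278973L}; // a few sizes taken from the output above
        for (int i : rank(sizes, 1.0F)) {
            System.out.println(sizes[i] + " -> " + customScore(sizes[i], 1.0F));
        }
    }
}
```

Note that hadoop.txt, at exactly 14064 bytes, is demoted because the comparison is strictly greater than.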

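5.4 Custom QueryParser

1. Disable query types that perform poorly (wildcard and fuzzy queries).

2. Extend the parser to support range queries on numeric and date fields.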

import java.text.SimpleDateFormat;

import java.util.regex.Pattern;

import org.apache.lucene.analysis.Analyzer;

import org.apache.lucene.queryParser.ParseException;
import org.apache.lucene.queryParser.QueryParser;
import org.apache.lucene.search.NumericRangeQuery;

import org.apache.lucene.search.TermRangeQuery;

import org.apache.lucene.util.Version;

public class CustomParser extends QueryParser {

    public CustomParser(Version matchVersion, String f, Analyzer a) {
        super(matchVersion, f, a);
    }

    @Override
    protected org.apache.lucene.search.Query getWildcardQuery(String field,
            String termStr) throws ParseException {
        throw new ParseException("Wildcard queries are disabled for performance reasons; please enter a more precise query");
    }

    @Override
    protected org.apache.lucene.search.Query getFuzzyQuery(String field,
            String termStr, float minSimilarity) throws ParseException {
        throw new ParseException("Fuzzy queries are disabled for performance reasons; please enter a more precise query");
    }

    @Override
    protected org.apache.lucene.search.Query getRangeQuery(String field,
            String part1, String part2, boolean inclusive)
            throws ParseException {
        if (field.equals("size")) {
            // "size" is indexed as a long (see getDocument in 5.6), so a long range is used here;
            // the original listing used newIntRange, which would not match long-encoded terms.
            return NumericRangeQuery.newLongRange(field, Long.parseLong(part1), Long.parseLong(part2), inclusive, inclusive);
        } else if (field.equals("date")) {
            String dateType = "yyyy-MM-dd";
            Pattern pattern = Pattern.compile("\\d{4}-\\d{2}-\\d{2}");
            if (pattern.matcher(part1).matches() && pattern.matcher(part2).matches()) {
                SimpleDateFormat sdf = new SimpleDateFormat(dateType);
                try {
                    // Dates are indexed as epoch milliseconds, so convert both bounds
                    long start = sdf.parse(part1).getTime();
                    long end = sdf.parse(part2).getTime();
                    return NumericRangeQuery.newLongRange(field, start, end, inclusive, inclusive);
                } catch (java.text.ParseException e) {
                    e.printStackTrace();
                }
            } else {
                throw new ParseException("Invalid date format; please use the form " + dateType);
            }
        }
        // All other fields fall back to the default string-based range query
        return super.newRangeQuery(field, part1, part2, inclusive);
    }
}
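The date branch above validates the shape of the input and converts it to epoch milliseconds. The following stand-alone sketch isolates that step so it can be tried without Lucene. The class `DateRangeDemo` and the method `toMillisRange` are hypothetical names, and two choices in it are assumptions that go beyond the listing: the time zone is pinned to UTC so the bounds are reproducible, and lenient parsing is switched off so that inputs such as 2014-13-45, which pass the regular expression, are rejected instead of being silently rolled over.

```java
import java.text.ParseException;
import java.text.SimpleDateFormat;
import java.util.TimeZone;
import java.util.regex.Pattern;

class DateRangeDemo {
    private static final Pattern DATE = Pattern.compile("\\d{4}-\\d{2}-\\d{2}");

    // Converts two yyyy-MM-dd strings into [startMillis, endMillis].
    static long[] toMillisRange(String part1, String part2) {
        if (!DATE.matcher(part1).matches() || !DATE.matcher(part2).matches()) {
            throw new IllegalArgumentException("expected dates in the form yyyy-MM-dd");
        }
        SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd");
        sdf.setTimeZone(TimeZone.getTimeZone("UTC")); // assumption: fixed zone
        sdf.setLenient(false);                        // assumption: reject impossible dates
        try {
            return new long[] {sdf.parse(part1).getTime(), sdf.parse(part2).getTime()};
        } catch (ParseException e) {
            throw new IllegalArgumentException("not a valid calendar date", e);
        }
    }

    public static void main(String[] args) {
        long[] range = toMillisRange("2014-01-01", "2014-07-05");
        System.out.println(range[0] + " .. " + range[1]);
    }
}
```

One thing to keep in mind with this conversion: the upper bound is midnight at the start of the second date, so even an inclusive range does not cover documents modified later on that day.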

5.5 Custom Filters

Procedure:

(1) Define a class that extends Filter.

(2) Override the getDocIdSet method.

(3) Populate the DocIdSet.

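package com.mzsx.custom.filter;

public interface FilterAccessor {
    // Field values that identify the documents to keep or drop
    public String[] values();

    // Name of the field the values belong to
    public String getField();

    // true: keep only the listed documents; false: keep everything except them
    public boolean set();
}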

package com.mzsx.custom.filter;

public class FilterAccessorImpl implements FilterAccessor {

    @Override
    public String[] values() {
        return new String[]{"3", "5", "7"};
    }

    @Override
    public boolean set() {
        return true;
    }

    @Override
    public String getField() {
        return "id";
    }
}

package com.mzsx.custom.filter;

import java.io.IOException;

import org.apache.lucene.index.IndexReader;

import org.apache.lucene.index.Term;

import org.apache.lucene.index.TermDocs;

import org.apache.lucene.search.DocIdSet;

import org.apache.lucene.search.Filter;

import org.apache.lucene.util.OpenBitSet;

import com.google.gson.Gson;

public class MyIDFilter extends Filter {

    private FilterAccessor accessor;

    public MyIDFilter(FilterAccessor accessor) {
        this.accessor = accessor;
    }

    @Override
    public DocIdSet getDocIdSet(IndexReader reader) throws IOException {
        // Create a bit set; all bits are 0 by default
        OpenBitSet obs = new OpenBitSet(reader.maxDoc());
        /*System.out.println("IndexReader:" + reader.document(1));
        System.out.println("OpenBitSet:" + new Gson().toJson(obs));
        System.out.println("OpenBitSet:" + new Gson().toJson(obs.getBits()));*/
        if (accessor.set()) {
            set(reader, obs);
        } else {
            clear(reader, obs);
        }
        return obs;
    }

    private void set(IndexReader reader, OpenBitSet obs) {
        try {
            int[] docs = new int[1];
            int[] freqs = new int[1];
            // Find the doc position of each id and set its bit to 1
            for (String delId : accessor.values()) {
                // Get the TermDocs for this term
                TermDocs tds = reader.termDocs(new Term(accessor.getField(), delId));
                // read() stores the matching doc positions in docs and the term
                // frequencies in freqs, and returns the number of entries read
                int count = tds.read(docs, freqs);
                if (count == 1) {
                    obs.set(docs[0]);
                }
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    private void clear(IndexReader reader, OpenBitSet obs) {
        try {
            // Start with every bit set
            obs.set(0, reader.maxDoc());
            int[] docs = new int[1];
            int[] freqs = new int[1];
            // Find the doc position of each id and set its bit to 0
            for (String delId : accessor.values()) {
                // Get the TermDocs for this term
                TermDocs tds = reader.termDocs(new Term(accessor.getField(), delId));
                System.out.println("----->" + tds.doc());
                // read() stores the matching doc positions in docs and the term
                // frequencies in freqs, and returns the number of entries read
                int count = tds.read(docs, freqs);
                if (count == 1) {
                    // Remove the document at this position
                    obs.clear(docs[0]);
                }
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
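The two modes of MyIDFilter (mark only the listed ids, or mark everything except them) can be tried out with java.util.BitSet in place of OpenBitSet. This is only a sketch of the bit manipulation, not of the Lucene Filter API: the class `IdBitSetDemo`, the method `buildBits`, and the `docIds` array standing in for the index are all hypothetical.

```java
import java.util.Arrays;
import java.util.BitSet;
import java.util.HashSet;
import java.util.Set;

class IdBitSetDemo {
    // docIds[i] is the "id" field value of document number i
    // (a hypothetical in-memory stand-in for the index).
    static BitSet buildBits(String[] docIds, String[] values, boolean include) {
        Set<String> wanted = new HashSet<>(Arrays.asList(values));
        BitSet bits = new BitSet(docIds.length);
        if (!include) {
            bits.set(0, docIds.length); // exclude mode: start with every bit set
        }
        for (int doc = 0; doc < docIds.length; doc++) {
            if (wanted.contains(docIds[doc])) {
                if (include) {
                    bits.set(doc);   // keep only the listed documents
                } else {
                    bits.clear(doc); // drop the listed documents
                }
            }
        }
        return bits;
    }

    public static void main(String[] args) {
        String[] docIds = {"0", "1", "2", "3", "4", "5", "6", "7", "8"};
        String[] values = {"3", "5", "7"};
        System.out.println(buildBits(docIds, values, true));  // {3, 5, 7}
        System.out.println(buildBits(docIds, values, false)); // {0, 1, 2, 4, 6, 8}
    }
}
```

A set bit means the document may appear in the results, so the filter narrows whatever the query itself matches.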

package com.mzsx.custom.filter;

import java.io.IOException;

import java.text.SimpleDateFormat;

import java.util.Date;

import org.apache.lucene.document.Document;

import org.apache.lucene.index.CorruptIndexException;

import org.apache.lucene.index.IndexReader;

import org.apache.lucene.index.Term;

import org.apache.lucene.search.IndexSearcher;

import org.apache.lucene.search.Query;

import org.apache.lucene.search.ScoreDoc;

import org.apache.lucene.search.TermQuery;

import org.apache.lucene.search.TermRangeQuery;

import org.apache.lucene.search.TopDocs;

import org.junit.Test;

import com.mzsx.write.DirectoryConext;

public class CustomFilter {

    @SuppressWarnings("deprecation")
    @Test
    public void searchByCustomFilter() {
        try {
            IndexSearcher searcher = new IndexSearcher(DirectoryConext.getDirectory("D:\\luceneIndex\\index"));
            // Query q = new TermQuery(new Term("contents", "台"));
            TermRangeQuery range = new TermRangeQuery("id", "1", "8", true, true);
            TopDocs tds = null;
            tds = searcher.search(range, new MyIDFilter(new FilterAccessorImpl())
                    /* Alternative: an anonymous accessor whose set() returns false
                    new MyIDFilter(new FilterAccessor() {
                        @Override
                        public String[] values() {
                            return new String[]{"3", "5", "7"};
                        }
                        @Override
                        public boolean set() {
                            return false;
                        }
                        @Override
                        public String getField() {
                            return "id";
                        }
                    })*/, 200);
            SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd hh:mm:ss");
            for (ScoreDoc sd : tds.scoreDocs) {
                Document d = searcher.doc(sd.doc);
                System.out.println(sd.doc + ":(" + sd.score + ")" +
                        "[" + d.get("filename") + "【" + d.get("path") + "】--->" +
                        d.get("size") + "------------>" + d.get("id"));
            }
            searcher.close();
        } catch (CorruptIndexException e) {
            e.printStackTrace();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}

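5.6 Near-Real-Time Search

If the index is modified but the changes are not followed by a commit or close, an ordinary searcher cannot see them, so near-real-time search is not possible that way. For this case we use NRTManager.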

package com.mzsx.nrtmanager;

import java.io.File;

import java.io.IOException;

import org.apache.commons.io.FileUtils;

import org.apache.lucene.analysis.Analyzer;

import org.apache.lucene.document.Document;

import org.apache.lucene.document.Field;

import org.apache.lucene.document.NumericField;

import org.apache.lucene.index.CorruptIndexException;

import org.apache.lucene.index.IndexReader;

import org.apache.lucene.index.IndexWriter;

import org.apache.lucene.index.IndexWriterConfig;

import org.apache.lucene.index.Term;

import org.apache.lucene.queryParser.ParseException;

import org.apache.lucene.queryParser.QueryParser;

import org.apache.lucene.search.BooleanQuery;

import org.apache.lucene.search.Filter;

import org.apache.lucene.search.FuzzyQuery;

import org.apache.lucene.search.IndexSearcher;

import org.apache.lucene.search.NRTManager;

import org.apache.lucene.search.NRTManagerReopenThread;

import org.apache.lucene.search.NumericRangeQuery;

import org.apache.lucene.search.PhraseQuery;

import org.apache.lucene.search.PrefixQuery;

import org.apache.lucene.search.Query;

import org.apache.lucene.search.ScoreDoc;

import org.apache.lucene.search.SearcherManager;

import org.apache.lucene.search.SearcherWarmer;

import org.apache.lucene.search.TermQuery;

import org.apache.lucene.search.TermRangeQuery;

import org.apache.lucene.search.TopDocs;

import org.apache.lucene.search.WildcardQuery;

import org.apache.lucene.search.BooleanClause.Occur;

import org.apache.lucene.store.Directory;

import org.apache.lucene.util.Version;

import com.mzsx.index.IndexReaderContext;

import com.mzsx.write.DirectoryConext;

import com.mzsx.write.IndexWriterContext;

public class NRTManagerOpera {

    private String fileName = "";
    private Directory directory = null;
    private IndexWriter indexWriter = null;
    private IndexReader indexReader = null;
    private Analyzer analyzer = null;
    private NRTManager nrtManager = null;
    private int id = 0;
    private NRTManagerReopenThread nReopenThread = null;
    private SearcherManager searcherManager = null;

    public NRTManagerOpera(String fileName, Analyzer analyzer) {
        this.fileName = fileName;
        this.analyzer = analyzer;
        directory = DirectoryConext.getDirectory(fileName);
        indexWriter = IndexWriterContext.getIndexWrite(directory, analyzer);
        try {
            // indexWriter = new IndexWriter(directory, new IndexWriterConfig(Version.LUCENE_35, analyzer));
            nrtManager = new NRTManager(indexWriter, new SearcherWarmer() {
                @Override
                public void warm(IndexSearcher s) throws IOException {
                    System.out.println("reopenIndexSearcher");
                }
            });
            // Create the NRTManager reopen thread
            nReopenThread = new NRTManagerReopenThread(nrtManager, 5.0D, 0.025D);
            nReopenThread.setDaemon(true);
            nReopenThread.setName("nReopenThread");
            // The thread must be started, otherwise nothing is ever reopened
            nReopenThread.start();
            searcherManager = nrtManager.getSearcherManager(true);
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Build the index
    public void createdIndex(String fName) {
        try {
            nrtManager.deleteAll();
            File file = new File(fName);
            if (!file.isDirectory()) {
                // Stop here; the original listing only printed a stack trace and then
                // failed on listFiles(). The exception is reported by the catch below.
                throw new IllegalArgumentException("The given path is not a directory: " + fName);
            }
            for (File f : file.listFiles()) {
                Document doc = getDocument(f);
                nrtManager.addDocument(doc);
            }
            // indexWriter.commit();
        } catch (CorruptIndexException e) {
            e.printStackTrace();
        } catch (IOException e) {
            e.printStackTrace();
        } catch (Exception e) {
            e.printStackTrace();
        }
    }

    // Delete the documents whose field has the given value
    public void deleteByIndexWriter(String field, String value) {
        try {
            nrtManager.deleteDocuments(new Term(field, value));
            // indexWriter.commit();
            // indexWriter.close();
        } catch (CorruptIndexException e) {
            e.printStackTrace();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Update the index
    public void update(String field, String name) {
        Document docu = new Document();
        docu.add(new Field("id", "2222", Field.Store.YES,
                Field.Index.NOT_ANALYZED));
        docu.add(new Field("contents", "modified file contents", Field.Store.NO,
                Field.Index.ANALYZED_NO_NORMS));
        docu.add(new Field("filename", "modified file name", Field.Store.YES,
                Field.Index.NOT_ANALYZED));
        docu.add(new Field("fullpath", "modified file path", Field.Store.YES,
                Field.Index.NOT_ANALYZED));
        try {
            nrtManager.updateDocument(new Term(field, name), docu, analyzer);
            // indexWriter.commit();
        } catch (CorruptIndexException e) {
            e.printStackTrace();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Commit pending changes to disk
    public void commit() {
        try {
            indexWriter.commit();
        } catch (CorruptIndexException e) {
            e.printStackTrace();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Range query
    public void searchByTermRange(String field, String lowerTerm,
            String upperTerm, int num) {
        // Acquire the current searcher; it must be released in the finally block
        IndexSearcher searcher = searcherManager.acquire();
        try {
            TermRangeQuery range = new TermRangeQuery(field, lowerTerm,
                    upperTerm, true, true);
            TopDocs topDocs = searcher.search(range, num);
            int length = topDocs.totalHits;
            System.out.println("Total hits: " + length);
            ScoreDoc[] scoreDocs = topDocs.scoreDocs;
            for (ScoreDoc scoreDoc : scoreDocs) {
                Document doc = searcher.doc(scoreDoc.doc);
                System.out.println(doc.get("id") + "---->"
                        + doc.get("filename") + "[" + doc.get("fullpath")
                        + "]-->\n");
            }
        } catch (CorruptIndexException e) {
            e.printStackTrace();
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            try {
                searcherManager.release(searcher);
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

    // Build a Document from a file
    protected Document getDocument(File f) throws Exception {
        // System.out.println(FileUtils.readFileToString(f));
        Document doc = new Document();
        doc.add(new Field("id", ("" + (id++)), Field.Store.YES,
                Field.Index.NOT_ANALYZED));
        doc.add(new Field("contents", FileUtils.readFileToString(f),
                Field.Store.YES, Field.Index.ANALYZED_NO_NORMS));
        doc.add(new Field("filename", f.getName(), Field.Store.YES,
                Field.Index.ANALYZED));
        doc.add(new Field("fullpath", f.getCanonicalPath(), Field.Store.YES,
                Field.Index.NOT_ANALYZED));
        // size and date are indexed as numeric (long) fields
        doc.add(new NumericField("size", Field.Store.YES, true).setLongValue(f
                .length()));
        doc.add(new NumericField("date", Field.Store.YES, true).setLongValue(f
                .lastModified()));
        return doc;
    }

}
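The acquire/release pattern in searchByTermRange and the need for a reopen thread both rest on one idea: a searcher works on a point-in-time view, and new documents become visible only when a fresh view is published. The toy model below illustrates that idea in plain Java. It is not how Lucene implements NRTManager; the class `NrtModelDemo` and its methods are hypothetical names that only loosely mirror addDocument, the reopen step, and acquire.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

class NrtModelDemo {
    // Writer side: documents added but not yet visible to searchers.
    private final List<String> pending = new ArrayList<>();
    // Searcher side: the point-in-time view that searches run against.
    private volatile List<String> snapshot = Collections.emptyList();

    synchronized void addDocument(String doc) {
        pending.add(doc);
    }

    // Publishes a new immutable view; similar in spirit to a reopen.
    synchronized void reopen() {
        snapshot = Collections.unmodifiableList(new ArrayList<>(pending));
    }

    // Returns the current view; a view obtained earlier never changes.
    List<String> acquire() {
        return snapshot;
    }

    public static void main(String[] args) {
        NrtModelDemo index = new NrtModelDemo();
        index.addDocument("doc-1");
        List<String> before = index.acquire();
        System.out.println("visible before reopen: " + before.size());
        index.reopen();
        System.out.println("visible after reopen: " + index.acquire().size());
        System.out.println("old view still sees: " + before.size());
    }
}
```

In the class above the periodic reopen is done by NRTManagerReopenThread, which is why the comment in the constructor stresses that the thread has to be started.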