Distributed installation of Hadoop 2.4.1 with HBase 0.94.23

Installing fully distributed hadoop-2.4.1 together with hbase-0.94.23 on CentOS 6.5 x86 virtual machines

I. Environment preparation: the following 3 virtual machines:

192.168.1.105 nn namenode

192.168.1.106 sn secondarynamenode/datanode

192.168.1.107 dn1 datanode

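Requirements:

1. Every node can ping the others by hostname:

Note: it is best to delete the IPv6 (::1) line from /etc/hosts; otherwise errors may occur.

[root@nn ~]# cat /etc/hosts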

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

192.168.1.105 nn

192.168.1.106 sn

192.168.1.107 dn1

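2. Passwordless SSH between nodes

3. A Java environment (every node must use the same JAVA_HOME path; in general, everything should be identical across nodes)

4. Clocks on all nodes must be synchronized

Passwordless login (shown on dn1; repeat on every node so that each node can log in to every other node and to itself):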
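A quick way to verify requirement 1 on each node is to check that every cluster hostname appears in /etc/hosts and does not point at a loopback address. The following is a minimal bash sketch; `check_hosts` is a hypothetical helper (not part of Hadoop), and it assumes names are resolved through /etc/hosts, where the first line listing a name wins (the usual glibc behavior).

```bash
#!/usr/bin/env bash
# check_hosts FILE HOST...
# For each HOST, find the first line of the hosts-style FILE that lists it and
# report its address. Returns 1 if a host is missing or maps to a loopback
# address, because a daemon bound there is unreachable from the other nodes.
check_hosts() {
  local file=$1 host ip rc=0
  shift
  for host in "$@"; do
    ip=$(awk -v h="$host" '
      /^[[:space:]]*#/ { next }                      # skip comment lines
      { for (i = 2; i <= NF; i++) if ($i == h) { print $1; exit } }
    ' "$file")
    if [ -z "$ip" ]; then
      echo "MISSING  $host"; rc=1
    elif [[ $ip == 127.* || $ip == ::1 ]]; then
      echo "LOOPBACK $host -> $ip"; rc=1
    else
      echo "OK       $host -> $ip"
    fi
  done
  return "$rc"
}
```

Usage on each node: `check_hosts /etc/hosts nn sn dn1`. A line such as `127.0.0.1 nn localhost` placed before the real mapping is reported as LOOPBACK.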

[root@dn1 ~]# ssh-keygen -t rsa -P ''

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

a7:16:b7:43:ea:1b:91:f0:c5:ad:e2:ae:16:b7:03:07 root@dn1

The key's randomart image is: (randomart image omitted)

[root@dn1 ~]# ssh-copy-id -i root@localhost

The authenticity of host 'localhost (127.0.0.1)' can't be established.

RSA key fingerprint is 8b:26:11:0f:ec:9d:0f:58:e2:8f:fd:e2:cf:00:d4:1d.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'localhost' (RSA) to the list of known hosts.

root@localhost's password:

Now try logging into the machine, with "ssh 'root@localhost'", and check in: .ssh/authorized_keys to make sure we haven't added extra keys that you weren't expecting.

[root@dn1 ~]# ssh-copy-id -i root@nn

The authenticity of host 'nn (192.168.1.105)' can't be established.

RSA key fingerprint is 8b:26:11:0f:ec:9d:0f:58:e2:8f:fd:e2:cf:00:d4:1d.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'nn,192.168.1.105' (RSA) to the list of known hosts.

root@nn's password:

Now try logging into the machine, with "ssh 'root@nn'", and check in: .ssh/authorized_keys to make sure we haven't added extra keys that you weren't expecting.

Verification:

[root@nn ~]# ssh sn

Last login: Tue Sep 16 07:18:38 2014 from dn1

[root@sn ~]# ssh dn1

Last login: Thu Sep 18 11:18:03 2014 from dn1

[root@dn1 ~]# ssh nn

Last login: Tue Sep 16 21:10:05 2014 from dn1

[root@nn ~]#
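Requirements 2 and 4 can also be checked non-interactively from each node. This is a minimal bash sketch; `verify_ssh` and `clock_skew` are hypothetical helpers. `BatchMode=yes` makes ssh fail instead of prompting, so a missing key shows up as FAIL rather than a password prompt. Clock agreement matters later too: by default HBase rejects a RegionServer whose clock differs from the master's by more than `hbase.master.maxclockskew` (30 seconds).

```bash
#!/usr/bin/env bash
# verify_ssh HOST...
# BatchMode=yes makes ssh fail instead of prompting for a password, so any
# host without key-based login is reported as FAIL. Returns 1 on any failure.
verify_ssh() {
  local h rc=0
  for h in "$@"; do
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "$h" true; then
      echo "OK   $h"
    else
      echo "FAIL $h"; rc=1
    fi
  done
  return "$rc"
}

# clock_skew HOST...
# Print the spread (max - min) of each host's `date +%s`, in seconds.
# Hosts are queried one after another, so the result also includes ssh latency.
clock_skew() {
  local h t min="" max=""
  for h in "$@"; do
    t=$(ssh -o BatchMode=yes "$h" date +%s) || return 1
    if [ -z "$min" ] || [ "$t" -lt "$min" ]; then min=$t; fi
    if [ -z "$max" ] || [ "$t" -gt "$max" ]; then max=$t; fi
  done
  echo $(( max - min ))
}
```

Usage on every node: `verify_ssh localhost nn sn dn1 && clock_skew nn sn dn1`. The skew is only accurate to roughly one ssh round trip per host.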

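II. Install distributed Hadoop

Make sure the Hadoop cluster works correctly before integrating HBase; otherwise you will run into many problems.

1. Download the release tarballs and extract them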

sftp> put hadoop-2.4.1.tar.gz hbase-0.94.23.tar.gz

Uploading hadoop-2.4.1.tar.gz to /root/hadoop-2.4.1.tar.gz

100% 135406KB 5416KB/s 00:00:25

Uploading hbase-0.94.23.tar.gz to /root/hbase-0.94.23.tar.gz

100% 57773KB 4814KB/s 00:00:12

sftp>

[root@nn ~]# tar -zxf hadoop-2.4.1.tar.gz -C /usr/local/

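2. Edit the Hadoop configuration files (in /usr/local/hadoop-2.4.1/etc/hadoop/)

The configuration files in Hadoop 2.x differ from those in 1.x. In Hadoop 2.x: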

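- The $HADOOP_HOME/src directory that 1.x shipped, which contained the default values of the key configuration files, has been removed;

- There is no mapred-site.xml by default: copy mapred-site.xml.template to mapred-site.xml. The template is just an empty file containing a configuration element;

- There is no mapred-queues.xml by default: copy mapred-queues.xml.template to mapred-queues.xml;

- The masters file has been removed; the Secondary NameNode host is now set in hdfs-site.xml through the dfs.namenode.secondary.http-address property, as follows (pay attention to this):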

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>sn:9001</value>

</property>

Here is my configuration:

(1)hadoop-env.sh

export JAVA_HOME=/usr/java/jdk1.7.0_67/

(2)core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://nn:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/tmpdir</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>102400</value>

</property>

</configuration>

(3)hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>sn:9001</value>

</property>

</configuration>

(4) mapred-site.xml, created from the template:

[root@dn1 hadoop]# cp mapred-site.xml.template mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

(5)yarn-site.xml

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>nn</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>
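To double-check what each file actually sets (for example after copying the configuration to the other nodes), a property can be read straight out of these XML files. A minimal sketch, assuming GNU sed and the simple `<property><name>...</name><value>...</value></property>` layout used above; `get_conf` is a hypothetical helper and not a real XML parser (it does not understand XML comments, so a commented-out property could still be matched). Once Hadoop is on the PATH, `hdfs getconf -confKey fs.defaultFS` reports the effective value, defaults included.

```bash
#!/usr/bin/env bash
# get_conf FILE KEY
# Print the <value> of the <property> whose <name> is exactly KEY.
get_conf() {
  local file=$1 key=$2
  # Join the file into one line, then put each </property> at a line end,
  # so every property (with its name and value) sits on its own line.
  tr -d '\n' < "$file" | sed 's#</property>#&\n#g' |
    awk -v k="$key" '
      match($0, /<name>[^<]*<\/name>/) {
        name = substr($0, RSTART + 6, RLENGTH - 13)   # strip <name></name>
        if (name == k && match($0, /<value>[^<]*<\/value>/)) {
          print substr($0, RSTART + 7, RLENGTH - 15)  # strip <value></value>
          exit
        }
      }'
}
```

Usage: `get_conf /usr/local/hadoop-2.4.1/etc/hadoop/core-site.xml fs.defaultFS` should print `hdfs://nn:9000` with the configuration above; running it over ssh on each node is a cheap way to spot a node with stale files.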

3. Configure HADOOP_HOME (absolute paths also work; this is just for convenience)

[root@nn hadoop]# cat /etc/profile.d/hadoop.sh

HADOOP_HOME=/usr/local/hadoop-2.4.1

PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

export HADOOP_HOME PATH

[root@nn hadoop]# source /etc/profile

[root@nn hadoop-2.4.1]# hadoop version

Hadoop 2.4.1

Subversion http://svn.apache.org/repos/asf/hadoop/common -r 1604318

Compiled by jenkins on 2014-06-21T05:43Z

Compiled with protoc 2.5.0

From source with checksum bb7ac0a3c73dc131f4844b873c74b630

This command was run using /usr/local/hadoop-2.4.1/share/hadoop/common/hadoop-common-2.4.1.jar
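Every node needs the same Hadoop directory, configuration and environment (requirement 3). One way to push them from nn is sketched below, assuming the passwordless root SSH set up earlier, identical paths on all nodes, and that it runs before the first start so no logs are copied along; `sync_hadoop` and `HADOOP_DIR` are hypothetical names, not part of Hadoop.

```bash
#!/usr/bin/env bash
# Directory to copy; override by setting HADOOP_DIR before calling.
HADOOP_DIR=${HADOOP_DIR:-/usr/local/hadoop-2.4.1}

# sync_hadoop HOST...
# Copy the Hadoop directory (including etc/hadoop) to /usr/local and the
# profile script to /etc/profile.d on each HOST; returns 1 if any copy failed.
sync_hadoop() {
  local h rc=0
  for h in "$@"; do
    echo "syncing to $h"
    if ! { scp -rq "$HADOOP_DIR" "root@$h:/usr/local/" &&
           scp -q /etc/profile.d/hadoop.sh "root@$h:/etc/profile.d/"; }; then
      echo "copy to $h failed" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Usage on nn: `sync_hadoop sn dn1`. For later configuration changes, copying only `$HADOOP_DIR/etc/hadoop` is enough.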

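4. Format the NameNode and start Hadoop (in 2.x, `hdfs namenode -format` is the preferred form of the deprecated `hadoop namenode -format`):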

[root@nn hadoop]# hadoop namenode -format

.........

14/09/18 13:41:11 INFO util.GSet: capacity = 2^16 = 65536 entries

14/09/18 13:41:11 INFO namenode.AclConfigFlag: ACLs enabled? false

14/09/18 13:41:11 INFO namenode.FSImage: Allocated new BlockPoolId: BP-1418484038-127.0.0.1-1411018871656

14/09/18 13:41:11 INFO common.Storage: Storage directory /tmpdir/dfs/name has been successfully formatted.

14/09/18 13:41:12 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

14/09/18 13:41:12 INFO util.ExitUtil: Exiting with status 0

14/09/18 13:41:12 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at nn/127.0.0.1

************************************************************/

[root@nn hadoop]# start-dfs.sh

Starting namenodes on [nn]

nn: starting namenode, logging to /usr/local/hadoop-2.4.1/logs/hadoop-root-namenode-nn.out

sn: starting datanode, logging to /usr/local/hadoop-2.4.1/logs/hadoop-root-datanode-sn.out

dn1: starting datanode, logging to /usr/local/hadoop-2.4.1/logs/hadoop-root-datanode-dn1.out

Starting secondary namenodes [sn]

sn: starting secondarynamenode, logging to /usr/local/hadoop-2.4.1/logs/hadoop-root-secondarynamenode-sn.out

[root@nn hadoop]# start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /usr/local/hadoop-2.4.1/logs/yarn-root-resourcemanager-nn.out

dn1: starting nodemanager, logging to /usr/local/hadoop-2.4.1/logs/yarn-root-nodemanager-dn1.out

sn: starting nodemanager, logging to /usr/local/hadoop-2.4.1/logs/yarn-root-nodemanager-sn.out

[root@nn hadoop]# jps

10487 Jps

10206 ResourceManager

9983 NameNode

[root@nn hadoop]#

[root@sn hadoop-2.4.1]# jps

4863 DataNode

5182 Jps

4932 SecondaryNameNode

5013 NodeManager

[root@dn1 hadoop-2.4.1]# jps

4401 NodeManager

4319 DataNode

4552 Jps
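Comparing `jps` output against the daemons each node should run (NameNode and ResourceManager on nn; DataNode, SecondaryNameNode and NodeManager on sn; DataNode and NodeManager on dn1) is easy to script. A minimal sketch; `missing_daemons` is a hypothetical helper that reads `jps` output on stdin.

```bash
#!/usr/bin/env bash
# missing_daemons EXPECTED... < jps-output
# jps prints "PID Name" per line; print every EXPECTED name that is not
# running and return 1 if anything is missing.
missing_daemons() {
  local running d rc=0
  running=$(awk '{ print $2 }')          # keep only the process names
  for d in "$@"; do
    if ! grep -qx "$d" <<< "$running"; then
      echo "$d"; rc=1
    fi
  done
  return "$rc"
}
```

Usage on sn: `jps | missing_daemons DataNode SecondaryNameNode NodeManager`. From nn, something like `ssh dn1 /usr/java/jdk1.7.0_67/bin/jps | missing_daemons DataNode NodeManager` should work; the full path is used because a non-interactive ssh session may not have the JDK on its PATH.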

5. Test Hadoop and make sure the following works without errors:


MapReduce test (the input file /test/NOTICE.txt must already exist in HDFS):

[root@nn hadoop-2.4.1]# hadoop jar ./share/hadoop/mapreduce/hadoop-mapreduce-examples-2.4.1.jar wordcount /test/NOTICE.txt /test/wordcount.out

14/09/18 22:43:29 INFO client.RMProxy: Connecting to ResourceManager at nn/192.168.1.105:8032

14/09/18 22:43:31 INFO input.FileInputFormat: Total input paths to process : 1

14/09/18 22:43:31 INFO mapreduce.JobSubmitter: number of splits:1

14/09/18 22:43:32 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1411050377567_0001

14/09/18 22:43:33 INFO impl.YarnClientImpl: Submitted application application_1411050377567_0001

14/09/18 22:43:33 INFO mapreduce.Job: The url to track the job: http://nn:8088/proxy/application_1411050377567_0001/

14/09/18 22:43:33 INFO mapreduce.Job: Running job: job_1411050377567_0001

14/09/18 22:43:49 INFO mapreduce.Job: Job job_1411050377567_0001 running in uber mode : false

14/09/18 22:43:49 INFO mapreduce.Job: map 0% reduce 0%

14/09/18 22:44:04 INFO mapreduce.Job: map 100% reduce 0%

14/09/18 22:44:13 INFO mapreduce.Job: map 100% reduce 100%

14/09/18 22:44:15 INFO mapreduce.Job: Job job_1411050377567_0001 completed successfully

14/09/18 22:44:15 INFO mapreduce.Job: Counters: 49

File System Counters
FILE: Number of bytes read=173
FILE: Number of bytes written=185967
FILE: Number of read operations=0
FILE: Number of large read operations=0
FILE: Number of write operations=0
HDFS: Number of bytes read=196
HDFS: Number of bytes written=123
HDFS: Number of read operations=6
HDFS: Number of large read operations=0
HDFS: Number of write operations=2

Job Counters
Launched map tasks=1
Launched reduce tasks=1
Data-local map tasks=1
Total time spent by all maps in occupied slots (ms)=13789
Total time spent by all reduces in occupied slots (ms)=6376
Total time spent by all map tasks (ms)=13789
Total time spent by all reduce tasks (ms)=6376
Total vcore-seconds taken by all map tasks=13789
Total vcore-seconds taken by all reduce tasks=6376
Total megabyte-seconds taken by all map tasks=14119936
Total megabyte-seconds taken by all reduce tasks=6529024

Map-Reduce Framework
Map input records=2
Map output records=11
Map output bytes=145
Map output materialized bytes=173
Input split bytes=95
Combine input records=11
Combine output records=11
Reduce input groups=11
Reduce shuffle bytes=173
Reduce input records=11
Reduce output records=11
Spilled Records=22
Shuffled Maps =1
Failed Shuffles=0
Merged Map outputs=1
GC time elapsed (ms)=439
CPU time spent (ms)=2310
Physical memory (bytes) snapshot=210075648
Virtual memory (bytes) snapshot=794005504
Total committed heap usage (bytes)=125480960

Shuffle Errors
BAD_ID=0
CONNECTION=0
IO_ERROR=0
WRONG_LENGTH=0
WRONG_MAP=0
WRONG_REDUCE=0

File Input Format Counters
Bytes Read=101

File Output Format Counters
Bytes Written=123

[root@nn hadoop-2.4.1]#
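The job result can be cross-checked against a local count of the same file. The WordCount example's mapper splits each line with Java's StringTokenizer (space, tab, newline, carriage return, form feed), and the single reducer writes `word<TAB>count` lines sorted by the raw bytes of the key, so a pipeline of standard tools should reproduce part-r-00000. A minimal sketch; `local_wordcount` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# local_wordcount FILE
# Split FILE on space/tab/CR/FF/newline, drop empty tokens, sort in byte
# order (LC_ALL=C) and print "word<TAB>count" like WordCount's part-r-00000.
local_wordcount() {
  tr -s ' \t\r\f' '\n' < "$1" | grep -v '^$' | LC_ALL=C sort | uniq -c |
    awk '{ print $2 "\t" $1 }'
}
```

Assuming the input is the NOTICE.txt shipped in the Hadoop directory, it would be uploaded with `hdfs dfs -mkdir -p /test` and `hdfs dfs -put $HADOOP_HOME/NOTICE.txt /test/`, and the results compared with `diff <(local_wordcount $HADOOP_HOME/NOTICE.txt) <(hdfs dfs -cat /test/wordcount.out/part-r-00000)`. The output directory must not exist when a job is submitted, so remove it with `hdfs dfs -rm -r /test/wordcount.out` before rerunning.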

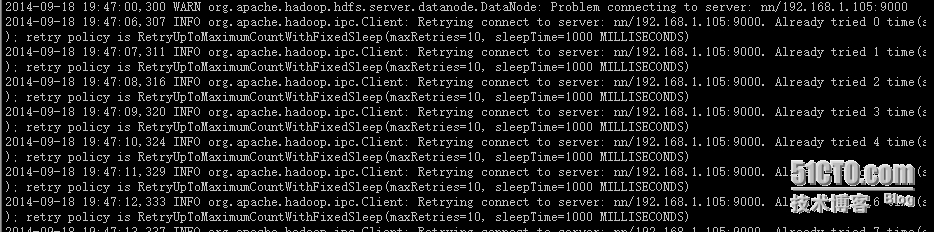

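PS: During installation I hit an error that took me a long time to sort out:

Both datanode logs kept repeating the following message; apparently they could not connect to the namenode: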

INFO org.apache.hadoop.ipc.Client: Retrying connect to server: nn/192.168.1.105:9000. Already tried 6 time(s

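In the end, editing /etc/hosts fixed it, although I did not know why. A likely explanation: the format output above reports "Shutting down NameNode at nn/127.0.0.1", meaning the hostname nn resolved to the loopback address on the NameNode host. The NameNode then listens on 127.0.0.1:9000, which the datanodes on other machines cannot reach; once nn maps only to 192.168.1.105, it listens on the LAN address.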
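When datanodes keep retrying the connection, it helps to see which address the NameNode RPC port (9000, from fs.defaultFS) is actually bound to on nn. A minimal sketch that reads `netstat -tln` output (net-tools, normally present on CentOS 6); `nn_listen_addrs` is a hypothetical helper. A listener on 127.0.0.1:9000 alone is reachable only from nn itself.

```bash
#!/usr/bin/env bash
# nn_listen_addrs PORT < netstat-tln-output
# Print the local addresses listening on PORT. Returns 1 if nothing listens
# there or if every listener is a loopback address (127.x or ::1).
nn_listen_addrs() {
  local port=$1 addrs
  # Column 4 is "Local Address", column 6 the state; the port follows the last ':'.
  addrs=$(awk -v p="$port" '$6 == "LISTEN" { n = split($4, a, ":"); if (a[n] == p) print $4 }')
  if [ -z "$addrs" ]; then
    echo "nothing listening on port $port"
    return 1
  fi
  echo "$addrs"
  # Succeed only if at least one address is not loopback.
  grep -qvE '^(127\.|::1:)' <<< "$addrs"
}
```

Usage on nn: `netstat -tln | nn_listen_addrs 9000`. With the corrected /etc/hosts it should list 192.168.1.105:9000.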

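III. Install ZooKeeper:

In a cluster, HBase relies on ZooKeeper for coordination between nodes;

Download and extract the tarball (ZooKeeper runs on all three nodes):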

[root@nn ~]# wget http://mirror.bit.edu.cn/apache/zookeeper/stable/zookeeper-3.4.6.tar.gz

[root@nn ~]# tar -zxvf zookeeper-3.4.6.tar.gz -C /usr/local/

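Create the data directory (used as dataDir below):

[root@nn ~]# mkdir -p /tmpdir/zookeeperdata/

Edit the configuration file conf/zoo.cfg (it can be created by copying conf/zoo_sample.cfg; use the same file on every node):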

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/tmpdir/zookeeperdata/

dataLogDir=/usr/local/zookeeper-3.4.6/logdir

clientPort=2181

server.1=nn:2888:3888

server.2=sn:2888:3888

server.3=dn1:2888:3888

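Create a myid file in the dataDir (/tmpdir/zookeeperdata/) on each node.

The id is the x of the server.x entry for that node in the configuration file, and the myid file contains only this number (1 on nn, 2 on sn, 3 on dn1).

Start the service (on every node):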
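Since the id must match the server.N entry for the node's own hostname, the myid file can be derived from zoo.cfg instead of typed by hand. A minimal sketch, assuming `hostname` on each node prints the same short name used in zoo.cfg (nn, sn, dn1); `zk_myid` and `write_myid` are hypothetical helpers.

```bash
#!/usr/bin/env bash
# zk_myid CFG HOST
# Print N from the "server.N=HOST:port:port" line of CFG whose host is HOST.
zk_myid() {
  awk -F'[=:]' -v h="$2" '
    $1 ~ /^server\.[0-9]+$/ && $2 == h { sub(/^server\./, "", $1); print $1; exit }
  ' "$1"
}

# write_myid CFG HOST DATADIR
# Write HOST's id into DATADIR/myid; fail if CFG has no entry for HOST.
write_myid() {
  local id
  id=$(zk_myid "$1" "$2")
  if [ -z "$id" ]; then
    echo "no server.N entry for $2 in $1" >&2
    return 1
  fi
  mkdir -p "$3" && echo "$id" > "$3/myid"
}
```

Usage on each node: `write_myid /usr/local/zookeeper-3.4.6/conf/zoo.cfg "$(hostname)" /tmpdir/zookeeperdata`, which with the configuration above should write 1 on nn, 2 on sn and 3 on dn1.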

[root@nn zookeeper-3.4.6]# ./bin/zkServer.sh start

JMX enabled by default

Using config: /usr/local/zookeeper-3.4.6/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[root@nn zookeeper-3.4.6]# jps

13437 Jps

11535 NameNode

13413 QuorumPeerMain

11756 ResourceManager

[root@nn zookeeper-3.4.6]# ./bin/zkServer.sh status

JMX enabled by default

Using config: /usr/local/zookeeper-3.4.6/bin/../conf/zoo.cfg

Mode: follower

Check the leader election result on the other nodes:

[root@sn zookeeper-3.4.6]# zkServer.sh status

JMX enabled by default

Using config: /usr/local/zookeeper-3.4.6/bin/../conf/zoo.cfg

Mode: leader

[root@dn1 zookeeper-3.4.6]# zkServer.sh status

JMX enabled by default

Using config: /usr/local/zookeeper-3.4.6/bin/../conf/zoo.cfg

Mode: follower
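Instead of logging in to each node, the same information can be collected from one place with ZooKeeper's `stat` four-letter command, which a server answers on its client port (2181 here) with output that includes a `Mode:` line. A minimal sketch, assuming `nc` is installed; `zk_mode` and `zk_check_quorum` are hypothetical helpers. A healthy three-node ensemble should report exactly one leader and two followers, as above.

```bash
#!/usr/bin/env bash
# zk_mode HOST [PORT]
# Send "stat" to a ZooKeeper server and print the value of its "Mode:" line.
zk_mode() {
  echo stat | nc "$1" "${2:-2181}" | awk -F': ' '/^Mode:/ { print $2 }'
}

# zk_check_quorum HOST...
# Print each server's mode; succeed only if exactly one reports "leader"
# and all the others report "follower".
zk_check_quorum() {
  local h mode leaders=0 rc=0
  for h in "$@"; do
    mode=$(zk_mode "$h")
    echo "$h: ${mode:-unreachable}"
    case $mode in
      leader) leaders=$((leaders + 1)) ;;
      follower) ;;
      *) rc=1 ;;
    esac
  done
  [ "$leaders" -eq 1 ] || rc=1
  return "$rc"
}
```

Usage from any node: `zk_check_quorum nn sn dn1`.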

IV. Integrate the HBase cluster