[学神-RHEL7] 1-17: The ssm storage manager and disk quotas

Topics covered in this section:

Basic steps for creating LVM storage

pvcreate vgcreate lvcreate

Inspecting LVM

pvs pvscan pvdisplay

vgs vgscan vgdisplay

lvs lvscan lvdisplay

Shrinking LVM

1) First create an LV

[root@localhost ~]# vgcreate vg1 /dev/sdb{1,2}

[root@localhost ~]# lvcreate -n lv1 -L 1.5G vg1

Logical volume "lv1" created.

Shrinking an LV

lv1 holds no filesystem yet, so it can be reduced directly. If an LV already holds a filesystem, the filesystem has to be shrunk first, and XFS cannot be shrunk at all.

[root@localhost ~]# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

root rhel -wi-ao---- 10.00g

swap rhel -wi-ao---- 2.00g

lv1 vg1 -wi-a----- 1.50g

[root@localhost ~]# lvreduce -L 1G /dev/vg1/lv1

[root@localhost ~]# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

root rhel -wi-ao---- 10.00g

swap rhel -wi-ao---- 2.00g

lv1 vg1 -wi-a----- 1.00g

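Shrinking a VG

Note: shrinking a VG does not require unmounting the LVs that are in use. However, only a PV that holds no allocated extents can be removed; otherwise the following message appears:

[root@localhost ~]# vgreduce vg1 /dev/sdb1

Physical volume "/dev/sdb1" still in use

Before shrinking, check whether the device to be removed is in use. A PV whose PFree equals its PSize is unused:

[root@localhost ~]# pvs

PV VG Fmt Attr PSize PFree

/dev/sda2 rhel lvm2 a-- 12.00g 4.00m

/dev/sdb1 vg1 lvm2 a-- 1020.00m 0

/dev/sdb2 vg1 lvm2 a-- 1020.00m 1016.00m

/dev/sdb3 vg1 lvm2 a-- 1020.00m 1020.00m

[root@localhost ~]# vgreduce vg1 /dev/sdb3

Removed "/dev/sdb3" from volume group "vg1"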
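
The check above can be scripted. The following is a minimal sketch, assuming the default column layout of plain `pvs` (PV, VG, Fmt, Attr, PSize, PFree); `unused_pvs` is a hypothetical helper name, and the sample input is copied from the output shown above so the script runs without any LVM devices.

```shell
#!/usr/bin/env bash
# Sketch: list the PVs of one VG whose PFree equals PSize (nothing allocated).
# Input: the text printed by plain `pvs`, header line included.
unused_pvs() {
    local vg=$1
    # Fields: $1=PV $2=VG $3=Fmt $4=Attr $5=PSize $6=PFree; NR > 1 skips the header.
    awk -v vg="$vg" 'NR > 1 && $2 == vg && $5 == $6 { print $1 }'
}

# Sample taken from the pvs output above.
# On a live system (assumption): pvs | unused_pvs vg1
unused_pvs vg1 <<'EOF'
  PV         VG   Fmt  Attr PSize    PFree
  /dev/sda2  rhel lvm2 a--    12.00g    4.00m
  /dev/sdb1  vg1  lvm2 a--  1020.00m       0
  /dev/sdb2  vg1  lvm2 a--  1020.00m 1016.00m
  /dev/sdb3  vg1  lvm2 a--  1020.00m 1020.00m
EOF
```

Only the PVs printed by this filter are candidates for `vgreduce`; a partly used PV such as /dev/sdb2 would first need its extents moved elsewhere.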

Deleting LVM

The device must be unmounted before it is deleted; otherwise the following error occurs:

[root@localhost ~]# lvremove /dev/vg1/lv1

Logical volume vg1/lv1 contains a filesystem in use.

Delete the LV (after unmounting it)

[root@localhost ~]# lvremove /dev/vg1/lv1

Do you really want to remove active logical volume lv1? [y/n]: y

Logical volume "lv1" successfully removed

Delete the VG

[root@localhost ~]# vgremove vg1

Volume group "vg1" successfully removed

Delete the PV

[root@localhost ~]# pvremove /dev/sdb1

Labels on physical volume "/dev/sdb1" successfully wiped
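
The removal order matters: it is the reverse of creation. The sketch below only prints the commands rather than running them, so it is safe to try anywhere. `plan_lvm_teardown` is a hypothetical helper, and the mount point /lv1 is an assumption for illustration.

```shell
#!/usr/bin/env bash
# Dry-run sketch: print the teardown commands in the order they must run.
# plan_lvm_teardown MOUNTPOINT VG LV PV...
plan_lvm_teardown() {
    local mnt=$1 vg=$2 lv=$3
    shift 3                         # the remaining arguments are the PVs
    echo "umount $mnt"              # 1. release the filesystem
    echo "lvremove /dev/$vg/$lv"    # 2. remove the LV
    echo "vgremove $vg"             # 3. remove the now empty VG
    echo "pvremove $*"              # 4. wipe the PV labels
}

plan_lvm_teardown /lv1 vg1 lv1 /dev/sdb1 /dev/sdb2
```

Reviewing the printed plan before typing the real commands helps avoid running `vgremove` or `pvremove` while an LV is still mounted.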

LVM snapshots

First create an LV

[root@localhost ~]# vgcreate vg1 /dev/sdb{1,2}

[root@localhost ~]# lvcreate -n lv1 -L 1.5G vg1

Logical volume "lv1" created.

Format the LV and mount it

[root@localhost ~]# mkfs.xfs /dev/vg1/lv1

[root@localhost ~]# mount /dev/vg1/lv1 /lv1/

[root@localhost ~]# cp /etc/passwd /lv1/

[root@localhost ~]# ls /lv1/

passwd

Create a snapshot of lv1

[root@localhost ~]# lvcreate -s -n lv1_sp -L 300M vg1/lv1

Logical volume "lv1_sp" created.

[root@localhost ~]# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

root rhel -wi-ao---- 10.00g

swap rhel -wi-ao---- 2.00g

lv1 vg1 owi-aos--- 1.00g

lv1_sp vg1 swi-a-s--- 300.00m lv1 0.00

Note: the origin LV must be unmounted before the snapshot is mounted; otherwise the error below appears. The snapshot carries the same XFS UUID as its origin, and XFS refuses to mount two filesystems with the same UUID at the same time (mounting with -o nouuid is the usual alternative to unmounting the origin).

[root@localhost ~]# mount /dev/vg1/lv1_sp /opt/

mount: wrong fs type, bad option, bad superblock on /dev/mapper/vg1-lv1_sp,

missing codepage or helper program, or other error

In some cases useful info is found in syslog - try

[root@localhost ~]# umount /lv1

[root@localhost ~]# mount /dev/vg1/lv1_sp /opt/

[root@localhost ~]# ls /opt/

passwd
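
If the origin has to stay mounted, XFS offers the `nouuid` mount option, which skips the duplicate-UUID check that causes the error above. The sketch below only builds and prints the mount command; `snapshot_mount_cmd` is a hypothetical helper and the filesystem type is passed in by hand, so treat it as an illustration rather than a complete tool.

```shell
#!/usr/bin/env bash
# Dry-run sketch: print a mount command for a snapshot LV.
# snapshot_mount_cmd FSTYPE DEVICE MOUNTPOINT
snapshot_mount_cmd() {
    local fstype=$1 dev=$2 mnt=$3
    if [ "$fstype" = "xfs" ]; then
        # nouuid: let XFS mount a filesystem whose UUID is already mounted
        echo "mount -o nouuid $dev $mnt"
    else
        echo "mount $dev $mnt"
    fi
}

snapshot_mount_cmd xfs /dev/vg1/lv1_sp /opt
```

With `nouuid`, the snapshot can be inspected at /opt while /lv1 remains mounted.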

Using System Storage Manager

System Storage Manager (ssm) is new in RHEL7/CentOS7. Developed by Red Hat, it provides a single command-line interface for managing many kinds of storage devices. Three volume-management backends are currently available to ssm: LVM, btrfs, and crypt.

1) Install System Storage Manager

[root@localhost ~]# yum -y install system-storage-manager.noarch

If yum is not configured, the package can also be installed with rpm:

[root@localhost ~]# rpm -ivh /mnt/Packages/system-storage-manager-0.4-5.el7.noarch.rpm

View the help for the ssm command:

[root@localhost ~]# ssm --help

{check,resize,create,list,add,remove,snapshot,mount}

check Check consistency of the filesystem on the device.

resize Change or set the volume and filesystem size.

create Create a new volume with defined parameters.

list List information about all detected, devices, pools,

volumes and snapshots in the system.

add Add one or more devices into the pool.

remove Remove devices from the pool, volumes or pools.

snapshot Take a snapshot of the existing volume.

mount Mount a volume with file system to specified locaion.

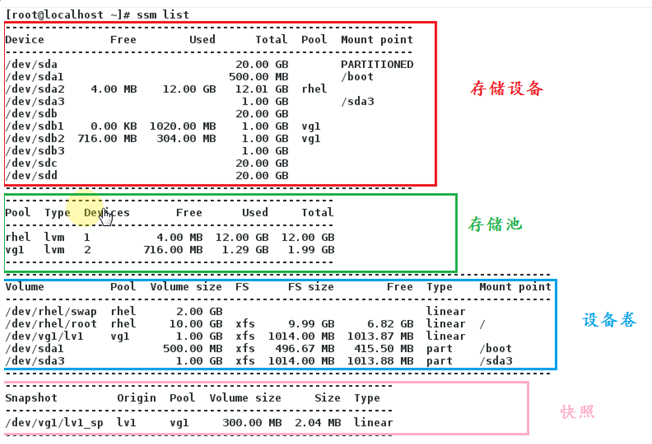

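The listing shows four physical devices (sda, sdb, sdc, sdd), two storage pools (rhel and vg1, that is, LVM volume groups), and the volumes ssm detected: /dev/rhel/swap, /dev/rhel/root and /dev/vg1/lv1 on those pools, plus the partitions /dev/sda1 and /dev/sda3.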

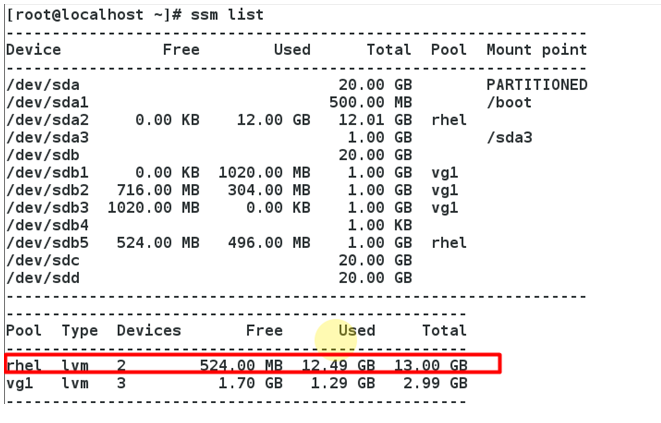

3) Add the device sdb5 to an LVM storage pool

Syntax: ssm add -p <pool> <device>

[root@localhost ~]# ssm add -p rhel /dev/sdb5

Physical volume "/dev/sdb5" successfully created

Volume group "rhel" successfully extended

Once the new device has been added, the storage pool grows automatically.

4) Grow an LVM volume online (an LV that already has a snapshot cannot be grown)

[root@localhost ~]# ssm resize -s+500M /dev/rhel/root

Size of logical volume rhel/root changed from 10.00 GiB (2560 extents) to 10.49 GiB (2685 extents).

Logical volume root successfully resized

meta-data=/dev/mapper/rhel-root isize=256 agcount=4, agsize=655360 blks

= sectsz=512 attr=2, projid32bit=1

= crc=0 finobt=0

data = bsize=4096 blocks=2621440, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=0

log =internal bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

data blocks changed from 2621440 to 2749440

After the resize, df confirms that there is no need to run xfs_growfs by hand: ssm grows the filesystem together with the volume.

[root@localhost ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/rhel-root 11G 3.3G 7.3G 31% /

devtmpfs 2.0G 0 2.0G 0% /dev

tmpfs 2.0G 140K 2.0G 1% /dev/shm

tmpfs 2.0G 8.9M 2.0G 1% /run

tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup
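
The figures in the resize output can be checked with integer arithmetic. This is a small sketch, assuming a 4 MiB extent size (which follows from 10.00 GiB being reported as 2560 extents) and the 4096-byte block size printed by xfs_growfs; the variable names are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: recompute the extent and block counts printed by the resize above.
grow_mib=500          # size added with -s+500M
extent_mib=4          # assumed extent size: 10.00 GiB / 2560 extents = 4 MiB
block_bytes=4096      # bsize reported by xfs_growfs

old_extents=2560
old_blocks=2621440

# 500 MiB / 4 MiB per extent = 125 additional extents
new_extents=$(( old_extents + grow_mib / extent_mib ))
# 500 MiB expressed in 4096-byte filesystem blocks = 128000 additional blocks
new_blocks=$(( old_blocks + grow_mib * 1024 * 1024 / block_bytes ))

echo "extents: $old_extents -> $new_extents"
echo "blocks:  $old_blocks -> $new_blocks"
```

The results, 2685 extents and 2749440 blocks, match the lines reported by lvm and xfs_growfs.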

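5) Create a storage pool named vg2, create a 1G LVM volume named lv2 on it, format the volume with xfs, and mount it on /lv2

Without ssm this takes four steps: create the VG, create the LV, format it, and mount it.

[root@localhost ~]# ssm create -s 1G -n lv2 --fstype xfs -p vg2 /dev/sdc /lv2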

[root@localhost ~]# df -h | grep lv2

/dev/mapper/vg2-lv2 1014M 33M 982M 4% /lv2
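
For comparison, the four manual steps that the single ssm create call replaces can be sketched as follows. The function only prints the commands; `plan_manual_create` is a hypothetical helper, and the exact commands ssm runs internally may differ from this plan.

```shell
#!/usr/bin/env bash
# Dry-run sketch: print the manual equivalent of
#   ssm create -s SIZE -n LV --fstype FSTYPE -p POOL DEVICE MOUNTPOINT
plan_manual_create() {
    local pool=$1 lv=$2 size=$3 fstype=$4 dev=$5 mnt=$6
    echo "vgcreate $pool $dev"                # 1. create the VG (the pool)
    echo "lvcreate -n $lv -L $size $pool"     # 2. create the LV
    echo "mkfs.$fstype /dev/$pool/$lv"        # 3. format it
    echo "mount /dev/$pool/$lv $mnt"          # 4. mount it
}

plan_manual_create vg2 lv2 1G xfs /dev/sdc /lv2
```

The arguments map one to one onto the ssm options used above: -p is the pool, -n the volume name, -s the size, and --fstype the filesystem.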

6) Create a snapshot

[root@localhost ~]# ssm snapshot -s 500M -n lv2_sp /dev/vg2/lv2

Logical volume "lv2_sp" created.

[root@localhost ~]# umount /lv2/

[root@localhost ~]# mkdir /lv2_snapshot

[root@localhost ~]# mount /dev/vg2/lv2_sp /lv2_snapshot/

Note: the original LVM volume must be unmounted before the snapshot is mounted.

7) Remove an LVM volume

[root@localhost ~]# ssm remove /dev/vg2/lv2_sp

Device '/dev/vg2/lv2_sp' is mounted on '/lv2_snapshot' Unmount (N/y/q) ? Y

Do you really want to remove active logical volume lv2_sp? [y/n]: y

Logical volume "lv2_sp" successfully removed

Note: when a mounted volume is removed, ssm offers to unmount it first.

Disk quotas

Disk quotas on RHEL7

Confirm that the quota command is installed

[root@localhost ~]# rpm -qf `which xfs_quota`

xfsprogs-3.2.1-6.el7.x86_64

First create a new partition

[root@localhost ~]# ls /dev/sdd*

/dev/sdd /dev/sdd1

Enable quotas

[root@localhost ~]# mkfs.xfs /dev/sdd1

[root@localhost ~]# mkdir /sdd1

[root@localhost ~]# mount -o uquota,gquota /dev/sdd1 /sdd1/

Check the result

[root@localhost ~]# mount | grep sdd1

/dev/sdd1 on /sdd1 type xfs (rw,relatime,attr2,inode64,usrquota,grpquota)

Enabling quotas automatically at boot

[root@localhost ~]# vim /etc/fstab

/dev/sdd1 /sdd1 xfs defaults,usrquota,grpquota 0 0
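
After a reboot it is worth confirming that both quota options are really active. The following is a minimal sketch that parses lines in /proc/mounts format (device, mount point, type, options); `quota_opts_active` is a hypothetical helper, and the sample line is modeled on the mount output shown above.

```shell
#!/usr/bin/env bash
# Sketch: succeed only if MOUNTPOINT is mounted with user AND group quota options.
# Reads lines in /proc/mounts format from stdin.
quota_opts_active() {
    local mnt=$1
    awk -v mnt="$mnt" '
        $2 == mnt {
            n = split($4, opt, ",")
            for (i = 1; i <= n; i++) {
                if (opt[i] == "usrquota" || opt[i] == "uquota") u = 1
                if (opt[i] == "grpquota" || opt[i] == "gquota") g = 1
            }
        }
        END { exit !(u && g) }   # exit status 0 only when both were seen
    '
}

# On a live system (assumption): quota_opts_active /sdd1 < /proc/mounts
sample='/dev/sdd1 /sdd1 xfs rw,relatime,attr2,inode64,usrquota,grpquota 0 0'
if echo "$sample" | quota_opts_active /sdd1; then
    echo "quota options active on /sdd1"
else
    echo "quota options missing on /sdd1"
fi
```

Note that the mount point is given without a trailing slash, matching how it appears in the mount table.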

Set the directory permissions and create a user for the quota

[root@localhost ~]# chmod 777 /sdd1/

[root@localhost ~]# useradd who

Check the quota status

[root@localhost ~]# xfs_quota -x -c 'report' /sdd1/

User quota on /sdd1 (/dev/sdd1)

Blocks

User ID Used Soft Hard Warn/Grace

------------------------------------------------------------

root 0 0 0 00 [--------]

Group quota on /sdd1 (/dev/sdd1)

Blocks

Group ID Used Soft Hard Warn/Grace

------------------------------------------------------------

root 0 0 0 00 [--------]

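Options:

-x expert mode; quotas can only be set in this mode

-c run the given command

report display quota information

limit set quota limits

Set a quota (here a block quota for the group who: soft limit 100M, hard limit 120M)

[root@localhost ~]# xfs_quota -x -c 'limit bsoft=100M bhard=120M -g who' /sdd1/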

Check again

[root@localhost ~]# xfs_quota -x -c 'report' /sdd1/

User quota on /sdd1 (/dev/sdd1)

Blocks

User ID Used Soft Hard Warn/Grace

------------------------------------------------------------

root 0 0 0 00 [--------]

Group quota on /sdd1 (/dev/sdd1)

Blocks

Group ID Used Soft Hard Warn/Grace

------------------------------------------------------------

root 0 0 0 00 [--------]

who 0 102400 122880 00 [--------]
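
The Soft and Hard columns are counted in 1 KiB blocks, which is why 100M and 120M appear as 102400 and 122880. A short sketch of the conversion, with `mib_to_kblocks` as a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Sketch: convert a size in MiB to the 1 KiB blocks shown by xfs_quota report.
mib_to_kblocks() {
    echo $(( $1 * 1024 ))
}

echo "bsoft=100M -> $(mib_to_kblocks 100) blocks"
echo "bhard=120M -> $(mib_to_kblocks 120) blocks"
```

The two printed values agree with the line for the group who in the report above.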

Verify:

[root@localhost ~]# su - who

[who@localhost ~]$ dd if=/dev/zero of=/sdd1/who.txt bs=1M count=100

100+0 records in

100+0 records out

104857600 bytes (105 MB) copied, 0.0714836 s, 1.5 GB/s

[who@localhost ~]$ ll -h /sdd1/who.txt

-rw-rw-r-- 1 who who 100M Dec 14 22:19 /sdd1/who.txt

Writing 130M then fails once the 120M hard limit is reached:

[who@localhost ~]$ dd if=/dev/zero of=/sdd1/who.txt bs=1M count=130

dd: error writing ‘/sdd1/who.txt’: Disk quota exceeded

121+0 records in

120+0 records out

125829120 bytes (126 MB) copied, 0.125158 s, 1.0 GB/s

[who@localhost ~]$ ll -h /sdd1/who.txt

-rw-rw-r-- 1 who who 120M Dec 14 22:20 /sdd1/who.txt

Disk quotas on RHEL6.5

Disk quotas limit how much disk space a user or group may use.

1. Check that the quota package is installed

[root@xuegod63 ~]# rpm -q quota

quota-3.17-16.el6.x86_64

2. Enable the quota feature

[root@xuegod63 ~]# mkfs.ext4 /dev/sdb3

[root@xuegod63 ~]# mkdir /tmp/sdb3

[root@xuegod63 ~]# mount /dev/sdb3 /tmp/sdb3/

[root@xuegod63 ~]# mount -o remount,usrquota,grpquota /tmp/sdb3/

[root@xuegod63 ~]# mount

/dev/sdb3 on /tmp/sdb3 type ext4 (rw,usrquota,grpquota)

[root@xuegod63 ~]# tail -n 1 /etc/fstab

/dev/sdb3 /tmp/sdb3 ext4 defaults,usrquota,grpquota 0 0

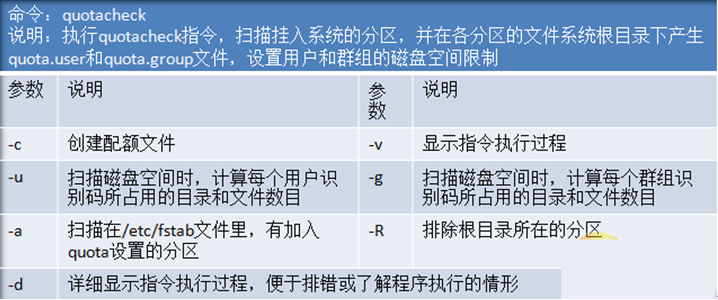

3. Scan the filesystem and generate the quota files

[root@xuegod63 ~]# quotacheck -cugv /tmp/sdb3/

[root@xuegod63 ~]# ll -a /tmp/sdb3/

total 40

drwxr-xr-x 3 root root 4096 Feb 2 22:18 .

drwxrwxrwt. 18 root root 4096 Feb 2 22:15 ..

-rw------- 1 root root 6144 Feb 2 22:18 aquota.group

-rw------- 1 root root 6144 Feb 2 22:18 aquota.user

setenforce 0 # SELinux must be turned off for this step (setenforce 0 switches it to permissive mode)

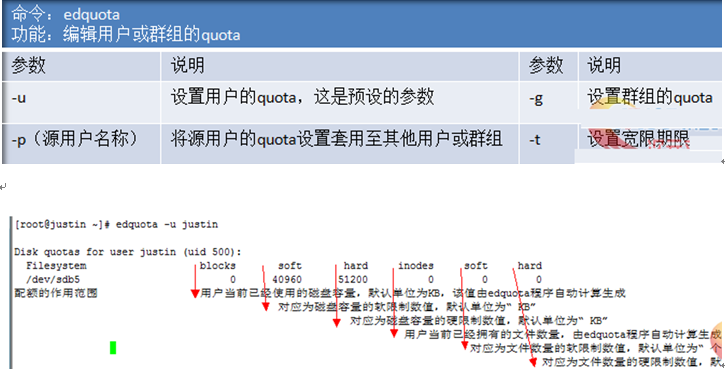

4. Set quotas for user and group accounts

[root@xuegod63 ~]# useradd mk

[root@xuegod63 ~]# edquota -g mk

Disk quotas for group mk (gid 500):

Filesystem blocks soft hard inodes soft hard

/dev/sdb3 0 50 80 0 0 0

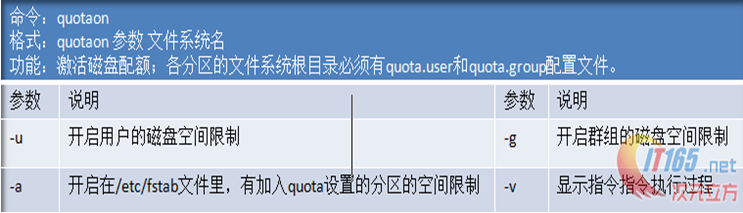

Activate disk quotas

[root@xuegod63 ~]# quotaon -ugv /tmp/sdb3/

/dev/sdb3 [/tmp/sdb3]: group quotas turned on

/dev/sdb3 [/tmp/sdb3]: user quotas turned on

Verify:

[root@xuegod63 ~]# mkdir /tmp/sdb3/test

[root@xuegod63 ~]# chmod 777 /tmp/sdb3/test/

[mk@xuegod63 test]$ dd if=/dev/zero of=mk.txt bs=1K count=70 # above the 50K soft limit: warning

sdb3: warning, group block quota exceeded.

70+0 records in

70+0 records out

71680 bytes (72 kB) copied, 0.00388255 s, 18.5 MB/s

[mk@xuegod63 test]$ ls

mk.txt

[mk@xuegod63 test]$ rm -rf mk.txt

[mk@xuegod63 test]$ dd if=/dev/zero of=mk.txt bs=1K count=90 # above the 80K hard limit: error

sdb3: write failed, group block limit reached.

dd: writing `mk.txt': Disk quota exceeded

[mk@xuegod63 test]$ ll -h

total 80K

-rw-rw-r-- 1 mk mk 80K Feb 2 22:30 mk.txt
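
The two outcomes above follow a simple rule: usage above the soft limit triggers a warning, and usage above the hard limit is refused. The sketch below models only that rule; `quota_verdict` is a hypothetical helper, and it deliberately ignores the grace period and the filesystem's own block rounding.

```shell
#!/usr/bin/env bash
# Sketch: classify a requested usage against soft and hard block limits.
# quota_verdict USED_KB SOFT_KB HARD_KB   (all values in 1K blocks)
quota_verdict() {
    local used=$1 soft=$2 hard=$3
    if [ "$used" -gt "$hard" ]; then
        echo "denied"      # the write stops at the hard limit
    elif [ "$used" -gt "$soft" ]; then
        echo "warning"     # allowed, but over the soft limit
    else
        echo "ok"
    fi
}

quota_verdict 70 50 80
quota_verdict 90 50 80
```

With the limits set by edquota (soft 50, hard 80), the 70K write is classified as a warning and the 90K write as denied, in line with the dd results.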

Explanation:

[root@xuegod63 ~]# tune2fs -l /dev/sdb3 | grep size

Filesystem features: has_journal ext_attr resize_inode dir_index filetype needs_recovery extent flex_bg sparse_super large_file huge_file uninit_bg dir_nlink extra_isize

Block size: 4096

Fragment size: 4096

Note: the blocks used in disk quotas are 1K each, regardless of the 4096-byte block size of the filesystem shown above.
