Sqoop Source Code Notes

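Sqoop comes in two versions, Sqoop 1 and Sqoop 2. At their core the two are much alike: both ultimately do their work by running MapReduce jobs. For command-line usage, see: http://my.oschina.net/zhangliMyOne/blog/97236

To save time, I went straight to the Sqoop 1 source. This makes it quick to extract the essence of the code and use it as a guide for later development.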

=============================================================

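The more important classes are:

SqoopTool --- the tool class

SqoopOptions --- the options for a run

Sqoop --- the integrating class, and the main focus

=== The key class is still Sqoop, together with the concrete implementation classes, of course.

First, look at the ToolRunner source: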

    package org.apache.hadoop.util;

    import java.io.PrintStream;

    import org.apache.hadoop.conf.Configuration;

    /**
     * A utility to help run {@link Tool}s.
     *
     * <p><code>ToolRunner</code> can be used to run classes implementing
     * <code>Tool</code> interface. It works in conjunction with
     * {@link GenericOptionsParser} to parse the
     * <a href="{@docRoot}/org/apache/hadoop/util/GenericOptionsParser.html#GenericOptions">
     * generic hadoop command line arguments</a> and modifies the
     * <code>Configuration</code> of the <code>Tool</code>. The
     * application-specific options are passed along without being modified.
     * </p>
     *
     * @see Tool
     * @see GenericOptionsParser
     */
    public class ToolRunner {

      /**
       * Runs the given <code>Tool</code> by {@link Tool#run(String[])}, after
       * parsing with the given generic arguments. Uses the given
       * <code>Configuration</code>, or builds one if null.
       *
       * Sets the <code>Tool</code>'s configuration with the possibly modified
       * version of the <code>conf</code>.
       *
       * @param conf <code>Configuration</code> for the <code>Tool</code>.
       * @param tool <code>Tool</code> to run.
       * @param args command-line arguments to the tool.
       * @return exit code of the {@link Tool#run(String[])} method.
       */
      public static int run(Configuration conf, Tool tool, String[] args)
          throws Exception {
        if (conf == null) {
          conf = new Configuration();
        }
        GenericOptionsParser parser = new GenericOptionsParser(conf, args);
        // set the configuration back, so that Tool can configure itself
        tool.setConf(conf);

        // get the args w/o generic hadoop args
        String[] toolArgs = parser.getRemainingArgs();
        return tool.run(toolArgs);
      }

      /**
       * Runs the <code>Tool</code> with its <code>Configuration</code>.
       *
       * Equivalent to <code>run(tool.getConf(), tool, args)</code>.
       *
       * @param tool <code>Tool</code> to run.
       * @param args command-line arguments to the tool.
       * @return exit code of the {@link Tool#run(String[])} method.
       */
      public static int run(Tool tool, String[] args)
          throws Exception {
        return run(tool.getConf(), tool, args);
      }

      /**
       * Prints generic command-line arguments and usage information.
       *
       * @param out stream to write usage information to.
       */
      public static void printGenericCommandUsage(PrintStream out) {
        GenericOptionsParser.printGenericCommandUsage(out);
      }

    }
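The delegation in ToolRunner.run can be tried out without Hadoop on the classpath. The following is only a minimal sketch of the same pattern: `MiniTool`, `MiniToolRunner` and `EchoTool` are hypothetical stand-ins (not Hadoop classes), a plain `Map` replaces `Configuration`, and the only generic option it understands is `-D key=value`, whereas the real GenericOptionsParser handles more.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical stand-in for Hadoop's Tool interface. */
interface MiniTool {
    void setConf(Map<String, String> conf);
    Map<String, String> getConf();
    int run(String[] args);
}

/** Hypothetical stand-in for ToolRunner: consume generic options, then delegate. */
final class MiniToolRunner {
    private MiniToolRunner() { }

    static int run(Map<String, String> conf, MiniTool tool, String[] args) {
        if (conf == null) {
            conf = new HashMap<>();           // build a configuration if none was given
        }
        List<String> remaining = new ArrayList<>();
        for (int i = 0; i < args.length; i++) {
            if ("-D".equals(args[i]) && i + 1 < args.length) {
                // Generic option: store it in the configuration, do not pass it on.
                String[] kv = args[++i].split("=", 2);
                conf.put(kv[0], kv.length > 1 ? kv[1] : "");
            } else {
                remaining.add(args[i]);       // tool-specific argument, passed unmodified
            }
        }
        tool.setConf(conf);                   // hand the (possibly modified) conf back
        return tool.run(remaining.toArray(new String[0]));
    }
}

/** Example tool that only remembers which arguments reached it. */
final class EchoTool implements MiniTool {
    private Map<String, String> conf;
    String[] seenArgs = new String[0];

    @Override public void setConf(Map<String, String> conf) { this.conf = conf; }
    @Override public Map<String, String> getConf() { return conf; }
    @Override public int run(String[] args) { seenArgs = args; return 0; }
}
```

Calling `MiniToolRunner.run(null, tool, new String[] {"-D", "mapred.job.name=demo", "import", "--table", "t"})` leaves the `-D` pair in the tool's configuration and passes only `import --table t` to `tool.run`, which is the split between generic and application-specific arguments that the Javadoc above describes.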

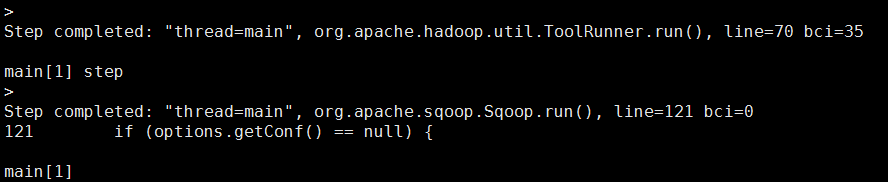

So the details come down to the run method of the implementing class, which here is Sqoop:

==============================================================================

    /**
     * Actual main entry-point for the program.
     * (Note: this is the concrete entry point.)
     */
    @Override
    public int run(String [] args) {
      if (options.getConf() == null) {
        // Configuration wasn't initialized until after the ToolRunner
        // got us to this point. ToolRunner gave Sqoop itself a Conf
        // though.
        options.setConf(getConf());
      }

      try {
        options = tool.parseArguments(args, null, options, false);
        tool.appendArgs(this.childPrgmArgs);
        tool.validateOptions(options);
      } catch (Exception e) {
        // Couldn't parse arguments.
        // Log the stack trace for this exception
        LOG.debug(e.getMessage(), e);
        // Print exception message.
        System.err.println(e.getMessage());
        // Print the tool usage message and exit.
        ToolOptions toolOpts = new ToolOptions();
        tool.configureOptions(toolOpts);
        tool.printHelp(toolOpts);
        return 1; // Exit on exception here.
      }

      return tool.run(options);
    }

So in the end, execution is delegated to tool.run.
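The control flow of this run method (parse and validate, print usage and return 1 on failure, otherwise delegate) can be sketched on its own. Everything below is hypothetical and only mirrors the shape of the code above: `DemoOptions`, `DemoTool`, `DemoImportTool` and `DemoDriver` are illustrative names, not Sqoop classes, and the `--key value` argument format is an assumption made for the example.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical stand-in for SqoopOptions: a bag of parsed settings. */
final class DemoOptions {
    final Map<String, String> values = new HashMap<>();
}

/** Hypothetical stand-in for SqoopTool. */
abstract class DemoTool {
    /** Parses "--key value" pairs into the options; rejects anything else. */
    DemoOptions parseArguments(String[] args, DemoOptions options) {
        for (int i = 0; i < args.length; i += 2) {
            if (!args[i].startsWith("--") || i + 1 >= args.length) {
                throw new IllegalArgumentException("Bad argument: " + args[i]);
            }
            options.values.put(args[i].substring(2), args[i + 1]);
        }
        return options;
    }

    abstract void validateOptions(DemoOptions options);
    abstract void printHelp();
    abstract int run(DemoOptions options);
}

/** Example tool that requires a --table option. */
final class DemoImportTool extends DemoTool {
    @Override void validateOptions(DemoOptions options) {
        if (!options.values.containsKey("table")) {
            throw new IllegalArgumentException("--table is required");
        }
    }
    @Override void printHelp() {
        System.err.println("usage: import --table <name>");
    }
    @Override int run(DemoOptions options) {
        System.out.println("Importing " + options.values.get("table"));
        return 0;
    }
}

/** Hypothetical stand-in for the Sqoop class: parse, validate, then delegate. */
final class DemoDriver {
    private final DemoTool tool;
    private DemoOptions options = new DemoOptions();

    DemoDriver(DemoTool tool) { this.tool = tool; }

    int run(String[] args) {
        try {
            options = tool.parseArguments(args, options);
            tool.validateOptions(options);
        } catch (RuntimeException e) {
            System.err.println(e.getMessage()); // print the error message
            tool.printHelp();                   // then the usage text
            return 1;                           // non-zero exit code on bad arguments
        }
        return tool.run(options);               // the real work happens in the tool
    }
}
```

The driver itself knows nothing about imports or exports. It only turns arguments into an options object and hands that to whichever tool was selected, which is why the interesting code lives in the concrete tool and job classes.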

------------------------------------------------ The core code is in org.apache.sqoop.mapreduce.ImportJobBase:

    /**
     * Run an import job to read a table in to HDFS.
     *
     * @param tableName the database table to read; may be null if a free-form
     * query is specified in the SqoopOptions, and the ImportJobBase subclass
     * supports free-form queries.
     * @param ormJarFile the Jar file to insert into the dcache classpath.
     * (may be null)
     * @param splitByCol the column of the database table to use to split
     * the import
     * @param conf A fresh Hadoop Configuration to use to build an MR job.
     * @throws IOException if the job encountered an IO problem
     * @throws ImportException if the job failed unexpectedly or was
     * misconfigured.
     */
    public void runImport(String tableName, String ormJarFile, String splitByCol,
        Configuration conf) throws IOException, ImportException {

      if (null != tableName) {
        LOG.info("Beginning import of " + tableName);
      } else {
        LOG.info("Beginning query import.");
      }

      String tableClassName =
          new TableClassName(options).getClassForTable(tableName);
      loadJars(conf, ormJarFile, tableClassName);

      try {
        Job job = new Job(conf);

        // Set the external jar to use for the job.
        job.getConfiguration().set("mapred.jar", ormJarFile);

        configureInputFormat(job, tableName, tableClassName, splitByCol);
        configureOutputFormat(job, tableName, tableClassName);
        configureMapper(job, tableName, tableClassName);
        configureNumTasks(job);
        cacheJars(job, getContext().getConnManager());

        jobSetup(job);
        setJob(job);
        boolean success = runJob(job); // submit the job and run it
        if (!success) {
          throw new ImportException("Import job failed!");
        }
      } catch (InterruptedException ie) {
        throw new IOException(ie);
      } catch (ClassNotFoundException cnfe) {
        throw new IOException(cnfe);
      } finally {
        unloadJars();
      }
    }
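Structurally, runImport is a template method: a fixed sequence of configure steps that subclasses fill in, followed by job submission, with cleanup guaranteed by the finally block. The sketch below reproduces only that skeleton. `DemoImportJob` and `DemoTableImportJob` are hypothetical classes, the steps merely record their names instead of configuring a real Hadoop Job, and an unchecked `IllegalStateException` stands in for Sqoop's ImportException.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical skeleton mirroring the order of steps in runImport. */
abstract class DemoImportJob {
    /** Records each step so that the call order can be inspected. */
    final List<String> steps = new ArrayList<>();

    /** Template method: fixed sequence, cleanup guaranteed by finally. */
    final void runImport(String tableName) {
        steps.add("loadJars");
        try {
            configureInputFormat(tableName);
            configureOutputFormat(tableName);
            configureMapper(tableName);
            if (!runJob()) {
                // Assumption: unchecked exception used in place of ImportException.
                throw new IllegalStateException("Import job failed!");
            }
        } finally {
            steps.add("unloadJars"); // runs on success and on failure alike
        }
    }

    // Hooks that a concrete job type overrides.
    abstract void configureInputFormat(String tableName);
    abstract void configureOutputFormat(String tableName);
    abstract void configureMapper(String tableName);
    abstract boolean runJob();
}

/** Example subclass whose job outcome is fixed at construction time. */
final class DemoTableImportJob extends DemoImportJob {
    private final boolean jobSucceeds;

    DemoTableImportJob(boolean jobSucceeds) { this.jobSucceeds = jobSucceeds; }

    @Override void configureInputFormat(String tableName) { steps.add("configureInputFormat"); }
    @Override void configureOutputFormat(String tableName) { steps.add("configureOutputFormat"); }
    @Override void configureMapper(String tableName) { steps.add("configureMapper"); }
    @Override boolean runJob() { steps.add("runJob"); return jobSucceeds; }
}
```

Because the sequence is fixed in the base class, a different import variant only has to override the configure hooks. This is presumably why ImportJobBase has subclasses, as its own Javadoc mentions.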

========= Before the job runs, some of its important properties were extracted, as follows:

Later analysis can focus on these classes.