Mahout协同过滤算法源码分析(3)--parallelALS

Mahout版本:0.7,hadoop版本:1.0.4,jdk:1.7.0_25 64bit。

接上篇,此篇分析parallelALS的initializeM函数和for循环(for循环里面含有一个QR分解,此篇只分析到这里为止)。parallelALS的源码对应为:org.apache.mahout.cf.taste.hadoop.als.ParallelALSFactorizationJob,首先来总结一下,前篇blog已有的内容:

PathToUserRatings: <key,vlaue> --> <userID,[ItemID:rating,ItemID:rating,...];

PathToItemRatings: <key,vlaue> --> <itemID,[userID:rating,userID:rating,...];

PathToAverageRatings: <key,value> --> <0,[itemID:averageRating,itemID:averageRating,...]>

打开initializeM函数可以看到下面的源码:

private void initializeM(Vector averageRatings) throws IOException {

Random random = RandomUtils.getRandom();

FileSystem fs = FileSystem.get(pathToM(-1).toUri(), getConf());

SequenceFile.Writer writer = null;

try {

writer = new SequenceFile.Writer(fs, getConf(), new Path(pathToM(-1), "part-m-00000"), IntWritable.class,

VectorWritable.class);

Iterator<Vector.Element> averages = averageRatings.iterateNonZero();

while (averages.hasNext()) {

Vector.Element e = averages.next();

Vector row = new DenseVector(numFeatures);

row.setQuick(0, e.get());

for (int m = 1; m < numFeatures; m++) {

row.setQuick(m, random.nextDouble());

}

writer.append(new IntWritable(e.index()), new VectorWritable(row));

}

} finally {

Closeables.closeQuietly(writer);

}这里的pathToM(-1) 就对应于temp中的M--1文件夹(这个文件夹在前篇blog中提到过)。在initializeM之前先有一个对averageRatings变量初始化的代码:

Vector averageRatings = ALSUtils.readFirstRow(getTempPath("averageRatings"), getConf());这句代码就是把

PathToAverageRatings中的value读出来而已,所以averageRatings变量就是含有所有item的平均分向量。

然后initializeM做的就是把所有item的平均分扩充,这里扩成的意思是:假如原始为 item:averateRating ,那么扩充后就变为 item:[averateRating,randomRating,randomRating,...],这里扩充的randomRating的个数和我们设置的参数numFeature相关(=numFeature-1),randomRating是随机数(0到1之间的随机小数)。所以initializeM后会在M--1/part-m-00000文件中生成这样格式的输出:

<key,value> --> <itemID,[averateRating,randomRating,randomRating,...]>。

下面分析for循环里面的第一个job,第一个job是由下面的代码调用的:

runSolver(pathToUserRatings(), pathToU(currentIteration), pathToM(currentIteration - 1));runSolver函数的代码如下:

private void runSolver(Path ratings, Path output, Path pathToUorI)

throws ClassNotFoundException, IOException, InterruptedException {

Class<? extends Mapper> solverMapper = implicitFeedback ?

SolveImplicitFeedbackMapper.class : SolveExplicitFeedbackMapper.class;

Job solverForUorI = prepareJob(ratings, output, SequenceFileInputFormat.class, solverMapper, IntWritable.class,

VectorWritable.class, SequenceFileOutputFormat.class);

Configuration solverConf = solverForUorI.getConfiguration();

solverConf.set(LAMBDA, String.valueOf(lambda));

solverConf.set(ALPHA, String.valueOf(alpha));

solverConf.setInt(NUM_FEATURES, numFeatures);

solverConf.set(FEATURE_MATRIX, pathToUorI.toString());

boolean succeeded = solverForUorI.waitForCompletion(true);

if (!succeeded)

throw new IllegalStateException("Job failed!");

}由于前面的参数设置中没有implicitFeedback参数,所以这里的mapper选择为SolveExplicitFeedbackMapper。这里看到输入ratings就是前面的pathToUserRatings,out是pathToU(第一次循环是U-0文件夹),然后pathToUorI是M--1。这里的job没有reducer,所以可以主要分析mapper即可。下面是mapper的仿制代码:

package mahout.fansy.als;

import java.io.IOException;

import java.util.Iterator;

import java.util.List;

import java.util.Map;

import java.util.Map.Entry;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Writable;

import org.apache.mahout.math.SequentialAccessSparseVector;

import org.apache.mahout.math.Vector;

import org.apache.mahout.math.VectorWritable;

import org.apache.mahout.math.als.AlternatingLeastSquaresSolver;

import org.apache.mahout.math.map.OpenIntObjectHashMap;

import com.google.common.collect.Lists;

import mahout.fansy.utils.read.ReadArbiKV;

public class SolveExplicitFeedbackMapperFollow {

/**

* 第一次调用SloveExplicitFeedBackMapper的仿制代码

* @param args

*/

private static double lambda=0.065;

private static int numFeatures=20;

private static OpenIntObjectHashMap<Vector> UorM;

private static AlternatingLeastSquaresSolver solver;

public static void main(String[] args) throws IOException {

setup();

map();

}

/**

* 获得map输入文件;

* @return

* @throws IOException

*/

public static Map<Writable,Writable> getMapData() throws IOException{

String fPath="hdfs://ubuntu:9000/user/mahout/output/als/als/userRatings/part-r-00000";

Map<Writable,Writable> mapData=ReadArbiKV.readFromFile(fPath);

return mapData;

}

/**

* 仿造setup函数

*/

public static void setup(){

solver = new AlternatingLeastSquaresSolver();

UorM = ALSUtilsFollow.readMatrixByRows(

new Path("hdfs://ubuntu:9000/user/mahout/temp/als/M--1/part-m-00000"), getConf());

}

public static void map() throws IOException{

Map<Writable,Writable> map=getMapData();

for(Iterator<Entry<Writable, Writable>> iter=map.entrySet().iterator();iter.hasNext();){

Entry<Writable,Writable> entry=(Entry<Writable, Writable>) iter.next();

IntWritable userOrItemID=(IntWritable) entry.getKey();

VectorWritable ratingsWritable=(VectorWritable) entry.getValue();

// source code

Vector ratings = new SequentialAccessSparseVector(ratingsWritable.get());

List<Vector> featureVectors = Lists.newArrayList();

Iterator<Vector.Element> interactions = ratings.iterateNonZero();

while (interactions.hasNext()) {

int index = interactions.next().index();

featureVectors.add(UorM.get(index));

}

Vector uiOrmj = solver.solve(featureVectors, ratings, lambda, numFeatures);

System.out.println(userOrItemID+","+ new VectorWritable(uiOrmj));

}

}

/**

* 获得configuration

* @return

*/

private static Configuration getConf() {

Configuration conf=new Configuration();

conf.set("mapred.job.tracker", "ubuntu:9000");

return conf;

}

}

首先在setup函数中初始化了两个变量,其中的UorM就是把M--1中的数据读出来了而已,另外的一个变量solver则是进行复杂的运算。debug模式,查看UorM:

这里方框中可以看到有3692个item,和前面分析一致,同时每个都含有20个值,从0到19,这个把值往后面拉就可以看到了。

在map函数中,有两个变量值得注意,其一是ratings,这个变量就是pathToUserRatings的value,即[ItemID:rating,ItemID,rating,...]这里的ratings都是真实的用户对于item的评价。其二是featureVectors,这个是通过下面的方式转换得到的:

首先由userID所有评价过的item找出在UorM中存在的项,然后把这些项全部赋值给featureVector,即可得到userID的featureVector了。

然后,然后,然后重点来了:Vector uiOrmj = solver.solve(featureVectors, ratings, lambda, numFeatures);不管三七二十几,先打开solve函数来看看:

public Vector solve(Iterable<Vector> featureVectors, Vector ratingVector, double lambda, int numFeatures) {

Preconditions.checkNotNull(featureVectors, "Feature vectors cannot be null");

Preconditions.checkArgument(!Iterables.isEmpty(featureVectors));

Preconditions.checkNotNull(ratingVector, "rating vector cannot be null");

Preconditions.checkArgument(ratingVector.getNumNondefaultElements() > 0, "Rating vector cannot be empty");

Preconditions.checkArgument(Iterables.size(featureVectors) == ratingVector.getNumNondefaultElements());

int nui = ratingVector.getNumNondefaultElements();

Matrix MiIi = createMiIi(featureVectors, numFeatures);

Matrix RiIiMaybeTransposed = createRiIiMaybeTransposed(ratingVector);

/* compute Ai = MiIi * t(MiIi) + lambda * nui * E */

Matrix Ai = addLambdaTimesNuiTimesE(MiIi.times(MiIi.transpose()), lambda, nui);

/* compute Vi = MiIi * t(R(i,Ii)) */

Matrix Vi = MiIi.times(RiIiMaybeTransposed);

/* compute Ai * ui = Vi */

return solve(Ai, Vi);

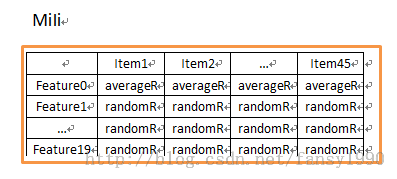

}前面检查的代码就不管了,然后第一个nui变量,这个就是去的ratingVector的不为零的个数,按照此系列的第一篇实战,这里的值应该是45,numFeature应该是20。然后到了MiIi变量,这个变量是把featureVectors进行转置,变为下面的形式:

其中第一行全部是相应item的平均评价值,下面的行则是随机数评价值。然后到了Matrix RiIiMaybeTransposed = createRiIiMaybeTransposed(ratingVector);这里的RiIiMaybeTransposed 变量是把ratings进行转置:

接下来到了Matrix Ai = addLambdaTimesNuiTimesE(MiIi.times(MiIi.transpose()), lambda, nui);即Ai的值其实是等于MiIi的转置乘以MiIi,这里的乘法是矩阵的乘法。比如行矩阵[a1,a2,a3]乘以列矩阵[b1;b2;b3],那么即是1*3的行矩阵乘以3*1的列矩阵,得到1*1的矩阵:a1*b1+a2*b2+a3*b3。通俗来说应该是M*R的矩阵乘以R*N的矩阵,然后得到M*N的矩阵。Mili.transpose()函数就是求Mili的转置,times是求两个矩阵相乘。addLambdaTimesNuiTimesE方法后面的两个参数的使用是在MiIi矩阵转置相乘后把对角线(row=col)的值更新为原始值+lambda*nui即可。

那么Vi就相对来说比较简单了:Matrix Vi = MiIi.times(RiIiMaybeTransposed); 即是Mili和RiIiMaybeTransposed的矩阵乘积了。

最后到了solve函数,这个函数返回为:

Vector solve(Matrix Ai, Matrix Vi) {

return new QRDecomposition(Ai).solve(Vi).viewColumn(0);

}额,好吧,居然是QR分解,我晕,本来基本的数学还ok,这样居然用到了QR分解,我去,看了半个钟头,感觉好复杂了。。。

分享,成长,快乐

转载请注明blog地址:http://blog.csdn.net/fansy1990