Cointegration Testing in R

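Cointegration tests check whether non-stationary series share a long-run equilibrium relationship, and they are an important tool for dealing with the spurious regression problem. We first revisit a spurious regression example: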

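Spurious regression. A spurious regression shows excellent goodness of fit and significance levels, yet its residual series is non-stationary. The following code fits one: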

```r
# Load the required packages
library(lmtest)   # dwtest()
library(tseries)  # adf.test()

# Simulate two independent random walks with drift
set.seed(123456)
e1 = rnorm(500)
e2 = rnorm(500)
trd = 1:500
y1 = 0.8 * trd + cumsum(e1)
y2 = 0.6 * trd + cumsum(e2)

# Regress y1 on y2 (a spurious regression)
sr.reg = lm(y1 ~ y2)

# Extract the regression residuals
error = residuals(sr.reg)

# Plot the residuals
plot(error, main = "Plot of error")

# Unit-root (ADF) test on the residuals: p = 0.35, so the unit root is not rejected
adf.test(error)
## Dickey-Fuller = -2.548, Lag order = 7, p-value = 0.3463
## alternative hypothesis: stationary

# Spurious regression output: all coefficients are highly significant
summary(sr.reg)
## Residuals:
##     Min      1Q  Median      3Q     Max
## -30.654 -11.526   0.359  11.142  31.006
## Coefficients:
##              Estimate Std. Error t value Pr(>|t|)
## (Intercept) -29.32697    1.36716   -21.4   <2e-16 ***
## y2            1.44079    0.00752   191.6   <2e-16 ***
## Residual standard error: 13.7 on 498 degrees of freedom
## Multiple R-squared: 0.987, Adjusted R-squared: 0.987
## F-statistic: 3.67e+04 on 1 and 498 DF, p-value: <2e-16

# Durbin-Watson test: DW close to 0 signals strongly autocorrelated residuals
dwtest(sr.reg)
## DW = 0.0172, p-value < 2.2e-16
```
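The output above shows the classic symptom: R² = 0.987 against DW = 0.0172. A widely used rule of thumb attributed to Granger and Newbold treats R² > DW as a warning sign of a spurious regression. The sketch below applies that check in base R. It computes the Durbin-Watson statistic by hand as sum(diff(e)^2) / sum(e^2), the statistic `dwtest()` reports, so no package is needed. The names `r2`, `dw` and `spurious.flag` are illustrative. This is a heuristic, not a formal test.

```r
# Rebuild the spurious regression from the example above
set.seed(123456)
e1 = rnorm(500)
e2 = rnorm(500)
trd = 1:500
y1 = 0.8 * trd + cumsum(e1)
y2 = 0.6 * trd + cumsum(e2)
sr.reg = lm(y1 ~ y2)

# Durbin-Watson statistic computed directly from the residuals
res = residuals(sr.reg)
dw = sum(diff(res)^2) / sum(res^2)

# R-squared of the levels regression
r2 = summary(sr.reg)$r.squared

# Rule of thumb: R^2 > DW suggests the regression may be spurious
spurious.flag = r2 > dw
cat(sprintf("R^2 = %.3f, DW = %.4f, likely spurious: %s\n", r2, dw, spurious.flag))
```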

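Engle-Granger test. Step 1: estimate the regression between the two variables (here y2 on y1) by ordinary least squares. Step 2: run a unit-root test on the residuals of that regression. The null hypothesis is that the two variables are not cointegrated (the residuals have a unit root). The alternative is that they are cointegrated (the residuals are stationary).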

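```r
# Simulate a cointegrated pair: y1 is a random walk, y2 = 0.6 * y1 + noise
set.seed(123456)
e1 = rnorm(100)
e2 = rnorm(100)
y1 = cumsum(e1)
y2 = 0.6 * y1 + e2

# Step 1: levels regression (spurious unless the residuals are stationary)
lr.reg = lm(y2 ~ y1)
error = residuals(lr.reg)

# Step 2: unit-root test on the residuals; p = 0.013 rejects the unit root at 5%
adf.test(error)
## Dickey-Fuller = -3.988, Lag order = 4, p-value = 0.01262
## alternative hypothesis: stationary
```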
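One caveat applies here. `adf.test()` judges its statistic against Dickey-Fuller critical values, which are meant for an observed series. The residuals here come from an estimated regression, so the appropriate Engle-Granger (MacKinnon) critical values are more negative. Asymptotically, the 5% value is about -3.34 for two variables with an intercept, so the p-value above is only approximate.

Below is a minimal base-R sketch of the residual-based test. It assumes one lagged difference in the test regression. It also hard-codes that approximate critical value as `eg.crit.5`, a hypothetical name.

```r
set.seed(123456)
e1 = rnorm(100)
e2 = rnorm(100)
y1 = cumsum(e1)
y2 = 0.6 * y1 + e2

# Step 1: cointegrating regression (with intercept)
u = residuals(lm(y2 ~ y1))

# Step 2: Dickey-Fuller regression on the residuals, no deterministic terms:
#   diff(u)_t = rho * u_{t-1} + gamma * diff(u)_{t-1} + error
du = diff(u)                     # du[i] = u[i + 1] - u[i], length 99
n = length(du)
df.dat = data.frame(
  du     = du[2:n],              # diff(u) at t = 3..100
  u.lag  = u[2:n],               # u_{t-1}
  du.lag = du[1:(n - 1)]         # diff(u)_{t-1}
)
df.reg = lm(du ~ 0 + u.lag + du.lag, data = df.dat)
eg.stat = coef(summary(df.reg))["u.lag", "t value"]

# Approximate asymptotic 5% Engle-Granger critical value (two variables, intercept)
eg.crit.5 = -3.34
cat(sprintf("EG statistic = %.3f; reject 'no cointegration' at 5%%: %s\n",
            eg.stat, eg.stat < eg.crit.5))
```

The tseries package also provides `po.test()`, the Phillips-Ouliaris residual-based cointegration test, as a packaged alternative.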

```r
# Lagged residual for the error correction model.
# Note: error[-c(99, 100)] keeps error[1:98]; given the embed() alignment below,
# that is the residual lagged two periods. A one-period lag would be
# error[-c(1, 100)], i.e. error[2:99].
error.lagged = error[-c(99, 100)]

# Build the error correction model (ECM)
dy1 = diff(y1)
dy2 = diff(y2)
# embed() pairs the current differences dy1, dy2 with their first lags
diff.dat = data.frame(embed(cbind(dy1, dy2), 2))
colnames(diff.dat) = c("dy1", "dy2", "dy1.1", "dy2.1")
ecm.reg = lm(dy2 ~ error.lagged + dy1.1 + dy2.1, data = diff.dat)
summary(ecm.reg)
## Residuals:
##    Min     1Q Median     3Q    Max
## -2.959 -0.544  0.137  0.711  2.307
## Coefficients:
##              Estimate Std. Error t value Pr(>|t|)
## (Intercept)    0.0034     0.1036    0.03     0.97
## error.lagged  -0.9688     0.1585   -6.11  2.2e-08 ***
## dy1.1          0.8086     0.1120    7.22  1.4e-10 ***
## dy2.1         -1.0589     0.1084   -9.77  5.6e-16 ***
## Residual standard error: 1.03 on 94 degrees of freedom
## Multiple R-squared: 0.546, Adjusted R-squared: 0.532
## F-statistic: 37.7 on 3 and 94 DF, p-value: 4.24e-16
```

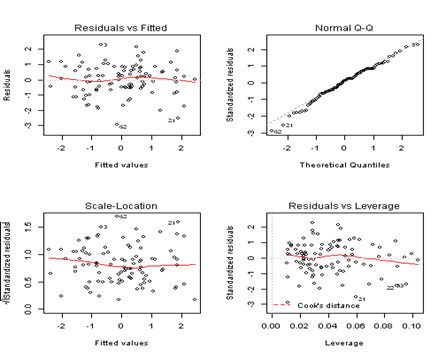

```r
# 2 x 2 panel of regression diagnostic plots for the ECM
par(mfrow = c(2, 2))
plot(ecm.reg)
```
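Below is a sketch of the same ECM with the residual lagged one period, using `error[-c(1, 100)]` in place of `error[-c(99, 100)]`. It rebuilds the Engle-Granger data so it runs on its own. It also stores the lagged residual inside `diff.dat` so the alignment is explicit. `ecm.aligned` is a hypothetical name, and its estimates will generally differ from the summary above (no output is reproduced here).

```r
# Rebuild the Engle-Granger example data
set.seed(123456)
e1 = rnorm(100)
e2 = rnorm(100)
y1 = cumsum(e1)
y2 = 0.6 * y1 + e2
error = residuals(lm(y2 ~ y1))

# First differences: dy[i] = y[i + 1] - y[i], i = 1..99
dy1 = diff(y1)
dy2 = diff(y2)

# embed() rows pair dy[i] with dy[i - 1] for i = 2..99 (98 rows)
diff.dat = data.frame(embed(cbind(dy1, dy2), 2))
colnames(diff.dat) = c("dy1", "dy2", "dy1.1", "dy2.1")

# dy2[i] = y2[i + 1] - y2[i], so the one-period-lagged residual is error[i], i = 2..99
diff.dat$error.lagged = error[-c(1, 100)]

ecm.aligned = lm(dy2 ~ error.lagged + dy1.1 + dy2.1, data = diff.dat)
summary(ecm.aligned)
```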

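Johansen-Juselius (JJ) cointegration test. This method tests for cointegration within a vector autoregression (VAR). It is suited to several series that are each integrated of order one, I(1). There are two JJ tests: the trace test and the maximum eigenvalue test. Both are available through the ca.jo function in the urca package, whose syntax is: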

```r
ca.jo(x, type = c("eigen", "trace"), ecdet = c("none", "const", "trend"), K = 2,
      spec = c("longrun", "transitory"), season = NULL, dumvar = NULL)
```

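The arguments are as follows:
- x is the data matrix or data frame.
- type selects the test: "eigen" for the maximum eigenvalue test, "trace" for the trace test.
- ecdet sets the deterministic term in the cointegration relation: "none" for no deterministic term, "const" for a constant, and "trend" for a linear trend.
- K is the lag order of the VAR in levels.
- spec selects the form of the vector error correction model (VECM), "longrun" or "transitory", reflecting the long-run or short-run relationships among the series.
- season adds seasonal dummies (set it to the data frequency, e.g. 4 for quarterly data).
- dumvar supplies additional dummy variables.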
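To see what ca.jo computes, here is a base-R sketch of the two JJ statistics. The helper `johansen.stats()` is hypothetical and not part of urca. It works in three steps:
1. It concentrates the short-run terms out of the VECM by least squares.
2. It solves the resulting eigenvalue problem.
3. It forms the trace statistic -T Σ log(1 - λ_i) over i > r and the maximum eigenvalue statistic -T log(1 - λ_{r+1}).

It assumes a constant in the short-run part and the levels term at lag K, which is meant to correspond to `ecdet = "none", spec = "longrun"`. Its numbers may not match ca.jo exactly, and it does not produce critical values.

```r
# Hypothetical helper (not part of urca): Johansen eigenvalues, trace and
# maximum-eigenvalue statistics. Assumed model: VECM with K lags in levels,
# an unrestricted constant, and the levels term y_{t-K}.
johansen.stats = function(y, K = 2) {
  y = as.matrix(y)
  N = nrow(y)
  p = ncol(y)
  dy = diff(y)                           # dy[i, ] = y[i + 1, ] - y[i, ]
  idx = (K + 1):N                        # usable time points t
  Tn = length(idx)
  Z0 = dy[idx - 1, , drop = FALSE]       # Delta y_t
  Z1 = y[idx - K, , drop = FALSE]        # y_{t-K}
  Z2 = matrix(1, Tn, 1)                  # constant
  if (K > 1) {
    for (j in 1:(K - 1)) {
      Z2 = cbind(Z2, dy[idx - 1 - j, , drop = FALSE])   # Delta y_{t-j}
    }
  }
  # Concentrate out the short-run terms
  q = qr(Z2)
  R0 = qr.resid(q, Z0)
  R1 = qr.resid(q, Z1)
  S00 = crossprod(R0) / Tn
  S11 = crossprod(R1) / Tn
  S01 = crossprod(R0, R1) / Tn
  # Solve |lambda * S11 - S10 S00^-1 S01| = 0 through a symmetric transform
  C = solve(chol(S11))                   # S11 = R'R, C = R^-1
  M = t(C) %*% t(S01) %*% solve(S00, S01) %*% C
  M = (M + t(M)) / 2                     # remove rounding asymmetry
  lambda = eigen(M, symmetric = TRUE)$values
  stat = -Tn * log(1 - lambda)
  data.frame(
    r = 0:(p - 1),                       # H0: rank <= r (trace) / rank = r (max-eigen)
    lambda = lambda,
    trace = rev(cumsum(rev(stat))),      # sum of stat[(r + 1):p]
    maxeig = stat                        # stat[r + 1]
  )
}

# Usage on simulated data: one common trend, so two cointegrating relations
set.seed(1)
n = 250
x3 = cumsum(rnorm(n, 0, 0.5))
x1 = 0.8 * x3 + rnorm(n, 0, 0.5)
x2 = -0.3 * x3 + rnorm(n, 0, 0.5)
jj = johansen.stats(cbind(x1, x2, x3), K = 2)
print(jj)
```

ca.jo() lists the hypotheses in the reverse order, starting from r <= p - 1.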

```r
library(urca)  # ca.jo(), cajorls()

set.seed(12345)
e1 = rnorm(250, 0, 0.5)
e2 = rnorm(250, 0, 0.5)
e3 = rnorm(250, 0, 0.5)

# Simulate two stationary AR(1) processes (no moving-average part)
u1.ar1 = arima.sim(model = list(ar = 0.75), innov = e1, n = 250)
u2.ar1 = arima.sim(model = list(ar = 0.3), innov = e2, n = 250)

# Common stochastic trend: a random walk
y3 = cumsum(e3)
y1 = 0.8 * y3 + u1.ar1
y2 = -0.3 * y3 + u2.ar1

# Combine y1, y2, y3 into the data set for the JJ test
y.mat = data.frame(y1, y2, y3)

# JJ cointegration test with ca.jo() from urca
# (defaults: type = "eigen", ecdet = "none", K = 2, spec = "longrun")
vecm = ca.jo(y.mat)
jo.results = summary(vecm)

# cajorls() returns the OLS estimates of the VECM with the cointegration rank restricted to r
vecm.r2 = cajorls(vecm, r = 2); vecm.r2
## Call:
## lm(formula = substitute(form1), data = data.mat)
## Coefficients:
##               y1.d      y2.d      y3.d
## ect1      -0.33129   0.06461   0.01268
## ect2       0.09447  -0.70938  -0.00916
## constant   0.16837  -0.02702   0.02526
## y1.dl1    -0.22768   0.02701   0.06816
## y2.dl1     0.14445  -0.71561   0.04049
## y3.dl1     0.12347  -0.29083  -0.07525
## $beta
##               ect1    ect2
## y1.l2    1.000e+00  0.0000
## y2.l2   -3.402e-18  1.0000
## y3.l2   -7.329e-01  0.2952
```
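The `$beta` block gives the two cointegrating vectors normalized on y1 and y2; the -3.402e-18 entry is numerically zero. By construction, y1 - 0.8 y3 = u1.ar1 and y2 + 0.3 y3 = u2.ar1 are stationary AR(1) processes. The estimates can therefore be compared directly with the true vectors, writing y_t = (y_{1t}, y_{2t}, y_{3t})':

```latex
\hat{\beta} =
\begin{pmatrix} 1 & 0 \\ 0 & 1 \\ -0.7329 & 0.2952 \end{pmatrix},
\qquad
\beta =
\begin{pmatrix} 1 & 0 \\ 0 & 1 \\ -0.8 & 0.3 \end{pmatrix},
\qquad
\hat{\beta}' y_t =
\begin{pmatrix} y_{1t} - 0.7329\, y_{3t} \\ y_{2t} + 0.2952\, y_{3t} \end{pmatrix}
```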

All code is taken from *Analysis of Integrated and Cointegrated Time Series with R*.

Source: http://blog.163.com/yugao1986@126/blog/static/69228508201341235046978